Insider Brief

- A new study by physicists Jens Eisert and John Preskill finds that while quantum computing is advancing rapidly, reaching fault-tolerant, application-scale systems will take far longer and require closing major engineering and conceptual gaps.

- The researchers highlight progress in hardware and algorithms but warn that current devices—such as Google’s 103-qubit and IBM’s 5,000-gate experiments—remain limited by noise and scalability barriers despite demonstrating controlled multi-qubit operations.

- They conclude that no single hardware platform yet shows a clear path to dominance and that the first real quantum advantages will likely emerge in scientific simulation before expanding to commercial use.

With a constant stream of announcements about research advances from the world’s universities and quantum companies, there is little doubt that quantum computing is rapidly surmounting obstacles once thought intractable.

Quantum computing has entered a period of visible progress, but a new study posted to the preprint server arXiv warns that the road from noisy laboratory prototypes to machines capable of useful work will be longer, harder and less predictable than many in the field anticipate.

In their paper, physicists Jens Eisert of Freie Universität Berlin and John Preskill of the California Institute of Technology describe the technical — as well as the conceptual — gaps separating current devices from the fault-tolerant systems required for dependable applications. The analysis divides the next decade of development into a sequence of hurdles: moving from error mitigation to active error correction, from limited correction to scalable fault tolerance, from early heuristic algorithms to mature and verifiable ones and, finally, from small-scale simulations to credible quantum advantage in practical computation.

The researchers argue that while quantum computers can already perform some operations beyond the reach of the world’s most powerful supercomputers, none has yet produced an outcome that carries practical or economic value. They frame the task ahead as a transition from today’s “noisy intermediate-scale quantum,” or NISQ, machines to what they call “fault-tolerant application-scale quantum,” or FASQ, systems: machines capable of running long, error-free computations.

Progress With Limits

This criticism is not meant to dismiss current progress.

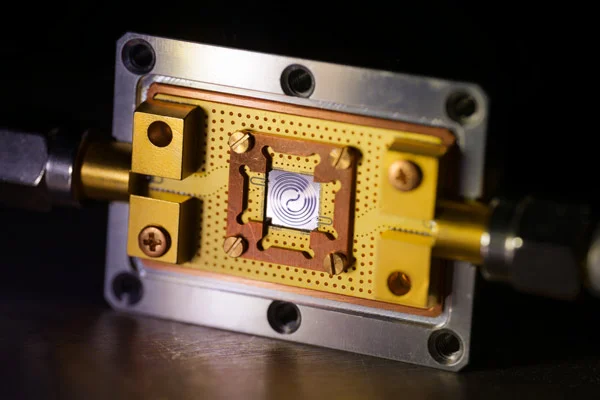

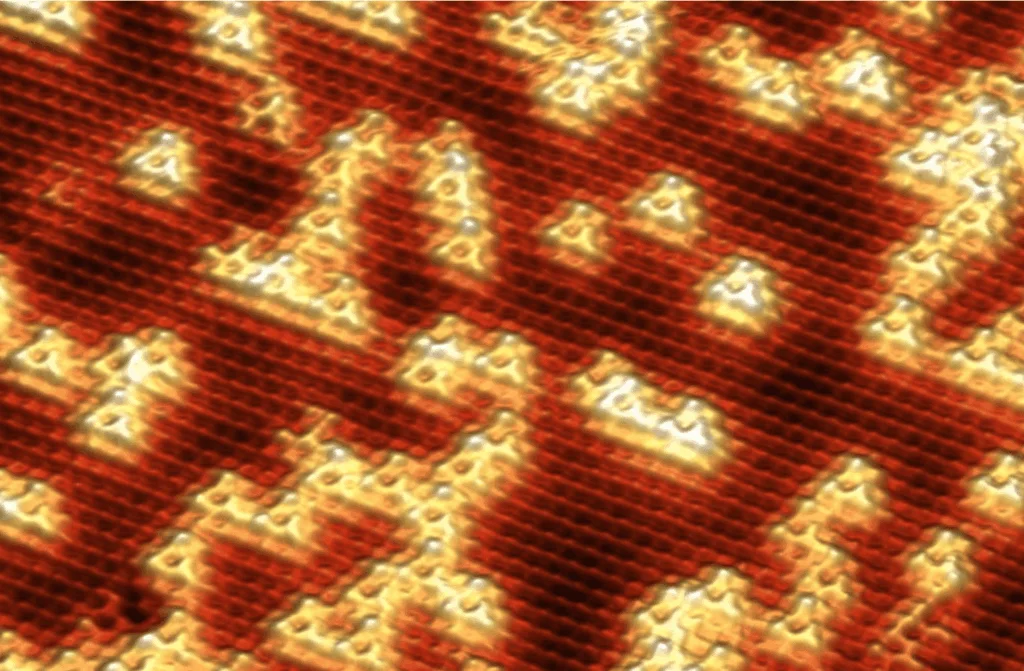

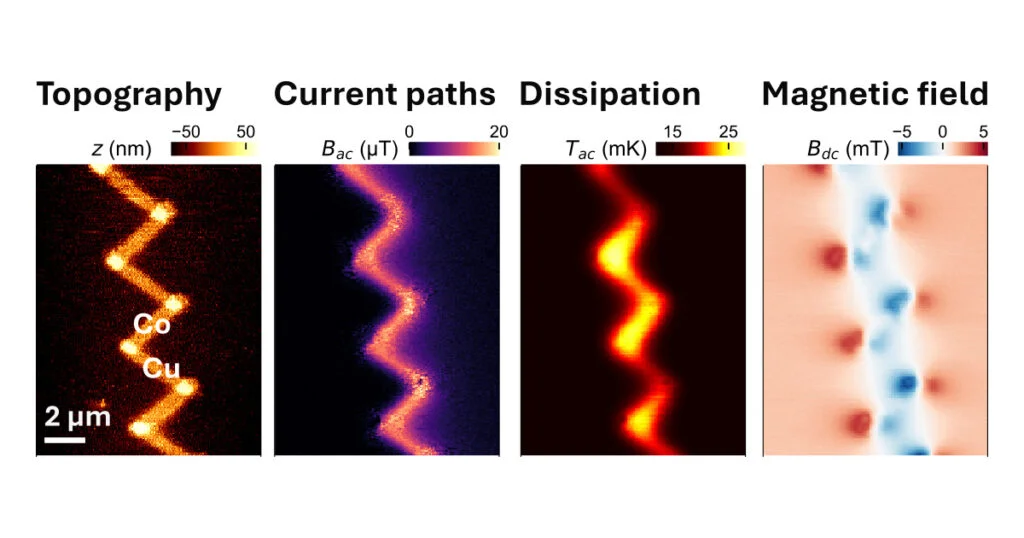

Eisert and Preskill describe the current generation of hardware as an impressive engineering achievement. Experiments using superconducting qubits, trapped ions and neutral atoms in optical tweezers have all surpassed the 100-qubit mark. Two-qubit gate error rates now approach 0.1 percent on the best superconducting devices and are slightly higher for ions and atoms. Measurement and single-qubit errors have dropped below one percent. These results suggest that the fundamental physics of qubits is increasingly well understood and that laboratories can execute quantum operations at meaningful scale.

But the gap between what can be done and what must be done remains daunting.

Quantum information is delicate, and the slightest noise can derail calculations. To reach a level of reliability comparable to classical computers, quantum systems must employ quantum error correction, which encodes information across many physical qubits to protect a smaller number of logical qubits.

The study calculates that even a modest processor with 1,000 logical qubits, suitable for complex simulations, could require around one million physical qubits, assuming current error rates. Today’s largest chips contain only hundreds.

This scaling problem is not just a matter of adding more qubits because error correction introduces time overhead as well as hardware overhead. Each logical operation must be verified repeatedly through rounds of measurement, with results fed into a classical processor that calculates corrections on the fly.

The researchers write that fault-tolerant quantum computers will, by necessity, be hybrid, with quantum machines depending heavily on fast classical computation to decode error syndromes and maintain stability.
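To make the measure-then-decode loop concrete, here is a minimal sketch using a classical bit-flip repetition code. It is a toy stand-in rather than the surface-code decoding the study discusses: the function names are hypothetical, measurements are assumed to be perfect, and real decoders must also cope with faulty syndrome readout under tight time budgets.

```python
from typing import List


def measure_syndrome(bits: List[int]) -> List[int]:
    """Parity of each neighbouring pair; a 1 marks a disagreement."""
    return [bits[i] ^ bits[i + 1] for i in range(len(bits) - 1)]


def decode(syndrome: List[int]) -> List[int]:
    """Return the lowest-weight bit-flip pattern consistent with the syndrome."""
    # Build the error pattern that assumes the first bit was not flipped.
    candidate = [0]
    for s in syndrome:
        candidate.append(candidate[-1] ^ s)
    # Its complement produces the same syndrome; keep whichever is lighter.
    if 2 * sum(candidate) > len(candidate):
        candidate = [b ^ 1 for b in candidate]
    return candidate


def correct(bits: List[int]) -> List[int]:
    """One round of error correction: measure, decode classically, apply the fix."""
    correction = decode(measure_syndrome(bits))
    return [b ^ c for b, c in zip(bits, correction)]


if __name__ == "__main__":
    # Logical 0 stored as five copies, with two bit flips applied.
    print(correct([1, 0, 0, 1, 0]))
    # Three flips exceed what a five-bit code can fix: a logical error results.
    print(correct([1, 1, 1, 0, 0]))
```

The decoder never reads the stored value directly, only the parities, which mirrors how syndrome measurements reveal errors without revealing the encoded information.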

Near-Term Tools

Before error correction becomes practical, researchers are relying on a stopgap technique known as quantum error mitigation.

Rather than eliminating noise, mitigation uses statistical post-processing to infer what a perfect result would have been. Techniques such as zero-noise extrapolation and probabilistic error cancellation can extend the useful circuit depth of present-day machines, allowing thousands to tens of thousands of operations where only hundreds were previously reliable.
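As a rough illustration of zero-noise extrapolation, the sketch below evaluates a simulated observable at several amplified noise levels and extrapolates back to zero noise with Richardson (polynomial) extrapolation. The exponential noise model, the decay constant and the function names are assumptions made for illustration; on hardware the values would come from circuits run with deliberately stretched noise, and each would carry statistical error.

```python
import math
from typing import Sequence


def noisy_expectation(scale: float, ideal: float = 1.0, decay: float = 0.1) -> float:
    """Hypothetical noise model: the signal decays exponentially with noise scale."""
    return ideal * math.exp(-decay * scale)


def richardson_extrapolate(scales: Sequence[float], values: Sequence[float]) -> float:
    """Evaluate at scale 0 the polynomial passing through all (scale, value) points."""
    estimate = 0.0
    for i, (x_i, y_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, x_j in enumerate(scales):
            if j != i:
                # Lagrange basis polynomial for point i, evaluated at zero.
                weight *= x_j / (x_j - x_i)
        estimate += weight * y_i
    return estimate


if __name__ == "__main__":
    scales = [1.0, 2.0, 3.0]  # 1.0 is the device's native noise level
    values = [noisy_expectation(s) for s in scales]
    print("unmitigated:", values[0])
    print("extrapolated:", richardson_extrapolate(scales, values))
```

In this noiseless-sampling toy the extrapolated value lands much closer to the ideal result than the unmitigated one. With finite samples, the large extrapolation weights also amplify statistical fluctuations.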

The cost, however, grows exponentially with circuit size as each extra layer of gates multiplies the number of experimental samples needed to extract a clean signal. For large circuits, the sampling overhead becomes prohibitive. The study indicates that error mitigation is therefore a bridge, not a destination — a necessary method for extracting science from current hardware but one that cannot scale indefinitely.
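The exponential growth can be sketched with a simple cost model. It assumes, purely for illustration, that each gate multiplies the required number of samples by a fixed factor `gamma ** 2`, as in common analyses of probabilistic error cancellation. The value of `gamma` below is hypothetical and is not taken from the study.

```python
def sampling_overhead(num_gates: int, gamma: float = 1.0005) -> float:
    """Multiplier on the number of samples needed, under the assumed per-gate cost."""
    return gamma ** (2 * num_gates)


if __name__ == "__main__":
    for gates in (1_000, 10_000, 100_000):
        print(f"{gates:>7} gates -> overhead x{sampling_overhead(gates):.3g}")
```

Because the gate count sits in the exponent, doubling the circuit size squares the overhead, which is why a modest improvement in gate quality buys only a limited extension in reachable circuit depth.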

Despite these limits, error mitigation has enabled valuable experiments. Google’s Quantum AI group, for example, used error mitigation to run random circuit sampling tasks on a 103-qubit processor with 40 layers of two-qubit gates. IBM has demonstrated similarly deep circuits with thousands of gates. These tests do not yet solve meaningful problems, but they show that modern superconducting devices can manipulate thousands of interacting quantum elements with predictable results.

The authors describe this period as the dawn of the “megaquop” era, in which quantum processors can reliably perform around a million quantum operations. Such systems, they suggest, could reach the threshold where classical computers can no longer match them on certain scientific simulations and optimization tasks.

Toward Fault Tolerance

The theory behind fault-tolerant quantum computation has existed for nearly three decades. It establishes that if physical errors can be pushed below a specific threshold — roughly between one in a hundred and one in a thousand operations — then logical errors can be reduced exponentially simply by using larger codes. In principle, arbitrarily long computations become possible.

In practice, reaching those thresholds and keeping devices stable remains difficult. The study uses the surface code, a leading approach to quantum error correction, as an example. For physical error rates around one in a thousand, each logical qubit may require several hundred physical qubits to achieve a target logical error rate of one in one hundred billion operations. Additional auxiliary qubits are needed for measurement and gate operations, bringing total requirements to the million-qubit scale.
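The arithmetic behind these figures can be approximated with a widely used rule of thumb for the surface code. The sketch assumes a logical error rate of `prefactor * (p / p_th) ** ((d + 1) / 2)` for code distance `d`, a threshold `p_th` of one percent, a prefactor of 0.1, and `2 * d**2 - 1` physical qubits per logical qubit. These constants are textbook-style assumptions rather than values quoted from the study.

```python
def logical_error_rate(p: float, d: int, p_th: float = 1e-2, prefactor: float = 0.1) -> float:
    """Heuristic logical error rate per operation for a distance-d surface code."""
    return prefactor * (p / p_th) ** ((d + 1) / 2)


def required_distance(p: float, target: float) -> int:
    """Smallest odd code distance whose heuristic logical error rate meets the target."""
    d = 3
    # The small tolerance keeps floating-point rounding from overshooting the boundary.
    while logical_error_rate(p, d) > target * (1 + 1e-9):
        d += 2
    return d


def physical_qubits_per_logical(d: int) -> int:
    """Data plus measurement qubits in one surface-code patch (assumed layout)."""
    return 2 * d * d - 1


if __name__ == "__main__":
    d = required_distance(p=1e-3, target=1e-11)
    per_logical = physical_qubits_per_logical(d)
    print("distance:", d)
    print("physical qubits per logical qubit:", per_logical)
    print("for 1,000 logical qubits:", 1000 * per_logical)
```

Under these assumed constants the sketch arrives at distance 19, or 721 physical qubits per logical qubit, which is consistent with the "several hundred" quoted above. Multiplying by 1,000 logical qubits gives roughly 720,000 before any auxiliary qubits are counted.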

Eisert and Preskill point to new directions that might ease the overhead. More sophisticated qubit designs, such as fluxonium qubits, cat qubits stabilized by two-photon dissipation, or topologically protected qubits, could reduce the need for massive redundancy by improving native error rates. Early fault-tolerant demonstrations on trapped-ion and neutral-atom systems already show multiple logical qubits operating with error rates lower than their underlying physical qubits, though these tests rely on post-selection and have yet to perform many rounds of correction.

Importantly, the researchers report that no single hardware platform has a clear advantage. Superconducting circuits offer speed and fabrication maturity, while atomic systems offer long coherence times and high connectivity.

“We have focused here on superconducting circuits, ion traps, and Rydberg tweezer arrays because these are the quantum computing modalities that are now sufficiently advanced for pioneering explorations of quantum error correction,” the team writes. “But with the fault-tolerant era just beginning to dawn, it remains far from clear which modality has the best prospects for reaching broadly useful quantum computers.”

Future large-scale machines may, indeed, combine these features or use entirely new architectures.

Algorithms and Advantage

Hardware alone will not deliver quantum advantage, according to the researchers. The study devotes equal attention to the software side, specifically the need to move beyond trial-and-error algorithms toward rigorously defined methods with measurable speedups.

Variational quantum algorithms, which use a feedback loop between quantum circuits and classical optimizers, have dominated recent research because they run on noisy devices. They have been applied to chemistry, combinatorial optimization and machine learning. Yet, after years of testing, no clear case has emerged where a variational algorithm outperforms the best classical solvers. Problems such as barren plateaus, where gradients vanish and learning stalls, make training these hybrid models unpredictable.
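The feedback loop can be illustrated with a deliberately tiny example: a single simulated qubit, one rotation angle, and a classical gradient-descent optimizer that uses the parameter-shift rule. Everything here, including the function names, learning rate and starting angle, is a hypothetical sketch. A real experiment would estimate the energy from repeated noisy measurements instead of computing it exactly.

```python
import math
from typing import Tuple


def energy(theta: float) -> float:
    """Expectation value of Z for the state Ry(theta)|0>, computed exactly."""
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)
    return amp0 * amp0 - amp1 * amp1


def parameter_shift_gradient(theta: float) -> float:
    """Gradient from two extra energy evaluations at shifted angles."""
    return 0.5 * (energy(theta + math.pi / 2) - energy(theta - math.pi / 2))


def optimize(theta: float = 0.3, learning_rate: float = 0.4, steps: int = 100) -> Tuple[float, float]:
    """Classical optimizer loop: query the 'quantum' energy, then update the angle."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, energy(theta)


if __name__ == "__main__":
    theta, final_energy = optimize()
    print("angle:", theta, "energy:", final_energy)
```

With one parameter the landscape is a simple cosine and the optimizer finds the minimum easily. The training difficulties described above arise in circuits with many parameters, where gradients can become too small to measure.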

Eisert and Preskill lean toward a more incremental approach built around “proof pockets” — small, well-characterized subproblems where quantum methods can be shown to confer advantage. Accumulating enough of these isolated cases could eventually establish a defensible claim for end-to-end utility.

Beyond the NISQ era, fully error-corrected systems could implement more formal algorithms such as quantum linear solvers, Grover’s search, or Shor’s factoring method. These algorithms provide mathematically proven speedups but require far deeper circuits and much lower error rates than today’s machines can achieve. The study notes that even with fault tolerance, realizing practical gains will depend on the time and energy costs of moving data in and out of quantum memory and interpreting quantum results in classical terms.
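As one example of a proven speedup, Grover’s search finds a marked item among N candidates in roughly (π/4)·√N steps, compared with about N/2 checks on average for an unstructured classical search. The sketch below simulates the algorithm classically on a small list of amplitudes. It is a noise-free toy with hypothetical function names and says nothing about the circuit depth or error rates a real device would need.

```python
import math
from typing import Tuple


def grover_search(num_items: int, marked: int) -> Tuple[int, float]:
    """Simulate Grover iterations; return (iterations used, success probability)."""
    # Start in the uniform superposition over all items.
    amplitudes = [1 / math.sqrt(num_items)] * num_items
    iterations = math.floor(math.pi / 4 * math.sqrt(num_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amplitudes[marked] = -amplitudes[marked]
        # Diffusion: reflect every amplitude about the mean.
        mean = sum(amplitudes) / num_items
        amplitudes = [2 * mean - a for a in amplitudes]
    return iterations, amplitudes[marked] ** 2


if __name__ == "__main__":
    iterations, probability = grover_search(num_items=64, marked=42)
    print("iterations:", iterations, "success probability:", probability)
```

For 64 items the simulation needs six iterations to make the marked item overwhelmingly likely to be measured. The saving grows only with the square root of the problem size, so large instances still demand deep circuits.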

Science Before Profit

Eisert and Preskill predict that the first truly useful applications of quantum computing will emerge not in finance or cryptography, but in physics, chemistry and materials science. Quantum simulators, machines designed to reproduce the behavior of quantum systems directly, are already revealing new states of matter and testing theoretical models of magnetism and superconductivity.

These early simulations are more valuable to scientists than to investors. Their results expand understanding of quantum dynamics, but the path to economic payoff remains uncertain. Analog simulators, such as Rydberg atom arrays, can explore systems far larger than digital qubit arrays but lack the flexibility and accuracy needed for general-purpose computing. Digital, error-corrected simulators will eventually supersede them, but only after the overhead of fault tolerance is reduced to manageable levels.

The paper indicates that the competition between quantum and classical simulation teams remains fierce. Results once touted as quantum milestones have been quickly matched by improved classical algorithms. The authors argue that this dynamic is healthy and will continue to drive both communities forward until the advantage becomes undeniable.

You Say You Want an Evolution

The study’s final message is one of cautious optimism: it treats progress not as an overnight quantum success, but as an evolution toward practicality.

Quantum progress will come in stages — the megaquop, gigaquop, and teraquop eras — each expanding the range of possible applications, they write. True fault-tolerant, application-scale quantum computing remains a distant goal, but continued improvements in hardware, error correction and algorithm design are bringing it closer.

Eisert and Preskill close with a reminder from the history of classical computing. When John von Neumann wrote to colleagues in 1945 about high-speed electronic computers, he predicted that their most important uses would be those no one could yet imagine.

Von Neumann wrote: “[T]he projected device […] is so radically new that many of its uses will become clear only after it has been put into operation […]. [T]hese uses which are not, or not easily, predictable now, are likely to be the most important ones. Indeed they are by definition those which we do not recognize at present because they are farthest removed from what is now feasible, and they will therefore constitute the most surprising and farthest-going extension of our present sphere of action …”

Quantum computing, they suggest, will follow the same trajectory. Its most transformative effects will likely arrive unannounced, after years of incremental work by scientists closing the many gaps that still stand between theory and advantage.

For a deeper, more technical dive, please review the paper on arXiv. Note that arXiv is a preprint server, which allows researchers to receive quick feedback on their work. However, neither a preprint nor this article is an official peer-reviewed publication. Peer review is an important step in the scientific process to verify results and findings.