Insider Brief

- Researchers propose a modified Quantum Volume test that eliminates the need for classical simulations, addressing scalability challenges in benchmarking quantum computers.

- The new parity-preserving and double parity-preserving benchmarks allow direct determination of high-probability outcomes, reducing computational costs while maintaining the advantages of the original test.

- Experimental validation on IBM’s Sherbrooke quantum processor suggests these methods provide an efficient and scalable alternative to classical simulation-heavy benchmarking.

Benchmarking quantum computers is becoming increasingly difficult as systems scale beyond 100 qubits. A new study on the preprint server arXiv proposes a solution: a modified Quantum Volume (QV) test that eliminates the need for classical simulations, a major limitation of the current method.

The study, conducted by researchers in Poland, introduces two new versions of the QV test: the parity-preserving and double parity-preserving benchmarks. Parity preservation means that the parity of a group of quantum bits (qubits), that is, whether the number of qubits reading 1 is even or odd, stays the same throughout a calculation. Because an ideal computation never changes it, an unexpected change in parity signals an error, which helps track errors.
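To make the definition concrete, here is a minimal Python sketch that computes the parity of a measured bitstring. Classical bit flips stand in for errors, and the helper names `parity` and `flip` are illustrative, not taken from the paper. Note that a single flip changes the parity while a pair of flips does not, a distinction that matters for the second benchmark.

```python
def parity(bits: str) -> int:
    """Return 0 if the bitstring has an even number of 1s, 1 if odd."""
    return bits.count("1") % 2


def flip(bits: str, *positions: int) -> str:
    """Flip the bits at the given positions (a classical stand-in for X errors)."""
    chars = list(bits)
    for i in positions:
        chars[i] = "1" if chars[i] == "0" else "0"
    return "".join(chars)


start = "0000"                 # even parity
one_flip = flip(start, 2)      # "0010": parity changes
two_flips = flip(start, 0, 3)  # "1001": parity is preserved

print(parity(start), parity(one_flip), parity(two_flips))  # 0 1 0
```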

Both parity-preserving approaches replace the generic random circuits of the standard test with circuits whose high-probability outcomes can be determined directly, removing the need for computationally expensive classical verification. The researchers argue that these modifications preserve the advantages of the original QV test while making it feasible for large-scale quantum devices.

Addressing the Scalability Problem

Quantum Volume, a widely accepted performance benchmark, measures the largest square circuit (one whose number of qubits equals its number of layers) that a quantum processor can execute with a high level of accuracy. The test evaluates a quantum device’s ability to implement complex circuits reliably, making it an essential metric for tracking progress in the field.
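The headline number is conventionally reported as Quantum Volume = 2^n, where n is the largest square size the device passes (in the standard test, "passing" means producing heavy outputs more than two-thirds of the time with high statistical confidence). A minimal sketch of that bookkeeping follows; the `passed` mapping is a hypothetical stand-in for real test results.

```python
def quantum_volume(passed: dict[int, bool]) -> int:
    """Return 2**n for the largest n whose n-qubit, depth-n test passed."""
    widths = [n for n, ok in passed.items() if ok]
    if not widths:
        raise ValueError("no square circuit size passed")
    return 2 ** max(widths)


# Hypothetical results: passes up to 4 qubits x 4 layers, fails at 5.
results = {2: True, 3: True, 4: True, 5: False}
print(quantum_volume(results))  # 16
```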

However, the QV test has a significant bottleneck: it requires classical simulation of each quantum circuit to determine its high-probability outcomes, known as the heavy output subspace (the outcomes whose ideal probability exceeds the median). This requirement becomes impractical for systems beyond around 100 qubits, a threshold that current quantum processors are approaching.
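The sketch below illustrates what the standard test has to compute classically. It is a simplified stand-in: rather than simulating an actual QV circuit, it draws a Haar-random state vector (whose output statistics QV circuits are designed to resemble) and applies the usual heavy-output definition. The array holds 2^n probabilities, which is why this step stops scaling as qubit counts grow.

```python
import numpy as np


def heavy_outputs(probs: np.ndarray) -> set[int]:
    """Indices of outcomes whose ideal probability exceeds the median."""
    median = np.median(probs)
    return {i for i, p in enumerate(probs) if p > median}


rng = np.random.default_rng(seed=7)
n_qubits = 5
dim = 2 ** n_qubits  # memory and time grow as 2**n

# Stand-in for an ideal circuit output: a Haar-random state vector.
amps = rng.normal(size=dim) + 1j * rng.normal(size=dim)
amps /= np.linalg.norm(amps)
probs = np.abs(amps) ** 2

heavy = heavy_outputs(probs)
ideal_hop = probs[list(heavy)].sum()  # ideal heavy-output probability
print(len(heavy), round(float(ideal_hop), 3))
```

At 100 qubits the same vector would need 2^100 entries, far beyond any classical memory.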

“To obtain heavy outputs, the QV test relies on classical simulation of generic quantum circuits, which becomes prohibitively expensive,” the researchers write in their paper.

A New Approach

To address this challenge, the researchers propose two alternative QV tests that sidestep classical simulation entirely. Their primary modification uses parity-preserving quantum gates, which never change whether the number of qubits in state 1 is even or odd. Because an ideal circuit built from such gates cannot change the parity of its input, the heavy output subspace is known in advance, eliminating the need for classical verification.
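With the heavy subspace fixed by parity, scoring a run reduces to counting. The sketch below assumes the circuit starts in the all-zeros state (even parity) and treats every even-parity outcome as "heavy"; this scoring rule is our reading of the idea, not a transcription of the paper's procedure, and the counts are hypothetical. An ideal device would score 1, while uniformly random outputs would score about 1/2.

```python
def parity_heavy_fraction(counts: dict[str, int], expected_parity: int = 0) -> float:
    """Fraction of shots whose parity matches the parity of the input state.

    No classical simulation is needed: the "heavy" set is simply every
    bitstring with the expected parity.
    """
    total = sum(counts.values())
    good = sum(n for bits, n in counts.items() if bits.count("1") % 2 == expected_parity)
    return good / total


# Hypothetical measurement counts from a 3-qubit run (not real data).
counts = {"000": 410, "011": 300, "101": 150, "110": 40, "001": 60, "111": 40}
print(parity_heavy_fraction(counts))  # 0.9
```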

The first approach, the parity-preserving benchmark, modifies the structure of the quantum circuits while keeping the number of two-qubit interactions the same. The researchers argue that this change has minimal impact on experimental implementation but significantly reduces computational costs.

“Since the interaction part is unaffected, the number of fundamental two-qubit gates, 3 in case of CNOTs, remains unchanged,” they write in the paper.
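One way to read this quote: any two-qubit gate can be decomposed into single-qubit gates around an "interaction part" of the form exp(i(a XX + b YY + c ZZ)), and it is this core that costs up to three CNOTs. The NumPy sketch below checks that the core already commutes with Z⊗Z, the operator whose eigenvalue (+1 or -1) is the two-qubit parity, whereas a generic single-qubit rotation does not. That would mean only the single-qubit parts need restricting. This is an illustration under that reading, not the paper's exact gate construction.

```python
import numpy as np

I2 = np.eye(2)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.diag([1, -1]).astype(complex)
ZZ_PARITY = np.kron(Z, Z)  # +1 on even-parity states, -1 on odd


def exp_pauli(theta: float, P: np.ndarray) -> np.ndarray:
    """exp(i*theta*P) for a Pauli product P satisfying P @ P = I."""
    return np.cos(theta) * np.eye(P.shape[0]) + 1j * np.sin(theta) * P


def interaction(a: float, b: float, c: float) -> np.ndarray:
    """Interaction part exp(i(a XX + b YY + c ZZ)); the terms commute, so it factorises."""
    return exp_pauli(a, np.kron(X, X)) @ exp_pauli(b, np.kron(Y, Y)) @ exp_pauli(c, np.kron(Z, Z))


def preserves_parity(U: np.ndarray) -> bool:
    """True if U commutes with the parity operator Z⊗Z."""
    return np.allclose(U @ ZZ_PARITY, ZZ_PARITY @ U)


rng = np.random.default_rng(0)
U_int = interaction(*rng.uniform(0, np.pi, size=3))

theta = 0.3
ry = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
U_local = np.kron(ry, I2)  # a Y rotation on the first qubit only

print(preserves_parity(U_int), preserves_parity(U_local))  # True False
```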

The second approach, the double parity-preserving benchmark, goes further by tracking parity separately within two subsets of qubits. This additional constraint catches errors that leave the overall parity intact, for example a pair of bit flips with one flip in each subset. The researchers explain that while such errors are rare, they are still useful to track.
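The example of a paired error can be checked directly. In the sketch below, the split of a 4-qubit register into halves `A` and `B` is a hypothetical choice for illustration; the paper's actual subset assignment may differ. A flip on qubit 0 (in `A`) plus a flip on qubit 2 (in `B`) keeps the global parity even, so a single-parity check misses it, but both subset parities change.

```python
def parity(bits: str) -> int:
    """Global parity: 0 for an even number of 1s, 1 for odd."""
    return bits.count("1") % 2


def double_parity(bits: str, subset_a: list[int], subset_b: list[int]) -> tuple[int, int]:
    """Parities of two disjoint qubit subsets."""
    return (sum(int(bits[i]) for i in subset_a) % 2,
            sum(int(bits[i]) for i in subset_b) % 2)


A, B = [0, 1], [2, 3]  # hypothetical split into two halves
ideal = "0000"
faulty = "1010"        # one bit flip in A (qubit 0) and one in B (qubit 2)

print(parity(ideal) == parity(faulty))                            # True: missed
print(double_parity(ideal, A, B) == double_parity(faulty, A, B))  # False: caught
```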

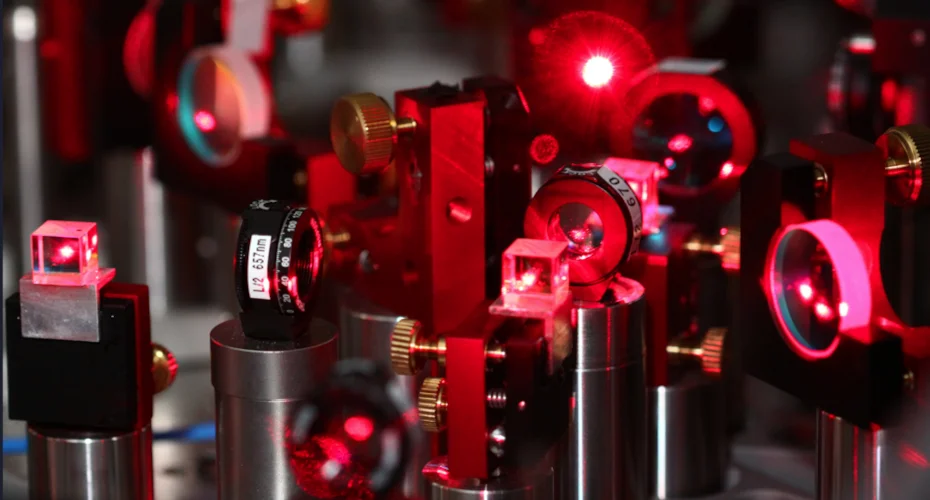

Testing on IBM’s Quantum Hardware

To validate their approach, the researchers conducted experiments on IBM’s Sherbrooke quantum processor, comparing their modified QV tests to the standard version. The team writes that each of 60 randomly generated circuits was executed 900 times.
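For scale, 60 circuits at 900 shots each is 54,000 shots in total. A natural way to turn those shots into one score is to compute a heavy-output fraction per circuit and pool the fractions with a confidence bound. The sketch below uses the study's dimensions but synthetic counts, and its aggregation rule (mean with a two-sigma lower bound across circuits) is an assumption in the spirit of the original QV pass rule, not necessarily the statistics the authors used.

```python
import math
import random
import statistics

N_CIRCUITS = 60  # number of random circuits, as reported in the study
SHOTS = 900      # executions per circuit, as reported in the study


def summarize(heavy_counts: list[int], shots: int) -> tuple[float, float]:
    """Mean heavy-output fraction across circuits and a two-sigma lower bound.

    Treats each circuit's fraction as one sample (an assumption; the paper's
    exact statistical rule may differ).
    """
    fractions = [h / shots for h in heavy_counts]
    mean = statistics.mean(fractions)
    stderr = statistics.stdev(fractions) / math.sqrt(len(fractions))
    return mean, mean - 2 * stderr


# Synthetic counts, NOT the study's data: each shot is assumed to land in
# the heavy subspace with probability 0.8.
rng = random.Random(1)
heavy_counts = [sum(rng.random() < 0.8 for _ in range(SHOTS)) for _ in range(N_CIRCUITS)]

mean, lower = summarize(heavy_counts, SHOTS)
print(N_CIRCUITS * SHOTS)  # 54000 shots in total
print(round(mean, 3), round(lower, 3))
```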

They found that their parity-preserving benchmarks produced results consistent with the original QV method while significantly reducing computational overhead.

The Quantum Volume test on the IBM Sherbrooke quantum computer required generating random quantum circuits and simulating them on a classical device, according to the study, whereas the new methods avoided this step altogether.

They also simulated their methods using the Qiskit library to explore how error rates affect different benchmarks. Their results suggest that, for near-term quantum processors, parity-preserving benchmarks can provide an efficient and scalable alternative to the classical simulation-heavy QV test.
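The authors' simulations used Qiskit; the sketch below is a much simpler toy model in plain Python, assuming errors act as independent bit flips with probability p on each of n qubits just before measurement. Parity survives exactly when an even number of flips occurs, and since each qubit contributes an independent factor E[(-1)^flip] = 1 - 2p, that probability is (1 + (1 - 2p)^n) / 2. The score therefore decays from 1 toward the random-guess value of 1/2 as the error rate grows. A Monte Carlo run cross-checks the closed form.

```python
import random


def parity_survival(p: float, n: int) -> float:
    """Probability that n independent bit flips (each with prob p) leave parity unchanged."""
    return (1 + (1 - 2 * p) ** n) / 2


def monte_carlo(p: float, n: int, shots: int, seed: int = 0) -> float:
    """Empirical fraction of shots with an even number of flips."""
    rng = random.Random(seed)
    even = 0
    for _ in range(shots):
        flips = sum(rng.random() < p for _ in range(n))
        even += flips % 2 == 0
    return even / shots


for p in (0.001, 0.01, 0.05):
    print(p, round(parity_survival(p, 20), 4), round(monte_carlo(p, 20, 20000), 4))
```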

Implications and Limitations

By eliminating the need for classical verification, the modified QV tests allow benchmarking to scale with quantum hardware. This is critical as quantum computers move beyond the reach of classical simulation, making it difficult to validate their outputs with conventional methods.

The researchers acknowledge that their approach makes certain trade-offs. While their parity-preserving circuits preserve key features of the QV test, they impose additional structure on the quantum circuits being tested. This could affect how the results generalize across different quantum hardware platforms. They also note that while their method significantly reduces computational complexity, it does not eliminate all sources of error in benchmarking.

Future Directions

Despite these limitations, the study provides a promising path forward by introducing two modifications to the Quantum Volume benchmark that address its scalability issue while maintaining its fundamental benefits.

The researchers suggest further validation of their methods on larger quantum processors, as well as exploring hybrid benchmarking techniques that incorporate elements of both classical and quantum verification. They also highlight potential applications of their approach beyond benchmarking, such as using parity-preserving circuits to estimate noise levels in quantum devices.
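As a rough illustration of the noise-estimation idea, the toy bit-flip model can be inverted: given an observed fraction h of parity-preserving shots on n qubits, p = (1 - (2h - 1)^(1/n)) / 2. This is a hypothetical sketch under the same independent-flip assumption, not a method described in the paper; real device noise is correlated and far richer than this model.

```python
def parity_survival(p: float, n: int) -> float:
    """Toy-model probability that parity survives n independent bit-flip channels."""
    return (1 + (1 - 2 * p) ** n) / 2


def estimate_flip_rate(h: float, n: int) -> float:
    """Invert parity_survival: recover the per-qubit flip rate p from h."""
    if not 0.5 < h <= 1.0:
        raise ValueError("h must lie in (0.5, 1] for the inversion to be defined")
    return (1 - (2 * h - 1) ** (1 / n)) / 2


observed = 0.9  # hypothetical measured fraction of parity-preserving shots
print(round(estimate_flip_rate(observed, n=20), 5))
```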

The research team included: Rafał Bistroń, Marcin Rudziński, Ryszard Kukulski and Karol Życzkowski, all of Jagiellonian University.

Online preprint servers help researchers gain swift feedback on their studies, but papers posted there are not peer reviewed. The paper is quite technical and offers a deeper dive than this summary article; it is available on arXiv.