Guest Post by Yuval Boger, Chief Marketing Officer, QuEra Computing

In the rapidly evolving world of quantum computing, quantum error correction stands as a critical linchpin to the successful operation of these advanced machines.

Quantum error correction is important because it addresses the inherent instability of qubits (quantum bits). Qubits are incredibly sensitive to their environment. This sensitivity makes quantum systems an excellent candidate for building highly precise sensors but poses a significant challenge for building quantum computers; even the slightest environmental disturbance can cause a qubit to lose its quantum state, leading to computational errors.

Where do errors come from?

Some common sources of errors in quantum computing are:

- Phase flip error (dephasing): A qubit in a superposition of |0⟩ and |1⟩ states can be disturbed by its environment, causing it to lose the phase information of its quantum state. This is known as a phase flip or dephasing error. The ratio of the dephasing time (how long the qubit can maintain its phase information) to the time required to perform a single quantum operation is a crucial parameter in quantum computing. This ratio, often called “Quantum Coherence Ratio” or “Qubit Quality Factor”, essentially determines how many operations can be performed before the accumulation of errors renders the results unreliable.

- Bit flip errors (depolarization): Various environmental factors, such as thermal vibrations, electromagnetic waves, and even cosmic rays, can cause qubits to flip from their |0⟩ state to |1⟩ state, or vice versa. This is known as a bit flip error, and it’s a type of depolarizing error. These errors can lead to computational inaccuracies.

- Gate Operation Errors: Quantum gates are used to manipulate qubits during a quantum computation. However, these operations are not always perfect and can introduce errors. This is measured both for gates operating on a single qubit, as well as two-qubit gates. The current state of the art in two-qubit gate fidelity is around 99.9%, which means that approximately one in every 1000 two-qubit operations will result in an error.

Complex calculations typically require more time, qubits and gates, and thus decoherence, noise and gate errors limit the complexity of quantum algorithms. While today’s qubits are approaching an error rate of 10⁻³, large-scale quantum algorithms require much lower error rates, on the order of 10⁻¹⁰ to 10⁻¹⁵. Since the gap can be as large as 12 orders of magnitude, we need a paradigm shift; continued improvements in qubit error rates won’t be enough to unleash the potential of quantum. This is where error correction comes in.
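To see why error rates near 10⁻³ cap the length of a computation, the arithmetic can be sketched in a few lines of Python. This is a simplified model rather than a description of any particular hardware: it assumes every gate fails independently with the same probability, and the function names and the example dephasing and gate times are illustrative only.

```python
import math


def coherence_ratio(dephasing_time_s: float, gate_time_s: float) -> float:
    """Rough count of operations that fit inside the dephasing time."""
    return dephasing_time_s / gate_time_s


def success_probability(error_rate: float, num_gates: int) -> float:
    """Chance that no gate fails, assuming independent, identical errors."""
    return (1.0 - error_rate) ** num_gates


def max_gates(error_rate: float, target_success: float = 0.5) -> int:
    """Largest gate count that keeps the success chance at or above the target."""
    # log1p(-p) computes log(1 - p) accurately even for very small p.
    return int(math.log(target_success) / math.log1p(-error_rate))


if __name__ == "__main__":
    # Hypothetical numbers: 100 microsecond dephasing time, 100 nanosecond gates.
    print("operations per dephasing time:", coherence_ratio(100e-6, 100e-9))
    for p in (1e-3, 1e-10, 1e-15):
        print(f"error rate {p:g}: about {max_gates(p):,} gates at 50% success")
```

Under these assumptions, a 10⁻³ error rate supports only several hundred gates before the chance of an error-free run drops below one half, which is far short of what large-scale algorithms need.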

Error mitigation and error correction

To reduce the impact of errors, even before implementing error correction, several vendors are working on error mitigation techniques. Error mitigation techniques often involve identifying and minimizing the effect of errors on the results of quantum computations by using statistical methods. For instance, techniques like error averaging perform quantum computations multiple times and then average the results. Post-processing aims to improve the results of a quantum computation after it has been performed, reducing the impact of errors through statistical bootstrapping and resampling. Noise extrapolation involves running the same quantum computation multiple times with varying levels of artificially added noise, and then comparing the results to extrapolate a ‘noise-free’ result.
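The noise-extrapolation idea can be illustrated with a toy model. In the sketch below, `noisy_expectation` is a made-up stand-in for running a circuit at an amplified noise level (a real experiment would execute the circuit on hardware), and the exponential decay and its rate are assumptions chosen only for illustration. The extrapolation step fits a polynomial through the measured points and evaluates it at zero noise.

```python
import math


def noisy_expectation(noise_scale: float, ideal: float = 1.0, decay: float = 0.1) -> float:
    """Toy stand-in for a measured expectation value at a given noise scale.

    Assumption: the signal decays exponentially with the noise scale.
    """
    return ideal * math.exp(-decay * noise_scale)


def extrapolate_to_zero(scales, values):
    """Evaluate the Lagrange interpolating polynomial through the points at scale 0."""
    estimate = 0.0
    for i, (x_i, y_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, x_j in enumerate(scales):
            if j != i:
                weight *= (0.0 - x_j) / (x_i - x_j)
        estimate += weight * y_i
    return estimate


scales = [1.0, 2.0, 3.0]  # 1.0 represents the unamplified noise level
values = [noisy_expectation(s) for s in scales]
mitigated = extrapolate_to_zero(scales, values)

if __name__ == "__main__":
    print("raw value at scale 1:", values[0])
    print("extrapolated to zero noise:", mitigated)
```

In this toy model the extrapolated estimate lands much closer to the ideal value of 1.0 than the raw result at scale 1.0. With real data, statistical fluctuations on each point are also amplified by the extrapolation, so the technique trades bias for variance.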

Unlike error mitigation, error correction aims to detect and correct errors directly. Quantum error correction (QEC) codes are designed to protect quantum information. This is where the key concept of logical qubits comes in. A logical qubit is a quantum bit of information that is protected from errors by encoding it across multiple physical qubits. The term “logical” is used to distinguish it from the “physical” qubits that make up the computer’s hardware. The logical qubit is the unit of quantum information that we are ultimately interested in preserving and manipulating. In essence, we are trying to go from many physical qubits with 10⁻³ or 10⁻⁴ error rates to fewer logical qubits with 10⁻¹⁰ or 10⁻¹⁵ error rates.

The concept of a logical qubit has a parallel in classical error correction methods. In classical computing, a logical bit is a bit of information that is protected from errors by encoding it across multiple physical bits. For example, in a simple repetition code, a logical bit of ‘0’ might be encoded as ‘000’, and a logical bit of ‘1’ as ‘111’. If an error causes a flip of one physical bit, say from ‘000’ to ‘100’, we can still correctly infer the logical bit by looking at the majority of the physical bits.
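The classical repetition code described above fits in a few lines. This is a minimal sketch with illustrative function names; the noise model (each physical bit flips independently with a fixed probability) is an assumption made for the example.

```python
import random


def encode(bit: int, copies: int = 3) -> list:
    """Encode one logical bit as several identical physical bits."""
    return [bit] * copies


def apply_noise(bits, flip_probability: float, rng: random.Random) -> list:
    """Flip each physical bit independently with the given probability."""
    return [b ^ 1 if rng.random() < flip_probability else b for b in bits]


def decode(bits) -> int:
    """Recover the logical bit by majority vote."""
    return 1 if sum(bits) > len(bits) / 2 else 0


if __name__ == "__main__":
    rng = random.Random(2023)
    received = apply_noise(encode(0), flip_probability=0.1, rng=rng)
    print(received, "->", decode(received))
```

A single flipped bit, as in ‘100’, still decodes to the logical ‘0’; the scheme fails only when a majority of the physical bits flip.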

However, there’s a crucial difference between classical and quantum that makes QEC much more challenging: due to the no-cloning theorem in quantum mechanics, an unknown qubit state cannot simply be copied, whereas classical bits can be replicated freely. Thus, QEC must instead use more complex encodings that allow errors to be detected and corrected without copying, or directly reading out, the protected state.
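One way to picture such an encoding is the textbook three-qubit bit-flip code, which spreads a state α|0⟩ + β|1⟩ into the entangled state α|000⟩ + β|111⟩ rather than making three copies. The sketch below is a small classical simulation of it, not Shor's nine-qubit code, and it protects against single bit flips only. The helper names are illustrative, and reading the parities directly from the state vector stands in for the ancilla-based measurements used on real hardware.

```python
def encode(alpha: complex, beta: complex) -> list:
    """Return the 8 amplitudes of alpha|000> + beta|111> (qubit 0 is the leftmost bit)."""
    state = [0j] * 8
    state[0b000] = complex(alpha)
    state[0b111] = complex(beta)
    return state


def apply_x(state: list, qubit: int) -> list:
    """Apply a bit flip (Pauli X) to one of the three qubits."""
    mask = 1 << (2 - qubit)
    return [state[i ^ mask] for i in range(8)]


def parity_syndrome(state: list) -> tuple:
    """Return the parities of qubits (0, 1) and (1, 2).

    For a code state hit by at most one bit flip, every basis state with a
    nonzero amplitude shares the same parities, so the result is independent
    of alpha and beta: the check locates the error without learning the data.
    """
    for index, amplitude in enumerate(state):
        if abs(amplitude) > 1e-12:
            b0, b1, b2 = (index >> 2) & 1, (index >> 1) & 1, index & 1
            return (b0 ^ b1, b1 ^ b2)
    raise ValueError("state has no nonzero amplitude")


# Which qubit to flip back for each syndrome (None means no error detected).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(state: list) -> list:
    """Undo a single bit flip indicated by the syndrome."""
    qubit = CORRECTION[parity_syndrome(state)]
    return state if qubit is None else apply_x(state, qubit)


if __name__ == "__main__":
    logical = encode(0.6, 0.8j)
    damaged = apply_x(logical, 1)
    print("syndrome:", parity_syndrome(damaged))
    print("recovered:", correct(damaged) == logical)
```

The syndrome depends only on where the flip occurred, never on α or β, which is the property that lets quantum codes detect errors without disturbing the encoded information.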

There are several prototypical codes that have been widely used for quantum error correction.

- Shor’s code, proposed by Peter Shor (yes, the same Shor as in Shor’s algorithm) in 1995, encodes a single logical qubit into nine physical qubits and can correct for arbitrary errors in a single qubit.

- Steane’s code encodes one logical qubit into seven physical qubits and has a convenient set of logical gate operations as well as efficient methods for good state preparation.

- Surface codes work by arranging physical qubits on a two-dimensional grid to encode a single logical qubit. Surface codes can correct errors that affect up to floor(sqrt(n)/2) qubits, where n is the number of physical qubits that are part of that surface code. Surface codes are appealing, compared to other known codes, as they have one of the highest known circuit-level error correction thresholds.

- Topological and qLDPC codes are general categories of codes where qubits are arranged in more complex structures. The increased complexity makes these codes more challenging to use, but they are a promising area of research: recent publications from IBM (paper here) and a group of collaborators from University of Chicago, Harvard, Caltech, University of Arizona and QuEra (paper here) have shown that new qLDPC codes could require only one-tenth the number of physical qubits compared to surface codes.
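The numbers quoted for these codes can be tied together with a short calculation. A code of distance d can correct up to floor((d-1)/2) errors, which is a standard result, and both Shor's and Steane's codes have distance 3. The surface-code function below simply evaluates the floor(sqrt(n)/2) expression from the list above; the function names are illustrative.

```python
import math


def correctable_from_distance(distance: int) -> int:
    """Number of arbitrary single-qubit errors a distance-d code can correct."""
    return (distance - 1) // 2


def surface_code_correctable(num_physical_qubits: int) -> int:
    """Evaluate floor(sqrt(n) / 2) for a surface code built from n physical qubits."""
    return math.floor(math.sqrt(num_physical_qubits) / 2)


if __name__ == "__main__":
    print("Shor (9 qubits, distance 3):", correctable_from_distance(3))
    print("Steane (7 qubits, distance 3):", correctable_from_distance(3))
    for n in (9, 25, 49, 289):
        print(f"surface code with n = {n}:", surface_code_correctable(n))
```

The square-root scaling shows why surface codes need many physical qubits: doubling the number of correctable errors requires roughly four times as many qubits.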

Surface codes are often paired with a technique called ‘lattice surgery’, which is a way to perform logical operations between qubits on a 2D planar architecture. The term “lattice surgery” comes from the way logical qubits are visualized in these topological codes. The logical qubits are often represented each as a square checkerboard “lattice”, with logical information contained in each. “Surgery” refers to the process of manipulating these lattices to perform logical operations.

In lattice surgery, we perform logical operations by merging and splitting these lattice structures. Imagine this as combining two checkerboards to form a larger one or splitting one to create two smaller ones. When we merge, we’re performing a quantum measurement, and when we split, we’re creating quantum entanglement. To illustrate, if we want to perform a logical CNOT operation between two logical qubits (each housed in its own lattice), we create a third, temporary lattice. This temporary lattice is then merged with, and split from, each of the two logical qubits in turn. A significant benefit of lattice surgery is its compatibility with a 2D planar layout, making it suitable for many platforms that only allow short-range connections on a flat chip.

How many physical qubits does one need?

The number of qubits needed depends on the quality of the qubits and the fidelity of the gates that operate on them. At one extreme, if the physical qubits and quantum gates were perfect, a logical qubit would need only one physical qubit because no error correction would be needed. Conversely, as error rates increase, each logical qubit needs a larger and larger number of physical qubits to function properly. This is analogous to the classical intuition: if the classical bit-flip error rate is high, we might need a 5- or 7-bit repetition code, whereas if the error rate is low, a 3-bit repetition code might suffice. At today’s error rates, a high-quality logical qubit might require 100-1000 physical qubits, depending on the desired resilience to errors.
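Both the classical intuition and the rough size of the quantum overhead can be sketched numerically. The repetition-code formula below is exact for independent bit flips. The surface-code estimate, by contrast, rests on assumptions that do not come from this article: a commonly used heuristic in which the logical error rate scales as prefactor × (p / threshold)^((d+1)/2), a threshold near 10⁻², a prefactor of 0.1, and 2d² − 1 physical qubits per logical qubit. Treat its output as an order-of-magnitude guide only.

```python
import math


def repetition_logical_error(p: float, n: int) -> float:
    """Probability that a majority of n bits flip (n odd), each independently with probability p."""
    return sum(
        math.comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


def surface_code_estimate(p: float, target: float,
                          threshold: float = 1e-2, prefactor: float = 0.1) -> tuple:
    """Smallest odd distance d, and a rough physical-qubit count, meeting the target.

    Heuristic assumptions (not from the article): logical error rate of about
    prefactor * (p / threshold) ** ((d + 1) / 2) and 2 * d**2 - 1 physical
    qubits per logical qubit.
    """
    if p >= threshold:
        raise ValueError("physical error rate must be below the threshold")
    d = 3
    while prefactor * (p / threshold) ** ((d + 1) / 2) > target:
        d += 2
    return d, 2 * d * d - 1


if __name__ == "__main__":
    for n in (3, 5, 7):
        print(f"{n}-bit repetition code at p = 0.01:", repetition_logical_error(0.01, n))
    print("surface code (distance, qubits):", surface_code_estimate(1e-3, 5e-10))
```

With a physical error rate of 10⁻³ and a target logical error rate around 10⁻¹⁰, this heuristic gives a code distance in the high teens and several hundred physical qubits per logical qubit, in line with the 100-1000 range quoted above.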

The number of qubits also depends on the specific application. For instance, simulating complex molecules might require more qubits than solving certain physics problems that have natural hardware mappings.

A 2022 paper by Microsoft researchers titled “Assessing requirements to scale to practical quantum advantage” maps the tradeoff between error rate and system size for various applications. It also compares the expected runtime of such an algorithm as a function of the time to complete each quantum operation (this time varies greatly between different quantum implementations). We can see, for instance, that if the error rate and cycle time are low, a quantum chemistry algorithm might require just over a million qubits and about a month to complete, whereas with a higher error rate, that same algorithm might require five million qubits and several months.

Current state of the art

The amount of intensive corporate and academic research into QEC is hardly surprising, considering how important QEC is for the future of quantum computing. Despite very impressive results, it is clear that we are still a long way from practical and usable QEC.

Here are some recent state-of-the-art results:

A Nature publication from the Google Quantum AI team reports a demonstration of a single logical qubit that uses a surface code to suppress errors, specifically showing that error rates became lower with greater code distance (code distance is the minimum number of errors that would cause an undetectable logical error). The team also highlighted the challenges it faced, such as the need for high-fidelity qubits and gates, precise calibration, and fast, low-latency classical control and readout.

Another Nature publication, this time from the University of Innsbruck, demonstrated a fault-tolerant universal set of gates on two logical qubits in a trapped-ion quantum computer. They observed that the fault-tolerant implementation showed suppressed errors compared to a non-fault-tolerant implementation.

An IBM team published on arXiv a demonstration of an error-suppressed encoding scheme using an array of superconducting qubits. The error-suppressed creation of a specific entangled quantum state demonstrated a fidelity exceeding that of the same unencoded state on any pair of physical qubits on the same device.

Another arXiv publication, this time from a Quantinuum team, compared two different implementations of fault-tolerant gates on logical qubits on a twenty-qubit trapped-ion quantum computer. This report also found that the fidelity of the two-logical-qubit gate exceeded that of a two-physical-qubit gate.

Last, this arXiv paper from a Yale group demonstrates the use of quantum error correction to counteract decoherence, showing that the group is able to remove errors faster than these errors corrupt the stored quantum information.

Scalability concerns

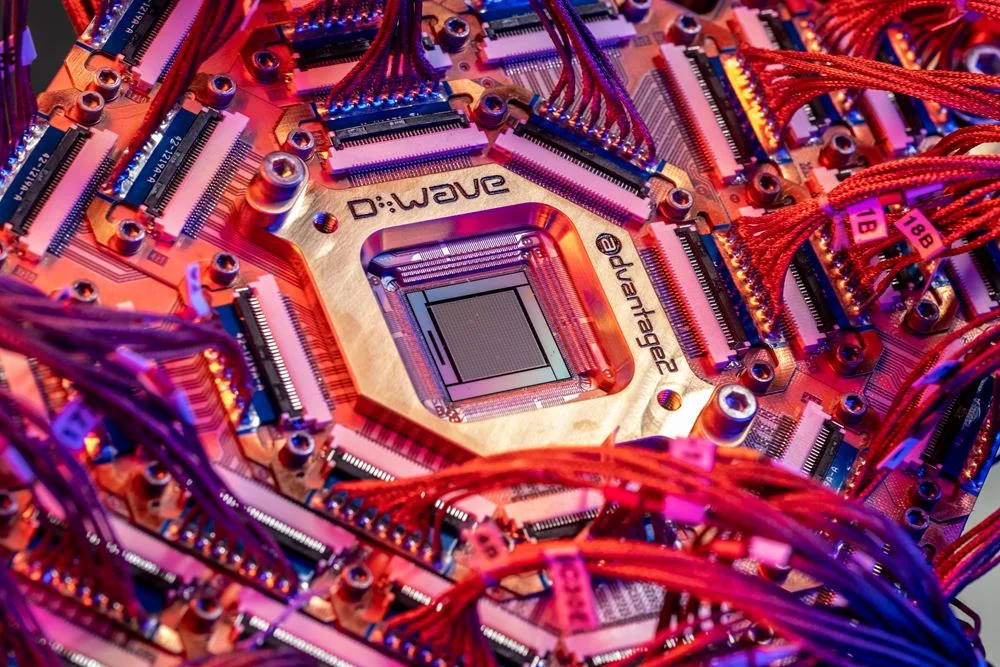

One reason that recent reports typically demonstrate only one or two logical qubits is the limited number of physical qubits available on current quantum machines. Even the most advanced systems, such as IBM’s Seattle machine and QuEra Computing’s Aquila machine, possess only a few hundred qubits each.

Scaling the number of physical qubits in quantum computing presents a significant challenge: managing the multitude of control signals required to operate the millions of physical qubits needed to generate an adequate number of logical qubits for executing meaningful operations. For instance, Google’s impressive 72-qubit quantum computer requires three controls per qubit, leading to hundreds of high-performance control signals for the entire system. In stark contrast, a high-end classical computer, despite housing billions of transistors, only necessitates approximately 1000 external controls. Even a 4K television screen, with over eight million pixels, operates with just a few thousand control signals. So, how can we scale quantum computers without the need for millions of control signals?

A recent study published in Nature by researchers from Harvard and QuEra offers a promising approach using a neutral-atom computer. In this setup, each qubit is represented by a single atom. An array of laser beams traps multiple atoms and performs quantum operations on them by illuminating nearby atoms with light at specific wavelengths. The researchers introduced a method for transporting groups of qubits (for example, a logical qubit) between different zones, each serving a specific function—long-term memory, processing, or measurement and readout. Consequently, control signals for processing are only needed in the processing area, irrespective of the total number of qubits in the system. Moreover, because the light required for a quantum operation can simultaneously illuminate a group of qubits, quantum operations on logical qubits could potentially be performed concurrently by shining light on all the participating physical qubits. This innovative approach is illustrated in the following diagram and further explained in the accompanying video [see here from Harvard, originally published in Nature].

Source: Nature, Apr 20, 2022

Looking ahead

As we track the progress of several groups, it’s reasonable to expect significant advancements in the coming months and years. Some possible manifestations of such progress:

- Additional improvements in the fidelity of single- and two-qubit gates and coherence time.

- Innovative approaches to construct logical qubits more efficiently.

- Demonstrations of simple algorithms that use more than two logical qubits.

- Demonstrations of larger code distances, showing logical qubits that are increasingly robust.

- Additional demonstrations of the superior performance of logical qubits relative to their physical counterparts.

Summary

In the rapidly evolving landscape of quantum computing, quantum error correction (QEC) stands as an unsung hero, quietly underpinning the successful operation of these advanced machines. As we continue to push the boundaries of quantum computing, the role of QEC will only become more crucial. It is the silent guardian that will enable us to navigate the quantum landscape, ensuring the stability and reliability of our quantum computations. In the quest for quantum advantage, QEC is not just an unsung hero; it is an indispensable ally.

Yuval Boger is the chief marketing officer for QuEra, a leader in neutral atom quantum computers.
