Insider Brief

- A new industry report finds real-time quantum error correction has become the central engineering challenge shaping national strategies, investment priorities and company roadmaps.

- Hardware platforms across trapped-ion, neutral-atom, and superconducting technologies have crossed error-correction thresholds, shifting focus toward decoding hardware, system integration and classical bandwidth limits.

- Governments and companies face significant obstacles, including talent shortages, rising system complexity, and the need to validate architectures against measurable performance and cost targets.

Real-time quantum error correction has become the industry’s defining engineering hurdle, reshaping national strategies, private investment, and company roadmaps as the race for utility-scale quantum computers tightens, according to a new technical study spearheaded by Riverlane.

The Quantum Error Correction Report 2025, written in partnership with Resonance, indicates that quantum computing has reached a turning point. Error correction, once treated as an abstract theory, is now the axis around which government funding, commercial strategies and scientific priorities revolve. The report, based on interviews with 25 global quantum computing and QEC experts, including 2025 Nobel laureate John Martinis, finds that all major quantum companies are now pursuing error correction and that a growing share treat it as a competitive edge rather than a research milestone.

The shift marks a broader transition from early lab demonstrations to the first attempts to build full-stack machines capable of reliable work.

The findings show rapid acceleration in error-correction research, rising investments linked to fault-tolerant performance and heightened pressure on companies to integrate classical systems that can process error signals as fast as qubits generate them. The study describes an industry leaving behind early noisy machines and entering a phase driven by engineering integration, real-time decoding hardware, and a widening workforce gap.

The New Center of Gravity

The report identifies real-time quantum error correction as the industry’s main bottleneck. Quantum bits, or qubits, lose information easily, and error correction is the system of checks and encoding methods that keeps information alive long enough for useful calculations. But the analysts indicate the barrier is no longer the qubits alone. It is also the classical electronics that must process millions of error signals per second and feed back corrections within about one microsecond.

Researchers cited in the study describe this as a shift from physics-focused problems to full-stack engineering.
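The "checks and encoding" idea can be illustrated with a classical toy: a three-bit repetition code with parity checks and a majority-vote decoder. This is a minimal sketch for intuition only and is not drawn from the report. Real quantum codes measure parities without reading the data qubits directly and must also handle phase errors. The function names are hypothetical.

```python
def encode(bit: int, n: int = 3) -> list[int]:
    """Encode one logical bit as n identical physical bits."""
    return [bit] * n


def syndrome(codeword: list[int]) -> list[int]:
    """Parity checks between neighbouring bits; a 1 flags a disagreement."""
    return [codeword[i] ^ codeword[i + 1] for i in range(len(codeword) - 1)]


def decode(codeword: list[int]) -> int:
    """Majority vote: recover the logical bit if fewer than half the bits flipped."""
    return int(sum(codeword) > len(codeword) / 2)


word = encode(1)       # [1, 1, 1]
word[0] ^= 1           # a single bit-flip error -> [0, 1, 1]
print(syndrome(word))  # [1, 0]: the first check fires
print(decode(word))    # 1: the logical bit survives
```

In a real-time system the decoding step has to run continuously on a stream of such check results, which is why the classical electronics become part of the bottleneck.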

The challenge now involves building fast decoding hardware, integrating it with control systems and managing data rates that could reach hundreds of terabytes per second. For a sense of scale, that volume is comparable to a single machine handling the streaming load of a global video platform every second.
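How such data rates arise can be sketched with back-of-envelope arithmetic: measured qubits, times measurement rounds per second, times bits carried per measurement. The qubit count, round rate and bit widths below are illustrative assumptions, not figures from the report, and the sketch does not attempt to reproduce the report's estimate.

```python
def readout_rate_bytes_per_s(measured_qubits: int,
                             rounds_per_s: int,
                             bits_per_measurement: int) -> float:
    """Classical data rate generated by repeated qubit measurements."""
    return measured_qubits * rounds_per_s * bits_per_measurement / 8


# Hypothetical machine: one million measured qubits, one round per microsecond.
QUBITS = 1_000_000
ROUNDS_PER_S = 1_000_000

# One bit per measurement (an already-discriminated 0/1 outcome).
compact = readout_rate_bytes_per_s(QUBITS, ROUNDS_PER_S, 1)
print(f"{compact / 1e9:.0f} GB/s")  # 125 GB/s

# Sixteen bits per measurement (assumed richer readout information).
rich = readout_rate_bytes_per_s(QUBITS, ROUNDS_PER_S, 16)
print(f"{rich / 1e12:.0f} TB/s")    # 2 TB/s
```

The rate scales linearly in every factor, so any increase in qubit count, cycle speed or bits kept per measurement raises the classical bandwidth requirement in direct proportion.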

These requirements are pushing quantum companies to redesign their systems around error correction rather than bolt it on later. According to the report, more than half of the companies profiled are actively implementing error correction through dedicated internal teams, and more are elevating it to a strategic priority. The number of firms treating error correction as a differentiator has doubled since last year.

The Hardware Milestones

Multiple hardware platforms crossed the performance threshold needed for error correction in the past 12 months, according to the study. That threshold represents the point at which physical error rates are low enough for error-correction schemes to reduce errors faster than they accumulate.
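The threshold behavior can be sketched with a commonly used rule of thumb for surface codes, in which the logical error rate scales roughly as A(p/p_th)^((d+1)/2) for physical error rate p and code distance d. The prefactor A = 0.1 and threshold p_th = 1 percent used below are illustrative assumptions, not values from the report, and the formula is a heuristic rather than an exact result.

```python
def logical_error_rate(p: float, d: int,
                       p_th: float = 0.01, a: float = 0.1) -> float:
    """Heuristic logical error rate for a distance-d code (assumed constants)."""
    return a * (p / p_th) ** ((d + 1) / 2)


for p in (0.001, 0.012):  # one rate below the assumed threshold, one above
    rates = [logical_error_rate(p, d) for d in (3, 5, 7)]
    trend = "improves" if rates[-1] < rates[0] else "worsens"
    print(f"p={p}: {[f'{r:.1e}' for r in rates]} -> larger code {trend}")
```

Below the assumed threshold, each step up in code distance suppresses logical errors further. Above it, adding more qubits makes matters worse, which is why crossing the threshold is treated as a prerequisite for scaling.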

The report highlights several milestones. Trapped-ion systems reached two-qubit gate fidelities above 99.9 percent. Neutral-atom machines demonstrated early forms of logical qubits. Superconducting platforms showed improved stability in larger chip layouts. These hardware advances mark what the report describes as the first consistent evidence that logical qubits — encoded qubits designed to survive errors — can outperform physical ones in real devices.

Google’s demonstration of a below-threshold memory system last year is cited in the report as a key moment, showing that textbook designs could be reproduced in hardware on a larger scale. Other experiments at Harvard and industrial labs followed, signaling that error correction is moving out of theory and into routine testing.

The report finds that this progress is forcing companies to make hard choices about which error-correction codes to back. Surface codes remain the most mature option, but a wave of new interest has emerged around quantum LDPC codes, bosonic codes and hybrid designs that mix different codes for different tasks. The number of peer-reviewed error-correction papers tripled year over year, indicating a broad expansion of code development.

A New Competitive Landscape

The report shows that fewer companies now rely on error mitigation or suppression techniques, which were popular during the era of noisy intermediate-scale devices. These methods can reduce error impacts but cannot support large-scale computation. The number of firms using error correction grew from 20 last year to 26 this year, a 30 percent jump that reflects a clear pivot away from near-term approaches.

The analysts report that some companies are still testing multiple code types or workflow designs because they remain uncertain about which architecture will ultimately scale. Others are pursuing partnerships that divide the quantum stack into specialized layers. Hardware teams are pairing with separate groups working on control electronics, decoding algorithms, or application software.

An important point repeated throughout the report is that no single qubit technology is positioned to dominate all applications. Instead, different modalities appear suited to different tasks. Some offer geometric flexibility, some allow long-lived states, and others integrate more directly with optical networks.

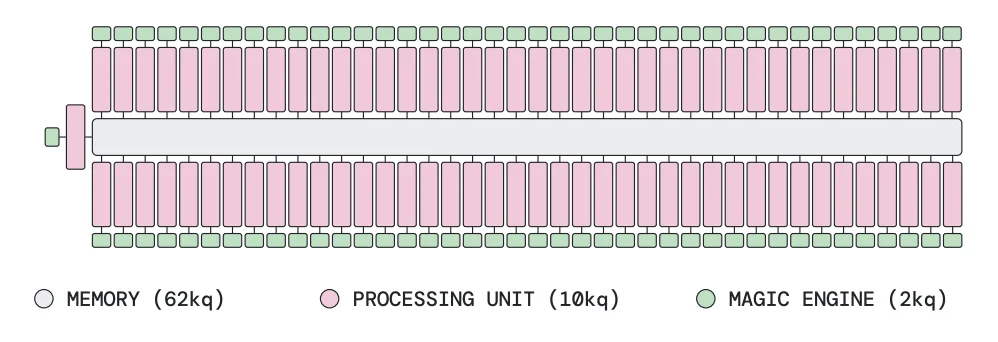

The report suggests future machines may combine modules built on different platforms or even run different error-correction codes for memory, logic, and state preparation.

National Funding and Industrial Strategy

Government spending patterns reflect the growing importance of error correction.

The report estimates that Japan now leads public quantum investment with nearly $8 billion committed, much of it allocated in 2025.

The United States follows with $7.7 billion, driven in part by the Department of Defense’s Quantum Benchmarking Initiative. The study describes this program as a structure built around objective, measurable progress toward a large-scale machine rather than a race to reach an arbitrary milestone.

Under this framework, companies are evaluated on their ability to meet system-level hurdles, including cost, reliability, and integration of the full quantum stack. The initiative plans for the Department of Defense to procure a full utility-scale machine by 2033 and is now spending hundreds of millions of dollars to determine which companies are on track. The report says this structure forces firms to validate their architectures rather than rely on long-term claims.

European and Canadian programs also appear to be shifting toward evaluation-based models. The study points to stronger cross-border partnerships, including collaborations among the United States, United Kingdom and Canada. Facilities such as national testbeds are now focusing on verification, benchmarking, and joint work across the quantum stack.

China’s funding level is not formally confirmed, but the report cites external estimates that place its investment around $15 billion. The study notes that broader geopolitical competition is unlikely to center on qubit counts alone but on the cost and reliability of full-stack, error-corrected systems.

A Growing Workforce Crisis

The report flags workforce shortages as one of the most serious risks to scaling error correction. The global quantum workforce numbers around 20,000 people, but only 1,800 to 2,200 work directly on error correction. These include a smaller core of specialists and a larger group of engineers focused on enabling technologies such as control electronics, classical algorithms and system integration.

Most quantum companies report persistent difficulties in hiring. Half to two-thirds of open roles go unfilled, according to the study. The report projects demand for error-correction specialists to grow severalfold by 2030, driven by the need for real-time systems, expanded decoding hardware, and cross-disciplinary expertise in machine learning, signal processing, and chip design.

Training programs remain limited, and the report emphasizes that traditional physics departments cannot meet the demand alone. The most urgent needs arise in classical engineering fields that sit close to the hardware, especially areas tied to low-latency processing.

The study concludes that the next phase of quantum development will be driven by system integration rather than leaps in raw qubit numbers. Researchers cited in the report say monolithic systems with millions of qubits are unlikely. Instead, modular approaches are expected to dominate, connecting smaller units through quantum networking links. Companies that do not invest in networking technology may end up with smaller, isolated machines that cannot match the scale of networked systems.

The report also emphasizes the growing importance of cost, noting that the most successful architecture may not be the highest performing but the cheapest to scale with a reliable supply chain. This aligns with national strategies that prioritize economically viable pathways toward a utility-scale computer.