Insider Brief

- Google’s Quantum AI team released a five-stage framework to guide the development of useful quantum computing applications, shifting focus from hardware milestones to verified, real-world utility.

- The researchers highlight a lack of progress in the middle stages of the framework — identifying hard problem instances and linking algorithms to practical applications — and call for stronger funding and collaboration in these areas.

- Google urges the creation of open-source tools, cross-disciplinary partnerships, and new measures of success that value demonstrated usefulness over qubit counts.

How Is Google Quantum AI Defining a Clear Roadmap for Useful Quantum Computing?

Google’s five-stage roadmap is a structured model that explains how quantum algorithms move from abstract theory to real-world applications, guiding researchers toward measurable usefulness instead of hardware-driven milestones.

“Sometimes, history happens all at once. A few short years can usher in decades of progress and innovation. We’re now seeing that with quantum computing. Forty years of research, work and investment are converging, and the grand challenge of building large-scale, capable quantum computers is within humanity’s reach.” — Ryan Babbush, Director of Research, Quantum Algorithms and Applications

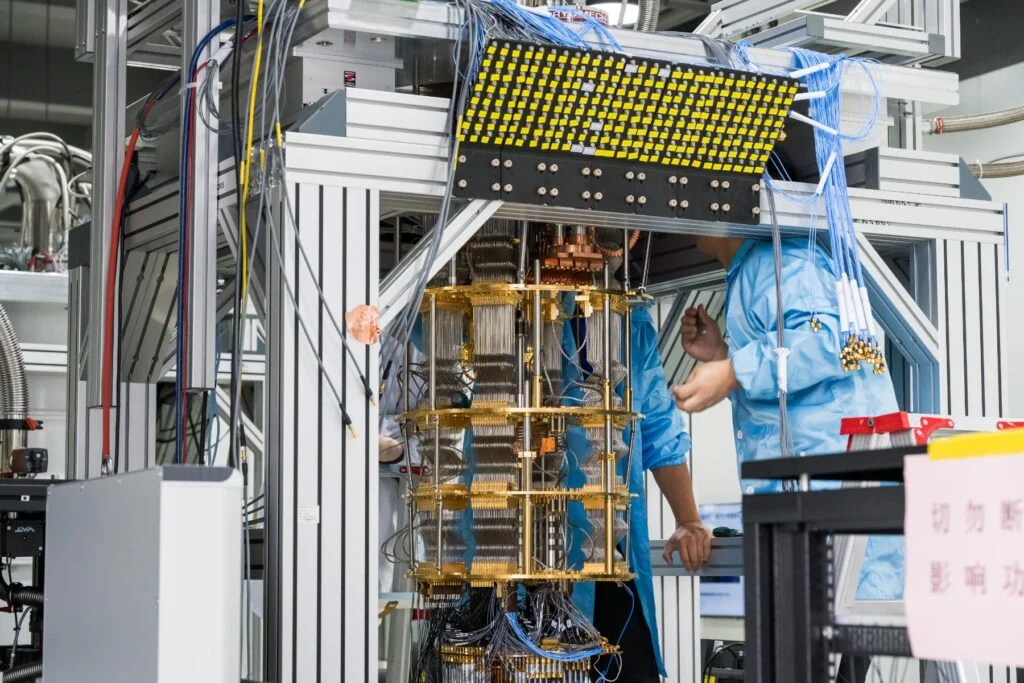

As real quantum computers come within humanity’s reach, Google’s Quantum AI team has proposed a five-stage framework to tighten scientists’ grasp on developing useful quantum computing applications, arguing that the next era of progress depends as much on sociology and verification as on hardware and algorithms.

In a new paper published on the pre-print server arXiv, the researchers present what they call a “conceptual model of application research maturity” designed to help the field move from abstract theory toward practical, real-world use.

What Are the Five Stages of the Quantum Advantage Framework?

The framework spans five stages:

- Discovering new algorithms

- Identifying hard problem instances

- Demonstrating advantage on real-world tasks

- Optimizing for implementation, and finally

- Deploying quantum solutions in production workflows

“Justifying and sustaining the investment in research, development and infrastructure for large-scale, error-corrected quantum computing hinges on the community’s ability to provide clear evidence of its future value through concrete applications,” the team writes. “Further, in order to maintain technological momentum, it is critical to match investment growth and hardware progress with algorithmic capabilities.”

Stage One: Discovery and Decline

The first stage, “discovery of new quantum algorithms in an abstract setting,” covers the earliest conceptual work – the mathematical foundations that make quantum computing distinct. But the researchers warn that fewer scientists are focused on this stage than in the past.

“We observe that despite a dramatic increase in the size of the quantum computing field throughout the last decade, a smaller percentage of researchers today seem to be working on certain foundational topics in algorithms than in the past,” they write, adding: “This trend is particularly concerning in the context of post-quantum cryptography. The security of such schemes can only be established by a robust community effort to find quantum attacks.”

The researchers attribute this decline to structural incentives that encourage “risk-averse behavior.”

They add that a single discovery can redirect years of research.

“There is a large potential upside: a short paper with a genuinely new idea can redirect years of effort,” the team writes. “Evaluation and funding mechanisms ought to reward originality, problem-finding, and careful exploration of hard ideas (including well-documented negative results), not only incremental improvements, which would empower more researchers to aim for breakthroughs.”

| Element | Explanation | Impact |
|---|---|---|
| Focus of Stage One | Discovery of new quantum algorithms in abstract mathematical settings | Lays the foundation for all future quantum work |
| Current Decline | A smaller percentage of researchers working on foundational algorithms than in past years | Weakens the search for quantum attacks that post-quantum cryptography depends on and slows core innovation |
| Core Challenge | Risk-averse incentives push researchers toward incremental work | Discourages breakthroughs and high-impact discoveries |
| Google Recommendation | Funding programs that reward originality and exploration | Increases likelihood of transformative algorithmic ideas |

Overall, Stage One highlights the need for renewed investment in bold, foundational research that can unlock future quantum breakthroughs.

Stage Two: Finding Hard Instances

The second stage involves identifying problem instances that show quantum advantage, a bridge between theory and practice that the researchers say remains thinly populated. The researchers define this stage as one where concrete problem instances, even contrived ones, can be efficiently generated and a quantum computer is expected to outperform classical algorithms on them.

They acknowledge that Stage II is both essential and often underestimated.

“Despite its critical role as a bridge from theory to practice, Stage II is frequently overlooked by the quantum community, and its difficulty is often underestimated,” they write. “However, those working at the intersection of quantum computing and cryptography often do focus on Stage II results because cryptosystems based on computational security usually require average-case notions of problem complexity.”

Even seemingly simple examples, they note, rarely hold up to real-world scrutiny. In fact, the researchers report that many algorithms remain theoretical because their advantages exist only in abstract models, not in concrete, testable problems.

The researchers call Stage II just as central to the quest for quantum advantage as Stage I and argue that the scarcity of Stage II results underscores a fundamental issue:

“Stage II is not merely a prerequisite for useful applications — it represents a fundamental question at the core of understanding the complexity of quantum physics,” they write.
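The cryptography connection the researchers draw is the textbook case of a Stage II result. For factoring, hard-looking instances are trivial to generate (multiply two large random primes), they are believed to be hard for classical computers on average, and Shor's algorithm is expected to factor them efficiently on a sufficiently large fault-tolerant quantum computer. The Python sketch below is our own illustration, not code from the paper: the function names are hypothetical, the primality check is the standard Miller-Rabin probabilistic test, the seeded `random.Random` is used only for reproducibility (a real key generator would use a cryptographically secure source such as Python's `secrets` module), and 256-bit moduli are far too small to be classically hard.

```python
import random


def is_probable_prime(n: int, rng: random.Random, rounds: int = 40) -> bool:
    """Miller-Rabin probabilistic primality test."""
    if n < 2:
        return False
    # Trial division by small primes handles tiny inputs and cheap rejections.
    for p in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37):
        if n % p == 0:
            return n == p
    # Write n - 1 as d * 2**s with d odd.
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(rounds):
        a = rng.randrange(2, n - 1)
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False  # a is a witness that n is composite
    return True


def random_prime(bits: int, rng: random.Random) -> int:
    """Random prime with exactly `bits` bits and its top two bits set."""
    while True:
        # Setting the top two bits guarantees the product of two such primes
        # has exactly 2 * bits bits; setting the low bit makes it odd.
        candidate = rng.getrandbits(bits) | (3 << (bits - 2)) | 1
        if is_probable_prime(candidate, rng):
            return candidate


def make_factoring_instance(modulus_bits: int, seed: int) -> tuple[int, tuple[int, int]]:
    """Hypothetical helper: return (N, (p, q)) with N = p * q of `modulus_bits` bits.

    Generating N and checking a claimed factorization are both cheap;
    recovering p and q from N alone is believed to be classically hard
    at cryptographic sizes.
    """
    rng = random.Random(seed)  # seeded only so the example is reproducible
    half = modulus_bits // 2
    p = random_prime(half, rng)
    q = random_prime(half, rng)
    while q == p:
        q = random_prime(half, rng)
    return p * q, (p, q)


n, (p, q) = make_factoring_instance(256, seed=2025)
print(n.bit_length(), p * q == n)  # prints: 256 True
```

The asymmetry is the point: producing the instance and verifying an answer take milliseconds, while the search for p and q is where a quantum computer is expected to help.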

| Element | Explanation | Impact |
|---|---|---|
| Focus of Stage Two | Identifying concrete tasks where quantum systems are expected to outperform classical ones | Creates a bridge between theory and real usefulness |
| Current Challenge | Often underestimated and overlooked by the broader community | Few verified quantum advantage examples exist |
| Why Hard Instances Matter | They turn abstract algorithmic claims into concrete, testable problems | Supports investment and motivates further discovery |
| Google Recommendation | Stronger focus on problem complexity, including the average-case analysis common in cryptography | Strengthens pathways toward demonstrable quantum advantage |

Stage Three: Bridging the Theory-to-Application Gap

Outside of cryptanalysis and quantum simulation, few algorithms have advanced to what the paper calls Stage III — establishing quantum advantage in a real-world application. The researchers write that this is the most significant bottleneck in delivering value.

They report that the imbalance is both technical and cultural.

“A significant concern is the relative lack of academic attention devoted to Stage III compared to the earlier stages,” they write. “Within the research community, Stage I and II work is sometimes (in our view, unfairly) perceived as more ‘fundamental’ and thus, more important than Stage III.”

The researchers add that the field has a growing portfolio of abstract algorithms but a severe scarcity of demonstrated, practical use cases. The team describes this gap as an impediment to the overall health of the field.

Stage III is also described as requiring “a rare, cross-disciplinary skill set” that can connect abstract theory with practical problems; the researchers say this work “demands breadth over depth.” They contrast this with quantum simulation, where physicists and chemists often already have domain expertise.

The team cautions that “problem-first” approaches — starting from a user’s challenge — rarely lead to successful quantum applications.

They write: “A more effective strategy is the ‘algorithm-first’ approach: begin with a known quantum primitive (e.g., quantum simulation) that offers a clear advantage, and then search for real-world problems that map onto the required mathematical structure. We posit that generative artificial intelligence might be a useful tool for helping to bridge the knowledge gap between disparate fields.”

In case the quote is heavy on jargon: a quantum primitive is a core building block, a basic capability that quantum computers perform especially well. Quantum computers have a few such capabilities, such as simulating molecules or searching through vast amounts of data.

Based on the researchers’ recommendations, rather than starting with a business challenge such as optimizing delivery routes, teams might begin with a known quantum primitive like quantum simulation, which naturally excels at modeling complex molecular interactions. From there, they would look for real-world applications that fit the same mathematical framework, such as designing new materials for batteries, developing pharmaceuticals, or improving catalysts for clean energy.
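The direction of that search can be sketched as a toy lookup: start from a primitive's required mathematical structure and filter a catalogue of problems for matches, rather than starting from one problem and hunting for a speedup. This is a minimal Python illustration under loud assumptions: the `Primitive` class, the tag vocabulary and the problem catalogue are all hypothetical, and hand-written tags stand in for the much harder recognition step (spotting the same structure under another field's terminology) that the researchers hope AI tools can help with.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Primitive:
    name: str
    structure: frozenset[str]  # hypothetical tags for the math the speedup needs


def candidate_applications(primitive: Primitive, problems: dict[str, frozenset[str]]) -> list[str]:
    """Algorithm-first search: start from a primitive and keep every problem
    whose description contains all of the primitive's required structure."""
    return sorted(name for name, tags in problems.items() if primitive.structure <= tags)


# Toy catalogue; the tags are illustrative assumptions, not a real taxonomy.
SIMULATION = Primitive(
    "quantum simulation",
    frozenset({"hamiltonian", "quantum many-body"}),
)
PROBLEMS = {
    "battery materials": frozenset({"hamiltonian", "quantum many-body", "electronic structure"}),
    "pharmaceutical design": frozenset({"hamiltonian", "quantum many-body", "electronic structure"}),
    "clean-energy catalysts": frozenset({"hamiltonian", "quantum many-body", "reaction pathways"}),
    "delivery route optimization": frozenset({"combinatorial optimization", "graph"}),
}

print(candidate_applications(SIMULATION, PROBLEMS))
# prints: ['battery materials', 'clean-energy catalysts', 'pharmaceutical design']
```

The filter itself is trivial; in practice the difficulty lies in tagging problems correctly, which is where the cross-disciplinary expertise the researchers describe comes in.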

To accelerate this process, Google Quantum AI is building a “Compendium of Super-Quadratic Quantum Advantage,” a public guide to known algorithms that could inspire domain experts to find new applications. The researchers say large language models could assist in this effort.

“The agent’s task would not be to invent, but to recognize: to scan its knowledge base for real-world problems that match the structure of known quantum speedups, even if they are described using different terminology in another field’s literature,” the researchers write. “Our team has already seen early success with this approach using an internal Google tool based on Gemini.”

They argue that cultural change may be just as important as technical discovery.

The team writes that its backing of the Google Quantum AI XPRIZE Quantum Applications competition was meant to inspire more researchers to tackle the challenges of Stage III.

| Element | Explanation | Impact |
|---|---|---|
| Focus of Stage Three | Demonstrating quantum advantage in a real-world application | Defines practical value of quantum computing |
| Current Challenge | Outside cryptanalysis and quantum simulation, very few algorithms have reached Stage Three | Gap between theory and industry needs widens |
| Skill Requirement | Cross-disciplinary expertise spanning physics, algorithms and real-world domains | Limits the number of teams able to advance Stage Three work |
| Google Recommendation | Begin with quantum primitives rather than user problems (“algorithm-first”) | Improves likelihood of mapping tasks to real quantum advantages |

Stage Four: Engineering the Advantage

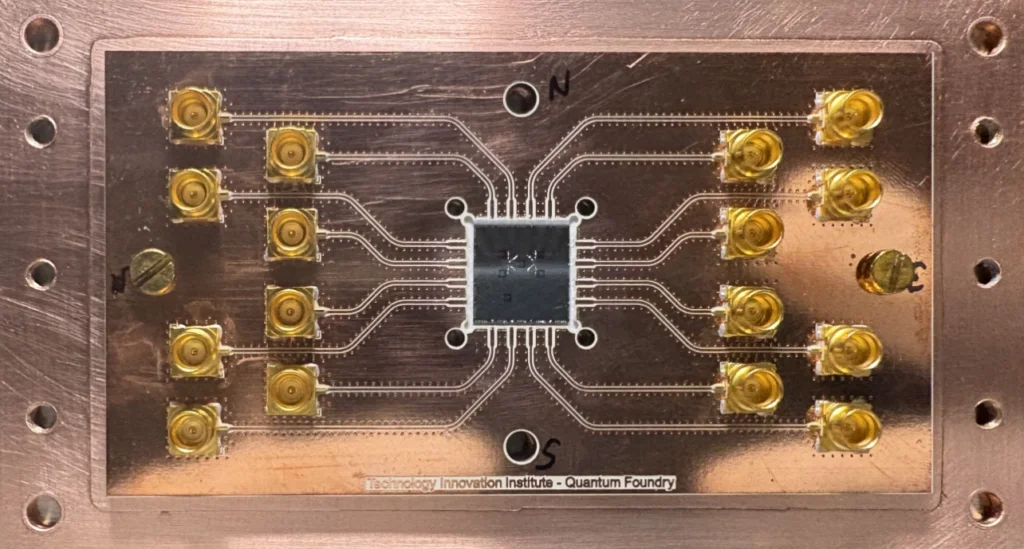

Once a viable use case is identified, the work moves into Stage IV — optimizing, compiling and estimating resources for implementation. This stage, according to the researchers, marks a shift “from asymptotic analysis to concrete, finite resource costs, such as the exact number of qubits and gates required.”

Word of warning: this part of the process can be detailed and slow.

“This can be extraordinarily tedious, difficult to verify, and imprecise,” the researchers warn. “As a case in point, several researchers from the authors’ team worked for well over a year on an 80-page paper just to determine the constant factors in an algorithm that was introduced and described asymptotically in a preceding 7-page paper.”
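To see why those constant factors matter, consider the problem size at which a quantum method actually overtakes a classical one. The Python sketch below is our own illustration with made-up cost models, not numbers from the paper: both quantum models have the same asymptotic (quadratic) speedup, yet a tenfold difference in the per-step constant moves the crossover point tenfold. The `crossover_size` helper and all timings are hypothetical, and the search assumes a single crossover (once quantum is cheaper, it stays cheaper).

```python
from typing import Callable, Optional

CostModel = Callable[[int], int]  # problem size -> runtime in nanoseconds


def crossover_size(classical: CostModel, quantum: CostModel, n_max: int = 10**12) -> Optional[int]:
    """Smallest problem size n <= n_max at which the quantum cost is strictly lower.

    Brackets the crossover by doubling, then narrows it with binary search.
    Assumes a single crossover point.
    """
    lo, hi = 0, 1  # invariant: quantum not cheaper at lo (or lo == 0)
    while quantum(hi) >= classical(hi):
        if hi >= n_max:
            return None
        lo, hi = hi, min(2 * hi, n_max)
    while hi - lo > 1:  # invariant: quantum cheaper at hi
        mid = (lo + hi) // 2
        if quantum(mid) < classical(mid):
            hi = mid
        else:
            lo = mid
    return hi


# Hypothetical cost models (not figures from the paper), in nanoseconds:
# a classical method taking n**2 steps at 1 ns each, and a quadratically
# faster quantum method taking n steps at 100 microseconds each once
# error-correction overhead is included.
def classical_ns(n: int) -> int:
    return n * n


def quantum_ns(n: int) -> int:
    return 100_000 * n


def quantum_ns_optimized(n: int) -> int:
    return 10_000 * n  # same asymptotics, 10x smaller constant factor


print(crossover_size(classical_ns, quantum_ns))            # 100001
print(crossover_size(classical_ns, quantum_ns_optimized))  # 10001
```

With these toy numbers the slower quantum model only wins beyond about 100,000 items and the optimized one beyond about 10,000. Asymptotic analysis alone cannot tell the two apart, which is why Stage IV has to pin down the exact number of qubits and gates.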

The paper credits sustained Stage IV research with reducing the resources required for key tasks, such as simulating molecular systems or breaking RSA encryption, by many orders of magnitude over the last decade. The researchers also point to the emergence of new tools like Qualtran, Q#, QREF, and Bartiq that automate parts of the process and make estimates more consistent.

As the field enters the “early fault-tolerant era,” the researchers emphasize that algorithm development must be coordinated with hardware design.

They add: “… we caution that simple rules of thumb such as ‘focus on reducing circuit depth and the number of logical qubits’ are often insufficient (and sometimes actively harmful) for guiding the search for early fault-tolerant algorithms.”

The fifth and final stage — application deployment — remains prospective. The researchers note that no quantum computation has yet demonstrated a clear advantage on a real-world problem, though early efforts like certified random number generation hint at what the path might look like.

Ultimately, the framework reframes the conversation around quantum progress from hardware milestones to application maturity.

| Element | Explanation | Impact |
|---|---|---|
| Focus of Stage Four | Optimization, compilation and resource estimation, including qubits, gates and overhead | Transforms theory into implementable systems |
| Current Challenge | Estimation is tedious, difficult to verify and imprecise | Delays deployment of promising algorithms |
| Supporting Tools | Qualtran, Q#, QREF, Bartiq | Improve automation and consistency of estimates |
| Google Recommendation | Coordinate algorithm design with hardware constraints | Prepares for early fault-tolerant quantum systems |

Stage Five: Deployment and Next Steps

In a companion blog post written by Ryan Babbush, Google’s Quantum AI team outlined several priorities for accelerating progress toward useful quantum computing. The researchers emphasize expanding support for the middle stages of their framework — the transition from theoretical algorithms to demonstrable applications. They call for governments, funding agencies and private investors to focus on research that verifies and scales potential quantum advantages.

They also point to the need for shared, open-source tools that make application research more reproducible, such as resource estimators and algorithm libraries. Google plans to continue promoting external collaborations and competitions, including its Quantum AI XPRIZE, to encourage cross-disciplinary teams to explore practical use cases.

The post concludes that real progress will depend on tighter integration between quantum engineers, AI researchers and specialists in fields such as chemistry, materials science, and logistics. It argues that future milestones should be measured less by qubit counts and more by the number of problems quantum computers can solve more effectively than classical ones.

In addition to Babbush, the research team included Dave Bacon, Andrew Childs, Sergio Boixo, Jarrod McClean, Hartmut Neven, John Platt, Masoud Mohseni, Thomas E. O’Brien, Vadim Smelyanskiy, Peter Love and Austin G. Fowler. They represent Google Quantum AI and collaborating institutions including the University of Maryland and Tufts University.

For a deeper, more technical dive, please review the paper on arXiv. It’s important to note that arXiv is a pre-print server, which allows researchers to receive quick feedback on their work. However, neither the paper nor this article is an official peer-reviewed publication. Peer review is an important step in the scientific process to verify results.

| Element | Explanation | Impact |
|---|---|---|
| Focus of Stage Five | Deployment into real production environments | Defines measurable quantum utility |
| Current Status | No confirmed real-world quantum advantage yet | Represents the industry’s ultimate open goal |
| Research Needs | Open-source tools, interdisciplinary teams and verification pipelines | Supports scalable application research |
| Google Recommendation | Focus on usefulness over qubit counts | Shifts the field toward practical, demonstrated value |

Frequently Asked Questions

What is Google’s five-stage framework for quantum computing?

Google’s Quantum AI team developed a five-stage framework to guide the development of useful quantum computing applications, spanning from discovering new algorithms to deploying quantum solutions into production workflows.

What are the five stages in Google’s framework?

The five stages are: Stage I – Discovery of new quantum algorithms in abstract settings, Stage II – Identifying hard problem instances that show quantum advantage, Stage III – Demonstrating advantage on real-world tasks, Stage IV – Optimizing for implementation and resource estimation, and Stage V – Application deployment into production.

Why did Google create this framework?

The framework shifts focus from hardware milestones to verified, real-world utility. Google argues that justifying and sustaining investment in quantum computing requires clear evidence of future value through concrete applications.

What is the biggest bottleneck in quantum computing development?

The researchers highlight a lack of progress in the middle stages of the framework — identifying hard problem instances and linking algorithms to practical applications. Outside of cryptanalysis and quantum simulation, few algorithms have advanced to Stage III, establishing quantum advantage in a real-world application.

What is the “algorithm-first” approach?

Rather than starting with a business challenge, the algorithm-first approach begins with a known quantum primitive that offers a clear advantage, then searches for real-world problems that map onto the required mathematical structure. Google suggests this is more effective than the “problem-first” approach.

What is a quantum primitive?

A quantum primitive is a core building block or basic capability that quantum computers perform especially well, such as simulating molecules or searching through vast amounts of data.

How can AI help quantum computing development?

Google suggests that generative artificial intelligence might help bridge the knowledge gap between disparate fields. AI could scan its knowledge base for real-world problems that match the structure of known quantum speedups, even if they are described using different terminology in other fields’ literature.

What should future quantum milestones measure?

Google’s Quantum AI team argues that future milestones should be measured less by qubit counts and more by the number of problems quantum computers can solve more effectively than classical ones.

What does Google recommend for accelerating quantum progress?

Google calls for governments, funding agencies and private investors to focus on research that verifies and scales potential quantum advantages, particularly supporting the middle stages of the framework. They also recommend creating shared, open-source tools for more reproducible research.