It is difficult to overstate the transformational potential of quantum technologies. Advances in the field will have far-reaching implications in areas ranging from time-keeping, through imaging and sensors, to communication and computing. Commercial applications of computing might take the longest to realise but we are truly excited by the progress we have seen in the past 24 months.

In the first of this two-part series, we provide an accessible introduction to quantum computing technology and the key challenges it faces on the road to commercialisation. The second part will focus on the anticipated applications, provide an analysis of the activity in the early-stage UK ecosystem, and discuss key investment considerations.

1. Premise of quantum computing — it’s all about the qubit

In brief, quantum computing (“QC”) is a technology for storing and processing information based on the science of quantum mechanics.

To understand the basics of QC, it is first useful to go through some of the concepts used in traditional (or ‘classical’) computing. Classical computers use “bits” as their basic data-processing and data storage unit. The storage and computational capacity of a computer depends on its bit count. The supercomputers of today have been made possible because scientists have been able to double the transistor (and thus bit) count per machine every couple of years (Moore’s law has been surprisingly accurate at describing the pace of innovation). However, we may be close to the limit of how small a transistor can be made, which naturally limits further significant computational gains for classical computers.

A key property of bits is that they can only take a state of 0 or 1 at any one time. The basic computational unit of QCs, on the other hand, is the quantum bit, or “qubit”. Qubits harness the principles of superposition and entanglement to represent data:

- Superposition (illustrated on the left) is a principle of quantum mechanics describing the notion that objects can exist in multiple states simultaneously. Thanks to this property, qubits can exist in a superimposed state of 0 and 1, including all states in between. Note that this is fundamentally different from a bit, which has a definite value of 0 or 1.

- Entanglement is the ability of two particles to have correlated quantum states, i.e. to behave in a coordinated and dependent way even if significant distances separate them.

Qubits are probabilistic in nature. They do not have a pre-determined and knowable state. Instead, they exist simultaneously in multiple different states that are said to be ‘coherent’ with each other, i.e. the overall state of the system can be mathematically represented as a sum of all the individual states:

- Complex number coefficients are used to represent the weight of each state. For a 2-qubit system, the state is described, and information stored, using 4 coefficients: α |00⟩ + β |01⟩ + γ |10⟩ + δ |11⟩ such that |α|² + |β|² + |γ|² + |δ|² = 1. By comparison, a 2-bit system is described with 2 values, one per bit.

- When the system is measured (i.e. observed in any way), only one of the states can be detected. For example, a qubit exists simultaneously in the states of 0 and 1 but when it is measured, it will collapse to a state of either 1 or 0, thereby resulting in some loss of information. Hence, to an observer who measures the system, qubits behave like bits.
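The two points above can be made concrete with a minimal sketch in plain Python. It is an illustration on a classical computer, not how a quantum device works internally: the function names `normalise` and `measure` and the example coefficients are our own assumptions. It stores a 2-qubit state as four complex coefficients, rescales them so that the squared magnitudes sum to 1, and then simulates a measurement that returns a single outcome.

```python
import math
import random

# Basis states in the order the coefficients are listed: alpha, beta, gamma, delta.
LABELS = ["00", "01", "10", "11"]

def normalise(amplitudes):
    """Rescale the coefficients so that the squared magnitudes sum to 1."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in amplitudes))
    return [a / norm for a in amplitudes]

def measure(amplitudes, rng):
    """Simulate one measurement: pick a single basis state with probability |coefficient|^2."""
    weights = [abs(a) ** 2 for a in amplitudes]
    return rng.choices(LABELS, weights=weights, k=1)[0]

# Hypothetical example coefficients (illustrative values only).
state = normalise([1 + 1j, 2, 0, 1j])

rng = random.Random(0)  # fixed seed so repeated runs give the same samples
outcomes = [measure(state, rng) for _ in range(1000)]
print({label: outcomes.count(label) for label in LABELS})
```

Each call to `measure` returns just one two-bit string, so the four coefficients themselves are never observed directly. The state "10" has a coefficient of zero here and therefore never appears among the outcomes.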

The main advantages of QCs vs. classical computers relate to superior computational power and, by implication, superior problem-solving capabilities (both faster and more complex) enabled by manipulating information using qubits.

- Thanks to superposition, a qubit can store more information than a bit (multiple states vs. one).

- The principle of entanglement allows the states of qubits to be correlated even when they are far apart. This means that the information encoded in a QC system grows exponentially with the qubit count: describing the state of n qubits takes 2ⁿ coefficients, so 2 qubits are described by 4 values, 3 qubits by 8, 4 qubits by 16, and so on. The information is encoded in the complex coefficients that describe the state.

- Superposition and entanglement enable qubits to process all combinations of 0s and 1s at the same time; bits do the calculations in succession.
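As a rough illustration of superposition and entanglement working together, the sketch below simulates two qubits on a classical computer. It uses two standard quantum gates that this article does not otherwise cover, the Hadamard gate and the controlled-NOT gate; the function names are our own, and the code is a toy model rather than a description of any real device.

```python
import math
import random

# Coefficients are listed in the order |00>, |01>, |10>, |11> (qubit A first).
LABELS = ["00", "01", "10", "11"]

def hadamard_on_a(state):
    """Put qubit A into an equal superposition of 0 and 1."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot_a_controls_b(state):
    """Flip qubit B whenever qubit A is 1 (swaps the |10> and |11> coefficients)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def sample(state, shots, seed=0):
    """Simulate repeated measurements of freshly prepared copies of the state."""
    rng = random.Random(seed)
    weights = [abs(a) ** 2 for a in state]
    return rng.choices(LABELS, weights=weights, k=shots)

start = [1.0, 0.0, 0.0, 0.0]                     # both qubits start in 0
bell = cnot_a_controls_b(hadamard_on_a(start))   # superposition, then entanglement
outcomes = sample(bell, shots=1000)
print(sorted(set(outcomes)))
```

Only the outcomes "00" and "11" can occur: each qubit on its own looks like a coin flip, yet the two results always agree. That correlation is what entanglement refers to.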

However, we should emphasise that the ‘superiority’ is dependent on the particular problem and use case, i.e. QCs are unlikely to replace classical computers completely. Instead, as discussed in the second article in this series, QCs will excel at particular problem types and due to their unique properties will enable us to solve new types of challenges.

The following table provides a comparison between classical and quantum computing.

To illustrate the transformational potential QCs could have, consider that the processing power in a smartphone equates to 10–15 qubits, a high-end laptop is equivalent to about 25–30, while the most advanced supercomputers could be matched by 50 qubits. QC prototypes with 50 qubits already exist but these tend to be unstable and prone to errors.
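One way to get a feel for the exponential growth described earlier is to count how much classical memory would be needed just to store the full state of n qubits. The sketch below is a back-of-the-envelope calculation under our own assumption of 16 bytes per coefficient (two 64-bit floating-point numbers). It is not the basis of the device comparisons quoted above.

```python
def coefficient_count(n_qubits):
    """Number of complex coefficients describing the state of n qubits."""
    return 2 ** n_qubits

def classical_memory_bytes(n_qubits, bytes_per_coefficient=16):
    """Memory needed to hold every coefficient.

    Assumption: 16 bytes per coefficient (real and imaginary parts as 64-bit floats).
    """
    return coefficient_count(n_qubits) * bytes_per_coefficient

for n in (2, 3, 4, 30, 50):
    print(n, coefficient_count(n), classical_memory_bytes(n))
```

Under this assumption, 30 qubits need 2³⁴ bytes (16 GiB) and 50 qubits need 2⁵⁴ bytes (16 PiB). Each extra qubit doubles the requirement.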

2. The QC stack — a new approach to software and hardware development required at every level

The QC stack is analogous to the one used in classical computers. However, a new approach is needed at almost every level.

Quantum Computing: Progress and Prospects by Emily Grumbling and Mark Horowitz provides an excellent overview of the QC stack. The schematic below illustrates the high-level components of a QC hardware (beige) and software (purple) stack.

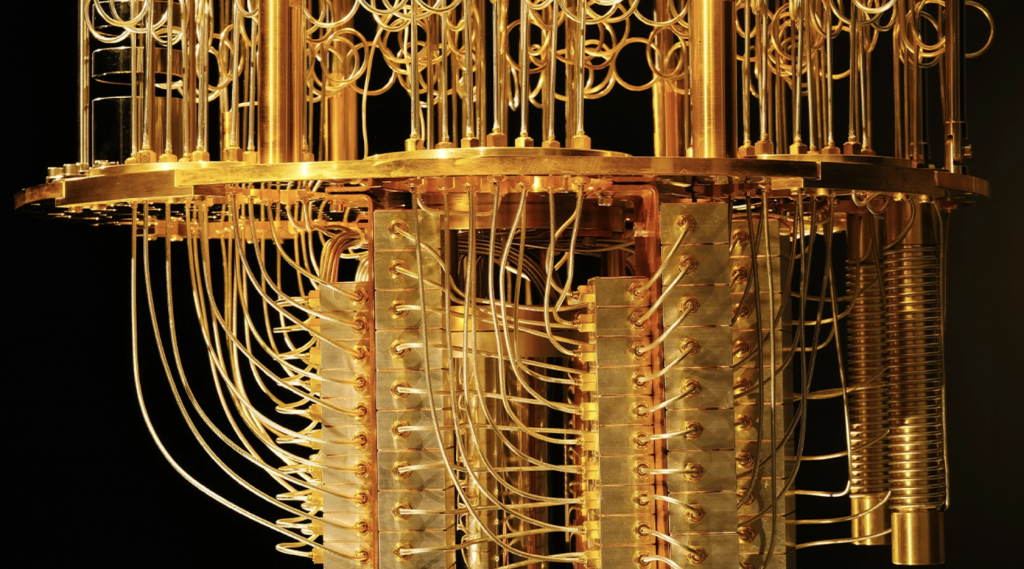

Starting with hardware, the quantum processor consists of both classical and quantum technologies housed in four layers:

- Quantum data plane — houses the physical qubits and any circuits needed to perform measurement and gate operations. The signals used in this plane are analog.

- Control and measurement plane — contains the hardware needed to convert the digital signals of the control processor (i.e. those describing the desired operations) to the analog signals that can be interpreted by the qubits. It also converts the analog measurements of the qubit state back into digital signals. The control signals that drive the operations are usually handled by sophisticated generators that are built with classical technologies.

- Control processor plane — determines the sequence of gate operations and measurements that the algorithm requires. It can use the measurement outcomes to inform subsequent quantum operations. Error correction is also performed in this layer. The control processor converts compiled code to instructions for the control and measurement plane.

- Host processor — a classical computer that provides all of the software development tools and services users need to create applications to be run on the control processor.

The hardware would be practically useless unless a software layer is developed to translate application commands into machine-readable code. The software stack for QCs has three high-level components:

- Quantum programming language — new approaches are required compared to classical computers in order to exploit some of the inherent properties of quantum mechanics. Software operates at different levels of abstraction, and each level might use a different language. Programmers operating at the highest level of abstraction can work independently of the constraints of the specific system architecture.

- Compilers translate algorithms into machine-readable code. They are crucial when optimising the execution speed of the system and can reduce the number of qubits required. Compilers can be built in parallel with, or independently of, the hardware using simulator tools and resource estimators.

- Due to the fragility of the qubit states, error correction is critical for developing a large-scale QC. Error correction spreads the information of each qubit across several redundant physical qubits using a quantum error correction code. The resulting encoded qubits, which have much lower error rates than the physical qubits they are built from, are called logical qubits. A classical measurement mechanism is used to check the system for errors, which can then be corrected using the redundancy built into the logical qubits.
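To illustrate the idea of a logical qubit, here is a minimal sketch of the three-qubit bit-flip code, one of the simplest textbook error correction codes. It is a classical simulation with function names of our own choosing. It only handles a single bit-flip error, and it reads the error "syndrome" straight from the simulated state, whereas a real device would have to measure it using extra helper qubits.

```python
def encode(alpha, beta):
    """Spread one qubit (alpha|0> + beta|1>) over three: alpha|000> + beta|111>."""
    state = [0j] * 8
    state[0b000] = alpha
    state[0b111] = beta
    return state

def flip(state, qubit):
    """Apply a bit-flip error to one physical qubit (qubit 0 is the leftmost bit)."""
    mask = 1 << (2 - qubit)
    return [state[i ^ mask] for i in range(8)]

def syndrome(state):
    """Parities of qubit pairs (0, 1) and (1, 2).

    Simulation shortcut (assumption): the parities are read from a basis state
    with a non-zero coefficient. They are the same for every such basis state,
    which is why checking them does not disturb alpha and beta.
    """
    index = next(i for i, a in enumerate(state) if abs(a) > 1e-12)
    b0, b1, b2 = (index >> 2) & 1, (index >> 1) & 1, index & 1
    return (b0 ^ b1, b1 ^ b2)

# Which physical qubit to flip back for each syndrome; (0, 0) means no error found.
CORRECTION = {(1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(state):
    """Undo a single bit-flip error based on the syndrome."""
    s = syndrome(state)
    return flip(state, CORRECTION[s]) if s in CORRECTION else state

logical = encode(0.6, 0.8)      # hypothetical coefficients, 0.36 + 0.64 = 1
damaged = flip(logical, 1)      # an error hits the middle physical qubit
print(syndrome(damaged), correct(damaged) == logical)
```

The syndrome reveals which physical qubit was flipped without revealing the coefficients themselves, so the error can be undone without measuring, and thereby destroying, the stored information.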

Current state-of-the-art quantum devices require significant optimisation. Algorithms are often designed for and individually optimised to the specific hardware implementation. Hence, software and hardware development is performed concurrently. In fact, the vast majority of hardware manufacturers started off by adopting a full-stack approach, i.e. taking ownership of both hardware and software. This is not too dissimilar to the way classical computers evolved in the early days of their development. We expect increasing specialisation to occur over time for QCs too.
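As a small illustration of the kind of optimisation a compiler can perform, the sketch below removes pairs of gates that cancel each other out. It assumes a hypothetical circuit format of our own, a list of `(gate_name, qubits)` tuples, and relies on the fact that the Hadamard, X, Z and controlled-NOT gates each undo themselves when applied twice in a row to the same qubits. Real compilers are far more sophisticated.

```python
# Gates that are their own inverse: applying one twice in a row does nothing.
SELF_INVERSE = {"h", "x", "z", "cx"}

def cancel_adjacent_pairs(circuit):
    """Remove back-to-back identical self-inverse gates acting on the same qubits."""
    optimised = []
    for gate in circuit:
        name, _qubits = gate
        if optimised and optimised[-1] == gate and name in SELF_INVERSE:
            optimised.pop()          # the pair multiplies to the identity
        else:
            optimised.append(gate)
    return optimised

# Hypothetical example circuit in the assumed (gate_name, qubits) format.
circuit = [
    ("h", (0,)), ("h", (0,)),
    ("cx", (0, 1)), ("x", (1,)), ("x", (1,)), ("cx", (0, 1)),
    ("h", (1,)),
]
optimised = cancel_adjacent_pairs(circuit)
print(len(circuit), "gates before,", len(optimised), "after")
```

Fewer gates means less time for qubits to decohere and fewer opportunities for errors, which is why this type of optimisation matters so much on current hardware.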

3. Maintaining coherence and low error rates while scaling remains the key challenge

The inherent properties of quantum mechanics that are key to the potential power of QC also make such machines incredibly difficult to build, control and scale. While the scientific theory behind QC began to formalise in the 1980s, these are still early days for the technology.

There are four constraints inherent in the design of a QC:

- Coherence is fragile — qubits need to remain coherent while processing information but coherence requires perfect isolation from the outside environment. Such isolation is not practical. Thus, qubits decohere over time. In other words, qubits have a finite and usually short coherence time. This is unhelpful as it causes qubits to lose information and disrupts any ongoing algorithms. Significant research effort has gone into improving coherence times.

- To generate entanglement, qubits need to interact with each other — entanglement is achieved by qubits interacting directly with each other or via another medium. In both cases, this requires additional ‘overhead’, which adds to the complexity of the system and could result in a machine employing more qubits than can be used in calculations.

- Information stored in qubits cannot be copied — this is the so-called ‘no-cloning’ principle in quantum mechanics. Copying of data is central to the way software in classical computers works but QC will require a different approach. The ‘no-cloning’ principle is a great asset when it comes to communication that uses quantum keys for encryption — no malicious party will be able to copy the key. This makes quantum encryption ‘unhackable’, unlike most encryption algorithms used currently (those rely on complex mathematical functions that could be compromised given enough compute power).

- Lack of noise immunity — unlike classical computers, quantum mechanics and qubits are analog by nature, meaning that small errors add up and grow in magnitude as the QC performs operations. This significantly impacts the quality and complexity of calculations performed. Errors could come from interaction with the environment, qubit design, logic gate design, and imperfections in the signals used to manipulate the qubits. Classical computing uses copies of intermediate state to provide error correction but this is not available to QC (see the point above). Thus, control hardware and algorithms need to be employed to minimise the error propagation. The quality of operations is measured by the error rates (probability that a gate operation returns the wrong output) or the gate fidelity (probability that a gate operation returns the correct output).
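To see how quickly small errors add up, consider the rough estimate sketched below. It assumes, as a simplification of our own, that every gate has the same fidelity and that errors occur independently, so the chance that an entire circuit runs without error is the gate fidelity raised to the power of the number of gates. The function names are illustrative.

```python
import math

def circuit_success_probability(gate_fidelity, gate_count):
    """Chance that every gate in a circuit returns the correct output.

    Assumption: identical fidelity for all gates and independent errors.
    """
    return gate_fidelity ** gate_count

def max_gates(gate_fidelity, target_success):
    """Largest gate count that keeps the success probability at or above the target."""
    return math.floor(math.log(target_success) / math.log(gate_fidelity))

for fidelity in (0.99, 0.999, 0.9999):
    print(fidelity,
          round(circuit_success_probability(fidelity, 1000), 4),
          max_gates(fidelity, 0.5))
```

Under these assumptions, a gate fidelity of 99.9% (an error rate of 0.1%) leaves roughly a 37% chance that a 1,000-gate circuit runs without a single error, which helps explain why error rates dominate the discussion of scaling.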

Maximising coherence times (stable qubits) while minimising error rates is key to the development of a universal QC. However, both are difficult to maintain with scale. Scientists are experimenting with several qubit technologies to help solve the challenge.

4. Qubit architecture — no winning configuration yet

Consensus is still lacking on the most effective qubit technology. Research from academics and corporates focuses on several different types of qubits: 1) Superconducting, 2) Ion Trap, 3) Photonic, 4) Quantum Dot, 5) Neutral Atom, and 6) Topological. Superconducting and Ion Trap qubits are the most popular technologies employed currently but they face serious scaling challenges.

The details of the various approaches are beyond the scope of this introductory overview, but the table below provides a comparison of the most important characteristics.

The special properties of qubits are theoretically sound but volatile and therefore particularly difficult to preserve. Current consensus suggests scientists are at least 10 years away from creating a resilient control environment that minimises errors and maximises coherence time at scale. Effective error correction techniques have been introduced in small quantum devices to improve qubit quality and coherence but the power of these machines has not been sufficient to deliver exciting use cases. Without scale and greater control, QCs are unlikely to live up to the promise of unleashing a new paradigm of commercial applications.

That said, as discussed in Part 2 of this series, NISQ (noisy intermediate-scale quantum) machines could provide useful applications on a shorter time frame.

The second part of this series will explore potential applications for Quantum Computing, as well as the commercial progress achieved to date. Stay tuned.

We’d like to thank Andrii Iamshanov (AegiQ Advisor), Ilana Wisby (CEO of Oxford Quantum Circuits), Max Sich (CEO of AegiQ), and Richard Murray (CEO of Orca Computing), whose feedback and guidance contributed to the above work.
