Insider Brief

- Google Quantum AI’s 65-qubit processor performed a complex physics simulation 13,000 times faster than the Frontier supercomputer, marking measurable progress toward practical quantum advantage.

- The experiment used a new “Quantum Echoes” algorithm to measure interference effects called OTOC(2), revealing quantum behavior that classical machines cannot efficiently reproduce.

- The team linked the work to real-world applications, including extending nuclear magnetic resonance (NMR) spectroscopy and advancing Google’s dual-track roadmap for hardware and software breakthroughs in quantum computing.

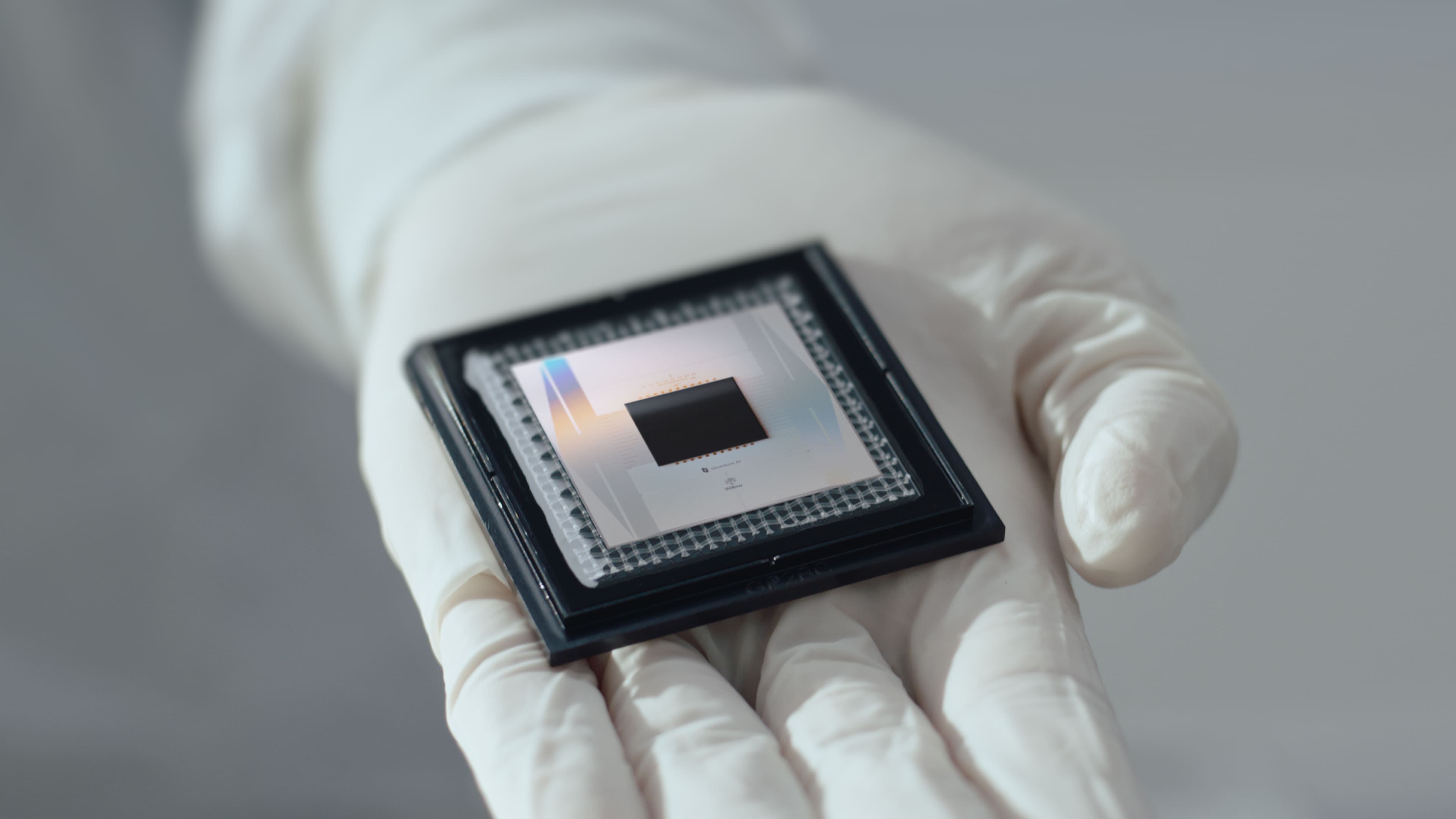

Google Quantum AI has reported a physics experiment that pushes quantum computing further into what researchers call the “beyond-classical” regime, where even the world’s most powerful supercomputers fail to keep up. In a paper published in Nature, the team describes using its 65-qubit superconducting processor to measure a subtle quantum interference phenomenon called the second-order out-of-time-order correlator, or OTOC(2).

Performing this calculation on the Frontier supercomputer, one of the world’s top-ranked classical machines, would have required about 3.2 years, compared with just over two hours on Google’s quantum device, a speedup of roughly 13,000 times.

| Metric | Quantum Processor | Frontier Supercomputer | Speedup |
|---|---|---|---|
| Processing Time | 2.1 hours | 3.2 years | 13,000× |
| System Size | 65 qubits | 9,000+ GPUs | N/A |
| Verification | Experimentally verifiable | Cannot reproduce efficiently | Quantum advantage |

The team reports that the work represents progress toward what the field calls practical quantum advantage, a scenario where a quantum computer produces meaningful scientific data that classical machines cannot reproduce in any reasonable time.

“To summarize sort of the key features that make Quantum Echoes an algorithmic breakthrough is first, quantum advantage,” Hartmut Neven, Vice President of Engineering at Google, said during a press conference on the team’s findings. “The algorithm runs on our Willow chip 13,000 times faster than the best classical algorithm would on the top classical supercomputer. So, think hours versus years for the classical machine. Then it makes good on a Feynman’s dream. It produces verifiable predictions. And these predictions can be verified in two ways. You either can repeat the computation on a different quantum computer, assuming it’s powerful enough, and then you should get the same result. Or, you can talk to nature directly and do an experiment which, of course, involves quantum effects. And then you can compare the predictions you make about a real-world system really accurately.”

How Does the Quantum Echoes Algorithm Reconstruct Quantum Chaos?

The experiment explores how information spreads and interferes in complex quantum systems. In chaotic, or “ergodic,” regimes, the system’s components become so entangled that most measurable quantities lose sensitivity to microscopic details, a process physicists refer to as scrambling. Classical computers struggle to track this spreading because the number of parameters grows exponentially with the number of qubits.
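To make the exponential growth concrete, the minimal sketch below counts the complex amplitudes in a full n-qubit state vector and the memory needed to hold them. It assumes brute-force state-vector storage at 16 bytes per amplitude (double-precision complex). That is only one naive classical approach, not the tensor-network methods the team benchmarked against, and the function names are illustrative.

```python
def statevector_amplitudes(n_qubits):
    """Number of complex amplitudes in a full n-qubit state vector."""
    return 2 ** n_qubits


def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory for a dense state vector (assumes 16-byte complex doubles)."""
    return statevector_amplitudes(n_qubits) * bytes_per_amplitude


def human(nbytes):
    """Format a byte count using binary (1024-based) units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB", "ZiB"]
    value = float(nbytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024


for n in (20, 40, 65):
    print(f"{n} qubits: {human(statevector_bytes(n))}")
```

Under these assumptions a 40-qubit state needs 16 TiB and a 65-qubit state needs 512 EiB, which is why classical methods must rely on cleverer approximations than storing the full state.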

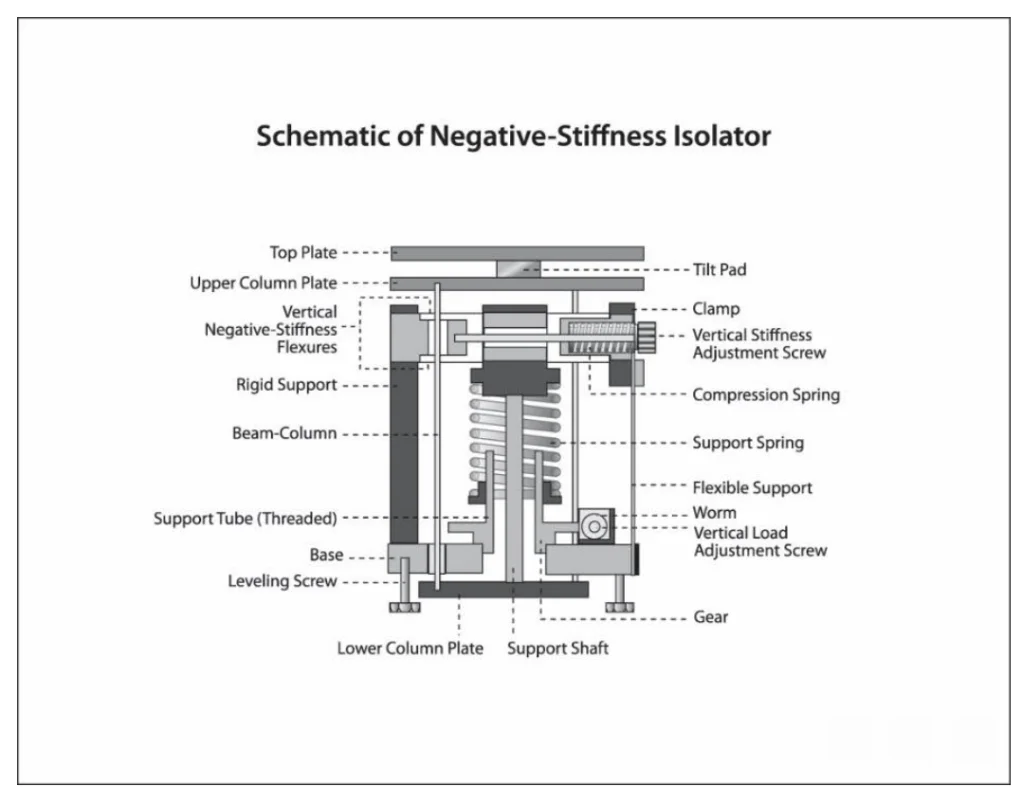

To probe these dynamics, the team used a time-reversal technique known as the echo protocol, which let them effectively “rewind” the quantum evolution and measure interference patterns that would otherwise be lost, said Tom O’Brien, staff research scientist at Google Quantum AI. These echoes give rise to out-of-time-order correlators, quantities that show how much one part of the system disturbs another after the forward-and-backward time evolution.

“The key innovation of the Quantum Echoes algorithm is that we evolve a system forward and backward in time,” said O’Brien. “So, the algorithm has four parts. You evolve the system forward in time, then you apply a small butterfly perturbation, then you evolve the system backward in time. And on a quantum computer, these forward and backward evolutions interfere with each other. This interference creates like a wave-like motion that propagates this butterfly through space — it creates like a butterfly effect, which can be detected on faraway qubits. And this butterfly effect is really sensitive to the microscopic details in your forward and backward evolution.”
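The forward, perturb, backward sequence O’Brien describes, followed by a measurement, can be mimicked on a handful of simulated qubits. The sketch below is a toy pure-Python state-vector simulation, not the team’s circuits. It assumes a 1D chain, random single-qubit gates with CZ entanglers, an X gate as the butterfly on the last qubit and a Z measurement on qubit 0. It computes only a first-order echo rather than OTOC(2), and every function name is illustrative.

```python
import cmath
import math
import random


def apply_1q(state, gate, q):
    """Apply a 2x2 gate to qubit q of a state vector (list of complex)."""
    out = state[:]
    bit = 1 << q
    for i in range(len(state)):
        if not i & bit:
            a, b = state[i], state[i | bit]
            out[i] = gate[0][0] * a + gate[0][1] * b
            out[i | bit] = gate[1][0] * a + gate[1][1] * b
    return out


def apply_cz(state, q1, q2):
    """Controlled-Z: flip the sign of amplitudes where both qubits are 1."""
    mask = (1 << q1) | (1 << q2)
    return [-amp if (i & mask) == mask else amp for i, amp in enumerate(state)]


def dagger(g):
    """Conjugate transpose of a 2x2 matrix."""
    return [[complex(g[0][0]).conjugate(), complex(g[1][0]).conjugate()],
            [complex(g[0][1]).conjugate(), complex(g[1][1]).conjugate()]]


def random_1q(rng):
    """Single-qubit unitary built from three random angles (toy, not Haar)."""
    theta, phi, lam = (rng.uniform(0, 2 * math.pi) for _ in range(3))
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -cmath.exp(1j * lam) * s],
            [cmath.exp(1j * phi) * s, cmath.exp(1j * (phi + lam)) * c]]


def random_circuit(n, cycles, rng):
    """Each cycle: random 1-qubit gates, then CZs on alternating bonds."""
    ops = []
    for c in range(cycles):
        ops += [("u", random_1q(rng), q) for q in range(n)]
        ops += [("cz", q, q + 1) for q in range(c % 2, n - 1, 2)]
    return ops


def run(state, ops):
    for op in ops:
        if op[0] == "u":
            state = apply_1q(state, op[1], op[2])
        else:
            state = apply_cz(state, op[1], op[2])
    return state


def inverse(ops):
    """Reverse the gate order and invert each gate (CZ is its own inverse)."""
    return [("u", dagger(op[1]), op[2]) if op[0] == "u" else op
            for op in reversed(ops)]


X = [[0, 1], [1, 0]]  # butterfly perturbation (assumed to be a Pauli X)


def echo(n, ops, butterfly_qubit=None):
    """Forward evolution, optional butterfly, backward evolution, <Z> on qubit 0."""
    state = [0j] * (1 << n)
    state[0] = 1 + 0j                          # start in |00...0>
    state = run(state, ops)                    # evolve forward
    if butterfly_qubit is not None:
        state = apply_1q(state, X, butterfly_qubit)
    state = run(state, inverse(ops))           # evolve backward
    return sum((1 if not i & 1 else -1) * abs(a) ** 2
               for i, a in enumerate(state))


rng = random.Random(0)
n = 6
for cycles in (0, 2, 4, 8, 12):
    ops = random_circuit(n, cycles, rng)
    print(cycles, round(echo(n, ops), 6),
          round(echo(n, ops, butterfly_qubit=n - 1), 6))
```

Without a butterfly the backward evolution undoes the forward one exactly, so the echo returns 1 up to rounding error. With zero cycles the butterfly on the far qubit also leaves the measured value at 1. Once the circuit is deep enough for the perturbation to reach qubit 0, the value generally drifts away from 1, which is the sensitivity the quote refers to.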

According to the paper, by measuring the second-order correlator, OTOC(2), the team could reveal constructive interference between so-called Pauli strings, which are mathematical terms that represent different combinations of quantum operators. This interference pattern appears only when quantum trajectories recombine in specific ways, exposing information hidden to standard observables.

That may still be a bit technical, but in layman’s terms, the researchers were watching how quantum information weaves itself into and out of chaos, a process impossible to visualize or calculate directly using classical tools.

What Makes This a Quantitative Leap in Simulation Complexity?

The group measured these correlators on a lattice of 65 superconducting qubits using a sequence of random single- and two-qubit gates. They compared their measurements with classical simulation methods, including tensor-network contraction and Monte Carlo algorithms. While smaller 40-qubit instances could be reproduced after days of computation on high-end GPUs, the 65-qubit runs defied all efficient classical modeling.

Estimating the classical cost required to reproduce their largest dataset, the authors calculated that running the same circuits on the Frontier supercomputer — a multi-exascale system with over 9,000 GPUs — would take 3.2 years of continuous operation. The quantum processor, by contrast, produced each dataset in 2.1 hours, including calibration and readout.

Classical vs. Quantum Performance Breakdown:

| System Size | Method | Processing Time | Quantum Advantage |
|---|---|---|---|
| 40 qubits | High-end GPUs | Days | Reproducible classically |
| 65 qubits | Frontier Supercomputer | 3.2 years | Beyond classical regime |
| 65 qubits | Google Quantum Processor | 2.1 hours | 13,000× speedup |
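The headline ratio follows directly from the two runtimes in the table. As a quick check, the sketch below converts 3.2 years to hours and divides by 2.1 hours. It assumes a 365.25-day year and treats the rounded figures quoted in the article as exact, so the result is only as precise as those inputs.

```python
HOURS_PER_YEAR = 365.25 * 24  # assumption: Julian year of 365.25 days


def speedup(classical_years, quantum_hours):
    """Ratio of classical runtime to quantum runtime, both in hours."""
    return classical_years * HOURS_PER_YEAR / quantum_hours


ratio = speedup(3.2, 2.1)
print(f"{ratio:,.0f}x")
```

With these inputs the ratio comes out slightly above 13,000, consistent with the "roughly 13,000 times" figure.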

That disparity places the experiment deep in the “beyond-classical” zone. As the team writes, the OTOC(2) observable satisfies two key criteria for practical quantum advantage: it can be measured experimentally with signal-to-noise ratios above unity, and it lies beyond the reach of both exact and approximate classical methods.

What makes this result particularly notable is its physical relevance. Earlier demonstrations based on random circuit sampling, such as last year’s Willow experiment, served primarily as speed tests. The OTOC measurement, by contrast, yields a physically interpretable quantity linked to entanglement, information scrambling and quantum chaos.

How Does This Move Toward Useful Quantum Advantage?

In the latter half of the study, the team applied the same measurement to a task known as Hamiltonian learning: extracting unknown parameters that govern the evolution of a quantum system. In their demonstration, the researchers varied a single phase parameter in a model system and showed that experimental OTOC(2) data could pinpoint its correct value through a straightforward optimization process.

This proof-of-principle suggests a way to use quantum processors as diagnostic tools for real-world physical systems — from magnetic materials to molecular structures — by comparing experimental data with quantum simulations until the underlying Hamiltonian parameters align. The slow signal decay and high sensitivity of OTOC(2) make it especially well-suited to such learning tasks, potentially allowing researchers to characterize complex interactions that remain opaque to spectroscopy or traditional computation.
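The one-parameter fit described above can be illustrated with a deliberately simple stand-in. In the sketch below, the signal model `cos(k * phi)`, the noise level, the seed and the grid search are all hypothetical choices made for illustration. They do not reproduce the team’s OTOC(2) model or optimizer, only the idea of tuning a single phase until simulated data matches measured data.

```python
import math
import random


def model_signal(phi, cycles):
    """Hypothetical simulated signal: one value per circuit depth k."""
    return [math.cos(k * phi) for k in cycles]


def cost(phi, cycles, measured):
    """Sum of squared differences between measured and simulated data."""
    return sum((m - s) ** 2
               for m, s in zip(measured, model_signal(phi, cycles)))


def fit_phase(cycles, measured, grid_points=3001):
    """Grid search over [0, pi] for the phase with the lowest cost."""
    grid = [math.pi * i / (grid_points - 1) for i in range(grid_points)]
    return min(grid, key=lambda phi: cost(phi, cycles, measured))


rng = random.Random(7)
true_phi = 1.0                      # the "unknown" parameter to recover
cycles = list(range(1, 11))
# Stand-in for experimental data: model output plus small bounded noise.
measured = [s + rng.uniform(-0.02, 0.02)
            for s in model_signal(true_phi, cycles)]

estimate = fit_phase(cycles, measured)
print(f"true phase = {true_phi:.3f}, fitted phase = {estimate:.3f}")
```

In the real experiment the role of `model_signal` would be played by an expensive quantum or classical simulation, so a grid search this dense may not be affordable. The sketch only shows why a signal that is sensitive to the parameter makes the fit sharp.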

Potential Applications of Hamiltonian Learning:

- Magnetic Materials – Characterizing complex magnetic interactions

- Molecular Structures – Determining molecular configuration parameters

- Quantum Materials – Understanding emergent quantum phenomena

- Chemical Systems – Mapping reaction pathways and energy landscapes

If such techniques scale, they could form the backbone of practical quantum simulators, devices capable of learning the rules of nature directly from experiment rather than calculating them from first principles.

How Could This Extend the Reach of NMR Spectroscopy?

While this advance may seem like a purely technical or algorithmic milestone with limited real-world use, Quantum Echoes could be the first verifiable quantum algorithm tied to a tangible scientific tool, nuclear magnetic resonance spectroscopy, potentially extending the reach of NMR measurements and validating quantum predictions directly against experimental data.

During the press conference, Google Quantum AI researchers said they also demonstrated how the same forward-and-backward time-evolution technique underlying the Quantum Echoes algorithm could extend the capabilities of nuclear magnetic resonance (NMR) spectroscopy, one of the most established tools in chemistry and materials science. Traditional NMR techniques measure the magnetic interactions between atomic nuclei to infer molecular structures. However, their sensitivity drops sharply with distance, limiting how far apart two spins can be while still producing a measurable signal.

By applying Quantum Echoes to model these dipolar interactions, the team showed that quantum processors can simulate how a weak signal propagates through a molecule, effectively creating what they call a “longer molecular ruler.” In the press conference, O’Brien described the method as “a new tool for NMR,” adding that the approach allows researchers to “see between pairs of spins that are separated further apart.”

The ability to extend NMR’s range could have implications across biochemistry, drug design and condensed matter physics, where the geometry of complex molecules or materials determines their properties. Google Chief Scientist and Nobel laureate Michel Devoret noted that the algorithm can also act as an inversion method, meaning experimental NMR data could be fed back into a quantum model to reveal hidden structural details that cannot be recovered by classical means.

“One way to view this new algorithm is the following: Hartmut said that the result of the algorithm was verifiable and can be compared with data — NMR data — but actually you can turn this around,” said Devoret. “You can say that actually the algorithm is an inversion method, where you take data from an NMR experiment, a probe of nature, a molecule produced in nature, and you invert this data to actually reveal structures that cannot possibly be known by other methods. And in the future, this could be applied even to quantum sensing.”

In this sense, the work does not only test quantum mechanics but also points toward a symbiosis between quantum computing and quantum sensing, a feedback loop where simulation and measurement refine each other.

While the NMR demonstration remains below the “beyond-classical” threshold, it represents the first step toward using quantum processors to analyze experimental data directly. As O’Brien said, adding a new computational technique to an 80-year-old field “with multiple Nobel Prizes to its name” is itself a meaningful milestone.

What Are the Limitations and Cautions of This Breakthrough?

The team is careful not to claim a fully general quantum advantage. Although the 13,000× figure is striking, it applies specifically to this class of interference-based observables and assumes classical simulation via tensor-network contraction on Frontier’s current architecture. Classical algorithms continue to improve, and alternative simulation strategies could narrow the gap for certain problems.

The experiment also relied on carefully optimized circuits and extensive error-mitigation techniques. The quantum device’s median two-qubit gate error was 0.15%, and overall system fidelity was 0.001 at 40 circuit cycles. This is impressive by today’s standards but still outside of the thresholds required for fault-tolerant computation. The measured signal-to-noise ratio, while above unity, remains modest — between 2 and 3 for the largest systems — meaning the data are statistically meaningful but not immune to noise or drift.
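A back-of-envelope calculation shows why a seemingly small per-gate error still drives overall fidelity so low. The sketch below assumes independent, identical errors that compound multiplicatively and counts everything in "two-qubit-gate equivalents". It ignores single-qubit, idle and readout errors, so it is an illustration of the scaling rather than the team’s error model.

```python
import math


def compounded_fidelity(gate_error, n_gates):
    """Fidelity after n_gates if each succeeds with probability 1 - gate_error."""
    return (1.0 - gate_error) ** n_gates


def gates_for_fidelity(gate_error, target_fidelity):
    """Number of gate-equivalents at which fidelity falls to the target."""
    return math.log(target_fidelity) / math.log(1.0 - gate_error)


n = gates_for_fidelity(0.0015, 0.001)
print(f"about {n:,.0f} gate-equivalents at 0.15% error give fidelity 0.001")
```

Under these assumptions, roughly 4,600 gate-equivalents at 0.15% error are enough to push the overall fidelity down to 0.001, which is why error mitigation and many repeated measurements are needed to extract a usable signal.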

Another caution is interpretive. Out-of-time-order correlators are mathematical constructs that capture certain aspects of quantum chaos, but their direct connection to technological applications is still under exploration. The path from measuring OTOCs to accelerating materials discovery or chemical design will require several conceptual steps.

Still, as a test of quantum control and measurement at scale, the experiment marks a new level of technical maturity. Achieving reliable echo sequences and precise time-reversal across 65 qubits signals progress toward the high-fidelity regimes that more practical workloads will demand.

What Comes Next for Quantum Computing?

Future work will likely focus on using similar echo-based protocols to explore other many-body phenomena, such as phase transitions, criticality and thermalization dynamics. The authors point out that higher-order correlators (beyond OTOC(2)) may reveal even richer interference effects but will require still greater coherence and calibration precision. The Hamiltonian learning method could also be applied to solid-state NMR systems, where spin couplings can be partially reversed, a potential bridge between quantum hardware experiments and real laboratory materials.

Next-Generation Research Directions:

- Higher-Order Correlators – Beyond OTOC(2) for richer interference patterns

- Many-Body Phenomena – Phase transitions, criticality, thermalization

- Solid-State NMR – Bridge quantum hardware with laboratory materials

- Application Integration – Map OTOC measurements to energy flow, particle travel, chemical reactions

From a computing perspective, the next milestone is integrating these physics-based demonstrations into application-relevant simulations. If future quantum devices can map OTOC-type measurements directly into how energy flows through materials, how particles or signals travel and how chemical reactions unfold, they would satisfy the final criterion for practical advantage: producing information that classical supercomputers cannot, yet which directly advances science or engineering.

For now, the “13,000×” figure serves as both a milestone along Google’s quantum roadmap and, possibly, a warning shot. It demonstrates that quantum hardware is no longer just catching up with classical computation — it’s overtaking it in specialized, physically meaningful tasks. At the same time, it challenges the classical community to develop more efficient simulation methods, ensuring the frontier between the two remains dynamic rather than absolute.

How Does This Measure Against Google’s Roadmap to Practical Quantum Computing?

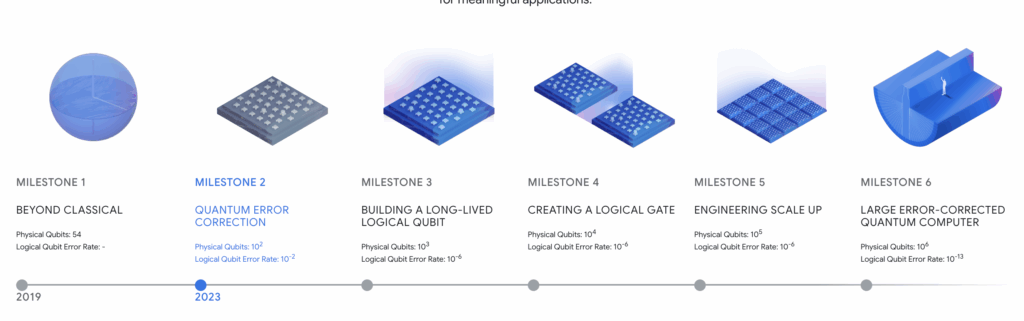

According to the team, the milestone is one measure of Google Quantum AI’s progress on its six-milestone roadmap, which maps the company’s journey from today’s era of noisy quantum devices to the eventual goal of error-corrected quantum systems that are useful for practical purposes.

The roadmap divides progress into two parallel tracks: hardware, which focuses on building logically reliable qubits and scaling up machines; and software, which seeks algorithms that deliver clear, measurable advantage in real-world settings. By Neven’s count, the team has already passed the first two hardware milestones — achieving quantum supremacy in 2019 and advancing quantum error correction recently — and the current work marks their first software-track win.

“Now that you get to understand a little bit what the Quantum Echoes algorithm is about and what applications it will enable, you understand why we continue to be optimistic that within five years we will see real-world applications that are only possible on quantum computers, such as quantum-enhanced sensing,” said Neven. “Of course, this roadmap has a hardware track, it has a software track. What we achieved so far: the two first milestones on this six-milestone roadmap on the hardware side were the demonstrations of quantum supremacy and then quantum error correction. But now today, what you’re seeing is a milestone on the software track — the demonstration of the first algorithm with verifiable quantum advantage.”

This experiment, then, could give us a glimpse into the future, if all goes well. For example, in the short term (the next five years, per Neven’s projection), we should expect to see quantum-enhanced sensing, advanced simulation-hybrid workflows and algorithms that deliver unique insights inaccessible to classical machines. Down the road, moving toward the later milestones will require building large-scale, fault-tolerant processors where error rates are suppressed to levels compatible with extended algorithmic runs and real-world problem sizes.

That dual-track approach also explains why the NMR application and OTOC-based instrumentation (covered earlier) are so compelling: they represent software-track breakthroughs that tie directly into experimental science, bridging hardware capability and practical utility. It’s a subtle but crucial strategic move — not simply to run bigger chips but to prove that quantum hardware, when paired with the right algorithms, can become a tool for science.

As Google moves forward, the roadmap demands not just more qubits or lower error rates, but integrated progress: better qubits, smarter algorithms and crafted problem-domains that classical machines cannot efficiently address.

Frequently Asked Questions

What is quantum advantage, and how is it different from quantum supremacy?

Quantum advantage refers to quantum computers solving practical, scientifically meaningful problems faster than classical computers, while quantum supremacy (now often called “quantum computational advantage”) only requires showing that a quantum computer can perform some calculation, even an abstract or artificial one, that classical computers cannot efficiently replicate. Google presents this result as progress toward practical quantum advantage because it produces verifiable scientific data, not just a speed test.

Can classical supercomputers eventually catch up to quantum computers?

Possibly for some specific problems, but it looks unlikely for this class of quantum interference calculations. Classical algorithms continue to improve and could narrow the gap, but the 13,000× speedup applies to interference-based observables whose classical simulation cost is expected to grow exponentially with system size, which keeps them out of reach of known classical approaches.

When will quantum computers be available for everyday use?

According to Google’s roadmap, real-world applications like quantum-enhanced sensing could emerge within five years (by 2029). However, general-purpose quantum computers for everyday tasks remain further out, requiring additional breakthroughs in error correction, qubit scaling, and fault-tolerant operation.

How does this breakthrough benefit NMR spectroscopy specifically?

The Quantum Echoes algorithm extends NMR’s measurement range by creating a “longer molecular ruler” that can detect interactions between atomic spins separated by greater distances. This could revolutionize molecular structure determination in drug design, biochemistry, and materials science by revealing structural details previously hidden due to NMR’s distance limitations.

What are OTOCs, and why do they matter?

Out-of-time-order correlators (OTOCs) are mathematical measurements that reveal how quantum information spreads and interferes in complex systems—a phenomenon called quantum chaos. They matter because they capture fundamental quantum behaviors that classical computers cannot efficiently simulate, making them ideal benchmarks for demonstrating practical quantum advantage.

What is the biggest limitation of this quantum breakthrough?

The most significant limitation is error rates and noise. The quantum processor’s two-qubit gate error rate (0.15%) and modest signal-to-noise ratios (2-3) mean extensive error mitigation is still required. Achieving fault-tolerant computation for broader applications will require dramatically lower error rates and higher fidelity sustained over longer computational runs.

How many qubits will be needed for practical quantum computing?

The answer depends on the application. This experiment used 65 qubits to achieve quantum advantage for specific physics simulations. However, fault-tolerant quantum computing for general applications may require thousands to millions of physical qubits (depending on error correction overhead) to create hundreds to thousands of logical qubits capable of running complex algorithms reliably.