Exposing the emptiness of the h-word: how claims of hyperbole ring hollow; and how nobody, not even today’s experts, can possibly know the limits of Quantum.

By Shai Phillips

President, PSIRCH

In a monthly segment in The Quantum Insider, PSIRCH’s President, Shai Phillips, will conduct a first-of-its-kind audit of broad industry-internal accusations of exaggeration in quantum computing and associated fields. How much truth there is to them will be the focus of this series.

“We are in the business of making ludicrous claims about the future… and we have to keep actually delivering on those promises… but we’re deadly serious about building a genuine quantum computer VERY VERY QUICKLY.”

Pete Shadbolt – Chief Science Officer, PsiQuantum

-

- Expert views in Quantum support the outlook that quantum computing is set to change the world, meaning far-reaching generalized projections regarding Quantum are valid and not hyperbolic.

- There is strong evidence quantum computing will aid in artificial intelligence/ machine learning, including with exponential speedups when quantum data is involved, in training AI, and in generalizing, thus some skeptical statements by experts do not tell the full story, and can be misleading to various audiences.

- Even expert claims of hyperbole, largely due to the impracticality of qualifying all remarks, can be inaccurate, ironically hyperbolic, and have the potential to retard progress in Quantum. Thus, a cessation of such claims will be beneficial.

Part III – The Problem with Theory

For various reasons, many quantum experts who participated in conversations that led to the findings and discussions in this series were not able to be quoted by name. I am truly grateful to these unsung heroes for their valuable help in getting to the bottom of some highly complex subject matter. This series is dedicated to them.

“MARTY… YOU’RE NOT THINKING FOURTH DIMENSIONALLY!”

Last month we considered both sides of the complexity theory debate, including one arguably exaggerated claim of the h-word from Scott Aaronson. But that’s far from the end of the story. It must be crystal clear that what Aaronson was protesting was the notion that quantum computers may exponentially speed-up TSP (travelling salesman – an np-hard problem) based on current theoretical understanding, and not based on what the advent of fault tolerant quantum computers might shine a light on. We need to take a step back and breathe. We are at step two of a hundred-mile journey, and we are peering into the abyss of a new technology, the trajectory of which no one, neither layperson nor expert, can predict. Oppenheimer’s famous quote: “theory will only take you so far” was echoed time and again in the recent eponymous film, and illuminates one of the key issues in the quantum industry today: for those who don’t expect proof of the technology’s ability to scale before it’s even been built, others ask for “realism” – as if we knew what that even is! “We must be realistic about what quantum computing can actually do”, they say. But nobody really knows what that is, and so realism is a slippery concept, because it is based on severely limited current understanding, not the much-augmented understanding of tomorrow, and all the knowledge that brings.

The funny thing is, current theory is frequently incorrect, we just don’t know it until it becomes outdated. Much of “current theory” never stays current for very long. If it did, the earth would still be flat, the periodic table of elements may be limited to fire, earth, air, and water, and the smallest particle in the universe would be a grain of sand. Yes, this is reductio ad absurdum, but it makes the point. We should take “current theory” with a pinch of salt. Which is why labeling anything “a fact” (see last month’s column), especially when it is not proven, is a brazen overreach. It is thought, but not proven, that NP-complete problems can’t be fully solved by a quantum computer. It’s true that the experts largely, if not invariably, agree on this point, but the extent to which theory could change once we achieve fault tolerance may be dramatic. So, perhaps we should simply admit that we just don’t know and leave it at that.

(For an article series such as this, which promises no exaggeration, this next portion may seem pretty “pie-in-the-sky”, so it’s requested to receive it in the intended spirit – the ONLY point being made here is that “current theory” changes, and nothing else):

In his ’08 article, Aaronson echoes Abrams/Lloyd: adding nonlinear terms to quantum mechanics could indeed result in a quantum computer’s ability to solve NP-complete problems, but claims this leads to fantastical results like faster than light speed. Again, Aaronson is correct only according to the rubric of current theoretical understanding, but change the framework and look at things through a different lens, and nonlinearity in quantum mechanics may not need to bend space and time to add enhanced power to quantum computers. One such place where we may someday find this is in the area of quantum gravity. It has long been the holy grail of science to reconcile general relativity with quantum mechanics. Yet the first is a non-linear theory, so reconciling them may someday require finding a non-linear approach to quantum mechanics, if not a linear theory for gravity. In either case, I doubt we’d need to invent a warp drive to get there, perhaps just revise our approach. While this is largely conjecture, there is nonetheless a degree of corroboration in the academic literature, even for such far-reaching ideas…

One such paper, published in the prestigious journal, Nature, by Giulio Chiribella and Zixuan Liu in 2022: “Quantum Operations with Indefinite Time Direction”, declares its “providing a framework for potential extensions of quantum theory.” It alludes to how their theoretical and experimental work on indefinite time direction and indefinite causal order (their “quantum time flip”) may one day raise ‘the open question whether these operations may be physically accessible in new regimes, such as quantum gravity’ and whether ‘this higher order framework is expected to contribute to the study of quantum gravity scenarios, as envisaged by Hardy’ (‘Towards Quantum Gravity…’ 2007). If the theory of time & causality can be changed, linearity is certainly up for grabs too. (P.s. articles on ‘time’ papers seem to have gotten the quantum hype police’s knickers in quite a twist, so we’ll likely return to it later, especially on its going ‘beyond simulation’.)

To that end, we must give credit to the pioneers working to achieve what most dismiss:

There are several publications by scholars at reputable institutions proposing novel methods for incremental progress in solving NP-complete problems by using quantum algorithms. One particular NP-complete problem receiving a lot of attention in this endeavor is the Boolean Satisfiability problem (also referred to as ‘3SAT’). Discussing such papers goes beyond the scope of this article, but a quick online search will yield plenty of results. Until fault tolerant quantum computers arrive, we may not know the answer to this for certain. On the other hand, these are nothing new to Aaronson, who asserts that all those he has seen to date continuously ignore key questions. What we are asking here, he says, in terms of complexity theory, is the following: ‘Is NP-complete contained in BQP?’ The professor gives that about a 3% chance, but it’s also possible a classical algorithm may arise to achieve the same result so in truth, we just don’t know.

Also to be considered as we look to the future is talent in the quantum industry. This cannot be overstated, because one person can and often has changed the entire course of things. As a quantum headhunter, this matter is particularly close to my heart, and reminds me of a line from another fun movie I quite enjoyed, “Iron Man”. Distraught at his scientist’s failed attempts to build the “arc reactor”, the bad guy roars: “Tony Stark was able to build this in a cave! With a box of scraps!” To which the scientist replies: “I am not Tony Stark”. And that can make all the difference. The mind of one person can take down our precious “current theory” in one fell swoop. I can’t help but think that a non-defeatist mindset plays into being that one great iconoclast. Anecdotal though this next point may be, it behooves us to remember that quantum technology was born of physics. We could never move forward without a big interdisciplinary effort, but the rest of us, we inherited it. We weren’t born to it like the physicists. And maybe that’s why I’ve often found physicists, those who truly appreciate the magic of it, to be most optimistic about how far quantum will reach, while engineers are often neutral, and computer scientists perhaps the most skeptical of all. Anecdotal but apt. It was thus far from surprising to see Aaronson scathingly trash physicist, Michio Kaku’s “Quantum Supremacy” book. In truth, I haven’t read it, but it tickled me that the stereotype held. (N.b. From what I’ve heard, the book is more of a long-term projection of a very distant quantum future, rather than a look at an initial stage of quantum utility. It’s on my list.)

Not to be forgotten is the fact that, as we race forward in the effort to achieve quantum advantage, many modalities are emerging. One type, analog quantum computers, are having particular success. QuEra is a quantum computing company that uses neutral atoms and the phenomenon of the Rydberg blockade for quantum computation that is well-suited to solving optimization problems amongst others. QuEra has both analog and digital modes. Recently, QuEra was able to achieve near-quadratic speed-up of an NP-hard problem called the MIS (or ‘Maximum Independent Set’) problem, as Sheng-Tao Wang, et al. showed. (N.B. While quadratic speedup for exponentially hard problems does not result in a polynomial time solution, but rather a smaller exponential, reducing to a smaller exponential can at times be useful. More on this in later chapters.) Since this month’s segment was written, QuEra, in association with The Lukin group at Harvard University, has shown such strong additional progress that it has caused something of an inflection point in the industry, with notable skeptics suddenly seeming to change their tune – some subtly, some not so subtly – so we will need to return to take a closer look at this at some point in a future column.

In summary: it’s a valid perspective to assert that current theoretical understandings of quantum computation will change as the technology matures. In fact, it already has, to some extent, in large degree aided by Aaronson himself, as we will now see…

NISQ-ERA BREAKTHROUGHS AND “NEGATIVE HYPE”

Since Aaronson’s 2008 popular article, in our present era of “Noisy Intermediate-State Quantum” (NISQ) devices, several breakthroughs have surfaced quite precociously, publicized in such outlets as Quanta Magazine. One of these came about in a seminal 2022 paper by Takashi Yamakawa and Mark Zhandry of NTT / Princeton: “Verifiable Quantum Advantage without Structure”, in which it was shown certain unstructured problems are solvable by a quantum computer [exponentially] faster than a classical one (“They… proved that any classical algorithm must be slower by an exponential factor” [n.b. original paper terms superpolynomial]). Until this point, when it came to unstructured black-box problems, we were all very much under the impression Grover’s (quantum) algorithm and its quadratic (square root) speedup was pretty much as good as it was ever going to get! We never dreamed we would see exponential speedups for search problems in the same ilk as Shor’s algorithm for factoring large numbers. But why?

Well, Shor’s algorithm relies on the structural fixture of “periodicity” in its construct, and this led to the Aaronson-Ambainis conjecture that no exponential speedup can be achieved by quantum computers for unstructured decision problems that do not benefit from a similar crucial crutch. This was published in an earlier 2014 paper: “The Need for Structure in Quantum Speedups”. Now, it must first be said that these two papers do not contradict each other, because for unstructured non-decision problems like ‘search’, the conjecture does not apply, nor was it ever claimed to. As Yamakawa and Zhandry assert, their “results do not contradict [the] conjecture… since they are for search problems as opposed to decision problems. In particular, [their] quantum algorithm does not compute a function but rather samples from a set of possible values. Assuming the conjecture shows that this is inherent.” Moreover, the conjecture never claimed to be a full proof. In fact, attempts to resolve it ultimately fell flat, such as Keller and Klein’s retracted preprint.

Yamakawa and Zhandry’s [exponentially] faster quantum algorithm for unstructured non-decision search problems, an exalted feat by which Aaronson was not unimpressed, proves “there are NP search problems solvable by BQP machines but not BPP machines” (i.e. solvable by quantum computers, but not by classical computers). When asked, Prof. Aaronson remarked: if he could go back and rewrite his aforementioned 2008 popular article, referencing unstructured problems, this feat would indeed merit a mention.

Still, despite the fact that everything here seems to line up nicely, before we tie it off and put a pretty pink bow on it, let’s actually take a direct look at two main assertions from these two papers, side-by-side:

- “Perhaps the central lesson gleaned from fifteen years of quantum algorithms research is this: Quantum computers can offer superpolynomial speedups over classical computers, but only for certain “structured” problems.”

Aaronson / Ambainis

- “We show, perhaps surprisingly, for certain search problems in NP, random oracles do in fact give provable unconditional superpolynomial quantum speedups.”

Yamakawa / Zhandry

In the first of these contrasting principal points, the decision problem caveat is notably absent. Main points can often be the only thing casual readers absorb, say via second-hand reading, so this may raise questions (especially of a speaker who spent last week’s APS March meeting talking about an eye-roll-inducing “hammer of hype”). For instance:

- For anyone so concerned with so-called “hype”, shouldn’t one’s own headline encapsulations at least try to be less negatively hyperbolic?

- If one’s own negative hyperbole is discovered in retrospect, shouldn’t just as big an effort be made to proliferate clarification?

- To what extent is such framing motivated by an agenda to impose seeming limits on quantum computing by hook or by crook?

- Given aggressive efforts at “negative hype”, should we be taking claims of “hype” with a pinch of salt these days?

- Should we be attributing a degree of hypocrisy to claims of “hype”, as “negative hype” may be just as misleading?\

Yamakawa and Zhandry toppled at least the surface understanding of current theory. Which other algorithms are yet to come that may challenge current theory even further?

Another breakthrough that might arguably be viewed as quite similarly far-reaching, and which preceded the above paper in the spring of 2018, by Ran Raz of Princeton and the Weizmann Institute, and Avishay Tal of Stanford, was also featured in Quanta Magazine. Raz and Tal solved a longstanding question and found a class of problem only a quantum computer can solve, no matter how advanced classical computers may become in future. They achieved “(oracle) separation of BQP and PH”. It was based on a Fourier transform problem known as “Forrelation” that Aaronson had himself introduced a decade earlier. They showed that a quantum computer could solve the problem in just one shot, whereas no such classical algorithm exists: a classical computer could try forever and never solve it. Useful or practical application remains at issue, but isn’t that how it usually starts?

One final academic breakthrough is that of Bravyi, Gosset, and Koenig, published in the magazine, Science – “Quantum Advantage with Shallow Circuits”, which showed further the potential for superiority of quantum computation over classical, in that solutions of relational problems in “constant time” created separation between “analogously defined classical and quantum computational models”. As Aaronson notes in his Blog, ‘Shtetl Optimized’, with many parallel processors, classical computers compete. Still, a big step forward. And this is just the start, folks. Before we have fault tolerance, before universal quantum computers exist at scale, already the theoretical status-quo is a-changin’.

QUANTUM UTILITY ALREADY: THE “THEORY-V-PRACTICE” DISCONNECT

The triumphs do not all belong to academia. In fact, industry has arguably advanced quantum computing in equal measure, if not more so, with recent breakthroughs. We begin with the most renowned of these in 2019, when Google’s superconducting quantum processor, Sycamore, accomplished, for the first time, Quantum Supremacy. This was headline-news at the time, and it was proclaimed, though not completely undisputed, that it would take a classical supercomputer around ten thousand years to accomplish the same result. The task to be accomplished by Sycamore was a rough analog of boson sampling, namely, random circuit sampling. Its utility is called into question, and so we may have quantum supremacy without commercial advantage in this instance. However, we sometimes need to put aside the idea of usefulness at such an early stage. Some of the most profound scientific wins have come out of events that, initially, could claim no useful purpose beyond discovery. Then, they changed the world.

Not long after Google, others began to accomplish this same feat of quantum advantage – the ability of a quantum computer to do in seconds what a classical computer would take thousands of years to achieve. The first of these was Hefei National Laboratory for Physical Sciences at the University of Science and Technology of China, led by Jian-Wei Pan, using a photonic system. Later, Xanadu did the same, as outlined in a publication in Nature: “Quantum computational advantage with a photonic quantum processor”. Draw your own conclusions about photonics in the modality race! Google returned to the headlines again last year with a global industry-first practical demonstration of the viability of “error correction” as the number of qubits scale up. This is a bigger deal than it sounds, as without error correction, the viability of the entire paradigm of quantum computing would be called into question. In fact, “Fault Tolerance”, which is essentially the ability for quantum computing to scale to practical use, is quite surely dependent on this idea of error correction for the foreseeable future, probably forever.

Lastly, and perhaps most impressively of all, is that quantum computers in industry have already been shown to exceed classical computational capabilities for practical commercial use: Quantum Annealers have already been used extensively in commerce. Early stage though they may be, just like their gate-based counterparts, nonetheless they are leading the charge in providing a commercial edge even today.

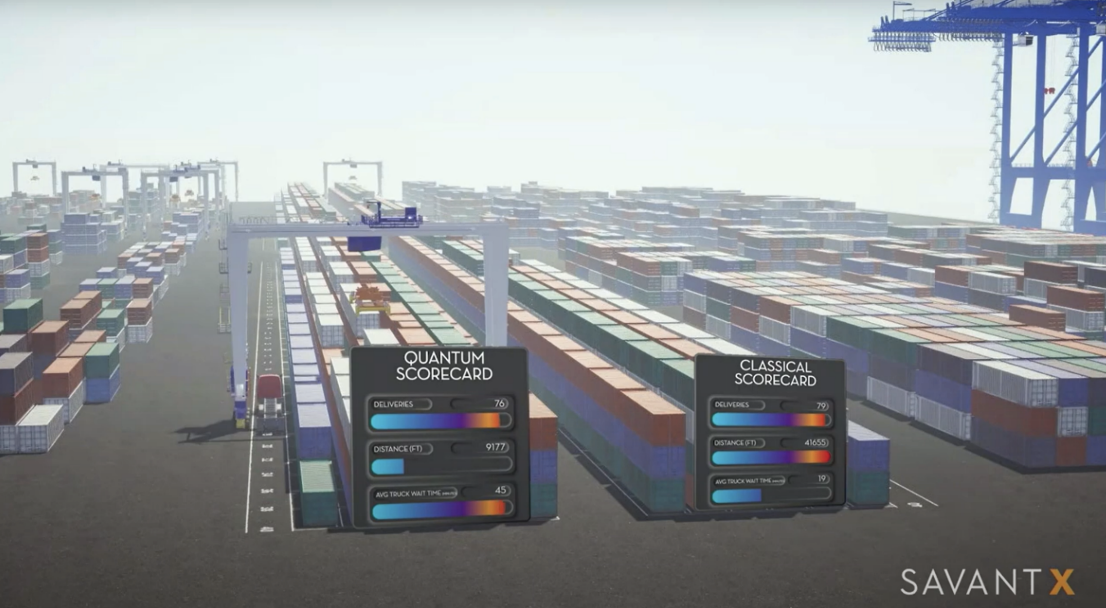

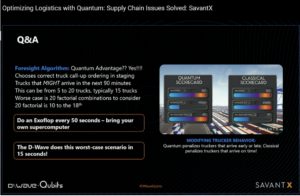

An apt example of this is the work SavantX did for Port of Los Angeles using a D-Wave quantum annealer. They were able to optimize the container deliveries at Pier 300 to impressive effect. And they were not shy about measuring the results against classical compute. While the classical computer results rewarded truck drivers for showing up late, awarding them shorter wait times, the quantum computer dramatically reduced distance traveled for the same number of deliveries, whilst simultaneously penalizing drivers for late arrivals by longer wait times. Quantum advantage was attained. David Ostby of SavantX did some quick math as we spoke, and estimated a roughly 700,000-year period for classical compute to depart from its “dumb” greedy algorithm, utilize the superior method instead, and produce an equivalent caliber of optimized results.

Most importantly, the SavantX story shows how sometimes theory doesn’t match up with the realities of commercial practice. Sure, there may be some theoretical classical computational basis for disputing this result or that, but as Bob Sorensen of Hyperion Research put it at D-Wave’s Qubits conference, end-users simply don’t care about the controversies happening in the quantum community, over this theory or that modality, they just want to see performance enhancements, and from Mastercard to Deloitte, they seem to be getting what they want out of quantum computing already.

There is a manifest disconnect between theoreticians’ expectations and real-world scenarios. If SavantX had even attempted to produce the same result using classical supercomputers (or ‘HPC’), the cost would have been inordinately more expensive by sums in the tens of millions of dollars – utterly impractical, and for no other reason than appeasing theoretical perfectionism and fixation on attaining mathematical proof prior to commercial application. Sure, classical compute might be able to measure up, but how many parallel processors would it take in certain cases? Thousands? Millions? Billions? And at what cost? Finding, hiring, paying suitable coders comes into play, as do other resource constraints not always considered by theoreticians. Aaronson, at Q2B, implored us to at least first prove these things work before industry starts getting involved to try to get practical results. Taking that approach, Port of LA’s Pier 300 would not have achieved over three-times resale value. Who knows how many other opportunities may be lost awaiting the quintessential “spherical chicken in a vacuum”.

And as if that weren’t enough, we may next turn our attention to an even more recent advance by IBM and UC Berkley, in the landmark Nature paper: “Evidence for the utility of quantum computing before fault tolerance” by Youngseok Kim et al. In this paper, it is shown via ZNE (zero noise extrapolation), that quantum computers can promptly become useful with near-term effect, and hold a significant edge over mightiest classical methods. To quote the paper itself: “…this represents evidence for the utility of quantum computing in a pre-fault-tolerant era.” Notice we’re not talking about quantum advantage here – the ability for quantum computers to do things of unknown benefit better, but compelling evidence that quantum computers may perform useful tasks soon. This is monumental, because it only gets better from here. Quite predictably, there was immediate backlash at this claim, and a rush to try to demonstrate the parity of classical computation to the task, and thus negate the claims of the paper. In the wake of this, the bold quantum lead at IBM, Jay Gambetta, took to social media to quiet the skeptics: “The flurry of classical simulation results reported last week…and our own follow-up work with UC Berkeley… have only strengthened our confidence in this being evidence of quantum utility.” Already. Today. We can glimpse practical, useful quantum advantage, whatever the theoreticians may say.

(Tune in next time, as other approaches show quantum advantage trumping theory.)

If you found this article to be informative, you can explore more current quantum news here, exclusives, interviews, and podcasts.