This article provides an overview of NISQ Quantum Computing, what it means and why it matters. It is deliberately high-level for the non-technical reader.

What Does NISQ Stand for?

Noisy Intermediate-Scale Quantum (NISQ) computing is a term coined by John Preskill in 2018. It reflects the fact that quantum computers at the time (and indeed still in 2023) are prone to considerable error rates and limited in size by the number of logical qubits (or even physical qubits) in the system. In short, they are too unreliable to perform general-purpose computation.
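To give a rough feel for why error rates matter so much, the short Python sketch below estimates how quickly a computation becomes unreliable as it gets longer. It assumes a deliberately simplified model in which every gate fails independently with the same probability; real devices are more complicated, and the function names and the example error rates are illustrative assumptions rather than figures from any particular machine.

```python
import math


def circuit_success_probability(gate_error: float, num_gates: int) -> float:
    """Probability that none of num_gates gates fails.

    Assumes each gate fails independently with probability gate_error
    (a simplification; real noise is correlated and gate-dependent).
    """
    return (1.0 - gate_error) ** num_gates


def max_gates_for_target(gate_error: float, target: float) -> int:
    """Largest gate count whose success probability stays at or above target."""
    return math.floor(math.log(target) / math.log(1.0 - gate_error))
```

Under this toy model, a hypothetical 1% error per gate leaves only about a 37% chance that a 100-gate computation runs without a single fault, and the success probability drops below 50% after 68 gates. Cutting the error rate to 0.1% stretches that limit to 692 gates, which is why lowering noise matters as much as adding qubits.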

Although getting to this stage has taken considerable effort and drawn heavily on the research budgets of universities and other academically focused establishments over the last few decades, we are still at a point where a quantum computer is typically no better at solving problems than a classical computer.

Due to this fundamental fallibility, some experts within the industry predict a so-called ‘quantum winter’. Others believe the engineering challenges of moving beyond the NISQ era will keep the industry stuck for decades, whilst the more optimistic believe that the market will emerge from the NISQ era in the next couple of years.

What is the NISQ Era?

Are we in the NISQ era? Yes. We are now in the NISQ era, meaning quantum devices have few useful qubits and high error rates. Whilst the industry has moved beyond operating purely in the lab, commercially available quantum computers still suffer from errors, and detecting and correcting these is currently a core challenge.

However, as the number of physical qubits goes up and our understanding of Quantum Error Correction (QEC) improves, experts expect a shift from the NISQ era to more reliable quantum hardware and software. Late last year (in a preprint) and earlier this year (in Nature), a team at Google demonstrated that Quantum Error Correction works not just in theory, but in practice.
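The basic idea behind error correction, spreading one piece of information across several noisy carriers, can be illustrated with a classical toy model. The sketch below is not the scheme used in the Google experiment; it assumes a simple repetition code in which a bit is copied an odd number of times and the answer is read out by majority vote. The function name and the error rates are illustrative assumptions.

```python
from math import comb


def logical_error_rate(physical_error: float, copies: int) -> float:
    """Chance that a majority vote over `copies` noisy bits gives the wrong answer.

    Assumes an odd number of copies, each flipped independently with
    probability physical_error (a classical toy model, not a quantum code).
    """
    majority = copies // 2 + 1  # number of flipped copies needed to fool the vote
    return sum(
        comb(copies, k) * physical_error**k * (1.0 - physical_error) ** (copies - k)
        for k in range(majority, copies + 1)
    )
```

With a 1% chance of each copy being flipped, three copies reduce the chance of a wrong answer to roughly 0.03%, and five copies reduce it further. The benefit only appears when the underlying error rate is low enough: in this toy model, if each copy is wrong more than half the time, adding copies makes the result worse. That is a simplified picture of why improving the qubits themselves and improving error correction have to go hand in hand.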

While we remain in the NISQ era, we still face many challenges, including enhancing the performance of qubits, reducing noise and developing far better error correction methods. The headway made so far this decade is likely to continue, bringing us out of the NISQ era and into the post-NISQ epoch of fully error-corrected systems with thousands to several million qubits.

Our Take on NISQ Computing

According to Chris Coleman, a Condensed Matter Physicist and Consultant to The Quantum Insider, “the NISQ era is quantum computing’s version of the first wave of A.I. Although still needing to overcome limitations, in many instances we’re seeing the foundation being laid for bigger things to come and there is no doubt that the field is making steady progress. This can be seen across the ecosystem.”

Coleman continued by saying advancements in performance are being driven by innovation not only on the quantum plane, but also through supporting systems such as cryogenics, optics, and control and readout. This is thanks, he believes, to intensive research and the engagement between academia and industry. This showcases the supply chain’s eagerness to adapt to changing demands of the field.

“NISQ is also characterized by the development of dynamic compilation strategies that make quantum systems more user-friendly and to squeeze out as much processing power as possible from current devices,” said Coleman. “Error correction and benchmarking are major themes in NISQ, and it is here we see interdisciplinary research across both the quantum hardware and theory to help push us out of this noisy error-prone phase. Although error correction may be one of the biggest barriers to current systems, there are already several routes to fault tolerance that are being pursued.”

Another important aspect of the NISQ era is accessibility, he added, by way of the impressive number of systems that have come online and are available on the cloud, offering a diverse range of systems for exploration. This accessibility is not only driving fundamental research but also aiding early adopters and the search for near-term applications.

“When we take a step back and look at NISQ computing,” said Coleman, “we see a multi-faceted field with a lot of tough problems that still need to be solved, but also a dedicated community of researchers and industry professionals working together to overcome these challenges.”

Conclusion

In the next few years, we expect quantum computing to continue advancing rapidly as new quantum devices with more qubits and better coherence times are developed, enabling researchers to perform more complex quantum computations and potentially demonstrate a quantum advantage over classical algorithms for specific problems.

However, the limitations of NISQ-era devices, such as their susceptibility to noise and errors, mean that most are unlikely to be able to solve large-scale problems. In the longer term, the future of quantum computing will therefore likely involve the development of error-corrected quantum computers capable of solving much larger and more complex problems than current NISQ devices.

Still, before all that happens, NISQ-era quantum computing will continue to be an exciting area of research with many potential applications, such as in optimization, cryptography and materials science. Another likely scenario is the emergence of new hybrid classical-quantum computing architectures that combine the advantages of classical and quantum computing to tackle problems beyond the reach of either classical or quantum systems alone.

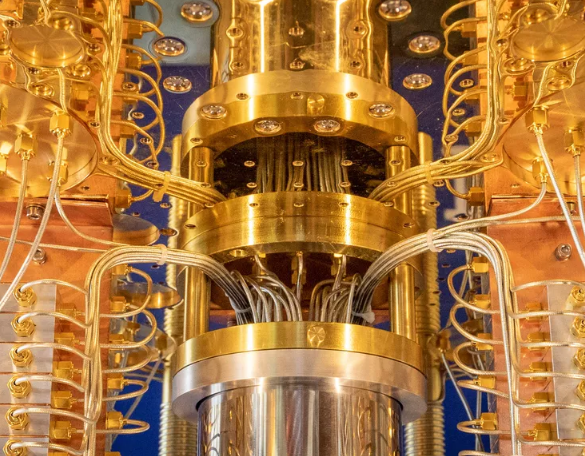

Featured image: Credit: CNet
