Insider Brief

- A team of physicists from ParityQC and the University of Innsbruck reports a novel approach to universal quantum computing based on the ParityQC Architecture in two papers recently published in Physical Review Letters and Physical Review A.

- The researchers propose a universal gate set for quantum computing, with all-to-all connectivity and intrinsic robustness to bit-flip errors.

- The team suggests that the ParityQC Architecture can be used efficiently to build a universal quantum computer.

PRESS RELEASE – A team of physicists from ParityQC and the University of Innsbruck has developed a novel approach to universal quantum computing based on the ParityQC Architecture. In two papers recently published in the influential journals Physical Review Letters and Physical Review A, they propose a universal gate set for quantum computing with all-to-all connectivity and intrinsic robustness to bit-flip errors. The method makes it possible to overcome some of the main limitations of current quantum computers and could form the basis for the next generation of these devices.

The rules of quantum mechanics that make quantum computing possible also impose fundamental restrictions. These restrictions, such as the ‘no-cloning theorem’, are a substantial obstacle in the current quest to create a universal quantum computer – one that would make it possible to perform arbitrary quantum operations. A group of physicists at ParityQC and the University of Innsbruck (Michael Fellner, Anette Messinger, Kilian Ender and Wolfgang Lechner) has recently developed a new form of universal quantum computing that overcomes some of these restrictions by using the ParityQC (LHZ) Architecture. The concept is outlined in two papers, “Universal Parity Quantum Computing” and “Applications of Universal Parity Quantum Computation”, published in Physical Review Letters and Physical Review A. The scientists present a universal gate set for quantum computing that allows all-to-all connectivity and intrinsic robustness to bit-flip errors.

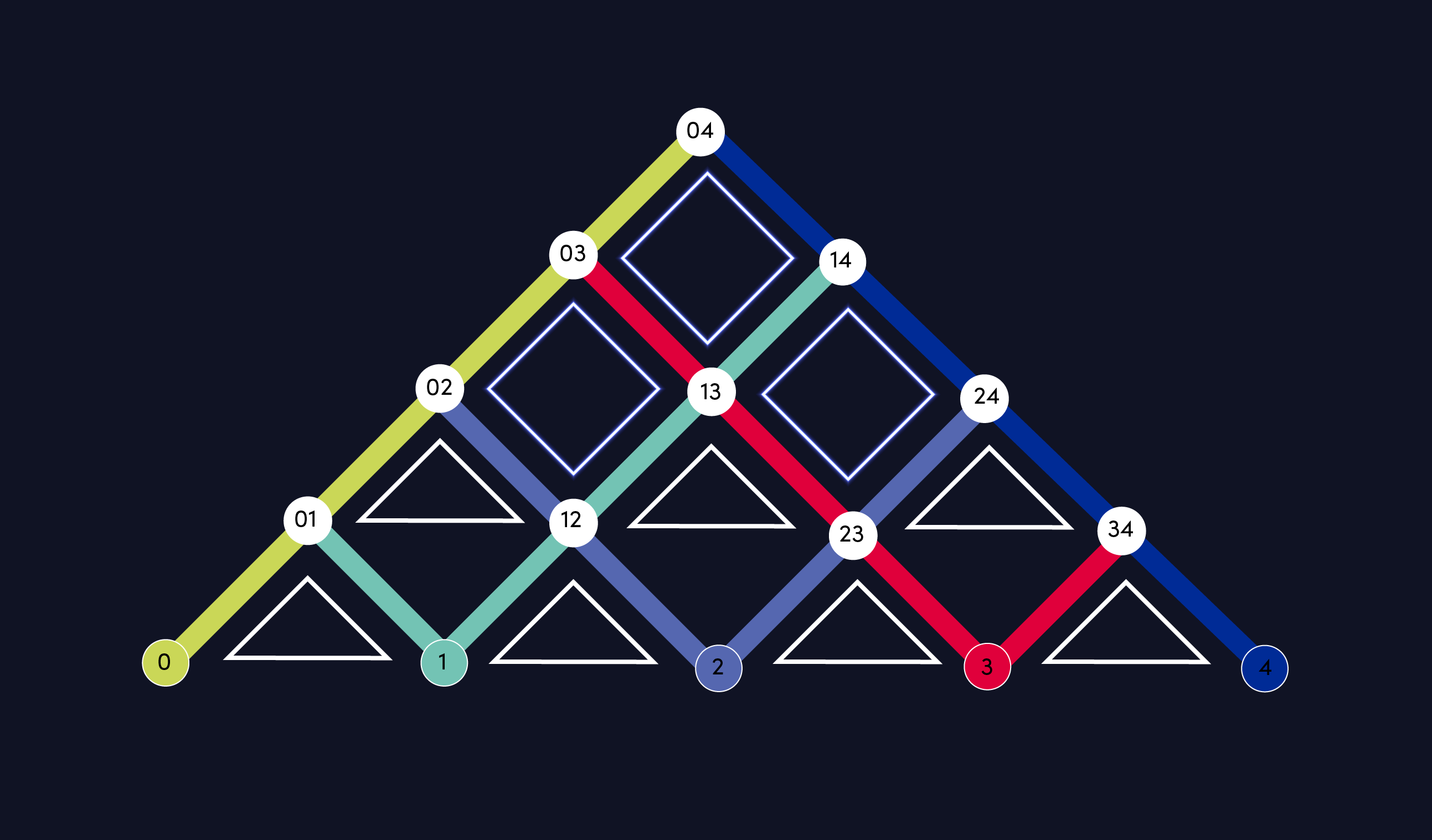

At the root of this achievement is the ParityQC Architecture. Also known as the LHZ Architecture, after its authors Wolfgang Lechner, Philipp Hauke and Peter Zoller, this type of encoding was introduced in 2015 and was widely acclaimed in the scientific community. “This architecture was originally designed for optimization problems,” recalls Wolfgang Lechner, co-founder and co-CEO of ParityQC and professor at the University of Innsbruck. “In the process, we reduced the architecture to a minimum in order to solve these optimization problems as efficiently as possible. The physical qubits in this architecture do not represent individual bits but encode the relative orientation between the bits. This means that not all qubits have to interact with each other anymore.”
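To make the idea of encoding relative orientations more concrete, here is a minimal classical sketch, assuming the all-to-all LHZ layout in which each physical (parity) qubit stores the XOR of one pair of logical bits. It tracks only bit values, not quantum amplitudes, and the function names `parity_encode` and `parity_decode` are hypothetical, chosen for illustration.

```python
from itertools import combinations


def parity_encode(bits):
    """Map logical bits to parity bits: one per pair (i, j), storing bits[i] XOR bits[j].

    Sketch only: models the classical content of the parity encoding, not a quantum state.
    """
    return {(i, j): bits[i] ^ bits[j] for i, j in combinations(range(len(bits)), 2)}


def parity_decode(parities, n):
    """Recover the logical bits from the parity bits, up to a global flip.

    Parities only record whether two bits agree, so flipping every logical bit
    gives the same parities; this sketch resolves that by fixing bit 0 to 0.
    """
    return [0] + [parities[(0, j)] for j in range(1, n)]


if __name__ == "__main__":
    logical = [1, 0, 1, 1]
    physical = parity_encode(logical)
    print(len(physical))                 # N(N-1)/2 parity bits for N logical bits
    print(parity_decode(physical, 4))    # the globally flipped copy of `logical`
```

For N logical bits the sketch produces N(N-1)/2 parity bits, one per pair, which is the qubit count of the all-to-all LHZ layout. The decoder illustrates that the parity bits determine the logical bits only up to flipping all of them at once.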

The recently published research shows that the ParityQC Architecture can be used efficiently to build a universal quantum computer. The main reason is that, in a quantum computer implementing the architecture, operations that involve two or more logical qubits can be carried out on a single physical qubit. “Existing quantum computers already implement such operations very well on a small scale,” explains the author Michael Fellner. “However, as the number of qubits increases, it becomes more and more complex to implement these gate operations.” The two papers show that with the ParityQC Architecture, certain algorithms, such as Shor’s algorithm for factoring numbers, can be implemented quickly and efficiently.
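Why a multi-qubit operation can land on a single qubit can be checked on computational basis states. The following is a minimal sketch, assuming a two-qubit interaction of the form exp(-iθ Z_i Z_j) between logical qubits; the angle θ and the helper names are hypothetical. Because the Z eigenvalue of a bit s is (-1)^s, the product of the two logical eigenvalues equals the eigenvalue of a parity qubit holding s_i XOR s_j, so a single-qubit rotation exp(-iθ Z) on that parity qubit imparts the same phase.

```python
import cmath
from itertools import product


def zz_phase(s_i, s_j, theta):
    """Phase of basis state |s_i s_j> under the two-qubit gate exp(-i*theta*Z_i Z_j)."""
    z_i, z_j = (-1) ** s_i, (-1) ** s_j  # Z eigenvalues: |0> -> +1, |1> -> -1
    return cmath.exp(-1j * theta * z_i * z_j)


def parity_z_phase(p, theta):
    """Phase of parity-qubit basis state |p> under the single-qubit gate exp(-i*theta*Z)."""
    return cmath.exp(-1j * theta * (-1) ** p)


def check_equivalence(theta, tol=1e-12):
    """True if the logical ZZ phase matches the single-qubit phase on p = s_i XOR s_j
    for all four logical basis states."""
    return all(
        abs(zz_phase(a, b, theta) - parity_z_phase(a ^ b, theta)) < tol
        for a, b in product((0, 1), repeat=2)
    )


if __name__ == "__main__":
    print(check_equivalence(0.37))
```

The check covers diagonal phases only. It illustrates the encoding idea rather than a full gate-level simulation of the ParityQC scheme.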

Another notable feature of the new method is hardware-efficient error correction. Error correction is necessary for quantum computers to work despite so-called ‘quantum noise’, but it requires additional qubits and can make the system too large to manage. “Our model operates with two-stage error correction: one type of error, either bit-flip or phase errors, is prevented by the hardware used. There are already initial experimental approaches for this on different platforms. The other type of error can be detected and corrected via software,” say the authors Anette Messinger and Kilian Ender. This capacity for software correction comes naturally with the ParityQC Architecture, as an intrinsic property of how the information is encoded.
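Here is a minimal sketch of how the encoding itself can expose errors, assuming that the errors handled in software are bit flips on individual parity qubits, in line with the robustness to bit-flip errors described in the papers. For any three logical bits i, j, k, the parity bits of the pairs (i, j), (j, k) and (i, k) must XOR to 0, so a single flipped parity qubit violates exactly the checks that contain it. Checking every triangle is a simplification for illustration. The original LHZ layout instead uses local plaquette constraints on a 2D grid, and the helper names here are hypothetical.

```python
from itertools import combinations


def parity_encode(bits):
    """Parity bit for every pair (i, j), i < j: bits[i] XOR bits[j]."""
    return {(i, j): bits[i] ^ bits[j] for i, j in combinations(range(len(bits)), 2)}


def violated_triangles(parities, n):
    """Return triangles (i, j, k) whose three parity bits do not XOR to 0."""
    return [
        (i, j, k)
        for i, j, k in combinations(range(n), 3)
        if parities[(i, j)] ^ parities[(j, k)] ^ parities[(i, k)]
    ]


def correct_single_flip(parities, n):
    """Locate and undo at most one flipped parity bit (requires n >= 4).

    Returns (corrected copy, flipped pair), or (copy, None) if no check is violated.
    """
    bad = violated_triangles(parities, n)
    if not bad:
        return dict(parities), None
    # Each violated triangle contains the flipped pair, so intersect their edge sets.
    common = set.intersection(*({(i, j), (j, k), (i, k)} for i, j, k in bad))
    if len(common) != 1:
        raise ValueError("syndrome is not consistent with a single flip")
    (pair,) = common
    fixed = dict(parities)
    fixed[pair] ^= 1
    return fixed, pair


if __name__ == "__main__":
    clean = parity_encode([1, 0, 1, 1, 0])
    noisy = dict(clean)
    noisy[(1, 3)] ^= 1  # simulate a bit flip on one parity qubit
    fixed, pair = correct_single_flip(noisy, 5)
    print(pair, fixed == clean)
```

With n ≥ 4 logical bits, each pair belongs to at least two triangles, so the violated checks share exactly one pair, which identifies the flipped qubit.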

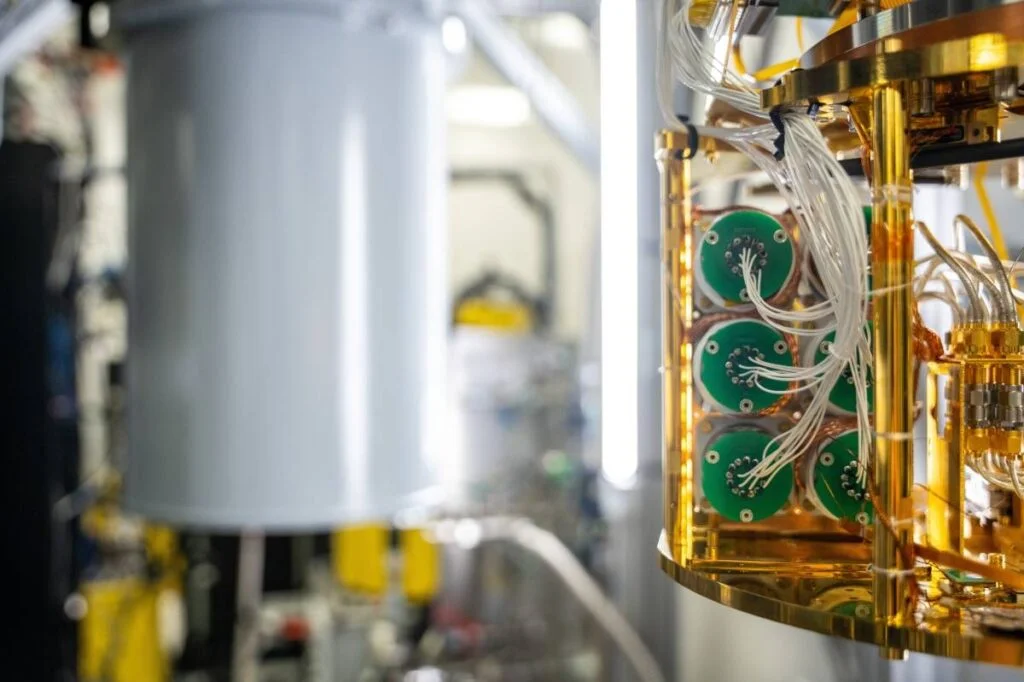

ParityQC is already working with partners from both academia and industry on possible implementations of the new model, which could form the basis for the next generation of quantum computers. The research will also be put into practice within the DLR Quantum Computing Initiative, in a collaborative project that ParityQC is part of. The project aims to build modular ion-trap quantum computers, based on a universal quantum architecture, that can be scaled up to thousands of qubits.

The research was financially supported by the Austrian Science Fund FWF. This project is funded by the FFG. www.ffg.at
