Insider Brief:

- The team at Quanscient has successfully completed quantum-native multiphysics simulations and was able to run a computational fluid dynamics (CFD) simulation, solving the 1D advection-diffusion equation, using its quantum-native Quantum Lattice-Boltzmann Method (QLBM) algorithm on a real quantum computer with good accuracy.

- The team of Valtteri Lahtinen, Ljubomir Budinski, Ossi Niemimäki, and Roberto A. Zamora Zamora says the traditional approach to solving PDEs on quantum computers boils down to solving the linear systems of equations using some of the known quantum algorithms for that purpose. This is problematic, especially on the NISQ-era devices, which at best can run a small variational system but with rather limited success. And while algorithms meant for the fault-tolerant era, such as HHL, are clever in their use of quantum advantage, they are also forbiddingly complex to implement on these near-term devices. A more fundamental problem is in focusing on the speed-up and scale of the classical methods rather than rethinking the original problem in light of the capabilities of quantum computers.

- The team aims to address these issues with its quantum-native approach, which it develops alongside an in-house quantum circuit optimization methodology that is key to running these simulations on real devices.

QUANTUM COMPUTING RESEARCH NEWS — Tampere, Finland/August 11, 2022/Quanscient — Recently, we achieved a significant milestone here at Quanscient in quantum-native multiphysics simulations.

By quantum native, we mean that the algorithm encodes the physics of the original problem, in some sense, directly into the quantum system.

That is, in a quantum-native simulation, we have a clear and direct analogy between the evolution of the quantum system and the process it models.

The milestone we achieved marks the dawn of a new era in multiphysics simulations.

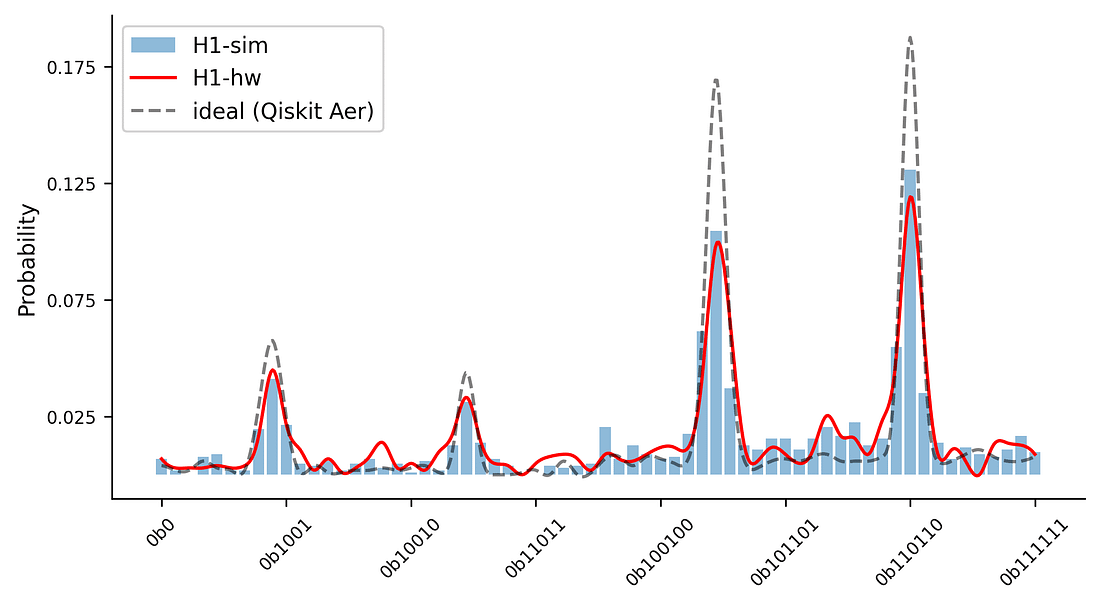

We were able to run a computational fluid dynamics (CFD) simulation, or more precisely, solve the 1D advection-diffusion equation, using our quantum-native Quantum Lattice-Boltzmann Method (QLBM) algorithm on a real quantum computer with good accuracy.

That is to say, we have reasonably accurate results and not just noise.

Even though this was only a small 1D problem with 16 computational data points, this marks the beginning.
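For readers unfamiliar with the method, the following is a minimal classical sketch of a lattice-Boltzmann solver for the 1D advection-diffusion equation on 16 grid points. It is not the QLBM circuit and not Quanscient's implementation. The two-velocity (D1Q2) lattice, the BGK collision step, the periodic boundaries, and the parameter names `u`, `tau`, and `steps` are all assumptions chosen for illustration.

```python
import numpy as np

def lbm_advection_diffusion_1d(rho0, u=0.2, tau=1.0, steps=10):
    """Classical D1Q2 lattice-Boltzmann sketch (illustrative assumptions only).

    rho0  : initial concentration on the grid
    u     : advection velocity in lattice units (needs |u| < 1)
    tau   : BGK relaxation time, which controls the diffusivity
    steps : number of time steps
    """
    rho = np.asarray(rho0, dtype=float)
    w = np.array([0.5, 0.5])   # lattice weights
    e = np.array([1, -1])      # lattice velocities: right-moving, left-moving

    def equilibrium(rho):
        # Linear equilibrium distribution for advection-diffusion
        return np.stack([w[i] * rho * (1 + e[i] * u) for i in range(2)])

    f = equilibrium(rho)       # start from equilibrium
    for _ in range(steps):
        rho = f.sum(axis=0)                    # macroscopic concentration
        f = f - (f - equilibrium(rho)) / tau   # collision (BGK relaxation)
        f[0] = np.roll(f[0], 1)                # stream right-movers (periodic)
        f[1] = np.roll(f[1], -1)               # stream left-movers (periodic)
    return f.sum(axis=0)

# 16 computational points with a unit pulse at index 4
rho0 = np.zeros(16)
rho0[4] = 1.0
rho = lbm_advection_diffusion_1d(rho0)
print(rho.round(4))
```

The collision and streaming steps conserve the total concentration, which gives a simple sanity check for any implementation, classical or quantum.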

We now know that today’s NISQ devices can natively run a macro-scale physics simulation using our quantum-native approach.

The question is, how far can we take this?

What resources does it take?

The problem size our algorithm can represent grows exponentially with the number of qubits: with 100 qubits, the number of physical computation points we can model is in the ballpark of 2¹⁰⁰.
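As a rough illustration of this scaling, the arithmetic can be sketched as below. It assumes one computational point per basis state of the position register and ignores any extra qubits an actual circuit might need, for example for lattice directions or ancillas. The function names are hypothetical.

```python
def grid_points(n_qubits: int) -> int:
    """Number of grid points addressable by n_qubits (one point per basis state)."""
    return 2 ** n_qubits

def qubits_needed(n_points: int) -> int:
    """Smallest number of qubits whose basis states cover n_points grid points."""
    return (n_points - 1).bit_length()

print(qubits_needed(16))   # the 16-point 1D problem needs 4 position qubits
print(grid_points(100))    # 2**100, roughly 1.27e30 points
```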

Looking at the size of devices today, we could then, in principle, solve staggeringly huge systems.

“How many qubits does it take?” is a question we hear often, but it’s not the number of qubits that matters so much as what the qubits are capable of.

Given a more complex problem, it is inevitable that the algorithm’s complexity grows too.

In the simplest terms, this means that the circuit gets deeper and the number of expensive gates grows.

How much can the device handle before the noise takes over?

At Quanscient, we are not manufacturing quantum hardware. We likely won’t have much say in the error rates of the up-and-coming NISQ devices.

What we can affect is how sensitive our algorithms are to the noise: how deep the circuits grow and how the qubit connections are handled.

The gist is this: the algorithms can be rewritten without changing the outcome. In other words, two circuits may look very different while being essentially equivalent.
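A textbook example of this equivalence, unrelated to the QLBM circuits themselves, is that the three-gate sequence Hadamard, Z, Hadamard acts exactly like a single X gate. The sketch below checks this with plain NumPy; the helper names `circuit_unitary` and `equivalent` are hypothetical.

```python
from functools import reduce
import numpy as np

# Standard single-qubit gates
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
X = np.array([[0, 1], [1, 0]])
Z = np.array([[1, 0], [0, -1]])

def circuit_unitary(gates):
    """Multiply gates given in application order (first gate acts first)."""
    return reduce(lambda U, g: g @ U, gates, np.eye(2))

def equivalent(U, V, tol=1e-9):
    """True if U and V agree up to a global phase: |tr(U^dagger V)| equals the dimension."""
    return abs(abs(np.trace(U.conj().T @ V)) - U.shape[0]) < tol

long_circuit = circuit_unitary([H, Z, H])   # three gates
short_circuit = circuit_unitary([X])        # one gate

print(equivalent(long_circuit, short_circuit))   # same action, shallower circuit
```

On noisy hardware the shorter form is preferable because every gate removed is one fewer source of error.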

When we begin thinking of a quantum solution to a classical problem, we proceed with physical principles in mind. The problem gets translated to a setting suited to quantum states, probability distributions, measurements, etc.

From this, we glean an algorithm to be tested, analyzed, and tweaked further on simulators and even real devices.

In the beginning, it reflects the original physical reasoning; after a few rounds, not so much anymore.

The first form could even be called human-readable, while the more optimized iterations trade that readability for efficiency.

Circuit optimization in itself is a well-known practice, and a more fundamental diagrammatic analysis is also possible, for example with ZX-calculus (https://zxcalculus.com/).

As the configurations and capabilities of different devices vary, the algorithms also need to be fine-tuned separately for different hardware: there is no silver bullet.

Reaching milestones

We have achieved a significant concrete milestone on our roadmap to quantum advantage in multiphysics simulations.

This roadmap consists of six concrete steps.

While this is a continuous effort, each milestone marks a significant breakthrough in quantum multiphysics simulations.

- Prototype QLBM solvers running on a quantum simulator ✓

- Concrete evidence for quantum-native macro-scale physics simulations on a NISQ device ✓

- Extending to 2D and 3D simulations on a real quantum computer

- Size of the quantum-native simulation on par with the best classical hardware

- Quantum acceleration

- Simulations on a scale infeasible to solve on classical hardware

Naturally, it is difficult to pinpoint when exactly each of these is within our reach and whether the order given above is precisely how things will happen.

Be that as it may, Quanscient is taking major steps forward on this roadmap as we speak.

We will keep sharing updates on these achievements: stay tuned for more!

SOURCE: Quanscient
