When perusing news on science and tech, I’m often reminded of the quote, “You aren’t doing it wrong if no one knows what you’re doing.” Some discoveries seem so far-fetched they are hardly believable, and in some cases they actually aren’t. Take, for instance, Elizabeth Holmes and her $10 billion brainchild, Theranos, with its claims to be able to test blood for multiple life-threatening conditions from a single drop, sure to be a case study in every ethics class Millennials and Gen Zers take in coming years. It seems that if you’re first to market and beat your chest loudly enough, the masses will simply believe whatever you’re pitching. Frankly, no matter how responsible the consumer, it is not feasible for the lay person, or even an expert in a different field, to have all the information needed to prove or disprove a claim. That is especially true in immature technologies like quantum information and computing, where the long tail of specialist knowledge required of experts keeps growing.

Investigators and other responsible consumers of news might disagree with that quote, then, insisting that you may very well be doing it wrong, even if no one else knows what you’re doing. Google’s recent (September 2019) declaration that it has achieved quantum supremacy raises the question, “Do we even have a method of checking the veracity of such a capability?” The intention of this piece is not at all to imply that Google’s leaked claim is fabricated, but it does serve to remind us of the dictum: trust but verify. In other words, if the capability is beyond the computing power of current classical computers, then anyone could falsely claim to have a superior encryption or problem-solving device. It would be akin to devising a calculator that solves problems no one can solve by hand, but whose output is actually just gibberish, not the correct solution. Who would be able to say differently? Until a competitor comes along with the same computing ability, or there is verifiable evidence of input and correct output, the claim is just that.

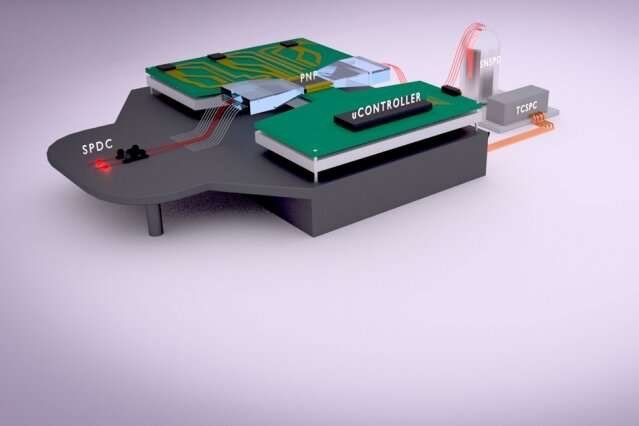

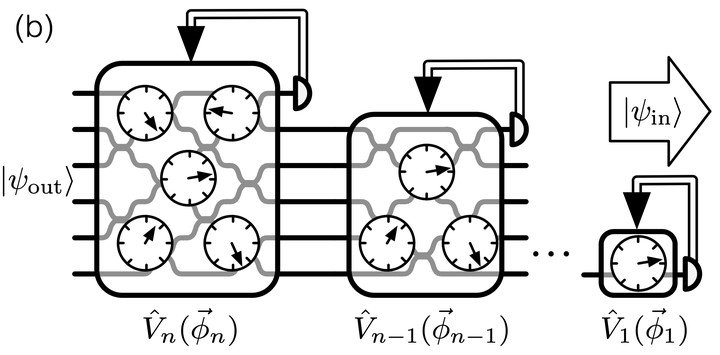

This brings us to an important development that has been in the works for the past year or more. A new protocol called Variational Quantum Unsampling (VQU) speaks directly to the question of how to measure the accuracy of a quantum chip’s computation. Researchers at MIT and Google Quantum AI Laboratory, along with others, designed VQU as a way to verify the output of quantum circuits whose results are too complex for modern classical computers to check directly, as demonstrated in their January 2020 paper in Nature Physics. In the paper they state, “In our approach, one can variationally train a quantum operation to unravel the action of an unknown unitary on a known input state, essentially learning the inverse of the black-box quantum dynamics.” This ground-breaking method takes an output signal, one that’s been scrambled more than a three-egg omelet, and unscrambles it to identify its input state. If the VQU model predicts an input state that matches the known input state, it is an indication of a successful computation by the chip. The proof of concept was performed via an exercise called boson sampling on a custom Noisy Intermediate-Scale Quantum (NISQ) chip, more specifically a custom quantum photonic processor. The ramifications of this type of validation, however, reach much further than its intended use in quantum verification; physical problems like molecular modeling may also benefit from VQU. Other potential applications are optimal quantum measurement, quantum sensing and imaging, and ansatz validation (checking that a trial form chosen for a quantum state or circuit is adequate).
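To make the “unscrambling” idea concrete, here is a minimal toy sketch in Python. It is not the authors’ method or code: the paper works with photons on a photonic chip, whereas this example assumes a single simulated qubit, a made-up hidden unitary `U_HIDDEN`, a two-parameter trial circuit `unsampler`, and plain finite-difference gradient descent. All of those names and choices are illustrative assumptions. The only thing it borrows from the paper is the core idea: tune a parameterized operation until it maps the scrambled output back onto the known input state.

```python
import numpy as np


def ry(t):
    """Rotation of a single qubit about the Y axis by angle t."""
    c, s = np.cos(t / 2), np.sin(t / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)


def rz(t):
    """Rotation of a single qubit about the Z axis by angle t."""
    return np.array([[np.exp(-1j * t / 2), 0],
                     [0, np.exp(1j * t / 2)]], dtype=complex)


# Known input state |0> (the part the verifier is allowed to know).
PSI_IN = np.array([1, 0], dtype=complex)

# Hypothetical "black box": the training loop never reads these angles,
# it only ever sees the scrambled output state PSI_OUT.
U_HIDDEN = rz(0.7) @ ry(1.1) @ rz(-0.4)
PSI_OUT = U_HIDDEN @ PSI_IN


def unsampler(theta):
    """Trial (variational) operation with two tunable angles."""
    return ry(theta[0]) @ rz(theta[1])


def cost(theta):
    """1 - fidelity between the unscrambled output and the known input."""
    overlap = np.vdot(PSI_IN, unsampler(theta) @ PSI_OUT)
    return 1.0 - abs(overlap) ** 2


def train(theta0, lr=0.5, steps=300, eps=1e-6):
    """Gradient descent using central finite differences for the gradient."""
    theta = np.array(theta0, dtype=float)
    for _ in range(steps):
        grad = np.zeros_like(theta)
        for k in range(len(theta)):
            shift = np.zeros_like(theta)
            shift[k] = eps
            grad[k] = (cost(theta + shift) - cost(theta - shift)) / (2 * eps)
        theta -= lr * grad
    return theta


theta_opt = train([0.1, 0.1])
fidelity = 1.0 - cost(theta_opt)
print(f"fidelity of recovered input: {fidelity:.6f}")
```

A fidelity close to 1 means the trained operation has effectively learned to undo the black box on this particular input, which is the toy analogue of the paper’s “successful computation” check. On real hardware the cost would have to be estimated from repeated measurements rather than read off a simulated state vector, and the trial circuit would be far larger.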

“The dream is to apply this to interesting problems in the physical world,” says first author Jacques Carolan of MIT. Which interesting problems it will help solve, we can only imagine.

Citation:

Carolan, J., Mohseni, M., Olson, J.P. et al. Variational quantum unsampling on a quantum photonic processor. Nat. Phys. (2020) doi:10.1038/s41567-019-0747-6