Insider Brief

- A NERSC study finds that algorithmic advances and ambitious industry roadmaps could bring quantum computing into practical use for DOE scientific workloads within the next decade.

- The report highlights rapid declines in qubit and gate requirements, steep projected gains in hardware capacity, and the importance of execution speed measured by the proposed SQSP benchmark.

- Researchers caution that progress is uncertain and will require continued innovation, error-correction advances and close collaboration between government, academia and industry.

The U.S. Department of Energy’s main computing facility has mapped how quantum computers may shift from promise to utility within the decade.

A new analysis by researchers at the National Energy Research Scientific Computing Center (NERSC) and Lawrence Berkeley National Laboratory (LBNL) finds that the resources required to solve key scientific problems on quantum machines have steadily dropped, while company roadmaps show hardware capabilities rising at a steep pace. The study, posted on arXiv, projects that the two trends will soon intersect — opening a window for quantum systems to address workloads that now dominate federal supercomputing.

The report examined NERSC’s scientific workload, which supports more than 12,000 DOE researchers. More than half of current compute cycles are consumed by problems in materials science, quantum chemistry, and high-energy physics, domains where classical methods struggle and quantum devices could excel.

The researchers found that quantum resource estimates — measured in qubits and quantum gate operations — have declined sharply over the past five years. Improvements in algorithms, along with deeper insight into how to structure problems, have cut required qubits by factors of five and gate counts by factors of hundreds or even thousands in benchmark cases such as the FeMoco molecule, according to the researchers.

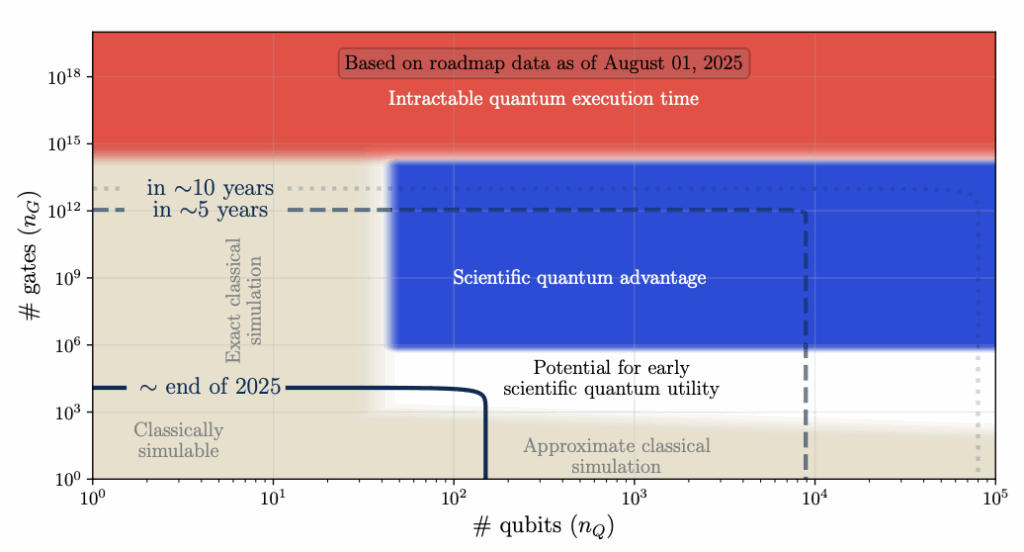

At the same time, public roadmaps from ten quantum computing companies — spanning superconducting circuits, trapped ions, and neutral atoms — predict machines capable of scaling by as much as nine orders of magnitude within ten years. When overlaid against algorithmic requirements, the projections suggest that large-scale quantum systems could align with scientific needs within five to ten years.

Three Main Domains of Impact

The study highlights differences across fields, but focuses on how quantum computing could affect three major domains of study — areas that require vast computational resources.

Materials science problems, such as modeling spin systems or lattice models, map naturally onto qubits and appear closest to reaching “quantum advantage,” the point where quantum machines can beat classical approaches.

For example, electrons that strongly interact with one another give rise to unusual states of matter, including high-temperature superconductivity, magnetism, and topological phases, that quickly overwhelm classical computers because the calculations grow exponentially more complex, the researchers report.

Quantum chemistry stands out as a mature testbed, with resource estimates dropping fastest as algorithms evolve. Ground-state energy calculations for benchmark molecules that once required infeasible levels of computation are now within a range that future hardware may handle.

Progress here could lead to scientific advances in several fields, according to the researchers.

They write: “The field of quantum chemistry addresses a number of important scientific and technological challenges, from designing efficient catalysts for sustainable fuels and industrial processes to developing advanced materials for batteries, photovoltaics, and quantum information technologies. Quantum chemistry stands out as a leading target for Quantum Computing (QC), as QC has the potential to revolutionize our ability to simulate molecular behavior.”

High-energy physics, including lattice gauge theory and neutrino dynamics, remains the most demanding.

Classical computers can’t handle real-time dynamics or systems pushed far from equilibrium, leaving gaps in areas such as the strong force, the interaction binding quarks and gluons inside matter. Quantum Chromodynamics, the theory describing this force, is singled out by the Department of Energy as one of four top priorities for quantum computing research.

Encoding both fermions and gauge fields drives gate counts higher, meaning significant advances in error-correction and encoding will be essential before quantum devices can compete here.

Speed as Important as Scale

While most studies focus on qubits and gate counts, the NERSC team warns that execution time will become the decisive benchmark once hardware matures. Quantum processors vary widely in clock speed — from kilohertz to gigahertz in published estimates — leading to execution times that could range from seconds to years. In practice, this means that two machines with similar qubit counts could deliver vastly different scientific value depending on how quickly they can complete a calculation.
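To see why clock speed matters so much, consider a back-of-the-envelope sketch. It assumes the simplest possible model, one gate per clock tick with no parallelism and no error-correction overhead, and the gate count is a hypothetical placeholder rather than a figure from the study.

```python
SECONDS_PER_DAY = 86_400


def runtime_seconds(gate_count: float, clock_hz: float) -> float:
    """Serial runtime estimate, assuming one gate executes per clock tick."""
    if gate_count < 0 or clock_hz <= 0:
        raise ValueError("gate_count must be >= 0 and clock_hz must be > 0")
    return gate_count / clock_hz


# Hypothetical workload size, chosen only for illustration.
GATE_COUNT = 1e10

if __name__ == "__main__":
    # Same workload, three assumed clock rates spanning the kHz-to-GHz range.
    for label, clock_hz in [("kHz", 1e3), ("MHz", 1e6), ("GHz", 1e9)]:
        seconds = runtime_seconds(GATE_COUNT, clock_hz)
        days = seconds / SECONDS_PER_DAY
        print(f"{label}: {seconds:.3g} s ({days:.3g} days)")
```

Under these assumptions the same ten-billion-gate job takes about 10 seconds on a gigahertz-class machine and roughly 116 days on a kilohertz-class one, which is the kind of spread the researchers describe.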

To capture this dimension, the researchers propose a metric called Sustained Quantum System Performance (SQSP). Like benchmarks in supercomputing, SQSP measures how many complete scientific workflows a system can run per year across a mix of applications. This metric shifts the focus from raw component counts to practical throughput, offering a way to compare systems with very different designs. Early modeling suggests throughput — how much work a system can get done in a given amount of time — could span from a single run to tens of millions annually, depending on architecture and design choices.
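The article does not reproduce the study's formula for SQSP, so the sketch below is only one simple way to read "complete workflows per year across a mix of applications." The function name, the application mix and the runtimes are all hypothetical, and the calculation assumes a single machine running jobs back to back.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def workflows_per_year(mix: dict[str, tuple[float, float]]) -> float:
    """Estimate complete runs per year for a weighted application mix.

    `mix` maps an application name to (share of runs, runtime in seconds).
    This is an illustrative throughput model, not the study's SQSP definition.
    """
    total_share = sum(share for share, _ in mix.values())
    if total_share <= 0:
        raise ValueError("application shares must sum to a positive number")
    # Average runtime of one run, weighted by how often each app is run.
    mean_runtime = (
        sum(share * runtime for share, runtime in mix.values()) / total_share
    )
    if mean_runtime <= 0:
        raise ValueError("mean runtime must be positive")
    return SECONDS_PER_YEAR / mean_runtime


if __name__ == "__main__":
    # Hypothetical mix: shares and runtimes are placeholders for illustration.
    example_mix = {
        "chemistry": (0.5, 3_600.0),   # one hour per run
        "materials": (0.3, 600.0),     # ten minutes per run
        "hep": (0.2, 86_400.0),        # one day per run
    }
    print(f"{workflows_per_year(example_mix):.0f} runs per year")
```

With this made-up mix the machine completes roughly 1,600 runs a year. The slow high-energy physics jobs dominate the average even though they are only a fifth of the runs, which illustrates why a throughput metric can rank machines differently than raw qubit counts.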

The implication is that speed will be just as important as scale. A quantum computer that technically meets the qubit and gate thresholds for a chemistry or physics problem may still be impractical if the run time stretches into months. By contrast, a system with higher effective throughput could unlock rapid iteration, letting scientists test more hypotheses and accelerate discovery. For agencies like DOE, which plan computing procurements on five-year cycles, SQSP could become a key decision tool for evaluating when quantum hardware is ready to supplement classical supercomputers.

Roadmap Evaluation Overview

The NERSC analysis reviewed public roadmaps from major quantum computing companies at the time of the study and found a consistent theme: vendors anticipate a steep rise in machine performance over the next decade. Across superconducting, trapped-ion and neutral-atom platforms, the projections point to exponential scaling, with claims of performance gains reaching as much as nine orders of magnitude.

The study places these vendor milestones against scientific requirements and finds growing overlap. By around 2025 to 2027, companies expect systems that could begin offering “quantum utility” on select problems, with early advantage possible in materials science and chemistry. By the mid-2030s, the most ambitious roadmaps envision fault-tolerant devices capable of handling millions of logical operations, enough to make inroads on chemistry benchmarks, condensed matter models and elements of high-energy physics.

Not all vendors publish detailed timelines, but taken together, the roadmaps show convergence around three stages: small error-corrected systems within five years, larger fault-tolerant machines within a decade, and very large-scale systems beyond that. This trajectory is faster than DOE’s own strategic roadmap, underscoring both the competitive pressure and the uncertainty in the forecasts.

The report stresses that execution time will remain a limiting factor even if qubit counts grow as projected. Still, if industry targets are met, the scaling curves for hardware and the resource curves for algorithms could meet within the next decade, opening the door for quantum computers to directly support DOE’s most demanding scientific workloads.
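The idea of two curves meeting can be made concrete with a toy model. The sketch below assumes both trends are clean exponentials, with requirements shrinking by a fixed factor each year and hardware capacity growing by a fixed factor each year. All of the numbers are hypothetical and are not taken from the study or from any vendor roadmap.

```python
import math


def crossover_years(
    required_now: float,
    requirement_shrink_per_year: float,
    capacity_now: float,
    capacity_growth_per_year: float,
) -> float:
    """Years until capacity meets requirements under exponential trends.

    Model: required(t) = required_now / shrink**t
           capacity(t) = capacity_now * growth**t
    """
    if min(required_now, capacity_now) <= 0:
        raise ValueError("resource counts must be positive")
    if min(requirement_shrink_per_year, capacity_growth_per_year) <= 0:
        raise ValueError("yearly factors must be positive")
    if capacity_now >= required_now:
        return 0.0  # hardware already meets the requirement
    # Work in log10 so the gap closes linearly over time.
    gap = math.log10(required_now / capacity_now)
    closing_rate = math.log10(requirement_shrink_per_year) + math.log10(
        capacity_growth_per_year
    )
    if closing_rate <= 0:
        raise ValueError("the gap never closes with these yearly factors")
    return gap / closing_rate


if __name__ == "__main__":
    # Hypothetical: 1e10 gates needed today, halving yearly;
    # hardware handles 1e4 gates today, quadrupling yearly.
    years = crossover_years(1e10, 2.0, 1e4, 4.0)
    print(f"Curves meet in about {years:.1f} years")
```

In this example a six-order-of-magnitude gap closes in about 6.6 years, and both trends contribute: the algorithmic improvements account for a third of the closing rate. Real roadmaps are unlikely to follow such smooth curves, so the result is best read as a way to reason about sensitivity, not as a forecast.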

Implications for DOE and Beyond

For DOE, which deploys new supercomputers on five-year cycles, the timing is critical. The report notes that “quantum relevant” problems — those where quantum mechanics is central and classical methods falter — already account for more than half of the workload on NERSC’s current Perlmutter system. As quantum devices approach capability, integrating them alongside classical high-performance machines will become a strategic priority.

The findings also suggest that the biggest leaps will not come only from hardware. Just as important will be continued progress in algorithms that cut the space-time volume of quantum circuits, and in error-correction schemes that reduce costly overhead. Constant-factor improvements, rather than sweeping theoretical breakthroughs, have already yielded dramatic savings and are expected to continue.

The researchers caution that technology forecasting, like the underlying technology itself, is inherently uncertain. Vendor roadmaps are aspirational, and some milestones, such as achieving thousands of error-corrected qubits, remain unproven. The study frames its estimates as upper bounds, acknowledging that both hardware advances and unforeseen algorithmic breakthroughs could shift the picture.

Another limitation is that much of the analysis focuses on static problems, such as ground-state energy calculations, where benchmarks are better defined. Dynamic problems — real-time chemistry, particle collisions, or plasma turbulence — remain less explored and could demand far larger resources than current models suggest.

Future Directions

Looking ahead, the report signals three areas where progress will shape the field:

- Algorithmic refinement — Constant reductions in required qubits and gates will continue to close the gap between hardware and applications.

- Execution benchmarking — Metrics such as SQSP will be critical for comparing machines and planning procurement.

- Integration strategies — DOE and other institutions will need frameworks to combine classical supercomputers and quantum processors into hybrid workflows.

Although quantum computing holds promise for scientific discovery, the team cautions that progress is far from guaranteed. Reaching that future will demand not only innovation and persistence but also close collaboration across the field.

They add: “In addition, the classical resources needed for error correction and hybrid algorithms with both quantum and classical resources in the loop, might require tight coupling with low latencies between HPC and Quantum Computing (QC). This inevitably adds another layer of complication to the proposed vendor roadmaps. A tight collaboration between the public and private sector is key in overcoming these challenges and unlocking the quantum computing potential.”

For a deeper, more technical dive, please review the paper on arXiv. It’s important to note that arXiv is a pre-print server, which allows researchers to receive quick feedback on their work. However, neither arXiv pre-prints nor this article are official peer-reviewed publications. Peer review is an important step in the scientific process to verify results.

The research team included: Daan Camps, Ermal Rrapaj, Katherine Klymko, Hyeongjin Kim, Kevin Gott, Siva Darbha, Jan Balewski, Brian Austin and Nicholas J. Wright.