Insider Brief

- AI is emerging as a critical tool for advancing quantum computing, helping address challenges across hardware design, algorithm compilation, device control, and error correction.

- Researchers report that machine-learning models can optimize quantum hardware and generate more efficient circuits, but face scaling limits due to exponential data requirements and drifting noise conditions.

- The study concludes that long-term progress will likely depend on hybrid systems that combine AI supercomputers with quantum processors to overcome the bottlenecks neither technology can solve alone.

Artificial intelligence may now be the most important tool for solving quantum computing’s most stubborn problems. That is the core argument of a new research review from a 28-author team led by NVIDIA, which reports that AI is beginning to outperform traditional engineering methods in nearly every layer of the quantum-computing stack.

At the same time, the reverse may also one day prove true: quantum computing could become essential for building the next generation of sustainable AI systems. As AI models expand to trillion-parameter scales and energy constraints tighten, the researchers say a hybrid computing architecture that tightly couples classical AI supercomputers with quantum processors may be unavoidable.

The paper, published in Nature Communications, is yet another sign that the two fields are converging faster than expected. Zooming out, what began as two separate scientific communities is now showing signs of structural interdependence.

The authors, drawn from NVIDIA, the University of Oxford, the University of Toronto, Quantum Motion, Perimeter Institute, the University of Waterloo, Qubit Pharmaceuticals, NASA Ames, and other institutions, make the case that the future development of quantum computing may depend almost entirely on AI-driven design, optimization and error suppression. Their review suggests that AI will be necessary to move the field out of the noisy intermediate-scale quantum (NISQ) era and into practical fault-tolerant machines.

At the same time, the paper indicates that even the best AI systems are still “fundamentally classical” and cannot escape the exponential overhead of simulating large quantum systems. That limitation is one reason to speculate that the long-term evolution of AI systems will require quantum accelerators to continue scaling.

If so, the result may be a kind of feedback loop in the future: AI will be needed to make quantum computing work, and quantum computing may be needed to make AI sustainable.

How Does AI Optimize the Quantum Computing Stack?

AI techniques are replacing or enhancing traditional engineering approaches across every layer of quantum computer development and operation – from hardware design to preprocessing, device control, error correction, and post-processing.

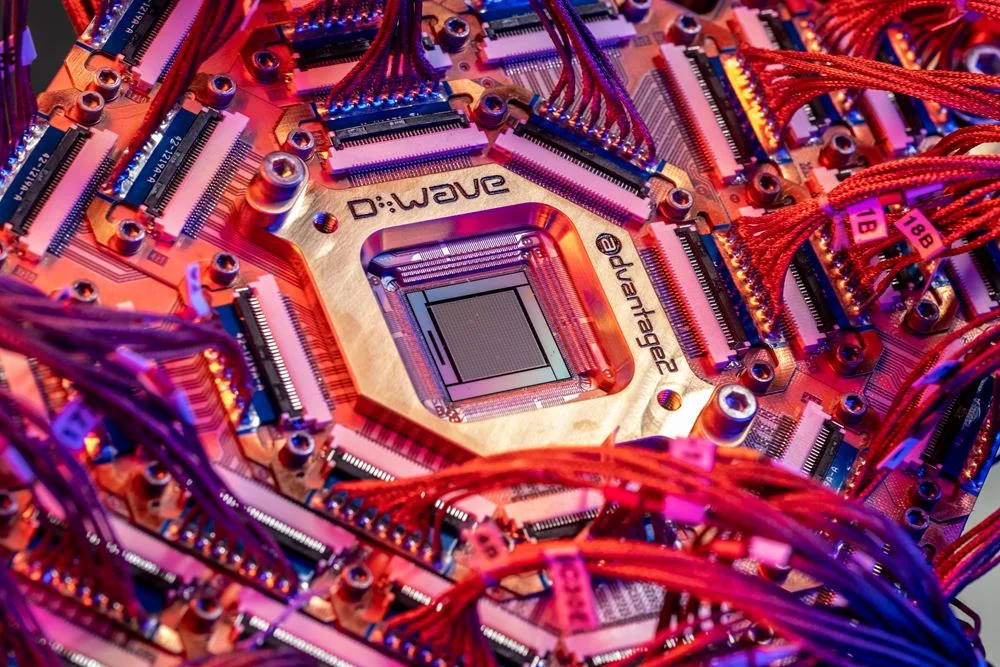

The team starts at the lowest level: hardware design. Quantum devices are notoriously sensitive to tiny fabrication errors, stray electromagnetic effects and material imperfections that cause qubits to behave unpredictably. According to the authors, AI now provides reliable shortcuts for these design challenges. Deep-learning models have automatically designed superconducting qubit geometries, optimized multi-qubit operations and proposed optical setups for generating highly entangled states.

These models can evaluate countless geometric or electromagnetic configurations that would be impossible to inspect manually. In some cases, reinforcement-learning agents have found gate designs that were later verified experimentally, which is also a sign that AI is beginning to generate quantum-hardware insights rather than simply tuning known designs.

Another critical bottleneck is characterizing quantum systems, especially the Hamiltonians and noise processes governing their dynamics.

The researchers explain that machine learning is now helping physicists infer the behavior of quantum devices by reconstructing their underlying equations. For “closed” quantum systems, ML models can recover the Hamiltonian – the mathematical object that describes how an isolated quantum system evolves. For “open” systems, which interact with their environment and suffer noise, ML can learn the Lindbladian – a more complex equation that captures dissipation and decoherence. In both cases, AI can extract these governing rules from limited or noisy experimental data, cutting down on the number of measurements scientists must perform.
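To make Hamiltonian learning concrete, here is a minimal sketch under simplifying assumptions: a single qubit evolving under a hypothetical Hamiltonian H = (Ω/2)σx whose drive strength Ω is unknown. Simulated measurement counts at a few evolution times are fit by grid-search maximum likelihood. This classical estimator stands in for the neural-network methods the review surveys; the function names and parameter values are illustrative, not taken from the paper.

```python
import math
import random

def excited_prob(omega: float, t: float) -> float:
    """P(|1>) after evolving |0> for time t under H = (omega / 2) * sigma_x."""
    return math.sin(omega * t / 2) ** 2

def simulate_counts(omega, times, shots, rng):
    """Number of |1> outcomes per evolution time (binomial shot sampling)."""
    return [sum(rng.random() < excited_prob(omega, t) for _ in range(shots)) for t in times]

def estimate_omega(times, counts, shots, grid):
    """Grid-search maximum-likelihood estimate of omega from measured counts."""
    eps = 1e-12  # keeps log() finite when a model probability hits 0 or 1
    def log_likelihood(omega):
        ll = 0.0
        for t, k in zip(times, counts):
            p = min(max(excited_prob(omega, t), eps), 1 - eps)
            ll += k * math.log(p) + (shots - k) * math.log(1 - p)
        return ll
    return max(grid, key=log_likelihood)

rng = random.Random(0)
true_omega = 2.0 * math.pi * 0.8            # hypothetical drive strength (rad per unit time)
times = [0.1 * i for i in range(1, 21)]     # 20 evolution times
shots = 200                                  # measurements per time point
counts = simulate_counts(true_omega, times, shots, rng)
grid = [0.01 * i for i in range(1, 1001)]   # candidate omegas from 0.01 to 10.0
omega_hat = estimate_omega(times, counts, shots, grid)
print(f"true omega = {true_omega:.3f}, estimated omega = {omega_hat:.3f}")
```

The ML methods in the review replace this hand-written likelihood with models that can handle many parameters and, for open systems, Lindbladian terms as well.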

In today’s hardware, noise sources are extremely difficult to model, and most qubits operate in regimes that drift over time. The review points to ML methods that can learn disorder potentials, map out the nuclear spin environments around qubits, and track fluctuations that degrade coherence. These capabilities are especially important for spin-based, photonic, and superconducting systems, where environmental drift can render devices unusable without extensive manual recalibration.
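At its simplest, tracking drift is a filtering problem. The sketch below assumes a qubit frequency offset that performs a Gaussian random walk and is observed through noisy single-round estimates (for example, from Ramsey measurements), and tracks it with a scalar Kalman filter. The drift and noise magnitudes are hypothetical, and the Kalman filter is a classical stand-in for the learned trackers the review describes.

```python
import random

def kalman_track(measurements, process_var, meas_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a random-walk state observed with Gaussian noise."""
    x, p = x0, p0
    estimates = []
    for z in measurements:
        p += process_var                 # predict: the random walk widens uncertainty
        gain = p / (p + meas_var)        # weight of the new measurement
        x += gain * (z - x)              # update: blend prediction and measurement
        p *= 1.0 - gain
        estimates.append(x)
    return estimates

def rms(errors):
    return (sum(e * e for e in errors) / len(errors)) ** 0.5

rng = random.Random(1)
process_sd, meas_sd = 0.02, 0.5          # hypothetical drift step and readout noise (kHz)
offset, truth, measured = 0.0, [], []
for _ in range(500):
    offset += rng.gauss(0.0, process_sd)               # slow environmental drift
    truth.append(offset)
    measured.append(offset + rng.gauss(0.0, meas_sd))  # noisy single-round estimate

tracked = kalman_track(measured, process_sd ** 2, meas_sd ** 2)
raw_err = rms([m - t for m, t in zip(measured, truth)])
kf_err = rms([k - t for k, t in zip(tracked, truth)])
print(f"raw RMS error {raw_err:.3f}, filtered RMS error {kf_err:.3f}")
```

The filter only works this well because its noise model matches the simulation exactly; on real hardware, where drift is non-Gaussian and poorly characterized, learned models aim to close that gap.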

| Quantum Stack Layer | What AI Does | Why It Matters | Typical Methods Mentioned |
|---|---|---|---|
| Hardware design | Proposes qubit geometries, gate layouts, and experimental setups | Speeds iteration and finds designs too large to explore manually | Deep learning, reinforcement learning |
| Characterization | Infers device dynamics and noise from limited measurements | Reduces measurement burden and improves model accuracy | Supervised learning, Bayesian methods |
| Compilation | Generates shorter, hardware-aware circuits | Fewer gates means higher success rates on noisy devices | Transformers, RL search |
| Device control | Automates tuning, calibration, and drift compensation | Cuts setup time and improves stability | RL agents, Bayesian optimization, vision-language agents |
| Error correction | Decodes syndrome data and adapts to realistic noise | Enables lower-latency decisions at larger code distances | CNNs, transformers, graph neural networks |
| Readout and post-processing | Improves measurement classification, tomography, and error mitigation | Better results with fewer samples and cleaner signals | CNNs, HMMs, random forests, neural networks |

What New Capabilities Does AI Bring to Quantum Computing?

AI is fundamentally reshaping quantum algorithm compilation by generating optimized circuits that can outperform those produced by traditional methods.

Quantum circuit optimization has long been a difficult combinatorial problem. The number of possible gate sequences grows exponentially with qubit count, and the best choices vary by hardware platform. The researchers report that emerging AI models can now generate quantum circuits directly – sometimes outperforming handcrafted or brute-force methods.

One example is a transformer-based system called GPT-QE, which learns to generate compact quantum circuits for chemistry problems by iteratively sampling operators and updating model parameters based on energy estimates. Variants have been adapted to combinatorial optimization, yielding shorter quantum approximate optimization algorithm (QAOA) circuits that run with fewer gates.
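The generate, score, and update loop behind GPT-QE can be illustrated with a toy. This sketch is an assumption-laden stand-in, not GPT-QE itself: a per-position table of softmax logits replaces the transformer, the hypothetical operator pool holds four fixed single-qubit rotations, the "energy" is ⟨Z⟩ for one qubit starting in |0⟩ (ground energy −1), and a cross-entropy-style update shifts probability toward the lowest-energy samples.

```python
import math
import random

# Hypothetical operator pool: (axis, angle) rotations applied to a single-qubit Bloch vector.
POOL = [("x", math.pi / 4), ("x", -math.pi / 4), ("y", math.pi / 4), ("y", -math.pi / 4)]
SEQ_LEN = 4

def rotate(vec, axis, theta):
    """Rotate a Bloch vector (x, y, z) about the x or y axis by angle theta."""
    x, y, z = vec
    c, s = math.cos(theta), math.sin(theta)
    if axis == "x":
        return (x, c * y - s * z, s * y + c * z)
    return (c * x + s * z, y, -s * x + c * z)

def energy(tokens):
    """Energy <Z> of the state obtained by applying the token sequence to |0>."""
    vec = (0.0, 0.0, 1.0)  # |0> is the +z pole of the Bloch sphere
    for tok in tokens:
        vec = rotate(vec, *POOL[tok])
    return vec[2]

def sample(logits, rng):
    """Draw one token per position from independent softmax distributions."""
    return [rng.choices(range(len(row)), weights=[math.exp(v) for v in row])[0]
            for row in logits]

def train(steps=40, batch_size=64, elite=4, lr=0.2, seed=0):
    rng = random.Random(seed)
    logits = [[0.0] * len(POOL) for _ in range(SEQ_LEN)]
    best = (float("inf"), [])
    for _ in range(steps):
        batch = [sample(logits, rng) for _ in range(batch_size)]
        scored = sorted(((energy(s), s) for s in batch), key=lambda pair: pair[0])
        best = min(best, scored[0], key=lambda pair: pair[0])
        for _, seq in scored[:elite]:    # reinforce tokens of the lowest-energy samples
            for pos, tok in enumerate(seq):
                logits[pos][tok] += lr
    return best, logits

best, logits = train()
print(f"best energy {best[0]:.3f} from token sequence {best[1]}")
```

Repeating the same π/4 rotation four times gives a π rotation that reaches the ground state; the loop has to discover such consistent sequences from energy feedback alone, which is the essence of the approach at a vastly smaller scale.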

DeepMind’s AlphaTensor-Quantum is another example, applying reinforcement learning to identify more efficient decompositions of expensive non-Clifford gates. By searching action spaces too large for exhaustive search, AI agents can uncover decompositions that dramatically reduce gate counts. The researchers suggest this is an essential step toward producing circuits that can run on today’s noisy hardware.
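To show what reducing non-Clifford gate count means in practice, here is a deliberately simple rule-based sketch. It is not AlphaTensor-Quantum's method and involves no learning: it scans a hypothetical single-qubit gate list and merges adjacent T and T† gates using the identities T·T = S (a Clifford gate), T†·T† = S†, and T·T† = I, then counts the remaining T-type gates.

```python
T_TYPE = ("t", "tdg")

def merge_t_gates(gates):
    """Peephole pass over a single-qubit gate list given in time order."""
    out = []
    for g in gates:
        if out and out[-1] in T_TYPE and g in T_TYPE:
            prev = out.pop()
            if prev == g:
                out.append("s" if g == "t" else "sdg")  # T*T = S, Tdg*Tdg = Sdg (Clifford)
            # otherwise T*Tdg = I, so both gates are dropped
        else:
            out.append(g)
    return out

def t_count(gates):
    """Number of non-Clifford T or T-dagger gates."""
    return sum(g in T_TYPE for g in gates)

circuit = ["h", "t", "t", "h", "t", "tdg", "t", "x"]
optimized = merge_t_gates(circuit)
print(optimized, t_count(circuit), "->", t_count(optimized))
```

Local rewrites like this only catch obvious cases; finding decompositions across many qubits and non-adjacent gates is the much larger search problem that RL agents are applied to.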

The review also highlights AI’s role in parameter transfer. Graph neural networks and graph-embedding techniques can learn relationships between problem instances, allowing optimal circuit parameters to be reused across related tasks. This makes it possible to bypass the long optimization loops that typically slow variational quantum algorithms.
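Why can parameters transfer at all? For depth-1 QAOA on MaxCut, a known closed-form result (Wang, Hadfield, Jiang and Rieffel, Phys. Rev. A, 2018) gives each edge's expected cut on a triangle-free graph in terms of only the two endpoint degrees. The sketch below uses that formula, in one common sign convention, to tune the angles (γ, β) on a small 3-regular triangle-free graph (the 8-vertex cube) and then reuses them on a larger one (a 20-vertex prism). The graphs and the brute-force grid search are illustrative choices; the graph neural networks in the review learn such instance relationships from data rather than from a closed form.

```python
import itertools
import math

def edge_expectation(gamma, beta, deg_u, deg_v):
    """Depth-1 QAOA expected cut of one edge (u, v) in a triangle-free graph."""
    return 0.5 + 0.25 * math.sin(4 * beta) * math.sin(gamma) * (
        math.cos(gamma) ** (deg_u - 1) + math.cos(gamma) ** (deg_v - 1))

def expected_cut(edges, gamma, beta):
    """Sum of per-edge expectations; valid only for triangle-free graphs."""
    deg = {}
    for u, v in edges:
        deg[u] = deg.get(u, 0) + 1
        deg[v] = deg.get(v, 0) + 1
    return sum(edge_expectation(gamma, beta, deg[u], deg[v]) for u, v in edges)

def cube_edges():
    """3-cube graph: 8 vertices, 3-regular, bipartite and therefore triangle-free."""
    return [(a, a ^ (1 << k)) for a in range(8) for k in range(3) if a < a ^ (1 << k)]

def prism_edges(n):
    """Prism graph (n-cycle x K2): 2n vertices, 3-regular, triangle-free for n >= 4."""
    ring = [(i, (i + 1) % n) for i in range(n)]
    return ring + [(u + n, v + n) for u, v in ring] + [(i, i + n) for i in range(n)]

def grid_optimize(edges, steps=100):
    """Brute-force search of (gamma, beta) over [0, pi/2] x [0, pi/4]."""
    gammas = [math.pi / 2 * i / steps for i in range(steps + 1)]
    betas = [math.pi / 4 * i / steps for i in range(steps + 1)]
    return max(itertools.product(gammas, betas), key=lambda gb: expected_cut(edges, *gb))

small, large = cube_edges(), prism_edges(10)
params = grid_optimize(small)                       # tune once on the small instance
transferred = expected_cut(large, *params)          # reuse the angles on the large one
retuned = expected_cut(large, *grid_optimize(large))
print(f"angles {params}, transferred cut {transferred:.3f}, re-optimized cut {retuned:.3f}")
```

Because every edge in both graphs sees the same local structure, the transferred angles are exactly as good as re-optimizing, about 0.692 of the edges cut in expectation. Real instances are less uniform, which is why learned models are used to predict good starting parameters instead.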

The limitation, the team writes, is that training such models often requires classical simulations that themselves scale exponentially. This is one of the first places where quantum hardware may need to play a direct role in supporting AI training runs.

| AI System or Approach | What It Optimizes | Core Benefit | Why It Helps on NISQ Hardware |
|---|---|---|---|
| GPT-QE (transformer-based) | Compact circuits for chemistry tasks | Generates shorter circuits than manual approaches | Fewer gates reduce error accumulation |
| QAOA circuit shortening (AI variants) | Combinatorial optimization circuits | Reduces gate depth and runtime | Shorter depth is more robust to noise |
| AlphaTensor-Quantum (RL) | Non-Clifford gate decompositions | Finds efficient decompositions at scale | Lower gate counts improve success probability |
| Parameter transfer (GNNs, embeddings) | Re-using circuit parameters across tasks | Cuts long optimization loops | Faster tuning means more usable runs per device |

Can AI Automate Quantum Device Operations?

Yes. One of the paper’s most striking conclusions is that AI is beginning to automate tasks once considered the exclusive domain of human quantum physicists.

Quantum devices require extensive tuning, pulse calibration and stability checks – processes that can take days or weeks per device. Reinforcement-learning agents, computer-vision models, and Bayesian optimizers are now handling large parts of this routine calibration.

Automated calibration examples cited in the review:

- Automatic tuning of semiconductor spin qubits

- Optimization of Rabi oscillation speeds

- Compensation for charge-sensor drift

- Feedback-based pulse shaping that improves gate fidelity

In some experiments, RL agents successfully prepared cavity states, optimized qubit initialization protocols and compensated for unwanted Hamiltonian terms that cause coherent errors.
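A minimal flavor of RL-driven calibration, under loud assumptions: treat a handful of candidate pulse amplitudes as the arms of a multi-armed bandit, reward the agent with a noisy simulated fidelity, and let an epsilon-greedy policy learn which setting to use. The fidelity model, the hidden optimum, and every number below are hypothetical; the agents in the cited experiments act on far richer state and action spaces.

```python
import math
import random

AMPLITUDES = [0.90, 0.95, 1.00, 1.05, 1.10]  # hypothetical pulse amplitudes (x nominal)
OPTIMAL = 1.03                               # hidden best amplitude, unknown to the agent

def measured_fidelity(amp, rng):
    """Hypothetical noisy fidelity: a Gaussian peak around OPTIMAL plus shot noise."""
    return math.exp(-((amp - OPTIMAL) / 0.05) ** 2) + rng.gauss(0.0, 0.05)

def epsilon_greedy(n_rounds=2000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    counts = [0] * len(AMPLITUDES)
    values = [0.0] * len(AMPLITUDES)         # running mean reward per arm
    for _ in range(n_rounds):
        if rng.random() < epsilon or 0 in counts:
            arm = rng.randrange(len(AMPLITUDES))                      # explore
        else:
            arm = max(range(len(AMPLITUDES)), key=values.__getitem__)  # exploit
        reward = measured_fidelity(AMPLITUDES[arm], rng)
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]           # incremental mean
    return counts, values

counts, values = epsilon_greedy()
best_arm = max(range(len(AMPLITUDES)), key=values.__getitem__)
print(f"chosen amplitude {AMPLITUDES[best_arm]}, pulls per arm {counts}")
```

The exploration rate is the key design choice: without it, one unlucky early measurement could lock the agent onto a worse setting, and with drifting hardware some ongoing exploration is what lets it notice when the best setting moves.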

The team takes this a step further: they highlight recent demonstrations in which large language models (LLMs) and vision-language agents autonomously guided full calibration workflows, interpreting plots, analyzing measurement trends, and choosing the next experiment. This behavior begins to resemble the tasks of a junior laboratory scientist.

Current limitation: These systems are not yet reliable enough to operate without human oversight, according to the researchers. But they make the point that as training data and multimodal capabilities improve, AI agents will eventually manage calibration across large multi-qubit arrays. The implication is that future factories for quantum processors may become largely autonomous.

Why Is Quantum Error Correction AI’s Hardest Challenge?

Arguably, the most difficult challenge in quantum computing is error correction. To perform fault-tolerant computation, qubits must be encoded into large arrays where errors can be detected and corrected faster than they accumulate. The decoding step – interpreting syndrome data to identify likely error patterns – is computationally intensive and must occur at extremely low latency.

The authors devote a significant portion of their review to explaining why AI may be the only path to scalable decoders. Conventional decoders such as minimum-weight perfect matching (MWPM) struggle to keep pace as systems expand, and they are sensitive to realistic noise processes that differ from the idealized depolarizing models often used in simulations.

Conventional decoder limitations:

- Minimum-weight perfect matching struggles to keep pace as systems expand

- Sensitivity to realistic noise processes differing from idealized models

- Cannot adapt to complex environmental variations

AI approaches – from convolutional networks to transformers and graph neural networks – have shown they can identify error patterns more efficiently, adapt to complicated noise and generalize across code distances. Some of the best-performing models mentioned in the review include transformer-based decoders trained on billions of samples and CNN-based pre-decoders that run efficiently on FPGA hardware.

AI decoder advantages:

- Convolutional networks, transformers, and graph neural networks identify error patterns more efficiently

- Adapt to complicated noise conditions

- Generalize across code distances
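The data-driven idea behind learned decoders can be shown on the smallest possible example: a 3-bit repetition code under independent bit flips. Instead of a neural network, this sketch "trains" a lookup table that records, for each observed syndrome, the error pattern that most often produced it, then applies that pattern as the correction. The code, the noise model, and the flip probability are assumptions chosen for illustration.

```python
import random
from collections import Counter, defaultdict

N = 3  # 3-bit repetition code: logical 0 -> 000, logical 1 -> 111

def syndrome(error):
    """Parity checks between neighbouring bits (Z1Z2 and Z2Z3 for a bit-flip code)."""
    return tuple(error[i] ^ error[i + 1] for i in range(N - 1))

def sample_error(p, rng):
    """Independent bit flips, each with probability p."""
    return tuple(int(rng.random() < p) for _ in range(N))

def train_decoder(n_samples, p, rng):
    """Learn, per syndrome, the most frequent error pattern that produced it."""
    seen = defaultdict(Counter)
    for _ in range(n_samples):
        e = sample_error(p, rng)
        seen[syndrome(e)][e] += 1
    return {s: c.most_common(1)[0][0] for s, c in seen.items()}

def logical_error_rate(decoder, n_trials, p, rng):
    """Fraction of trials where error plus correction is not the identity."""
    failures = 0
    for _ in range(n_trials):
        e = sample_error(p, rng)
        correction = decoder.get(syndrome(e), (0,) * N)
        residual = tuple(a ^ b for a, b in zip(e, correction))
        failures += residual != (0,) * N
    return failures / n_trials

rng = random.Random(7)
p = 0.1
decoder = train_decoder(20000, p, rng)
rate = logical_error_rate(decoder, 20000, p, rng)
print(decoder, f"logical error rate {rate:.4f} vs physical {p}")
```

Here only four syndromes exist, so a table suffices. For large codes the number of possible syndromes is astronomically larger than any training set, so a decoder has to generalize from patterns rather than memorize them, which is where convolutional, transformer, and graph-based models come in.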

| Decoder Type | Strengths | Limitations | Best Fit |
|---|---|---|---|
| Conventional decoders (e.g., MWPM) | Well-studied, interpretable, strong on idealized noise | Latency and scaling challenges, sensitive to realistic noise | Smaller codes, controlled noise models |
| AI decoders (CNNs, transformers, GNNs) | Adapts to complex noise, can generalize across distances | Training data can grow exponentially, risk of poor generalization | Realistic noise, large-scale decoding, low-latency pipelines |

The Training Data Bottleneck

Yet even these models have limitations. Training transformers for large surface-code distances requires training data that grows exponentially – the authors estimate that moving from distance-9 to distance-25 could require 10¹³ to 10¹⁴ examples.

(Note: The “distance” of a quantum code sets how many physical errors it can withstand: a distance-d code can correct up to ⌊(d−1)/2⌋ arbitrary errors, so distance-9 corrects 4 and distance-25 corrects 12. Moving from distance-9 to distance-25 therefore means jumping to a much larger, more robust code, one that also requires exponentially more training data to decode.)
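The growth can be made concrete with a little arithmetic. This sketch assumes the standard rotated surface-code layout (the article does not say which layout the authors used), in which a distance-d code has d² data qubits and d² − 1 stabilizer checks, so a single round of syndrome measurement can take 2^(d²−1) values. It only illustrates how fast a decoder's input space grows; it does not reproduce the authors' 10¹³ to 10¹⁴ estimate, which depends on their training setup.

```python
def correctable_errors(d: int) -> int:
    """Maximum number of arbitrary errors a distance-d code is guaranteed to correct."""
    return (d - 1) // 2

def rotated_surface_code_size(d: int) -> tuple[int, int]:
    """(data qubits, stabilizer checks) for a distance-d rotated surface code."""
    return d * d, d * d - 1

for d in (9, 25):
    data, checks = rotated_surface_code_size(d)
    # Each check yields one bit per round, so a round has 2**checks possible syndromes.
    digits = len(str(2 ** checks))
    print(f"d={d}: corrects {correctable_errors(d)} errors, {data} data qubits, "
          f"{checks} checks, about 10^{digits - 1} possible single-round syndromes")
```

Real decoders see several rounds of syndromes at once, which multiplies the input size further; no training set can cover such a space, so the question is how much data a model needs to generalize across it.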

Without new techniques for generating synthetic error data or running training loops on quantum hardware itself, these approaches may hit practical limits.

The scientists also discuss AI-driven code discovery, where reinforcement-learning agents explore vast design spaces for new error-correcting codes. These agents have already found constructions that outperform random search and show signs of scaling to higher qubit counts.

How Does AI Improve Quantum Measurement and Post-Processing?

The review argues that AI also improves the efficiency of post-processing tasks such as qubit readout, state tomography, and noise mitigation.

- Machine-learning models have outperformed standard approaches at distinguishing qubit measurement signals, especially in superconducting and neutral-atom systems. CNNs and hidden Markov models detect subtle time-series features that simpler algorithms miss.

- For tomography – one of the most measurement-intensive tasks in quantum computing – neural networks cut the number of required samples by orders of magnitude. GPT-based models, trained on simulated shadow-tomography data, predict ground-state properties in systems where full tomography is impractical.

- Error-mitigation techniques, including zero-noise extrapolation, can also be enhanced with ML models that learn the relationship between noise levels and observable outcomes. Random-forest predictors have, in some cases, outperformed conventional mitigation methods, though the authors caution that these approaches lack rigorous statistical guarantees.
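Zero-noise extrapolation is easy to sketch: measure an observable at several deliberately amplified noise levels, fit a model of value versus noise scale, and evaluate the fit at zero noise. The sketch below uses Richardson-style extrapolation through three points (the unique quadratic through them), the simplest fixed version of learning the noise-to-outcome relationship; the ML variants in the review replace this polynomial with a trained model such as a random forest. The noise scales and the exponential-decay data are hypothetical.

```python
import math

def richardson_zero_noise(scales, values):
    """Evaluate the unique polynomial through (scale, value) points at scale = 0."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, xj in enumerate(scales):
            if j != i:
                weight *= (0.0 - xj) / (xi - xj)  # Lagrange basis polynomial at x = 0
        total += weight * yi
    return total

ideal = 0.80                 # hypothetical noiseless expectation value
decay = 0.15                 # hypothetical signal loss per unit of noise scale
scales = [1.0, 2.0, 3.0]     # noise amplified 1x, 2x, 3x (e.g., by gate folding)
noisy = [ideal * math.exp(-decay * s) for s in scales]

estimate = richardson_zero_noise(scales, noisy)
print(f"raw (1x) {noisy[0]:.4f}, extrapolated {estimate:.4f}, ideal {ideal:.4f}")
```

Extrapolation recovers the ideal value to within a fraction of a percent here only because the synthetic decay is smooth; when the true noise dependence is irregular, a fixed polynomial can mislead, and the lack of rigorous guarantees noted above applies equally to learned models.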

Where Do AI’s Limitations Still Constrain Quantum Progress?

These limitations deserve attention. While the researchers report that AI is beginning to outperform traditional approaches across the quantum stack, they also emphasize the sharp boundaries of its usefulness. Machine-learning models remain fundamentally classical and cannot escape the exponential overhead of simulating large quantum systems. That constraint becomes most visible in error correction, where even a modest increase in code size drives training demand into untenable territory.

The review also indicates that many AI models generalize poorly when real hardware deviates from the noise distributions used during training. Synthetic datasets can drift from experimental reality, producing decoders and control policies that work well in simulation but fail on devices exposed to fluctuating environments. Automated calibration agents still miss edge cases that human researchers can catch, limiting their ability to operate without supervision. Post-processing models such as neural-network-based error mitigation also lack rigorous statistical guarantees, raising concerns for precision-sensitive applications such as chemistry and materials modeling.

These constraints reinforce one of the paper’s central findings: AI can accelerate quantum development but cannot substitute for fault-tolerant hardware or eliminate the need for more robust quantum processors. The field will still require scalable qubit architectures, reliable error-correcting codes, and specialized classical infrastructure capable of supporting large-scale quantum workloads.

| Constraint | What It Looks Like in Practice | Why It Matters | What Helps |
|---|---|---|---|
| Exponential simulation overhead | Classical training and simulation costs explode with qubits | Limits scale of models and training loops | Hybrid workflows, quantum-generated training data |
| Noise drift and distribution shift | Models trained on synthetic data fail on real devices | Reduces reliability of control and decoding | Online adaptation, continual training, better datasets |
| Training data bottleneck | Huge sample requirements for large code distances | Makes scaling decoders expensive or impractical | Synthetic data generation, hardware-in-the-loop training |
| Lack of rigorous guarantees | Mitigation models can be hard to validate statistically | Risk for precision-sensitive applications | Benchmarks, uncertainty estimation, validation suites |

Will Future Systems Require a Quantum-AI Supercomputer?

The paper closes by addressing what may be the most important strategic implication: AI and quantum computing may need to be developed as a single hybrid ecosystem.

Training large AI models requires enormous amounts of compute, and classical simulation of quantum systems will never scale efficiently. Quantum processors will eventually need to be embedded inside AI-accelerated supercomputers with high-bandwidth, low-latency interconnects, according to the researchers. Such systems will allow AI models to train on quantum-generated data, use quantum subroutines in their optimization loops, and coordinate with classical accelerators for workload distribution.

Likewise, quantum computing will rely on AI copilots, automated calibration agents, synthetic-data generators and reinforcement learners that optimize everything from pulse schedules to logical-qubit layouts.

The authors refer to this future architecture as an “accelerated quantum supercomputing system.” It is a vision where AI and quantum capabilities grow together, each compensating for the other’s constraints.

The paper is highly technical and covers material that this article may have glossed over for the sake of a tighter summary. For a deeper, more technical dive, read the full paper in Nature Communications.

Frequently Asked Questions

What is the main finding of the NVIDIA-led quantum computing research?

AI is becoming essential for solving quantum computing’s most difficult challenges across hardware design, algorithm compilation, device control, and error correction. The review reports that AI is beginning to outperform traditional methods across nearly every layer of the quantum computing stack, and suggests that quantum computing may in turn prove necessary for building sustainable next-generation AI systems.

How does AI help with quantum hardware design?

Deep-learning models have automatically designed superconducting qubit geometries, optimized multi-qubit operations, and proposed optical setups for generating highly entangled states. Reinforcement-learning agents have discovered gate designs that were later verified experimentally, and machine learning can reconstruct the equations governing a quantum system’s behavior from limited experimental data.

What is quantum error correction and why is it difficult?

Quantum error correction involves encoding qubits into large arrays where errors can be detected and corrected faster than they accumulate. It’s difficult because decoding syndrome data to identify error patterns is computationally intensive, requires extremely low latency, and the training data requirements grow exponentially as code size increases.

What are the main limitations of using AI for quantum computing?

AI models remain fundamentally classical and cannot escape the exponential overhead of simulating large quantum systems. They generalize poorly when real hardware deviates from training conditions, require enormous amounts of training data for error correction at scale, and lack rigorous statistical guarantees for precision-sensitive applications.

Which companies and institutions contributed to this research?

The 28-author research team included contributors from NVIDIA, the University of Oxford, the University of Toronto, Quantum Motion, Perimeter Institute, the University of Waterloo, Qubit Pharmaceuticals, NASA Ames, and other institutions. The paper was published in Nature Communications.