Insider Brief

- Harvard researchers have demonstrated advanced error-correction techniques in quantum circuits, significantly improving scalability and reliability of qubit systems, according to the Harvard Gazette.

- The team combined multiple error-correction layers to suppress errors below a critical threshold, enabling qubits to maintain coherence over deeper quantum circuits.

- The research uses neutral rubidium atoms and adds to ongoing efforts worldwide to develop large-scale, fault-tolerant quantum computers.

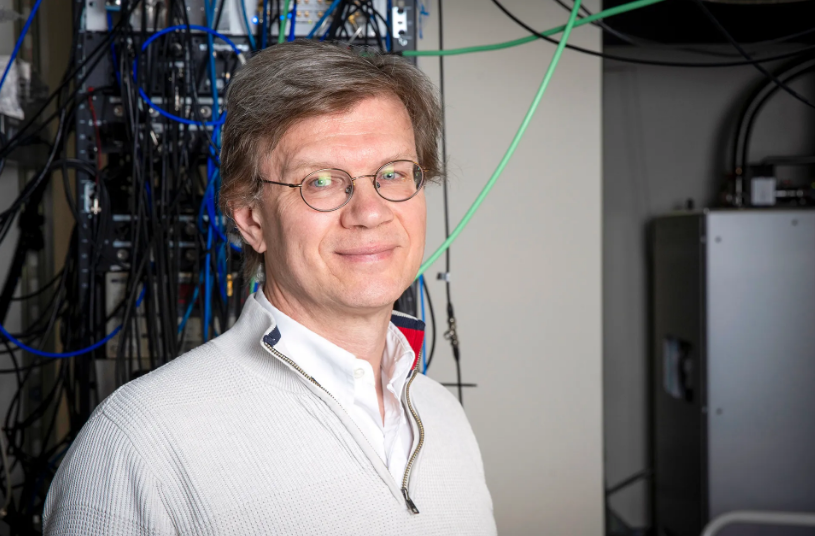

Photo by Niles Singer, Harvard Staff Photographer

Harvard researchers have made significant progress toward scalable quantum computing by demonstrating advanced error-correction techniques, according to the Harvard Gazette. Their new work focuses on creating complex quantum circuits with multiple layers of error correction, suppressing errors below a critical threshold where adding qubits improves, rather than worsens, system reliability.
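The threshold idea can be illustrated with a deliberately simple classical stand-in. The sketch below is not the Harvard team's scheme: it assumes a toy repetition code in which one bit is copied `d` times, each copy flips independently with probability `p`, and a majority vote decodes the result. The function name `logical_error_rate` and the chosen values of `p` and `d` are hypothetical, picked only for illustration. In this toy model the threshold sits at `p = 0.5`.

```python
from math import comb


def logical_error_rate(p: float, d: int) -> float:
    """Chance that a majority vote over d noisy copies gives the wrong bit.

    Toy model (an assumption, not the scheme in the article): each of the
    d copies flips independently with probability p, and decoding fails
    when more than half of the copies have flipped.
    """
    if d % 2 == 0:
        raise ValueError("d must be odd so the majority vote cannot tie")
    # Sum the binomial probabilities of (d + 1) / 2 or more flips.
    return sum(
        comb(d, k) * p**k * (1 - p) ** (d - k)
        for k in range((d + 1) // 2, d + 1)
    )


# Below the toy threshold (p = 0.01) more copies help;
# above it (p = 0.6) more copies make things worse.
for p in (0.01, 0.6):
    rates = [logical_error_rate(p, d) for d in (1, 3, 5, 7)]
    print(f"p={p}: " + ", ".join(f"{r:.3e}" for r in rates))
```

Running the loop shows the two regimes side by side: with a small physical error rate the failure probability shrinks as copies are added, while with a large one it grows. Real quantum codes have much lower thresholds and more involved decoders, but the qualitative behavior is the same.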

Conventional computers encode information in bits (zeros and ones), while quantum computers use qubits: quantum systems, such as individual atoms, that exploit phenomena such as superposition and entanglement. Unlike with classical bits, each added qubit doubles the number of states the system can represent at once, so the state space grows exponentially with the number of qubits. In theory, a register of 300 qubits spans more states (2 to the power of 300) than there are particles in the known universe. This massive computational potential could impact fields including drug discovery, cryptography, AI, finance, and materials science.
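The 300-qubit comparison is easy to check with exact integer arithmetic. The sketch below assumes the commonly quoted order-of-magnitude estimate of 10^80 particles in the observable universe, a figure that does not come from the article. The names `state_count` and `PARTICLE_ESTIMATE` are hypothetical.

```python
def state_count(n_qubits: int) -> int:
    """Number of basis states spanned by n_qubits qubits (2 ** n)."""
    return 2**n_qubits


# Assumption: a commonly cited order-of-magnitude estimate, not a
# figure taken from the article.
PARTICLE_ESTIMATE = 10**80

# Each added qubit doubles the number of basis states.
print(state_count(11) == 2 * state_count(10))

# Compare the 300-qubit state count with the particle estimate.
print(state_count(300) > PARTICLE_ESTIMATE)
print(f"2**300 has {len(str(state_count(300)))} decimal digits")
```

Python integers have arbitrary precision, so the comparison is exact rather than a floating-point approximation.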

But practical quantum computing faces hurdles, chief among them the fragility of qubits. Qubits can lose their state easily, making robust error correction essential. The Harvard team combined multiple methods to construct circuits with dozens of correction layers, targeting scalable, deep-circuit computation.

“There have been many important theoretical proposals for how you should implement error correction. In this paper, we really focused on understanding what are the core mechanisms for enabling scalable, deep-circuit computation. By understanding that, you can essentially remove things that you don’t need, reduce your overheads, and get to a practical regime much faster,” said Alexandra Geim, lead author and Ph.D. student in the Kenneth C. Griffin Graduate School of Arts and Sciences.

Experimental insights guide progress

Years of laboratory experiments helped the team distinguish real technical bottlenecks from those that can be bypassed, explained Mikhail Lukin, senior researcher. “In the end, physics is an experimental science. By realizing and testing these fundamental ideas in a lab, you really start seeing light at the end of the tunnel,” he said.

The Harvard team specializes in neutral rubidium atoms, manipulated with lasers to encode qubits. Globally, researchers are exploring alternative platforms, including ions and superconducting qubits.

“This work represents a significant advance toward our shared goal of building a large-scale, useful quantum computer,” said Hartmut Neven, VP of engineering at Google Quantum AI.

Earlier this year, the Harvard-MIT-QuEra collaboration demonstrated a 3,000-qubit system capable of continuous operation for over two hours, overcoming atom loss – another technical hurdle in scaling quantum devices. With these advances, Lukin notes, “This big dream that many of us had for several decades, for the first time, is really in direct sight.”

Source: Harvard Gazette