Insider Brief

- Researchers from Oak Ridge National Laboratory and North Carolina State University demonstrated a framework that integrates quantum simulators and cloud services with supercomputers to run hybrid quantum-classical applications.

- Benchmarks showed that different backends excel at different tasks, with state-vector, tensor-network, and distributed approaches each offering workload-specific advantages.

- The study highlights that reproducible, backend-agnostic orchestration is key to scaling hybrid quantum-HPC workflows and preparing for practical quantum advantage.

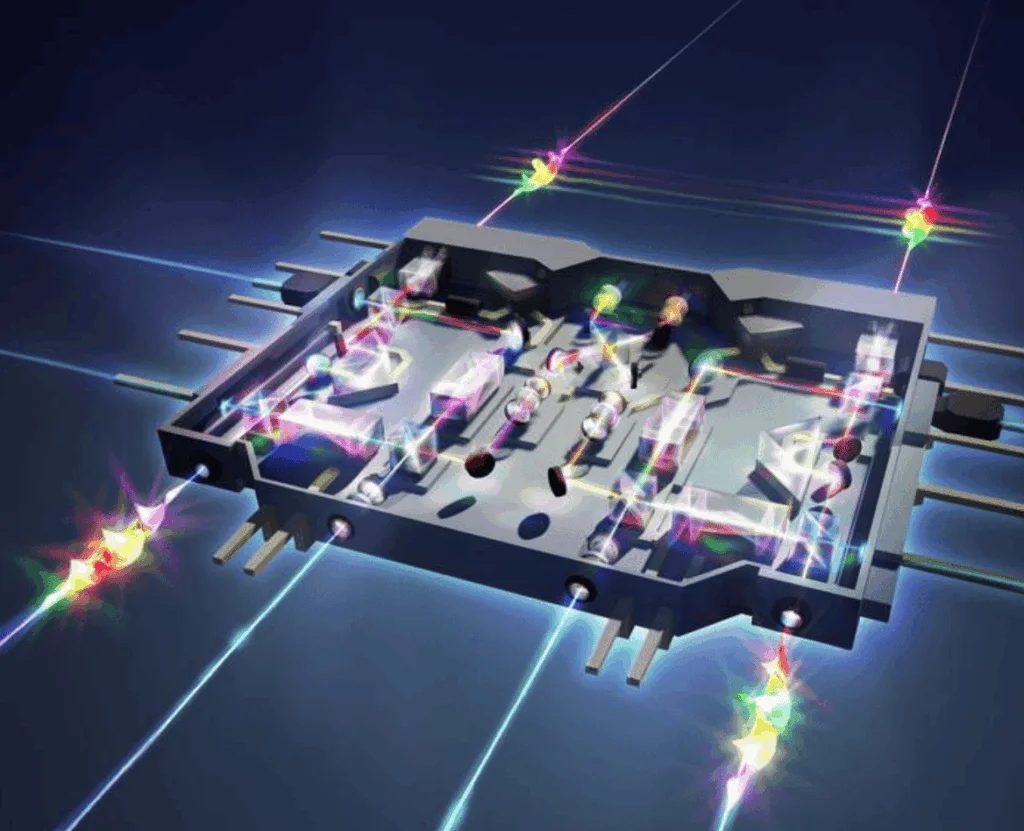

- Image: Carlos Jones/ORNL, U.S. Dept. of Energy

A new study demonstrates that hybrid workflows linking quantum processors with high-performance computers can be scaled across different simulators and hardware, suggesting a practical route to making quantum applications usable at larger sizes.

It could also provide a viable path to quantum advantage, the researchers behind the study point out.

The work, published on arXiv by researchers from Oak Ridge National Laboratory and North Carolina State University, shows that a flexible orchestration layer can balance workloads across classical and quantum systems, providing reproducibility and portability across platforms.

The researchers extended the Quantum Framework (QFw), a modular system originally designed for hybrid computing, to integrate multiple local simulators — including Qiskit Aer, NWQ-Sim, QTensor, and TN-QVM — and a cloud-based backend from IonQ. With this setup, they benchmarked a range of workloads from fixed quantum circuits to variational algorithms such as the Quantum Approximate Optimization Algorithm (QAOA).

Many Roads, No Single Winner

The results show that no single simulator or backend outperforms all others. Instead, each has distinct advantages depending on the structure of the problem. Qiskit Aer’s matrix product state method handled large Ising models efficiently, while NWQ-Sim showed strength in simulating highly entangled states and Hamiltonians. QTensor’s decomposition methods worked better for tree-like circuits but slowed on deeper, more complex ones. The IonQ cloud service performed reliably but incurred latency compared to local runs.

For variational workloads, the study tested distributed versions of QAOA. A QAOA is a method that uses a small quantum circuit, guided by a classical computer, to search for good solutions to tough optimization problems. In this case, the “distributed QAOA” allowed many sub-problems to be executed simultaneously across different backends. The approach significantly reduced computation times, especially when mapped to a supercomputer environment. Fidelity — how closely the results matched expected answers — remained above 95% across tested cases.

The findings point to the need for orchestration tools that can manage both classical and quantum tasks seamlessly. Current quantum processors remain constrained by qubit count and noise, which limit the size of problems they can handle. By combining classical high-performance resources with quantum subroutines, hybrid workflows like those enabled by QFw provide a bridge between today’s limited hardware and future large-scale quantum computing, according to the team.

The researchers emphasize that workload-dependent performance makes it risky to rely on a single backend. Instead, reproducible and portable frameworks allow users to benchmark across platforms, choose the best tool for a given problem, and switch between hardware with minimal code changes.

This portability is expected to become critical as supercomputing centers integrate quantum accelerators into their systems.

Going Under the Hood of QFw

The team deployed QFw on the Frontier test cluster at Oak Ridge, currently one of the world’s most powerful supercomputer. Each node contains 64 AMD EPYC CPU cores and eight AMD Instinct GPUs, according to the paper. They set up heterogeneous job groups — in other words, splitting a supercomputer job into parts that use different types of resources, such as CPUs, GPUs, or quantum simulators — under one coordinated run. The team used SLURM, a workload manager widely used in supercomputing, to allocate separate resources for the application layer and the framework’s orchestration services.

QFw coordinated execution through the Process Management Interface for Exascale, which allowed distributed spawning of quantum tasks across nodes. Applications written in frameworks like Qiskit or PennyLane submitted circuits to QFw, which then dispatched them either to local simulators through MPI or to cloud hardware through REST calls. The modular architecture made it possible to swap backends without rewriting application code.

Benchmarks covered both non-variational tasks — fixed quantum circuits run as-is — such as GHZ entanglement state preparation, Hamiltonian simulation, the transverse-field Ising model and the Harrow-Hassidim-Lloyd linear solver, as well as variational algorithms — adjustable circuits tuned through a quantum-classical feedback loop — like QAOA and its distributed variant.

Problem sizes ranged up to 32 qubits for fixed circuits and optimization problems with up to 40 binary variables for variational cases.

Hitting the Limits

The study acknowledges limits in both scope and resources. For example, tests on the Frontier system were constrained to a smaller test cluster, meaning not all strong-scaling behavior could be observed. Some runs exceeded the two-hour cutoff, particularly for larger circuit depths.

The IonQ integration was limited to its cloud simulator, with real-hardware tests planned for future work.

Performance also varied depending on backend maturity and MPI support. For example, Qiskit Aer, while strong for certain workloads, struggled to benefit from multi-node scaling since it is not natively designed for distributed execution. Very small sub-problem partitions in distributed QAOA incurred overhead from communication and scheduling, reducing efficiency.

These results suggest that scaling strategies must balance sub-problem size with orchestration overhead.

Next Steps

The researchers plan to extend the framework to GPU-accelerated tensor-network methods and to integrate additional hardware providers. Automated workload-driven backend selection is also a priority, so that users can focus on the science rather than choosing execution targets manually. Larger-scale studies combining both high-performance computing resources and multiple quantum hardware platforms are also on the horizon.

Longer term, the work supports the broader vision of hybrid computing in which quantum processors act as accelerators within supercomputing centers. By decoupling application logic from backend execution, QFw provides a testbed for reproducible benchmarking and cross-platform studies, both of which are seen as essential for identifying where genuine quantum advantage may appear.

The researchers argue that addressing the limitations of the system and taking these future research states could lead to practical quantum advantage. They add that quantum advantage will likely come not from isolated devices but from tightly integrated ecosystems where supercomputers and quantum processors work in tandem.

The study was conducted by Srikar Chundury and Frank Mueller of North Carolina State University, along with Amir Shehata, Seongmin Kim, Muralikrishnan Gopalakrishnan Meena, Chao Lu, Kalyana Gottiparthi, Eduardo Antonio Coello Perez, and In-Saeng Suh of Oak Ridge National Laboratory.

For a deeper, more technical dive, please review the paper on arXiv. It’s important to note that arXiv is a pre-print server, which allows researchers to receive quick feedback on their work. However, it is not — nor is this article, itself — official peer-review publications. Peer-review is an important step in the scientific process to verify results.