Insider Brief

- NVIDIA’s CUDA-QX 0.4 release adds new quantum error correction and solver capabilities, including a generative AI-powered algorithm, GPU-accelerated tensor network decoder, and automated detector error model generation.

- The update enhances CUDA-Q’s end-to-end workflow for designing, simulating, and deploying error-correcting codes, with upgrades to tensor network and BP+OSD decoders for flexibility, accuracy, and performance.

- A new Generative Quantum Eigensolver integrates transformer-based AI to design quantum circuits adaptively, aiming to overcome optimization challenges in quantum chemistry and physics simulations.

NVIDIA has added a slate of new tools to its CUDA-QX quantum computing platform aimed at helping researchers tackle one of the biggest barriers to large-scale quantum computers: error correction. The update, announced in the CUDA-QX 0.4 release and discussed in a company blog, includes a generative AI-powered quantum algorithm, a GPU-accelerated tensor network decoder, and automated generation of detector error models from noisy circuits.

The release targets quantum error correction (QEC), a process needed to protect fragile quantum states from noise. NVIDIA said in the blog that QEC represents both the largest opportunity and the most difficult challenge in building commercially viable quantum processors. The company’s CUDA-Q QEC framework is designed to accelerate the entire workflow, from designing error-correcting codes to deploying decoders on physical hardware.

New Tools

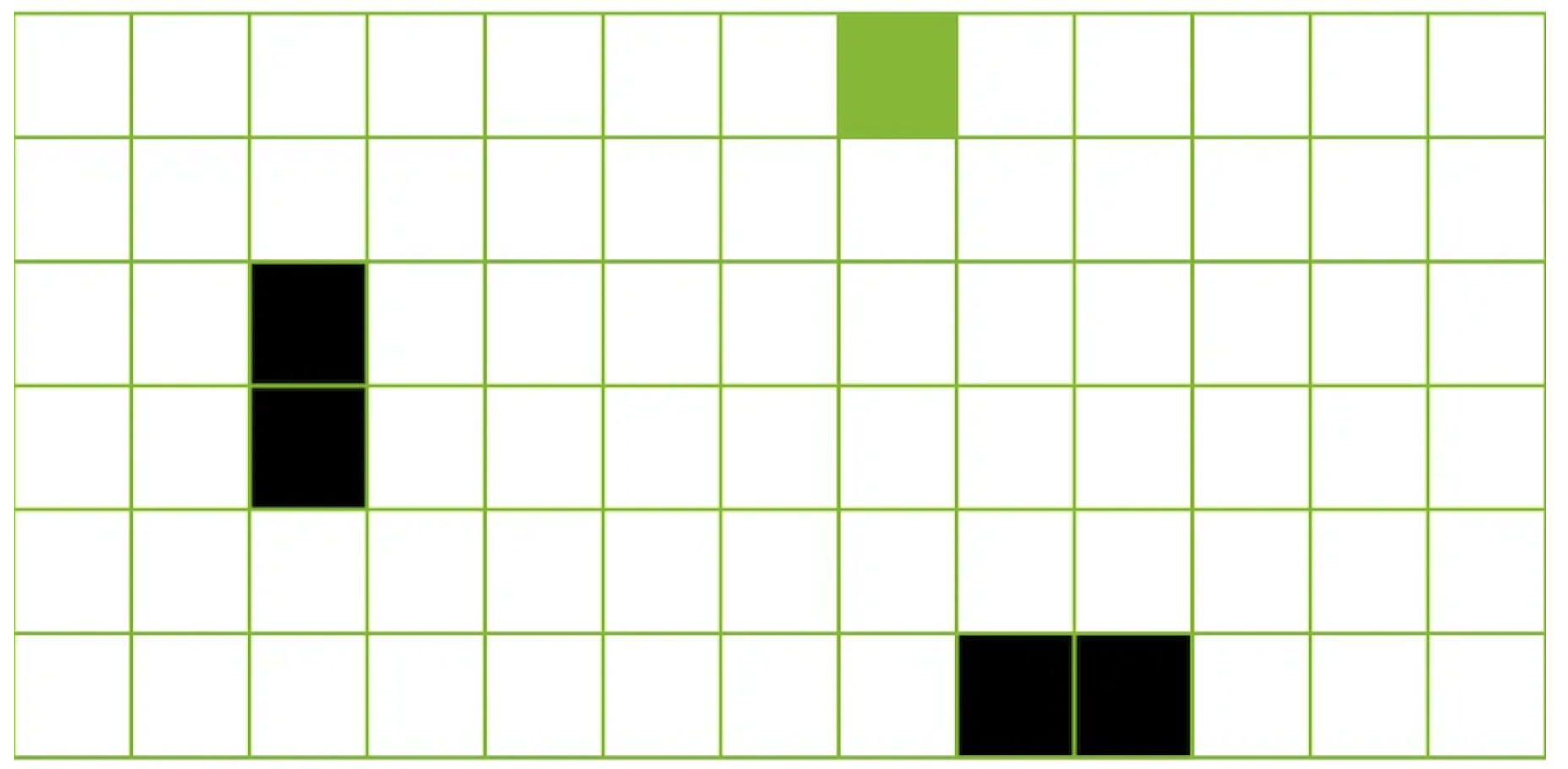

A major addition is the ability to automatically generate a detector error model (DEM) from a quantum memory circuit and its noise model. A DEM is a data structure that lists the independent error mechanisms in a noisy circuit, each with its probability and the detectors (checks derived from stabilizer measurements) it triggers, enabling more realistic simulation and decoding. This feature builds on work in the open-source Stim framework and can now be applied directly within CUDA-Q, streamlining the setup for both simulation and hardware experiments.
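To make the idea of a DEM concrete, here is a minimal, library-free Python sketch. It is not the CUDA-Q QEC or Stim API. The `DEM` list, its field names, the `sample_shot` helper, and the 1% probabilities are all hypothetical, chosen to loosely mirror the `error(p) D... L...` lines of Stim's text format for a three-qubit repetition code with two detectors.

```python
import random

# Hypothetical toy DEM: one entry per independent error mechanism.
# "detectors" are the detector indices the error flips; "observables"
# are the logical observables it flips. All values are illustrative.
DEM = [
    {"p": 0.01, "detectors": {0}, "observables": {0}},       # flip on data qubit 0
    {"p": 0.01, "detectors": {0, 1}, "observables": set()},  # flip on data qubit 1
    {"p": 0.01, "detectors": {1}, "observables": set()},     # flip on data qubit 2
]

def sample_shot(dem, num_detectors, num_observables, rng):
    """Sample one shot: fire each mechanism independently and XOR its effects."""
    detectors = [0] * num_detectors
    observables = [0] * num_observables
    for mechanism in dem:
        if rng.random() < mechanism["p"]:
            for d in mechanism["detectors"]:
                detectors[d] ^= 1
            for o in mechanism["observables"]:
                observables[o] ^= 1
    return detectors, observables

if __name__ == "__main__":
    rng = random.Random(7)
    shots = [sample_shot(DEM, 2, 1, rng) for _ in range(10_000)]
    triggered = sum(1 for dets, _ in shots if any(dets))
    print(f"shots with at least one detector fired: {triggered}")
```

A decoder receives only the detector bits and must guess the observable bits, which is why a model tying the two together is the starting point for both simulation and decoding.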

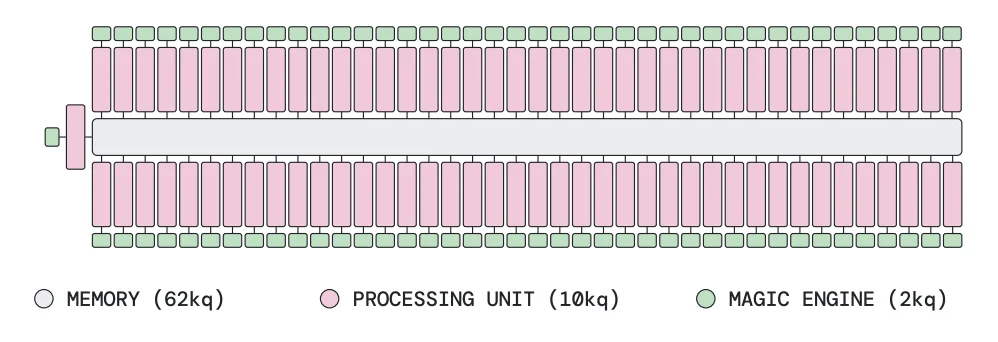

CUDA-QX 0.4 also introduces a tensor network decoder with native Python support. Tensor networks, graph-based representations of quantum states, are valued in research for their exactness and lack of training requirements, making them a benchmark for other decoders. NVIDIA’s implementation uses its cuQuantum GPU libraries to accelerate network contraction and path optimization, achieving performance parity with Google’s tensor network decoders on open benchmark datasets while remaining open-source.

For those using the BP+OSD decoder, the update brings new flexibility and diagnostics, according to the post. Features include adaptive convergence monitoring to reduce computation overhead, message clipping to avoid numerical overflow, and options to switch between sum-product and min-sum algorithms. Researchers can also dynamically scale min-sum factors and log detailed decoding progress to track performance over time.
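The difference between the two message-passing rules, and the role of clipping and scaling, can be sketched in a few lines of plain Python. This is a simplified illustration of a single check-node update on log-likelihood ratios, not NVIDIA's implementation. The function names, the clipping limit of 20, and the `scale` parameter are assumptions made for the example, and the syndrome-dependent sign used in real syndrome decoding is left out.

```python
import math

def clip(llr, limit=20.0):
    """Clamp a log-likelihood ratio so later tanh/atanh calls stay well-behaved."""
    return max(-limit, min(limit, llr))

def check_update_sum_product(incoming, limit=20.0):
    """Sum-product rule: 2 * atanh(product of tanh(m / 2)) over incoming messages."""
    product = 1.0
    for message in incoming:
        product *= math.tanh(clip(message, limit) / 2.0)
    # Keep the argument of atanh strictly inside (-1, 1).
    product = max(-1.0 + 1e-12, min(1.0 - 1e-12, product))
    return clip(2.0 * math.atanh(product), limit)

def check_update_min_sum(incoming, scale=1.0, limit=20.0):
    """Min-sum rule: product of signs times the smallest magnitude, optionally scaled."""
    sign = 1.0
    magnitude = math.inf
    for message in incoming:
        message = clip(message, limit)
        if message < 0:
            sign = -sign
        magnitude = min(magnitude, abs(message))
    return clip(sign * scale * magnitude, limit)

if __name__ == "__main__":
    messages = [1.2, -0.7, 3.0]
    print("sum-product:", check_update_sum_product(messages))
    print("min-sum:", check_update_min_sum(messages))
    print("scaled min-sum:", check_update_min_sum(messages, scale=0.5))
```

Min-sum avoids the `tanh` and `atanh` calls but tends to overstate confidence, which is the usual motivation for a scaling factor below 1.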

Generative AI Meets Quantum Algorithms

On the solver side, NVIDIA has added an implementation of the Generative Quantum Eigensolver (GQE), a hybrid classical–quantum algorithm that replaces the fixed-parameter circuit design of traditional methods with a generative AI model. The model proposes quantum circuits to test against a target Hamiltonian, then adapts based on results. NVIDIA says this approach could avoid common pitfalls in variational quantum algorithms, such as “barren plateaus” where optimization stalls.

The GQE example in CUDA-QX 0.4 is tuned for small-scale simulation but provides a template for integrating generative models into future large-scale quantum chemistry and physics calculations.
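The propose, evaluate, adapt loop behind GQE can be illustrated with a deliberately tiny stand-in. The sketch below is not the CUDA-QX Solvers example and does not use a transformer. It swaps in a table of per-position logits as the "generative model" and a crude policy-gradient-style update as the training step, with a one-qubit Hamiltonian (Pauli Z) and a three-gate operator pool. Every name and hyperparameter here is hypothetical.

```python
import math
import random

# Hypothetical operator pool: real-valued single-qubit gates as 2x2 matrices.
S = 1.0 / math.sqrt(2.0)
POOL = {
    "I": ((1.0, 0.0), (0.0, 1.0)),
    "X": ((0.0, 1.0), (1.0, 0.0)),
    "H": ((S, S), (S, -S)),
}
GATES = list(POOL)

def energy(circuit):
    """Expectation value of Z after applying the gate sequence to |0>."""
    a, b = 1.0, 0.0
    for name in circuit:
        (m00, m01), (m10, m11) = POOL[name]
        a, b = m00 * a + m01 * b, m10 * a + m11 * b
    return a * a - b * b

def sample_circuit(logits, rng):
    """Draw one gate per position with probability proportional to exp(logit)."""
    circuit = []
    for position in logits:
        weights = [math.exp(value) for value in position]
        circuit.append(rng.choices(GATES, weights=weights)[0])
    return tuple(circuit)

def train(length=2, rounds=30, batch=8, lr=0.5, seed=0):
    """Propose circuits, score them, and nudge logits toward lower-energy gates."""
    rng = random.Random(seed)
    logits = [[0.0] * len(GATES) for _ in range(length)]
    best = (math.inf, None)
    for _ in range(rounds):
        circuits = [sample_circuit(logits, rng) for _ in range(batch)]
        energies = [energy(c) for c in circuits]
        baseline = sum(energies) / batch
        for circuit, value in zip(circuits, energies):
            best = min(best, (value, circuit))
            for pos, name in enumerate(circuit):
                # Reward gates that appeared in below-average-energy circuits.
                logits[pos][GATES.index(name)] += lr * (baseline - value)
    return best, logits

if __name__ == "__main__":
    (best_energy, best_circuit), _ = train()
    print("lowest energy found:", best_energy, "with circuit", best_circuit)
```

The point of the toy is the structure of the loop: no circuit parameters are optimized, and all learning happens in the model that chooses which operators to place.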

By consolidating these tools into a GPU-accelerated, API-driven platform, NVIDIA appears to be positioning CUDA-Q as a central environment for quantum error correction research. The company notes in the blog that researchers can design custom codes, simulate them with realistic noise, configure decoders, and deploy on actual quantum processing units without leaving the framework.

For complete information, check out the NVIDIA blog post. For more details on the Solvers implementation of GQE, see the Python API documentation and examples.