Insider Brief

- IBM and Google both claim they can build industrial-scale quantum computers by the end of the decade, shifting the race from theoretical advances to large-scale engineering, according to the Financial Times.

- Scaling quantum systems from fewer than 200 qubits to millions faces major challenges, including qubit instability, interference, manufacturing complexity, and the need for cost-effective error correction.

- Competing designs and qubit technologies — from superconducting to trapped ions, neutral atoms, and photons — are vying for viability, with government funding and new component innovations likely to narrow the field.

The race to build a large-scale quantum computer has moved from theory toward execution, with IBM and Google claiming they can deliver industrial-grade machines by the end of the decade, according to reporting from the Financial Times. Sources suggest that a century-long marathon of incremental physics has now shifted into an engineering sprint that could determine the technology’s first real champions.

The shift marks a change in tone from decades of speculation about quantum computers. These devices — long seen as a “holy grail” for tackling problems in materials science, cryptography and artificial intelligence — rely on qubits, which can store and process information in ways that classical bits cannot. Scaling from the fewer-than-200-qubit prototypes seen today to the million-qubit threshold most experts believe is needed for practical use has been one of the field’s hardest problems.

IBM and Google Set Timelines

IBM released a new blueprint in June that it says fills in missing pieces from earlier designs, putting it on a path to a workable system before 2030. Google, which last year cleared what it called one of the most difficult remaining hurdles, has set the same target. Both companies say the most fundamental physics problems have been solved, leaving mainly engineering challenges to reach scale.

Not everyone is convinced. Amazon Web Services is less bullish. Oskar Painter, head of AWS quantum hardware, told the FT that while the remaining hurdles are less daunting than the fundamental physics, they remain formidable. He estimated 15 to 30 years before the arrival of a “useful” quantum computer.

Scaling Obstacles

Increasing the number of qubits is not as simple as stacking more onto a chip. Qubits are inherently unstable and susceptible to any type of environmental disturbance or noise, making it vexingly difficult to maintain their delicate quantum states for more than fractions of a second. As more qubits are added, interference grows, sometimes to the point where systems become unmanageable.

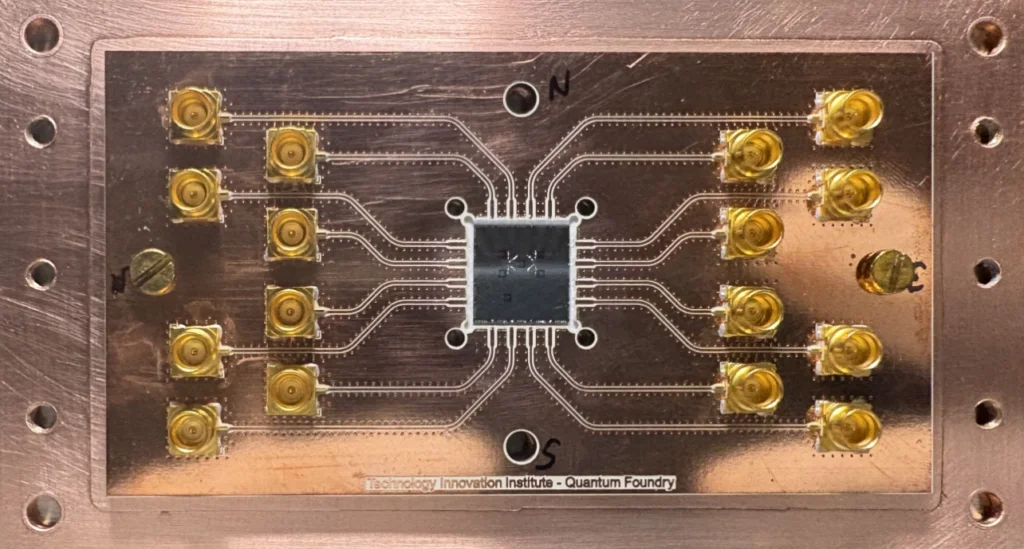

IBM encountered the problem in its Condor chip, which used 1,121 qubits and suffered from crosstalk between components. Rigetti Computing, another U.S. quantum start-up, told the FT that scaling with today’s superconducting qubits creates uncontrollable effects. IBM says it anticipated this and has switched to a new type of coupler to reduce interference.

At small scales, qubits are often tuned individually for performance. This becomes impractical at large scale, requiring new manufacturing methods, more reliable materials, and cheaper components. Google aims to cut component costs tenfold to keep a full machine near $1 billion.

Error Correction Strategies Diverge

Error correction — creating redundancy by linking multiple qubits so failures in one can be compensated by others — is central to making large systems useful. Google is the only company to have demonstrated a chip where error correction improves as the system grows. Its preferred method, known as surface code, organizes qubits into a two-dimensional grid, with each linked to its nearest neighbors. This requires millions of physical qubits before the system can run useful workloads.
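The redundancy idea can be illustrated with a classical stand-in. The sketch below is a toy repetition code with majority voting, not the surface code and not any company's method; the function name and the error rates are hypothetical choices for illustration. It computes how often a majority vote over n independently noisy copies returns the wrong answer.

```python
from math import comb


def logical_error_rate(n: int, p: float) -> float:
    """Probability that a majority vote over n copies is wrong,
    assuming each copy flips independently with probability p."""
    if n % 2 == 0:
        raise ValueError("use an odd number of copies so the vote cannot tie")
    # The vote fails when more than half of the copies have flipped.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


if __name__ == "__main__":
    # Hypothetical per-copy error rates: one low, one above one half.
    for p in (0.01, 0.6):
        for n in (1, 3, 5, 7):
            print(f"p={p} copies={n} logical error={logical_error_rate(n, p):.6g}")
```

In this toy model, adding copies helps only when each copy is wrong less than half the time; above that point, more copies make the vote worse. Quantum codes tolerate much smaller error rates than this classical analogy, but the same qualitative pattern is what it means for error correction to improve as the system grows.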

IBM is pursuing a different error-correction code, called low-density parity-check (LDPC), which it says will cut qubit requirements by up to 90 percent. LDPC relies on longer-range connections between qubits, which are known to be difficult to achieve in superconducting systems. Google has argued that this adds unnecessary complexity, while IBM claims to have recently achieved the longer links needed.
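A rough back-of-the-envelope sketch shows how these overhead claims translate into qubit counts. It assumes the standard rotated surface-code layout, in which one logical qubit at code distance d uses d² data qubits plus d² − 1 measurement qubits; the logical-qubit count and distance below are hypothetical, and the 90 percent figure is simply IBM's stated upper bound applied as-is, not a model of any actual LDPC code.

```python
def surface_code_physical_qubits(distance: int) -> int:
    """Physical qubits in one rotated surface-code patch:
    distance**2 data qubits plus distance**2 - 1 measurement qubits."""
    return 2 * distance * distance - 1


def total_physical_qubits(logical_qubits: int, distance: int) -> int:
    """Total physical qubits for a machine built from identical patches."""
    return logical_qubits * surface_code_physical_qubits(distance)


def apply_reduction(physical_qubits: int, reduction_percent: int) -> int:
    """Qubit count left after a claimed percentage reduction (integer math)."""
    return physical_qubits * (100 - reduction_percent) // 100


if __name__ == "__main__":
    LOGICAL_QUBITS = 1000  # hypothetical machine size, for illustration only
    DISTANCE = 25          # hypothetical code distance, for illustration only
    CLAIMED_REDUCTION = 90  # "up to 90 percent", per IBM's claim in the article

    baseline = total_physical_qubits(LOGICAL_QUBITS, DISTANCE)
    reduced = apply_reduction(baseline, CLAIMED_REDUCTION)
    print(f"surface-code estimate: {baseline:,} physical qubits")
    print(f"with the claimed reduction: {reduced:,} physical qubits")
```

With these illustrative inputs the surface-code estimate lands above one million physical qubits, which is consistent with the scale described in the article; different assumptions about distance or logical-qubit count would move the figure substantially.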

Analysts told the FT that IBM’s design could work in theory but has yet to be proven in manufacturing.

Engineering the Whole System

Beyond the qubits themselves, companies told the FT that scaling may require a complete rethinking of the quantum architecture. Early superconducting systems are wired together in dense bundles, a setup impossible to replicate at million-qubit scale. The leading approach involves integrating many components onto single chips, then connecting those chips into modules. The modules will need larger, more sophisticated refrigerators capable of operating near absolute zero.

The choice of qubit technology could determine which companies can scale fastest. Superconducting qubits, used by IBM and Google, have seen the largest practical gains but are difficult to control and require ultra-cold temperatures. Other approaches — such as trapped ions, neutral atoms, and photons — are more stable but have their own scaling barriers, including slower computation and difficulty linking multiple clusters into a single system.

Sebastian Weidt, CEO of U.K.-based Universal Quantum, told the FT that government funding choices will shape which technologies survive. The U.S. Defense Advanced Research Projects Agency (DARPA), for example, is already studying the field to identify candidates that could be expanded quickly.

New Qubit Designs Emerge

Some companies are betting on radically different designs. Amazon and Microsoft have announced work on qubits derived from exotic states of matter that could yield more stable components. These designs are far earlier in development but could, if successful, leapfrog current leaders.

Meanwhile, established techniques remain the focus for companies that have invested years in their development. Analysts speculate that the sheer cost and difficulty of scaling will narrow the field, leaving only well-funded players — or those with government backing — in contention.

High Stakes for Industry

A large-scale quantum computer could deliver exponential speedups in certain calculations, enabling rapid design of new materials, optimization of complex systems, and breaking of encryption schemes. That potential has drawn billions in private and public investment worldwide, even as practical use remains years away.

Still, optimism seems to be surging.

“It doesn’t feel like a dream anymore,” Jay Gambetta, head of IBM’s quantum initiative, told the FT. “I really do feel like we’ve cracked the code and we’ll be able to build this machine by the end of the decade.”