Insider Brief

- A new study proposes a detailed and experimentally grounded blueprint for building a scalable, fault-tolerant photonic quantum computer using deterministic quantum dot emitters.

- The design integrates time-bin encoded photons, adaptive fusion gates, and a low-depth architecture that reduces optical complexity and enables real-time error correction.

- Simulations show the system meets key fault-tolerance thresholds under realistic noise conditions, though achieving necessary performance benchmarks in quantum dot hardware remains a central challenge.

A new study lays out a comprehensive and practical blueprint for building a scalable photonic quantum computer using deterministic quantum dot emitters, offering a potentially lower-depth, fault-tolerant architecture that bypasses many of the barriers that have hindered the field.

The research, conducted by scientists from the University of Copenhagen, Sparrow Quantum and the University of Bristol, addresses longstanding limitations in photonic quantum computing such as photon loss, inefficient entangled state generation and the complexity of large-scale optical circuits. Their proposal, posted on the pre-print server arXiv, leverages advances in deterministic entangled-photon sources, fusion-based computing models and real-time error correction to construct a time-efficient system that is both experimentally feasible and scalable.

Fusion-based Quantum Computing

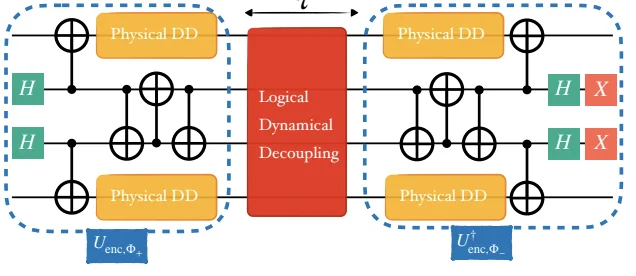

The blueprint centers around a fusion-based quantum computing (FBQC) model, which processes information by performing entangling measurements on small entangled resource states. Unlike traditional approaches that rely on probabilistic photon generation, this system uses deterministic quantum dot-based emitters that can reliably produce high-quality entangled photons on demand. The photons are encoded in time bins and routed through a low-depth, modular optical network that significantly reduces optical loss and hardware overhead.

To address error correction, the architecture constructs a synchronous foliated Floquet color code (sFFCC) lattice, essentially a 3D grid of entangled photons that can detect and correct errors in real time. The system includes adaptive “repeat-until-success” (RUS) fusion gates that dynamically retry entangling operations until a successful measurement is achieved or a maximum number of attempts is reached.
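To make the repeat-until-success idea concrete, the following is a minimal sketch, not the paper's model. It assumes each fusion attempt succeeds independently with a fixed probability and ignores photon loss and other noise. The function names and the per-attempt probability of 0.5 used in the note below are illustrative assumptions.

```python
def rus_success_probability(p_success: float, max_attempts: int) -> float:
    """Probability that at least one of up to max_attempts fusion attempts succeeds.

    Assumes independent attempts with identical success probability
    (a simplification; real attempts are also affected by photon loss).
    """
    return 1.0 - (1.0 - p_success) ** max_attempts


def rus_expected_attempts(p_success: float, max_attempts: int) -> float:
    """Average number of attempts used before stopping (success or give-up)."""
    if p_success == 0.0:
        # Every attempt fails, so the gate always uses all of its attempts.
        return float(max_attempts)
    return rus_success_probability(p_success, max_attempts) / p_success
```

Under these assumptions, a gate allowed three attempts at a per-attempt probability of 0.5 would succeed 87.5% of the time and use 1.75 attempts on average. Raising the attempt cap improves the success rate but lengthens the worst-case gate time.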

The researchers also simulate a full range of noise models, including photon loss, spin decoherence and distinguishability errors. These simulations confirm that the proposed system meets key fault-tolerance thresholds under realistic experimental conditions, particularly those found in semiconductor quantum dot platforms.

Establishing a Roadmap

According to the researchers, the study represents a bridge from theory to experiment in photonic quantum computing by offering a credible roadmap to building systems that can achieve fault tolerance with fewer physical resources and lower optical complexity. The architecture’s reliance on time-bin encoding and minimal optical depth makes it especially attractive for integration into existing photonic and semiconductor manufacturing platforms.

Notably, the researchers provide the detailed timing constraints, hardware specifications and experimental benchmarks needed for implementation. These include estimates that a logical clock cycle, representing one round of error correction, can be completed in microseconds and scales linearly with code distance. For instance, according to the study, with a small error-correcting code size (L=3), the system uses only five active phase shifters and up to eight passive beamsplitters per photon, minimizing the potential for loss or error.

Methods — Three Primary Components

The system design is broken into three primary components:

- Entangled-Photon Sources (EPS): These are based on quantum dots embedded in photonic-crystal waveguides. Each source emits a chain of time-bin encoded photons entangled with its electron spin state using carefully timed laser pulses and spin rotations. This setup allows on-demand generation of resource states required for computation.

- Fusion Measurement Circuits: Photons emitted from EPS units are routed through optical switches and variable beam splitters into fusion gates that attempt to entangle pairs of photons. The circuit supports adaptive operations based on detection outcomes, which are analyzed and used to reconfigure future fusion attempts in real time.

- Classical Control Unit: A classical processing system coordinates photon detection, fusion success or failure and control signals to the EPS units. This feedback loop enables the repeat-until-success fusion operations and keeps the system synchronized across many emitters and optical paths.
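
The interplay of the three components can be sketched as a toy feedback loop. This is an illustrative sketch under strong simplifications, not the researchers' control software. The class names, the string labels standing in for photons and the single success probability are all hypothetical, and no timing, routing or loss is modeled.

```python
import random
from dataclasses import dataclass, field


@dataclass
class EntangledPhotonSource:
    """Hypothetical stand-in for one quantum dot emitter (EPS)."""
    name: str
    photons_emitted: int = 0

    def emit_photon(self) -> str:
        # A string label stands in for a time-bin encoded photon.
        self.photons_emitted += 1
        return f"{self.name}-photon-{self.photons_emitted}"


@dataclass
class FusionCircuit:
    """Hypothetical fusion gate that succeeds with a fixed probability."""
    p_success: float
    rng: random.Random

    def measure(self, photon_a: str, photon_b: str) -> bool:
        # Detection outcome: True means the fusion attempt succeeded.
        return self.rng.random() < self.p_success


@dataclass
class ControlUnit:
    """Classical controller: reads outcomes and decides whether to retry."""
    max_attempts: int
    log: list = field(default_factory=list)

    def run_fusion(self, eps_a: EntangledPhotonSource,
                   eps_b: EntangledPhotonSource,
                   circuit: FusionCircuit) -> bool:
        for attempt in range(1, self.max_attempts + 1):
            photon_a = eps_a.emit_photon()   # control signal to source A
            photon_b = eps_b.emit_photon()   # control signal to source B
            ok = circuit.measure(photon_a, photon_b)
            self.log.append((attempt, photon_a, photon_b, ok))
            if ok:
                return True                  # stop retrying on success
        return False                         # attempt budget exhausted
```

In this sketch the controller is the only component that sees measurement outcomes, which mirrors the feedback role described above: each source emits only when instructed, and the retry decision is made classically between attempts.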

The researchers derive exact inequalities and timing requirements for every component, including detector deadtime, pulse repetition rate and phase shifter switching speed. They reference state-of-the-art devices in quantum dot research to show that existing technology is approaching the necessary performance thresholds.

Limitations

While the researchers are optimistic about success, the study does identify some limitations and areas for future work. For example, the blueprint relies heavily on the further maturation of quantum dot hardware. Many components, such as electro-optic modulators, single-photon detectors with high number-resolving capability and low-loss optical paths, must operate near peak performance simultaneously. Achieving high optical cyclicity and long spin coherence times also remains challenging. The researchers specify a required spin coherence time of more than 12 microseconds and a photon indistinguishability exceeding 96%, benchmarks that are at or just beyond current experimental capabilities.
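
As a small illustration of how the two quoted benchmarks might be checked against a device datasheet, here is a hedged sketch. The thresholds come from the figures reported above. The `EmitterSpec` type, the function name and any device values passed to it are hypothetical, and the paper's full set of requirements is broader than these two numbers.

```python
from dataclasses import dataclass

# Thresholds as reported in the article (both are strict lower bounds).
REQUIRED_SPIN_COHERENCE_US = 12.0      # spin coherence time, microseconds
REQUIRED_INDISTINGUISHABILITY = 0.96   # photon indistinguishability, fraction


@dataclass(frozen=True)
class EmitterSpec:
    """Hypothetical summary of a measured quantum dot emitter."""
    spin_coherence_us: float
    indistinguishability: float


def unmet_benchmarks(spec: EmitterSpec) -> list[str]:
    """Return the names of the benchmarks that the device does not exceed."""
    unmet = []
    if not spec.spin_coherence_us > REQUIRED_SPIN_COHERENCE_US:
        unmet.append("spin coherence time")
    if not spec.indistinguishability > REQUIRED_INDISTINGUISHABILITY:
        unmet.append("photon indistinguishability")
    return unmet
```

An empty list means both quoted benchmarks are exceeded. A device sitting exactly at 12 microseconds or 96% is reported as falling short, because the article states the requirements as "more than" and "exceeding".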

Another limitation is that while RUS fusion significantly improves loss tolerance, it introduces system complexity due to the need for real-time feedback and fast reconfiguration. The coordination between photon generation, routing and detection must happen within strict nanosecond-scale timing windows, pushing the limits of current control electronics and integration.

Future Directions — Improving Performance, Boosting Integration

The team outlines several areas for future research, including improving the optical cyclicity of quantum dots to reduce branching errors, enhancing spin coherence through better nuclear spin control and refining the fusion gates to further suppress loss and distinguishability errors. They also propose hardware integration strategies, such as combining EPS and fusion circuits onto a single chip and using lithium niobate or silicon nitride photonics to reduce loss.

The researchers hope their blueprint will serve as a guide for experimental teams working to move photonic quantum computers from theoretical models to laboratory prototypes and eventually to full-scale machines. With much of the equipment needed for the advance already catalogued and used in the field, they argue that by tailoring the architecture to the strengths and constraints of quantum dots, and by rigorously simulating realistic error channels, their design brings fault-tolerant photonic quantum computing within practical reach.

“Our blueprint builds upon components that have already been experimentally demonstrated and thoroughly characterised, providing a clear roadmap toward the realisation of a functional logical qubit in photonic quantum computing,” the researchers write. “With the remaining areas for improvement now well defined, efforts may gradually begin to shift from primarily fundamental research toward a focus on targeted engineering development.”

For a deeper, more technical dive, please review the paper on arXiv. It’s important to note that arXiv is a pre-print server, which allows researchers to receive quick feedback on their work. However, neither the pre-print nor this article is an official peer-reviewed publication. Peer review is an important step in the scientific process to verify the work.

The study was authored by Ming Lai Chan, Peter Lodahl, Anders Søndberg Sørensen and Stefano Paesani of the University of Copenhagen, and Aliki Anna Capatos of the University of Bristol. Chan is also affiliated with Sparrow Quantum, and Paesani is also affiliated with the University of Bristol.