Insider Brief

- A new study in Nature Reviews Physics proposes multidimensional photonic computing as a scalable, energy-efficient alternative to conventional electronics for managing AI’s growing computational demands.

- The approach encodes data across multiple independent properties of light—such as wavelength, polarization, and spatial mode—to enable highly parallel processing with reduced latency.

- The researchers outline a hybrid roadmap combining classical photonic computing and quantum photonic systems, emphasizing neuromorphic architectures and current technical challenges like nonlinearity and opto-electronic interfaces.

In a new study published in Nature Reviews Physics, a team of researchers suggests that the future of computing may lie not in silicon chips but in beams of light. Rather than relying on conventional optical systems, they propose an emerging framework called “multidimensional photonic computing,” which encodes and processes information across multiple properties of light simultaneously.

The international team, led by scientists from the University of Münster and Heidelberg University, outlines how this approach could enable faster, denser, and more energy-efficient data processing, potentially transforming how machines manage the exploding demands of artificial intelligence and machine learning.

According to the researchers, multidimensional photonic computing uses independent characteristics of light — such as wavelength, polarization, phase, and spatial mode — to run many operations in parallel. This design sidesteps the physical limits of traditional electronics and opens a path to greater scalability.

The study draws a distinction between two emerging approaches: classical photonic computing, which is optimized for high-throughput data tasks, and quantum photonic computing, which is better suited for complex problems that outstrip the capacity of conventional systems.

“The complementary nature of these approaches suggests a promising path towards multidimensional, neuromorphic photonic quantum computing as a flexible and efficient architecture to meet future computational demands,” the researchers write in the study.

AI’s Growing Appetite for Compute

According to the researchers, a more efficient approach is especially needed now, as computing faces one of its biggest challenges: soaring demand from compute-hungry artificial intelligence and a dwindling supply of computational resources able to meet it.

The team reports that current AI models like ChatGPT and Gemini are already straining traditional hardware. The sheer volume of data needed to train and run these systems is surpassing what conventional processors, even GPUs and TPUs, can handle efficiently. The energy cost is also escalating. According to prior studies cited in the paper, training state-of-the-art AI models now consumes significant amounts of electricity, contributing to carbon emissions and environmental concerns.

The paper frames this problem as a critical bottleneck in computing, one that cannot be solved simply by adding more transistors or building faster chips. What’s needed, the authors argue, is a fundamental shift in how data is represented and processed.

From Electrons to Photons

While electrons are the basis of most conventional electronics, photons — the particles of light — can move faster, carry more information, and don’t heat up circuits in the same way. In photonic computing, data is encoded in light waves and manipulated through optical components. But the real advance discussed in this paper is leveraging multiple “orthogonal degrees of freedom” simultaneously.

Orthogonal properties, like different colors of light (wavelengths), polarization angles, or spatial paths, don’t interfere with each other. This allows engineers to process many signals in parallel without crosstalk, the interference between signals that are supposed to stay separate. An easy way to picture crosstalk is a conversation at a crowded party: while you talk to the person next to you, fragments of the conversation at the table behind you leak in. That unwanted overlap is crosstalk.
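The way orthogonal channels share one beam without crosstalk can be illustrated numerically. The following is a minimal sketch, not the researchers’ method: it stands in for wavelength multiplexing by placing each data value on a complex tone, summing the tones into one shared signal, and reading each channel back by projection. The function names, the sample count and the data values are illustrative assumptions. Tones that complete a whole number of cycles in the window are orthogonal, so each value is recovered cleanly; shifting one tone to a non-integer frequency breaks orthogonality and lets it leak into its neighbor.

```python
import cmath
import math

N = 64  # samples per symbol window (illustrative choice)

def carrier(freq, n_samples=N):
    """Complex tone that completes `freq` cycles over the window."""
    return [cmath.exp(2j * math.pi * freq * n / n_samples) for n in range(n_samples)]

def multiplex(amplitudes, freqs):
    """Sum every channel onto one shared signal (one 'beam')."""
    signal = [0j] * N
    for amp, freq in zip(amplitudes, freqs):
        tone = carrier(freq)
        signal = [s + amp * t for s, t in zip(signal, tone)]
    return signal

def demultiplex(signal, freq):
    """Read one channel by projecting the shared signal onto its carrier."""
    tone = carrier(freq)
    return sum(s * t.conjugate() for s, t in zip(signal, tone)) / N

data = [0.2, 0.9, 0.5]  # hypothetical values, one per channel

# Integer frequencies: mutually orthogonal carriers, no crosstalk.
clean_freqs = [3, 4, 5]
clean_signal = multiplex(data, clean_freqs)
clean_errors = [abs(demultiplex(clean_signal, f) - d) for f, d in zip(clean_freqs, data)]

# Moving the middle carrier to 3.5 cycles makes it overlap with channel 0.
leaky_freqs = [3, 3.5, 5]
leaky_signal = multiplex(data, leaky_freqs)
leaky_errors = [abs(demultiplex(leaky_signal, f) - d) for f, d in zip(leaky_freqs, data)]

print("readout error, orthogonal carriers:", clean_errors)
print("readout error, overlapping carriers:", leaky_errors)
```

In the orthogonal case the readout errors are at the level of floating-point rounding, while the overlapping case shows a large error on the first channel, which is the numerical counterpart of overhearing the next table.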

In practice, tapping light’s orthogonal properties successfully could mean encoding dozens or even hundreds of separate data streams on a single beam of light, allowing for far more calculations to happen at once.

The team outlines several well-established multiplexing methods already used in telecommunications — such as time-division, frequency-division, spatial mode, and polarization multiplexing — and suggests that combining them within computing systems could exponentially boost processing speed, or throughput.

“Computing platforms that process data using multiple, orthogonal dimensions can achieve exponential scaling on trajectories much steeper than what is possible with conventional strategies,” the researchers write in the paper. “One promising analog platform is photonics, which makes use of the physics of light, such as sensitivity to material properties and ability to encode information across multiple degrees of freedom. With recent breakthroughs in integrated photonic hardware and control, large-scale photonic systems have become a practical and timely solution for data-intensive, real-time computational tasks.”
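The scaling argument comes down to multiplication: if the degrees of freedom are independent, the number of parallel channels is the product of the channel counts in each dimension, so every added dimension multiplies capacity rather than adding to it. The short sketch below works through that arithmetic. The channel counts are hypothetical placeholders chosen for illustration and are not figures from the study.

```python
import math

# Hypothetical channel counts per degree of freedom (not taken from the study).
channels = {
    "wavelength": 16,
    "polarization": 2,
    "spatial_mode": 4,
    "time_slot": 8,
}

def total_parallel_channels(per_dimension):
    """Independent dimensions combine multiplicatively."""
    return math.prod(per_dimension.values())

total = total_parallel_channels(channels)

# Show how capacity grows as each dimension is added.
running = 1
for name, count in channels.items():
    running *= count
    print(f"after adding {name:<12} -> {running} parallel channels")
```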

Building the Photonic Toolbox

The study catalogs a wide array of hardware demonstrations already underway. These include convolutional neural networks operating at terabit-per-second speeds, all-optical accelerators and integrated chips combining both analog and digital interfaces. For instance, one system achieved over 11 tera-operations per second (TOPS) by combining time, wavelength and spatial multiplexing. Another reached over 217 TOPS using on-chip diffractive optics for vision and audio tasks.

Most of these systems use analog computation, in which light’s physical properties represent data as continuous values rather than as binary ones and zeros, and are especially well-suited to “neuromorphic” designs. These brain-inspired architectures place memory and computation together, mimicking how biological neurons work. Because the computation happens as light passes through the circuit, and data does not have to shuttle between separate memory and processing units, such designs can drastically reduce latency.

To bridge analog and digital systems, the paper highlights hybrid photonic–electronic chips that use analog light signals for computation and electronic circuits for readout and control. These systems have achieved energy efficiencies up to three orders of magnitude better than traditional chips.
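The division of labor in such a hybrid chip can be sketched in a few lines. This is a toy model under stated assumptions, not a description of any device in the study: the analog stage computes weighted sums (as a bank of weighted optical paths feeding photodetectors might) and adds a small bounded error, and the electronic stage clips and quantizes each result like an analog-to-digital converter. The names `analog_matvec` and `adc_readout`, the noise level, the 8-bit resolution and the weight values are all hypothetical.

```python
import random

def analog_matvec(weights, inputs, noise_amplitude, rng):
    """Analog stage: weighted sums plus a bounded random error on each output."""
    outputs = []
    for row in weights:
        ideal = sum(w * x for w, x in zip(row, inputs))
        outputs.append(ideal + rng.uniform(-noise_amplitude, noise_amplitude))
    return outputs

def adc_readout(value, full_scale, bits):
    """Electronic stage: clip to [0, full_scale] and quantize to 2**bits levels."""
    levels = 2 ** bits - 1
    step = full_scale / levels
    code = min(max(round(value / step), 0), levels)
    return code * step

# Hypothetical transmissions (weights) and input powers, all in [0, 1].
weights = [[0.9, 0.1, 0.5],
           [0.2, 0.7, 0.3]]
inputs = [0.4, 1.0, 0.25]

NOISE = 0.01                      # assumed analog error bound
BITS = 8                          # assumed converter resolution
FULL_SCALE = float(len(inputs))   # largest possible weighted sum

rng = random.Random(0)
exact = [sum(w * x for w, x in zip(row, inputs)) for row in weights]
analog = analog_matvec(weights, inputs, NOISE, rng)
digital = [adc_readout(v, FULL_SCALE, BITS) for v in analog]

# Analog error plus at most half a quantization step.
worst_case_error = NOISE + (FULL_SCALE / (2 ** BITS - 1)) / 2

print("exact  :", exact)
print("readout:", digital)
print("error bound:", worst_case_error)
```

The noise here is uniform and bounded only so that the worst-case error is easy to state; real analog noise would need a statistical treatment. The point of the sketch is that the readout precision, not the arithmetic itself, sets the accuracy of the final digital result.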

A Photonic Quantum Twist

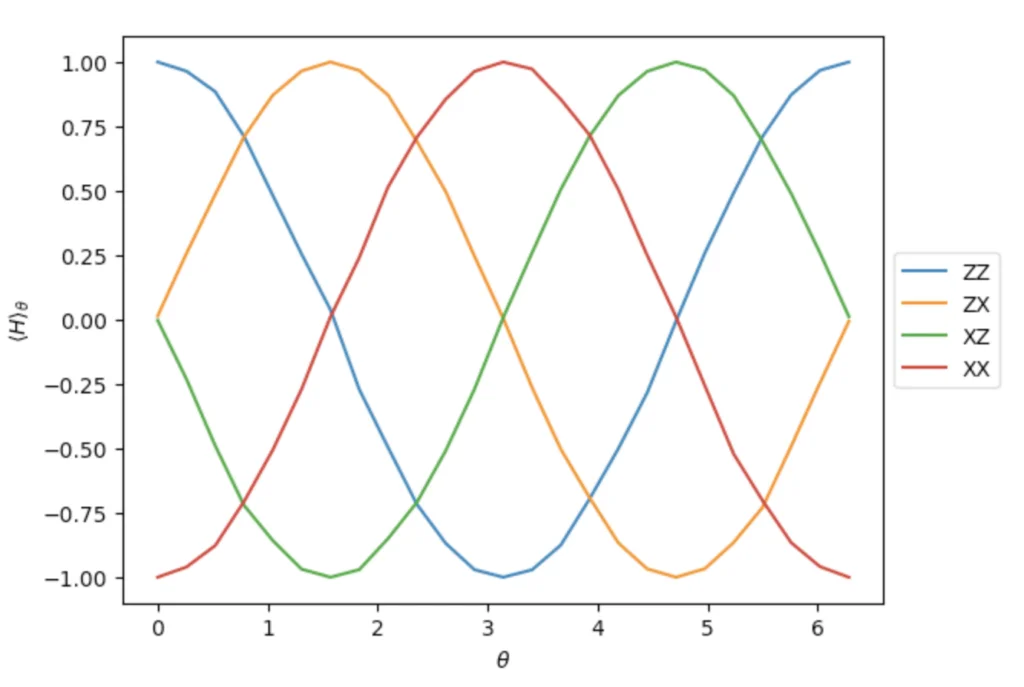

The authors also explore how photons can be used in quantum computing, where quantum bits (qubits) can represent many possible states at once. Photonic quantum systems can encode information in high-dimensional quantum states, enabling them to, in principle, simulate molecules, perform cryptographic tasks, or classify data in ways that are impractical for classical computers.

The team differentiates between two flavors of photonic quantum computing:

- Discrete-variable systems, where data is encoded in distinct photon properties like path or polarization.

- Continuous-variable systems, where data is encoded in continuous wave properties like phase or amplitude.

While discrete-variable systems require exotic hardware like single-photon sources and entangling gates, continuous-variable systems can leverage more mature optical technologies like squeezed light and beam splitters. However, both approaches require nonlinear optical components to achieve full computational power, which is a key challenge researchers are still working to solve.

Limitations and Technical Hurdles

Despite the progress, significant hurdles remain. Chief among them is the “opto-electronic interface,” the connection point between the photonic and electronic domains. Delays or inefficiencies at this junction can undercut the speed and energy benefits of photonic computing.

Nonlinear operations, which are essential for complex tasks like pattern recognition, are also hard to implement in photonics without introducing noise or instability. While researchers have developed some promising materials and architectures, integrating them into scalable chips remains difficult.
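Why nonlinearity matters can be seen in a small worked example. Without a nonlinear step between them, two linear layers collapse into a single linear layer, so stacking more optics adds no expressive power. The sketch below uses a rectifier (ReLU) purely as a familiar stand-in for a nonlinear element; the matrices and input are arbitrary illustrative values, and nothing here models a specific optical device.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def relu(vector):
    """Simple nonlinearity: negative values are set to zero."""
    return [max(0, v) for v in vector]

W1 = [[1, -1],
      [-1, 1]]
W2 = [[1, 1]]
x = [2, 1]

# Two linear layers in sequence ...
two_linear_layers = matvec(W2, matvec(W1, x))
# ... give exactly the same result as one layer with the merged matrix W2*W1.
merged_layer = matvec(matmul(W2, W1), x)

# With a nonlinearity in between, the network computes something
# that no single merged matrix reproduces for this input.
with_nonlinearity = matvec(W2, relu(matvec(W1, x)))

print(two_linear_layers, merged_layer, with_nonlinearity)
```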

Another challenge: while many photonic processors have demonstrated extraordinary speed, most are limited to relatively shallow computations — meaning they’re good at simple tasks but not yet ready to handle deep neural networks or general-purpose workloads without help from digital counterparts.

Each of these challenges marks an open direction for further research in multidimensional photonic computing.

What Comes Next: Hybrid Systems and Scaling Up

The study points to a convergence of classical photonics, neuromorphic computing and quantum architectures. Rather than replacing electronics entirely, photonic systems may work alongside CPUs, GPUs and even future quantum processors.

One path forward involves creating hybrid chips that combine the best of each world: analog speed, digital control and quantum complexity. Early versions have already shown success in solving optimization problems, and the authors suggest this route may eventually lead to full-scale neuromorphic quantum photonic processors.

Another direction is improving integration and manufacturability. The field is still waiting for the kind of standardized, scalable infrastructure that drove the rise of silicon electronics. Until then, photonic systems will likely remain specialized accelerators for demanding applications like machine learning, cryptography, or molecular simulation.

The study is complex and technical; readers who want the detail this summary cannot provide should consult the paper in Nature Reviews Physics.

The study was led by researchers from the University of Münster and Heidelberg University, including Ivonne Bente, Frank Brückerhoff-Plückelmann, Shabnam Taheriniya and Wolfram Pernice. Francesco Lenzini contributed from the Consiglio Nazionale delle Ricerche, Istituto di Fotonica e Nanotecnologie in Milan. Michael Kues is affiliated with Leibniz University Hannover’s Institute for Photonics. Harish Bhaskaran is based at the University of Oxford, and C. David Wright is with the University of Exeter.