Insider Brief

- A joint SpinQ research team developed a machine learning model that can both predict quantum system dynamics and infer hidden parameters, potentially improving calibration and control of quantum hardware.

- The model, based on a Long Short-Term Memory network, demonstrated sub-1% error rates in forecasting and parameter inference across tests on NMR and superconducting quantum platforms.

- The approach showed strong scalability and could aid future efforts in real-time noise tracking and system stability for quantum computing applications.

A research team has developed a machine learning model that can both explain and forecast the behavior of quantum systems, a capability that, if it holds up, could streamline error correction, hardware calibration and algorithm design in quantum computing. The team included scientists from SpinQ, the Hong Kong University of Science and Technology, Shenzhen University and the Quantum Science Center of the Guangdong-Hong Kong-Macao Greater Bay Area.

The study, published in Physical Review Letters, outlines how a type of artificial intelligence (AI) known as a Long Short-Term Memory (LSTM) network was trained to establish a two-way translation between the internal rules of a quantum system, known as Hamiltonian parameters, and the way the system evolves over time. This “bidirectional” capacity means the model can infer unknown quantum properties from observed data and predict future system dynamics from known parameters.
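To make the idea of a two-way mapping concrete, here is a toy sketch in Python. It is not the paper's LSTM. It assumes a single spin precessing at an unknown angular frequency `omega`, so the measured observable is simply cos(omega·t). The forward direction simulates the time series from the parameter; the inverse direction recovers the parameter with a brute-force grid search that stands in for the learned model. The function names, time step and grid are hypothetical.

```python
import math

def forward(omega, dt, steps):
    """Forward map: Hamiltonian parameter -> observable time series.

    Toy model (assumption): a spin-1/2 precessing about z at angular
    frequency omega, starting along +x, so <sigma_x(t)> = cos(omega * t).
    """
    return [math.cos(omega * k * dt) for k in range(steps)]

def inverse(series, dt, omega_grid):
    """Inverse map: observed time series -> best-fitting parameter.

    Stand-in for a learned model: choose the grid value whose simulated
    series has the smallest sum of squared residuals against the data.
    """
    def sse(omega):
        model = forward(omega, dt, len(series))
        return sum((m - s) ** 2 for m, s in zip(model, series))
    return min(omega_grid, key=sse)

if __name__ == "__main__":
    dt, steps = 0.1, 50
    observed = forward(2.5, dt, steps)          # "hidden" parameter is 2.5
    grid = [i * 0.01 for i in range(1, 501)]    # candidate omegas 0.01 .. 5.00
    print(inverse(observed, dt, grid))          # → 2.5
```

A grid search like this becomes expensive quickly as the number of unknown parameters grows, which is one plausible reason a trained model that maps data to parameters directly is attractive; the toy only illustrates what each direction of the mapping means.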

“This achievement provides new approaches for quantum hardware calibration, noise characterization, and algorithm optimization. The results have been validated through experiments on different quantum computing platforms,” researchers from SpinQ, a leading quantum computing company based in China, write in a statement.

The researchers report the model offers a significant advance for the quantum computing field, where even small systems can be difficult to simulate and diagnose due to the complex and fragile nature of quantum states. Traditional methods of analyzing these systems rely either on brute-force simulations or on controlled experiments designed to probe specific features, approaches that often require substantial time and computing resources.

By contrast, the new method uses machine learning to recognize patterns in time-series data. That allows it to estimate hidden variables, such as fluctuating magnetic fields, and forecast how a quantum system will behave.

The model’s main strength, the authors suggest, lies in its ability to move fluently in both directions — forecasting system behavior from known rules and identifying those rules from observed behavior.

Results

The model achieved error rates under 1% both when forecasting system dynamics and when reconstructing system parameters. In test cases, it could infer the time-varying Hamiltonian parameters that govern system behavior solely by tracking how local observables, such as spin orientation, change over time. When the parameters were already known, the model accurately forecast how the system would evolve over 15 time steps.
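The article does not say exactly how the error percentage was computed, so the sketch below assumes one common choice: root-mean-square error normalized by the range of the true signal. It uses the same toy spin as a stand-in for the LSTM, forecasting 15 steps ahead from two observed samples and a known frequency via the exact linear recurrence for a sampled cosine. All names and numbers are illustrative assumptions.

```python
import math

def rollout(x_prev, x_curr, omega, dt, horizon):
    """Autoregressively forecast `horizon` future samples of cos(omega * t).

    Uses the exact recurrence for a sampled cosine (toy assumption):
        x[k+1] = 2 * cos(omega * dt) * x[k] - x[k-1]
    """
    c = 2.0 * math.cos(omega * dt)
    out = []
    for _ in range(horizon):
        x_prev, x_curr = x_curr, c * x_curr - x_prev
        out.append(x_curr)
    return out

def normalized_rmse(pred, true):
    """RMSE divided by the range of the true signal (assumed error metric)."""
    rmse = math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))
    return rmse / (max(true) - min(true))

if __name__ == "__main__":
    omega, dt, horizon = 2.0, 0.1, 15
    truth = [math.cos(omega * k * dt) for k in range(horizon + 2)]
    # Seed with the first two observed samples, then forecast 15 steps ahead.
    forecast = rollout(truth[0], truth[1], omega, dt, horizon)
    print(f"{normalized_rmse(forecast, truth[2:]):.2%}")  # → 0.00%
```

With the exact frequency the forecast is correct to floating-point precision; passing a slightly wrong `omega` to `rollout` shows how a parameter error turns into forecast error.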

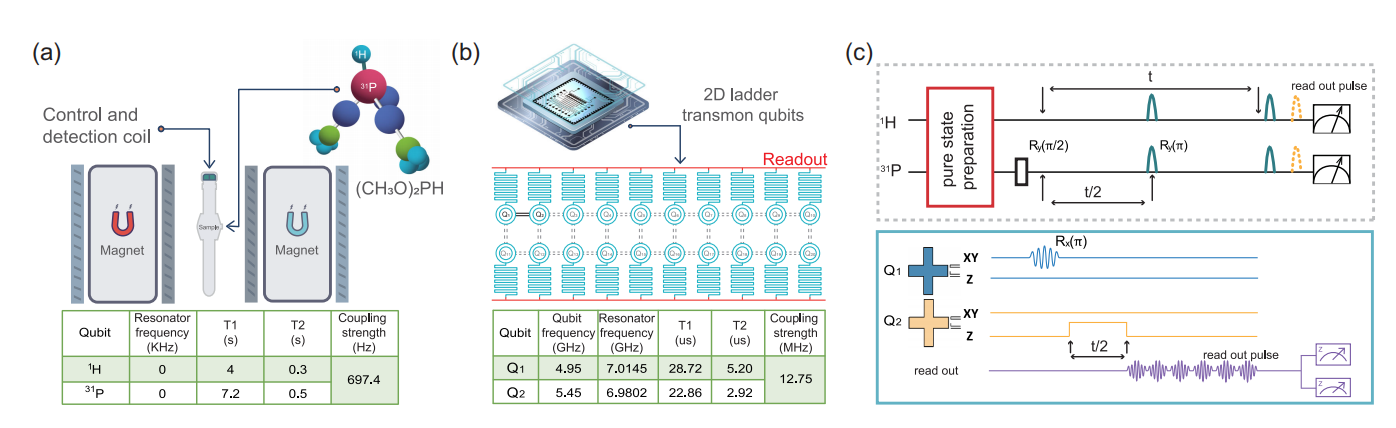

To verify its performance, the team ran tests across both classical simulations and real-world quantum hardware. That included experiments on SpinQ’s nuclear magnetic resonance (NMR) platform and its 20-qubit superconducting quantum processor.

In one test on the NMR system, the AI predicted spin dynamics in a two-qubit setup across a 50-millisecond interval with less than 1% error, according to the study. On the superconducting chip, the model was able to determine previously unknown system noise values by analyzing gate operation data. These parameters are typically hard to measure but crucial for maintaining qubit stability.
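For comparison, a conventional way to pull a noise parameter out of measurement data is to fit a physical model to it. The sketch below is that classical approach, not the study's method: it assumes a noiseless, purely exponential decay envelope exp(-t/T2) and recovers T2 from the slope of a least-squares line through ln(signal) versus time. The 30-microsecond value and sampling grid are hypothetical.

```python
import math

def fit_decay_time(times, signal):
    """Estimate T2 from samples of exp(-t / T2) with a least-squares line fit
    of ln(signal) against t; the fitted slope equals -1 / T2.

    Assumes a pure exponential envelope and strictly positive samples.
    """
    logs = [math.log(s) for s in signal]
    n = len(times)
    t_mean = sum(times) / n
    y_mean = sum(logs) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, logs))
    var = sum((t - t_mean) ** 2 for t in times)
    slope = cov / var
    return -1.0 / slope

if __name__ == "__main__":
    t2_true = 30e-6                          # hypothetical T2 of 30 microseconds
    times = [k * 2e-6 for k in range(20)]    # sample every 2 microseconds
    signal = [math.exp(-t / t2_true) for t in times]
    print(f"{fit_decay_time(times, signal) * 1e6:.1f} us")  # → 30.0 us
```

Fits like this need a correct model of the decay in advance; the appeal of a learned approach, as the article describes it, is inferring such values from data without hand-building that model for each device.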

A Tool for Quantum Error Correction

These findings could help address one of quantum computing’s main challenges: error correction. Quantum bits, or qubits, are notoriously error-prone due to their sensitivity to external noise and internal inconsistencies. Most current approaches to quantum error correction rely on static assumptions about noise, which can quickly become outdated.

The new method allows for real-time tracking of noise sources, which could help systems automatically adjust to fluctuations and maintain accuracy. In one experiment, the researchers showed the model could detect time-varying changes in system parameters that correlated strongly with observed decoherence, the process by which qubits lose their quantum behavior. The match between predicted and actual data reached a coefficient of determination (R²) of 0.97, indicating close agreement.
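The coefficient of determination compares the residual error of a prediction with the total variance of the data: R² = 1 − SS_res / SS_tot. A value of 1.0 is a perfect match, and 0.97 means the residuals amount to about 3% of the data's total variance. A minimal sketch of the standard formula follows; the sample numbers are made up for illustration and are not the study's data.

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))  # residual sum of squares
    ss_tot = sum((a - mean) ** 2 for a in actual)                  # total sum of squares
    return 1.0 - ss_res / ss_tot

if __name__ == "__main__":
    actual = [1.0, 2.0, 3.0, 4.0]        # illustrative values only
    predicted = [1.1, 1.9, 3.2, 3.8]
    print(f"{r_squared(actual, predicted):.3f}")  # → 0.980
```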

The study suggests that the ability to track fluctuations in parameters such as detuning may support future developments in noise characterization and system stability.

Scalability

The model demonstrated promising scalability in simulations. When tested on simulated systems with more qubits, the time it took to estimate parameters grew at a much slower rate than with traditional methods. For example, when scaling from 5 to 11 qubits, the model’s inference time grew by a factor of 1.8, while the time required by classical linear algebra approaches grew by more than a factor of five.
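One way to read these figures is to ask what power-law exponent they imply. The sketch below assumes, purely hypothetically, that runtime scales as (number of qubits)^alpha and solves for alpha from the reported growth factors, reading the classical figure as a factor of at least five. Two data points cannot establish the true scaling law, so this is a back-of-the-envelope check only.

```python
import math

def implied_exponent(growth_factor, n_small, n_large):
    """Exponent alpha such that growth_factor = (n_large / n_small) ** alpha,
    assuming (hypothetically) that runtime is a power of the qubit count."""
    return math.log(growth_factor) / math.log(n_large / n_small)

if __name__ == "__main__":
    print(f"LSTM model:           alpha ~ {implied_exponent(1.8, 5, 11):.2f}")
    print(f"classical (at least): alpha ~ {implied_exponent(5.0, 5, 11):.2f}")
```

This prints roughly 0.75 for the model and 2.04 for the classical baseline: an exponent below 1 is what "sub-linear" means here, since a 2.2-fold increase in qubits produced a smaller-than-2.2-fold increase in inference time.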

Because inference time grew more slowly than the number of qubits, which more than doubled, this sub-linear growth is promising for future large-scale systems, where real-time monitoring could become essential for stability and performance.

The underlying model combines LSTM networks — a type of neural network adept at analyzing sequences — with an encoder that extracts key features from input data. These tools are often used in natural language processing or time-series forecasting. In this context, the researchers applied them to sequences of quantum measurements to learn the hidden parameters behind them.
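For readers unfamiliar with LSTMs, the sketch below implements one standard LSTM cell with a scalar input and a scalar hidden state, runs it over a sequence of measurements, and passes the final hidden state to a linear readout that produces a parameter estimate. The weights are arbitrary placeholders rather than trained values, and the single-unit size, the `readout` head and all names are assumptions for illustration; the paper's actual encoder and network dimensions are not described in this article.

```python
import math
from dataclasses import dataclass

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

@dataclass
class ScalarLSTM:
    """One LSTM unit with scalar input and hidden state (hypothetical weights).

    Each gate is parameterized by (input weight, recurrent weight, bias).
    """
    w_i: tuple = (0.5, 0.1, 0.0)   # input gate
    w_f: tuple = (0.5, 0.1, 1.0)   # forget gate (positive bias keeps memory by default)
    w_o: tuple = (0.5, 0.1, 0.0)   # output gate
    w_g: tuple = (1.0, 0.2, 0.0)   # candidate cell value

    def step(self, x, h, c):
        def lin(w):
            return w[0] * x + w[1] * h + w[2]
        i = sigmoid(lin(self.w_i))
        f = sigmoid(lin(self.w_f))
        o = sigmoid(lin(self.w_o))
        g = math.tanh(lin(self.w_g))
        c = f * c + i * g          # update the long-term cell state
        h = o * math.tanh(c)       # expose a gated view of the cell state
        return h, c

    def summarize(self, sequence):
        """Run the cell over a measurement sequence; return the final hidden state."""
        h, c = 0.0, 0.0
        for x in sequence:
            h, c = self.step(x, h, c)
        return h

def readout(h, weight=2.0, bias=0.0):
    """Hypothetical linear head mapping the summary state to a parameter estimate."""
    return weight * h + bias

if __name__ == "__main__":
    measurements = [math.cos(0.2 * k) for k in range(15)]
    print(readout(ScalarLSTM().summarize(measurements)))
```

In a real model the gate weights and readout would be learned from training data so that the final hidden state carries the information needed to recover the hidden parameters; here they are fixed only to show how the gates combine.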

Limitations And Future Research Directions

Though the model’s results are encouraging, there are limitations. Most tests so far have involved small systems, and while results generalized across both NMR and superconducting hardware, broader validation across other quantum platforms will be necessary. The model’s accuracy also drops for longer prediction horizons, suggesting limits to how far into the future it can see.

There’s also a dependency on data quality. The model’s performance hinges on having sufficiently detailed and clean observational data, which may not always be available in real-world quantum systems where noise and imperfections abound.

The research was led by a joint team from SpinQ and collaborating institutions. SpinQ says it plans to continue developing the method as part of its broader goal of commercializing quantum technologies. The company markets both desktop quantum devices and cloud-accessible quantum processors.

Future research will likely focus on broadening the model’s applicability, improving robustness under noisier conditions and integrating real-time feedback loops. Researchers say the goal is to enable quantum computers to better understand, and perhaps eventually correct, themselves. That would be a step toward the team’s ultimate goal of integrating quantum computers into real-world technology and business systems.

The team writes in the statement: “In the future, SpinQ will continue to pursue a core strategy driven by ‘technology R&D and commercial implementation,’ committed to deeply integrating cutting-edge quantum computing research into its full-stack technological infrastructure and business ecosystem. SpinQ aims to transform quantum technology into an accessible tool that genuinely drives industrial upgrade, partnering with global collaborators to pioneer a new paradigm in quantum computing-enabled industries.”

This article relied on the SpinQ statement and the arXiv version of the PRL paper, which is published behind a paywall.