There’s an old saying that when you come to a fork in the road, you should take it.

For decades, scientists have treated photonic and atomic approaches to quantum computing as that fork in the road – two distinct paths to scalability and practicality.

Quantum Source, however, isn’t choosing one over the other. Instead, it’s taking both, forging a dual approach that could open the path to practical large-scale quantum computing, according to Quantum Source’s chief scientist, Prof. Barak Dayan.

Combining atoms and photons, says Dayan, enables a deterministic instead of probabilistic approach to photonic quantum computing, promising game-changing solutions to scalability, resource inefficiencies and error correction challenges.

It’s a development that could redefine the trajectory of universal quantum computing.

Quantum computing is at a pivotal juncture. While current systems have demonstrated potential, scaling up to the million-qubit level – as required for error correction – has become the main challenge.

Although the photonic approach is possibly the most promising in terms of scalability, its main bottleneck is the inherent inefficiency of both single-photon generation and large-scale entanglement. These are exactly the hurdles that single atoms can efficiently solve, according to Prof. Dayan.

Quantum Source has a team of more than 50 multidisciplinary experts, including seven professors and 25 PhD-level researchers, dedicated to solving these complex challenges and removing the roadblocks to scalability and affordability.

Photon-atom gates are the core of the company’s novel technology, which is designed to enable scalable, cost-efficient and fault-tolerant photonic computing, Dayan added. Founded in 2021 and rooted in two decades of research at the Weizmann Institute of Science, the company has raised $77 million to advance breakthroughs in photonic technologies.

For the team, the heart of the system is mastering the generation of entangled photonic structures, which can support error correction and topological quantum operations.

“The fuel of quantum computers is entanglement,” said Dayan, in an interview with The Quantum Insider. “Generating and entangling photons is the hardest task when you’re using a photonic quantum computer, and harnessing single atoms enables doing this deterministically.”

Why Single-Photon Non-Linearity Matters

At the heart of any computation, classical or quantum, is the concept of non-linearity, which is the ability of a system to respond disproportionately to inputs. For example, in classical computation, an AND gate only outputs a 1 if both inputs are 1.

All the entangling quantum gates, such as the controlled-NOT or controlled-phase gates, require an analogous non-linear physical mechanism in order to produce outcomes that are not just a product state of the individual qubits.

This behaviour is analogous to the disproportionate response of classical logic gates but operates in the realm of superpositions and entanglements, allowing quantum systems to perform operations that classical linear systems cannot achieve.
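To make the product-state distinction concrete, here is a minimal sketch in plain Python. It is illustrative only and not drawn from Quantum Source's work: the helper names (`hadamard_on_first`, `apply_cnot`, `concurrence`) are hypothetical, and a two-qubit state is represented simply as a list of four amplitudes in the order |00>, |01>, |10>, |11>. It relies on the standard fact that a two-qubit pure state with amplitudes (a, b, c, d) is a product state exactly when ad - bc = 0.

```python
import math

def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit of a two-qubit state."""
    a, b, c, d = state
    s = 1 / math.sqrt(2)
    return [s * (a + c), s * (b + d), s * (a - c), s * (b - d)]

def apply_cnot(state):
    """Controlled-NOT with the first qubit as control: swaps |10> and |11>."""
    a, b, c, d = state
    return [a, b, d, c]

def concurrence(state):
    """Entanglement measure for a two-qubit pure state.

    Returns 0 for a product state and 1 for a maximally entangled state.
    """
    a, b, c, d = state
    return 2 * abs(a * d - b * c)

ket00 = [1.0, 0.0, 0.0, 0.0]
plus0 = hadamard_on_first(ket00)   # (|00> + |10>)/sqrt(2): still a product state
bell = apply_cnot(plus0)           # (|00> + |11>)/sqrt(2): an entangled state

print("before CNOT:", concurrence(plus0))
print("after CNOT: ", concurrence(bell))
```

The single-qubit Hadamard leaves the concurrence at zero, while the controlled-NOT raises it to approximately one. Only the two-qubit gate creates entanglement, and it is this gate that needs a physical interaction between the qubits.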

In photonic systems, this is extraordinarily challenging because, unlike other quantum particles, photons don’t naturally interact, which makes it difficult to create the dependencies – or state correlations – needed for quantum gates. Current methods rely on inefficient non-linear optical effects combined with highly probabilistic and complex optical operations, making the generation and entanglement of photons the biggest hurdle in photonic quantum computing.

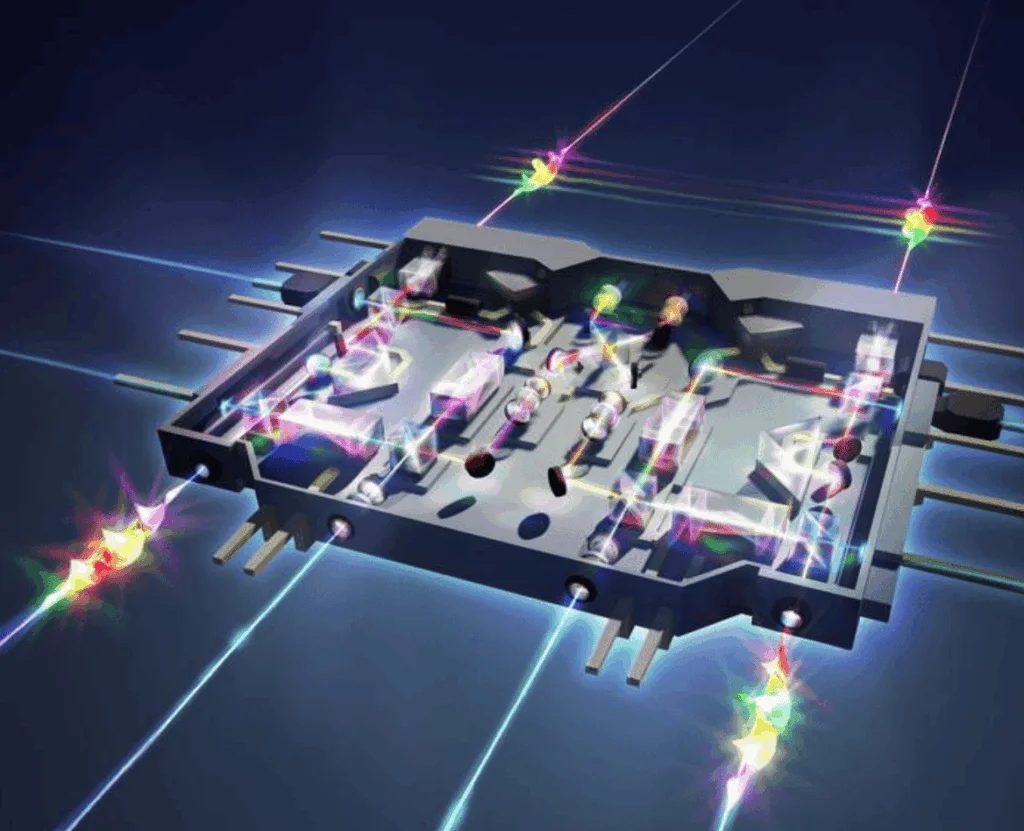

Quantum Source’s solution hinges on single-photon non-linearity, which is achieved by integrating photonic qubits for computation with atomic qubits—in this case, rubidium atoms—to mediate the entanglement. Unlike bulk material systems like silicon resonators or quantum dots, rubidium atoms offer unmatched uniformity and isolation from environmental noise.

“All the rubidium atoms in the universe are identical,” said Dayan. “You can replace one rubidium atom with another, and nothing will change.”

Deterministic Systems: Breaking the Probabilistic Bottleneck

Probabilistic quantum systems generate photons and entangle them through processes that succeed only a fraction of the time. For example, probabilistic systems typically use spontaneous parametric down-conversion (SPDC) or spontaneous four-wave mixing. These heralded sources rely on nonlinear optical processes in crystals or fibers to generate single photons at low probabilities. Entangling these photons with each other is also done in a probabilistic way, with an extremely low probability of success for each attempt.

To guarantee the generation and entanglement of even a small number of photons, these systems require hundreds of sources, resulting in significant resource overhead.
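The arithmetic behind that overhead can be sketched as follows. This is a simplified model, not a description of any specific system: it assumes independent, identical heralded sources, and the 1% per-attempt success probability and 99% target are illustrative placeholders rather than figures from the article. The function names are hypothetical.

```python
import math

def prob_at_least_one(p_success, n_sources):
    """Probability that at least one of n independent sources fires."""
    return 1.0 - (1.0 - p_success) ** n_sources

def sources_needed(p_success, target):
    """Smallest number of independent sources whose combined chance of
    producing at least one photon reaches the target probability."""
    if not 0.0 < p_success < 1.0:
        raise ValueError("p_success must be strictly between 0 and 1")
    if not 0.0 < target < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p_success))

# Illustrative placeholder values (assumptions, not measured data).
p = 0.01
target = 0.99
n = sources_needed(p, target)
print(f"sources needed for one photon at {target:.0%} confidence: {n}")
print(f"achieved probability: {prob_at_least_one(p, n):.4f}")
```

Under these assumed numbers, a single near-certain photon already calls for several hundred sources, and the same overhead recurs for every photon and every entangling step that must succeed.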

“With probabilistic systems, the inefficiency accumulates,” said Dayan. “Generating a single photon, creating an entanglement, and conducting the heralding measurements each require redundant resources. These inefficiencies lead to machines that are large, costly, and complex.”

Quantum Source’s deterministic approach eliminates these inefficiencies. Single rubidium atoms, held in vacuum close to chip-based optical resonators, ensure precise photon generation and entanglement. Accordingly, this architecture requires drastically fewer resources and operates with greater efficiency.

“We aim for a smaller machine—something you can have in your facility, not a factory-sized building in another country,” said Dayan.

Error Correction and Logical Qubits

Error correction is one of the most significant challenges – and some would argue THE most significant challenge – in quantum computing. All systems aim to cluster many physical qubits into logical qubits. A logical qubit is a fault-tolerant unit of quantum information that is encoded across multiple physical qubits. Designed to detect and correct errors in quantum computations, logical qubits aim for extremely low error rates, typically around 10^-15. Achieving this requires reducing the error rate of individual qubits and ensuring the scalability of error-correcting codes like the surface code.
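A rough feel for what a 10^-15 target implies can be had from the widely used surface-code heuristic p_L ≈ A (p / p_th)^((d+1)/2), where d is the code distance. The sketch below is an assumption-laden illustration, not Quantum Source's error model: the threshold `p_threshold = 1e-2`, the prefactor `A = 0.1`, and the physical error rate are placeholder values, and the qubit count uses the textbook rotated surface code layout (d² data qubits plus d² - 1 measurement qubits), which need not match a photonic implementation.

```python
def logical_error_rate(p_phys, distance, p_threshold=1e-2, prefactor=0.1):
    """Heuristic logical error rate of a distance-d surface code."""
    return prefactor * (p_phys / p_threshold) ** ((distance + 1) / 2)

def min_distance(p_phys, target=1e-15, p_threshold=1e-2, prefactor=0.1,
                 max_distance=999):
    """Smallest odd code distance whose heuristic logical error rate
    is at or below the target."""
    if not 0.0 < p_phys < p_threshold:
        raise ValueError("physical error rate must be below the threshold")
    for d in range(3, max_distance + 1, 2):
        if logical_error_rate(p_phys, d, p_threshold, prefactor) <= target:
            return d
    raise ValueError("target not reachable within max_distance")

def physical_qubits_rotated_surface_code(distance):
    """Data plus measurement qubits in one rotated surface code patch."""
    return 2 * distance ** 2 - 1

# Placeholder physical error rates (assumptions for illustration only).
for p_phys in (1e-3, 1e-4):
    d = min_distance(p_phys)
    n = physical_qubits_rotated_surface_code(d)
    print(f"p_phys={p_phys:g}: distance {d}, about {n} physical qubits per logical qubit")
```

The point of the sketch is the trend: lowering the physical error rate shrinks the required code distance, and the physical-qubit count per logical qubit falls with the square of that distance.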

In photonic quantum computing, error correction demands teleportation – a process that maintains logical qubits even as the physical qubits – the photons – are measured and destroyed. This adds another layer of complexity. However, Quantum Source’s deterministic approach dramatically reduces the required resources, according to Dayan: high-quality photonic qubits generated by single atoms need fewer resources for error correction, which cuts overhead and complexity.

Advantages Over Solid-State Emitters

Quantum emitters like nitrogen-vacancy centers or quantum dots have been explored as alternatives to atomic systems. While they offer the advantage of being fixed in place, their presence within solid materials introduces noise and variability, according to Dayan.

“The single photons must be pure and the generated states must be identical,” said Dayan. “That is much harder to achieve with solid-state emitters, which are coupled to their environment and prone to inhomogeneity and spectral diffusion.”

Rubidium atoms, on the other hand, operate in a vacuum and are decoupled from environmental influences. This isolation results in cleaner, more uniform photons.

The challenge lies in trapping and cooling these atoms effectively. Quantum Source employs advanced techniques such as dipole traps, evanescent wave trapping, and in-trap cooling to ensure stability and precision.

Scalability and Accessibility

Tied in with the challenge of error correction is another pressing question for quantum computing scientists and engineers: scalability. Probabilistic systems require exponentially increasing resources to grow in capability. Many experts worry this approach will not scale. However, deterministic systems, like Quantum Source’s model, can scale linearly, simply by adding more devices. This modularity enables the creation of powerful quantum systems without the need for enormous physical or financial investments.
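As a toy illustration of the scaling contrast, consider n independent steps that must all succeed in the same attempt. This is a deliberately naive model and not a description of any real architecture, which would use heralding and multiplexing to trade this cost for extra hardware; the function name and the 50% success probability are assumptions chosen for illustration.

```python
def expected_trials_all_succeed(p_success, n_steps):
    """Mean number of attempts until n independent steps, each succeeding
    with probability p_success, all succeed within a single attempt.

    The attempt succeeds with probability p_success**n_steps, so the mean
    of the resulting geometric distribution is 1 / p_success**n_steps.
    """
    if not 0.0 < p_success <= 1.0:
        raise ValueError("p_success must be in (0, 1]")
    return 1.0 / (p_success ** n_steps)

# Compare an assumed 50% per-step success rate with a deterministic step.
for n in (1, 2, 4, 8, 16):
    probabilistic = expected_trials_all_succeed(0.5, n)
    deterministic = expected_trials_all_succeed(1.0, n)
    print(f"n={n:2d}: probabilistic {probabilistic:8.0f} attempts, "
          f"deterministic {deterministic:.0f} attempt")
```

In this toy model the expected number of attempts doubles with every added probabilistic step, while a deterministic step adds no retry cost, so total hardware grows only with the number of devices.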

The design also offers accessibility advantages. Unlike other approaches that demand low-temperature operation across the entire system, Quantum Source’s architecture operates primarily at room temperature. Only the final detectors—superconducting devices used for photon detection—require cooling.

“This room-temperature operation is a major advantage,” says Prof. Dayan. “It simplifies the infrastructure and reduces costs significantly.”

The Road to Universal Quantum Computing

Quantum Source’s deterministic approach aligns with the broader goal of creating universal, fault-tolerant quantum computers capable of solving problems beyond the reach of classical systems. Prof. Dayan emphasizes the importance of this goal: “There is no point in running a quantum computer just to solve faster a problem a classical computer can solve. The Holy Grail is solving problems that classical computers will never be able to approach.”

This requires not just high-quality qubits but also systems capable of running billions of computational steps without error. Deterministic designs are uniquely suited to meet these demands, providing both the precision and scalability required for universal quantum computation.

Remaining Challenges

Despite its promise, the technology is not without hurdles. Trapping and cooling rubidium atoms with high fidelity remains a complex task. Additionally, optimizing resonators, coupling efficiencies, and switching mechanisms will be critical for achieving commercially viable systems.

The challenges are considerable, according to Dayan, but the team is committed to surmounting these hurdles to bring to bear the power of quantum computing on some of the world’s biggest scientific, societal, and industrial problems. “Quality, quality, quality,” said Dayan. “The quality of resonators, the efficiency of coupling to fibers, and the fidelity of switching are all areas where significant effort is needed. All these are challenges we are addressing very seriously.”