Insider Brief:

- Researchers from Nu Quantum propose hyperbolic Floquet codes as a solution to overcome the limitations of traditional surface codes, enabling scalable and efficient error correction for distributed quantum computing.

- Surface codes effectively suppress errors but struggle with poor encoding rates, limiting their scalability in distributed architectures where complexity grows without proportional efficiency gains.

- Hyperbolic Floquet codes leverage tessellated hyperbolic geometries to efficiently store multiple logical qubits, offering higher encoding rates and reduced connectivity demands compared to planar surface codes.

- Nu Quantum’s research demonstrates that distributed quantum error correction is possible with near-term technology, requiring realistic fidelity levels for interconnects and processors, and creating a blueprint for scalable modular quantum computing systems.

Distributed quantum computing, with its promise of scalable architectures, is seen as a way to overcome the limitations of current quantum systems. But as researchers and companies explore this modular approach, one persistent challenge keeps resurfacing: error correction. In a recently published preprint on arXiv, researchers from Nu Quantum propose a fresh solution, using hyperbolic Floquet codes to address the shortcomings of traditional methods and enable scalable, distributed quantum computation.

SURFACE CODES AND THE SCALING PROBLEM

The surface code, the current go-to method for quantum error correction, is effective at suppressing errors but suffers from a poor encoding rate, meaning the ratio of logical qubits stored to physical qubits used. Each instance of the surface code is capable of storing only one logical qubit. For distributed systems, this becomes an issue, as scaling up the system leads to increasing complexity without proportional gains in efficiency.
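To put a number on that encoding rate, the sketch below counts qubits for a single surface code patch. It assumes the widely used rotated layout, with d × d data qubits and d × d − 1 measurement qubits for code distance d; the article does not specify a layout, and the function names are illustrative.

```python
def rotated_surface_code_qubits(distance: int) -> tuple[int, int]:
    """Return (data_qubits, total_qubits) for one rotated surface code patch.

    Assumption: the common rotated layout, with d*d data qubits plus
    d*d - 1 measurement qubits, encoding a single logical qubit.
    """
    if distance < 1 or distance % 2 == 0:
        raise ValueError("distance is assumed to be a positive odd integer")
    data = distance * distance
    measure = data - 1
    return data, data + measure


def encoding_rate(logical_qubits: int, physical_qubits: int) -> float:
    """Encoding rate k/n: logical qubits stored per physical qubit."""
    return logical_qubits / physical_qubits


if __name__ == "__main__":
    for d in (3, 5, 7):
        data, total = rotated_surface_code_qubits(d)
        # Each patch stores exactly one logical qubit, so k = 1.
        print(f"d={d}: data={data}, total={total}, k/n={encoding_rate(1, data):.4f}")
```

Under these assumptions a distance-7 patch needs 49 data qubits (97 in total) for one logical qubit, and the rate keeps falling as 1/d² when the distance is raised to suppress more errors.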

The modular architecture pursued by Nu Quantum envisions many quantum processing units (QPUs) interconnected through quantum channels. Such a design allows the system to grow without increasing the complexity of individual QPUs. However, surface codes struggle in these distributed settings, where non-local operations introduce additional error risks. This limitation prompts a search for alternative approaches, where geometric principles, such as those found in hyperbolic spaces, offer a potential solution.

THE FORBIDDEN GEOMETRY

Understanding the significance of hyperbolic Floquet codes requires a brief detour into geometry. Most of us are familiar with Euclidean geometry, where parallel lines never intersect and shapes behave predictably on flat surfaces. But when we move to curved spaces, new possibilities emerge. Consider the curved surface of the Earth: flight paths, for instance, appear curved when viewed on a 2D map but are actually the shortest routes across the globe's curved surface.

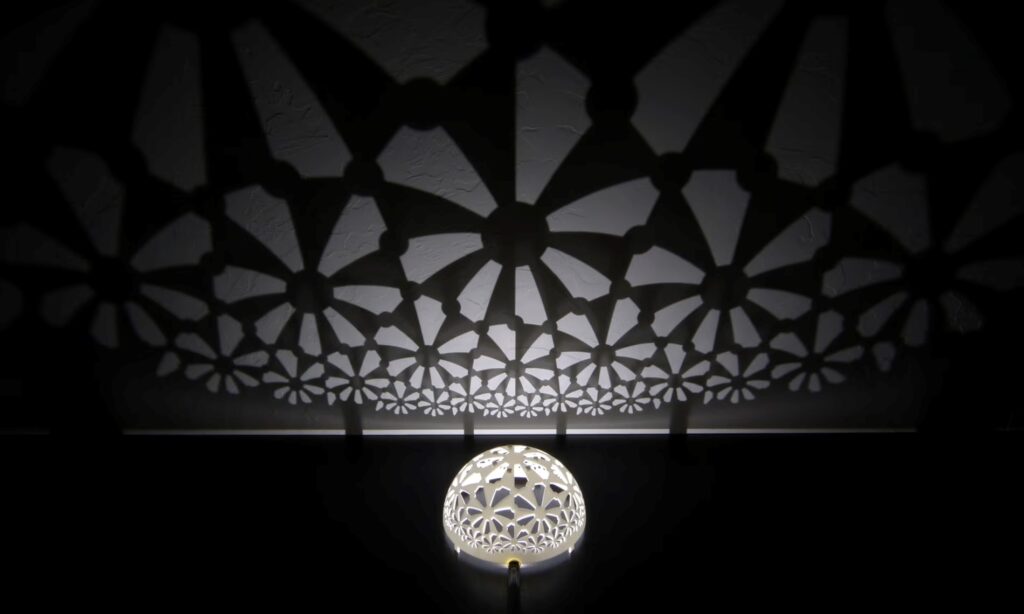

Hyperbolic geometry takes this further, operating in a negatively curved space where lines that start out side by side diverge exponentially. When such a space is drawn on a flat disk, a pattern of equal-sized tiles appears to shrink without limit toward the edge, so ever more tiles fit near the boundary. That packing hints at the geometry's capacity for information density. In quantum error correction, this geometry enables the efficient storage of a high number of logical qubits relative to the number of physical qubits.

As discussed in the paper, hyperbolic Floquet codes capitalize on this by encoding information on tessellated hyperbolic surfaces. Unlike the planar surface code, which stores only one logical qubit per instance, hyperbolic Floquet codes can store multiple logical qubits, with the number of logical qubits scaling linearly with the number of physical qubits.
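The linear scaling can be illustrated with a standard Euler-characteristic counting argument. The sketch below rests on assumptions not stated in the article: the code lives on a closed, orientable surface tiled by faces with `face_sides` sides, three faces meet at every vertex, one physical qubit sits on each vertex, and the number of logical qubits is twice the genus of the surface. The function names are hypothetical, the paper's actual code family may differ in detail, and the counting only says what k would be if a tiling with that many vertices exists.

```python
from fractions import Fraction


def logical_qubits(num_vertices: int, face_sides: int) -> int:
    """Logical qubits k = 2 * genus for a trivalent tiling on a closed surface.

    Assumptions (illustrative): every vertex has degree 3, every face has
    `face_sides` sides, and the surface is closed and orientable.
    """
    V = num_vertices
    if (3 * V) % 2 != 0 or (3 * V) % face_sides != 0:
        raise ValueError("vertex count is incompatible with this tiling")
    E = 3 * V // 2            # each vertex touches 3 edges, each edge has 2 ends
    F = 3 * V // face_sides   # each vertex touches 3 faces, each face has p corners
    chi = V - E + F           # Euler characteristic, equal to 2 - 2 * genus
    if chi > 2 or chi % 2 != 0:
        raise ValueError("no closed orientable surface has this Euler characteristic")
    genus = (2 - chi) // 2
    return 2 * genus


def hyperbolic_rate(num_vertices: int, face_sides: int) -> Fraction:
    """Encoding rate k/n with one physical qubit per vertex (n = V)."""
    return Fraction(logical_qubits(num_vertices, face_sides), num_vertices)


if __name__ == "__main__":
    print(logical_qubits(24, 6))    # hexagons: flat torus, k stays at 2
    print(logical_qubits(16, 8))    # octagons: hyperbolic, genus 2, k = 4
    print(hyperbolic_rate(16, 8))   # 1/4 for this small example
```

With octagonal faces the count works out to k = V/8 + 2, so the rate approaches 1/8 as the code grows. With hexagonal faces the surface is flat and k stays fixed at 2 however many qubits are added.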

SCALING QUANTUM COMPUTING

In a recent press release, Nu Quantum emphasized that a notable milestone for the quantum computing industry was demonstrating high-quality qubits that can be successfully error-corrected. The Google Willow chip proved that one error-corrected qubit could be achieved using around 100 physical qubits, setting a benchmark. However, scaling this approach to the tens of thousands—or even millions—of physical qubits needed for practical applications remains a challenge.

Nu Quantum’s recent research on distributed quantum error correction seeks to address this. The release highlights the following as the three key outcomes from the paper:

- Distributed QEC is possible: By distributing logical qubits across multiple processors interconnected via entanglement links, the size of an error-correcting code is no longer constrained by the size of individual processors.

- Network requirements are feasible: Simulations demonstrate that a distributed system can achieve fault tolerance with realistic interconnect and processor fidelity requirements. Specifically, a 99.5% fidelity for entanglement links and 99.99% fidelity for two-qubit gates within each processor are achievable with near-term technology.

- Distributed QEC is efficient: The hyperbolic Floquet codes discussed in this research allow for higher efficiency than traditional surface codes. These codes rely on sparse connectivity between processors, ensuring scalability without exponentially increasing the complexity of the system.
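The two fidelity figures quoted above are easier to compare as error rates. The short sketch below uses the simplification that the error rate is one minus the fidelity; the helper name is illustrative and is not taken from the paper.

```python
from fractions import Fraction


def infidelity(fidelity: str) -> Fraction:
    """Error rate as 1 - fidelity, using exact arithmetic on a decimal string."""
    return 1 - Fraction(fidelity)


link_error = infidelity("0.995")    # entanglement links between processors
gate_error = infidelity("0.9999")   # two-qubit gates inside a processor
ratio = link_error / gate_error

print(f"link error:  {float(link_error):.4%}")
print(f"gate error:  {float(gate_error):.4%}")
print(f"links may be {ratio}x noisier than local gates")
```

Read this way, the quoted targets allow the links between processors to be about 50 times noisier than the gates within a processor.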

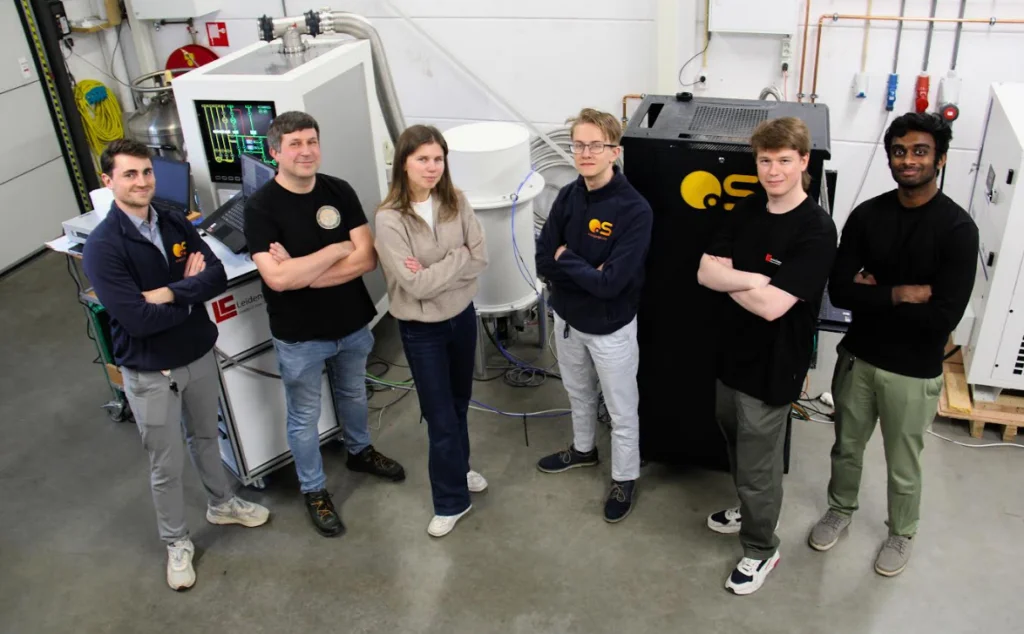

This modular architecture features true scalability, where identical QPUs and network elements can be added without increasing the complexity of individual units. Nu Quantum’s focus on interfaces, such as Qubit Photon Interfaces and scalable Quantum Networking Units, further supports this distributed vision.

REWRITING THE DISTRIBUTED QUANTUM COMPUTING FRAMEWORK

Distributed and modular quantum computing has long been seen as a logical path to scalability, analogous to the modular data centers that are central to classical computing. But without scalable error correction, the dream of practical quantum applications remains out of reach, because systems would have to rely on highly reliable (and still elusive) physical qubits.

Hyperbolic Floquet codes may change this narrative by providing a way to achieve resilient error correction in distributed settings. The ability to store more logical qubits with fewer physical qubits, coupled with the simplicity of pairwise measurements, makes them a compelling alternative to traditional methods.

Contributing researchers on the preprint include Evan Sutcliffe, Bhargavi Jonnadula, Claire Le Gall, Alexandra E. Moylett, and Coral M. Westoby.