- Researchers from the University of Edinburgh used NVIDIA’s CUDA-Q platform to simulate quantum clustering algorithms, significantly reducing the qubit count needed for large datasets.

- The team developed a method using coresets that makes quantum machine learning tasks more feasible on current quantum hardware without relying on quantum random access memory (QRAM).

- NVIDIA’s GPU acceleration enabled the simulations to scale from 10 to 25 qubits, demonstrating the potential for quantum-accelerated supercomputing in solving complex problems.

NVIDIA’s recent quantum research, conducted in collaboration with the Quantum Software Lab at the University of Edinburgh, sheds light on the future of quantum machine learning (QML) and its potential to solve some of the most complex problems in science and industry, according to a company blog post.

Led by Associate Professor Petros Wallden, the team's research emphasizes the importance of tackling compute-intensive tasks without relying on quantum random access memory (QRAM), a theoretical and as yet unproven technology.

Their findings could help bridge the gap between current quantum computing capabilities and the high expectations set for the technology, according to the post.

The researchers focused on developing and accelerating QML methods that significantly reduce the number of qubits needed to study large datasets. The team used NVIDIA’s CUDA-Q platform to simulate these methods, extending the work of Harrow and his colleagues by using coresets, a classical technique for summarizing large datasets with far fewer points, to make QML applications more feasible on near-term quantum hardware.

A coreset is a much smaller, weighted set of points that stands in for a full dataset while retaining its essential characteristics. Researchers can approximate properties of the full dataset, such as how well a given clustering fits it, without processing every point, which makes data-intensive QML tasks more realistic and manageable on current quantum systems.

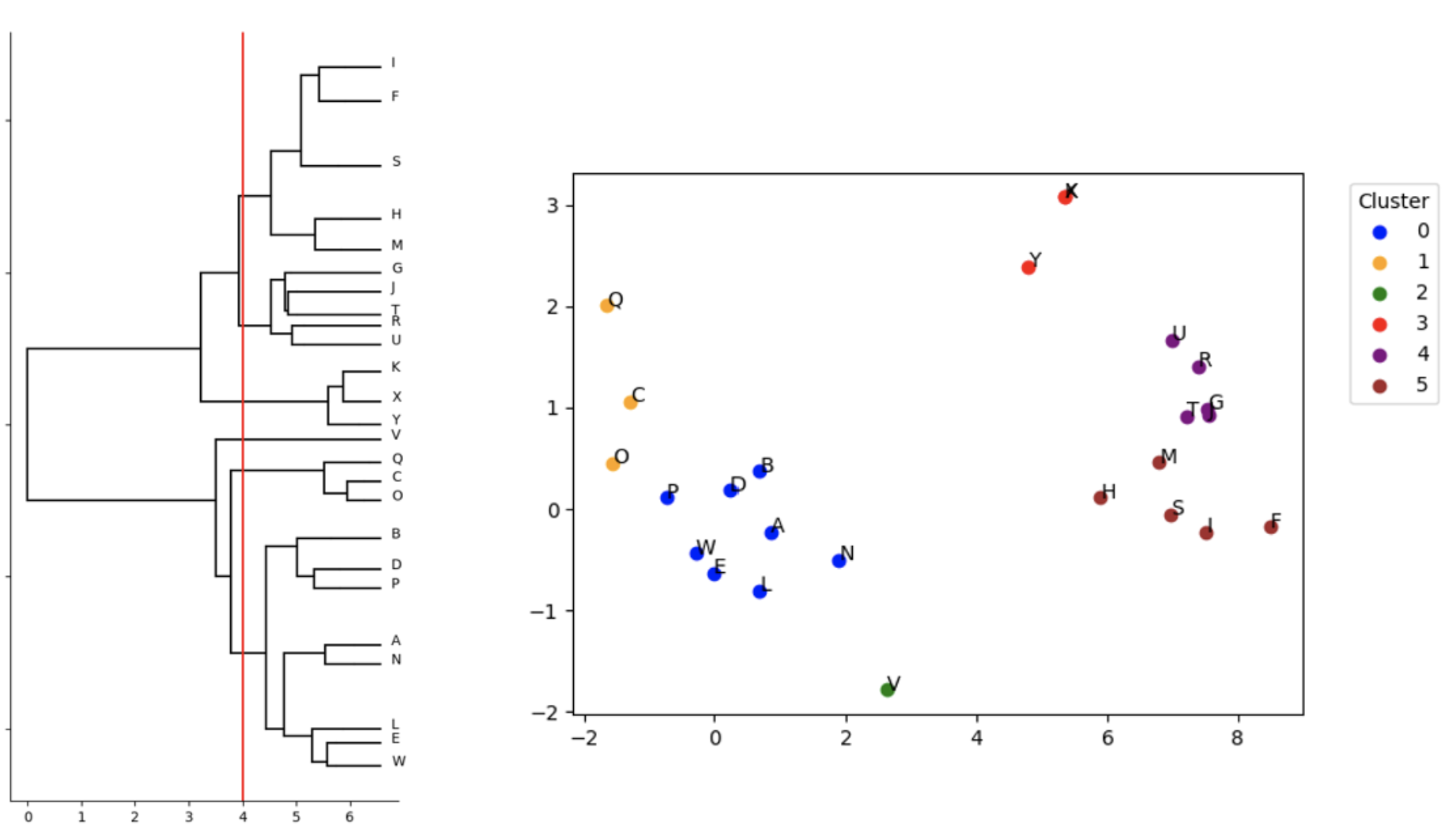
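The post does not say which coreset construction the team used. As one concrete illustration, the sketch below builds a "lightweight coreset", an importance-sampling scheme from the k-means literature that is swapped in here for illustration and is not necessarily the team's method. Each point is sampled with a probability that is half uniform and half proportional to its squared distance from the dataset mean, and it is weighted by the inverse of that probability so that weighted sums over the coreset estimate sums over the full data. The function name `lightweight_coreset` and its parameters are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def lightweight_coreset(data: List[Point], m: int, seed: int = 0) -> List[Tuple[Point, float]]:
    """Hypothetical helper: sample an m-point weighted coreset.

    Sampling probability = 1/2 uniform + 1/2 proportional to the squared
    distance from the mean, so outlying points are more likely to be kept.
    Each weight is 1 / (m * probability), which makes weighted sums over the
    coreset unbiased estimates of the same sums over the full dataset.
    """
    n = len(data)
    dim = len(data[0])
    mean = tuple(sum(p[i] for p in data) / n for i in range(dim))
    dists = [sq_dist(p, mean) for p in data]
    total = sum(dists)
    if total == 0:
        probs = [1.0 / n] * n  # all points identical: fall back to uniform sampling
    else:
        probs = [0.5 / n + 0.5 * d / total for d in dists]
    rng = random.Random(seed)
    picks = rng.choices(range(n), weights=probs, k=m)  # sample with replacement
    return [(data[i], 1.0 / (m * probs[i])) for i in picks]


# Usage: two well-separated 2-D blobs of 10,000 points each.
gen = random.Random(1)
data = [(gen.gauss(0, 1), gen.gauss(0, 1)) for _ in range(10_000)]
data += [(gen.gauss(8, 1), gen.gauss(8, 1)) for _ in range(10_000)]
coreset = lightweight_coreset(data, m=20)
print(len(coreset), "weighted points stand in for", len(data))
```

Because every sampling probability is at least 1/(2n), no single weight exceeds 2n/m, which keeps any one coreset point from dominating the summary.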

The research team used coresets to implement and test three quantum clustering algorithms: divisive clustering, 3-means clustering, and Gaussian mixture model (GMM) clustering. Clustering is an unsupervised learning technique that groups similar data points together. It is particularly valuable in applications such as market segmentation, image recognition, and medical diagnosis (for example, tumor classification), where understanding the natural grouping of data can inform decisions and predictions.
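The post does not spell out the cost functions the team's quantum algorithms optimize. As a hedged illustration of why coreset size drives qubit count, one common formulation (assumed here, not confirmed as the team's) treats a single divisive split of an m-point weighted coreset as a weighted max-cut: each point gets one bit saying which side of the split it lands on, and the score is the weighted sum of squared distances between points on opposite sides. The classical brute-force sketch below scores every assignment; the names `cut_value` and `best_bipartition` are hypothetical.

```python
from itertools import product
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cut_value(bits: Sequence[int], points: List[Point], weights: List[float]) -> float:
    """Weighted sum of squared distances between points on opposite sides."""
    total = 0.0
    m = len(points)
    for i in range(m):
        for j in range(i + 1, m):
            if bits[i] != bits[j]:
                total += weights[i] * weights[j] * sq_dist(points[i], points[j])
    return total


def best_bipartition(points: List[Point], weights: List[float]) -> Tuple[Tuple[int, ...], float]:
    """Exhaustive search over bit strings, one bit per coreset point.

    Fixing bit 0 to 0 skips the mirror image of each split, so
    2**(m - 1) candidates are scored instead of 2**m.
    """
    m = len(points)
    best_bits: Tuple[int, ...] = ()
    best_val = float("-inf")
    for rest in product((0, 1), repeat=m - 1):
        bits = (0,) + rest
        val = cut_value(bits, points, weights)
        if val > best_val:
            best_bits, best_val = bits, val
    return best_bits, best_val


# Usage: four coreset points forming two obvious groups, equal weights.
pts = [(0.0, 0.0), (0.1, 0.2), (5.0, 5.0), (5.2, 4.9)]
bits, score = best_bipartition(pts, [1.0, 1.0, 1.0, 1.0])
print(bits)  # (0, 0, 1, 1)
```

The exponential loop is why this classical search only works for tiny coresets. In a variational quantum formulation of this kind, the same bit strings would be encoded in m qubits, so shrinking the dataset to a small coreset directly shrinks the number of qubits required.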

Using CUDA-Q, the team simulated these clustering techniques on problem sizes of up to 25 qubits. The simulation toolkit provided by NVIDIA was crucial in overcoming the scalability challenges of simulating quantum circuits on classical hardware, where memory and compute grow exponentially with the number of qubits. According to the post, this allowed the researchers to perform large-scale simulations that would have been impossible with CPU hardware alone.

Boniface Yogendran, the lead developer on this research, said, “CUDA-Q enabled us to not worry about qubit scalability limitations and be HPC-ready from day one.”

This capability was particularly important as the team transitioned from simulating 10-qubit problems on CPUs to 25-qubit problems on an NVIDIA DGX H100 GPU system. The seamless scalability offered by CUDA-Q meant that the team could continue their work without significant alterations to their simulation code.

The ability to pool the memory of multiple GPUs using CUDA-Q’s multi-GPU (mgpu) backend was another significant advantage, because it lets a single simulated quantum state exceed the memory of any one GPU. This feature allowed the researchers to scale their simulations even further, demonstrating the potential of quantum-accelerated supercomputing for solving large-scale problems.
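The jump from 10 to 25 qubits is larger than it sounds. A dense state-vector simulator stores 2^n complex amplitudes for n qubits, and applying a gate updates all of them, so both memory and per-gate work grow by a factor of 2^15 = 32,768 between those two sizes. The sketch below does that arithmetic, assuming double-precision complex amplitudes (16 bytes each) and, for the pooled case, an even split across a power-of-two number of GPUs. The function names and the 34-qubit, 8-GPU example are hypothetical and are not figures from the post.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a dense state vector of 2**n complex amplitudes.

    16 bytes per amplitude assumes double-precision complex (complex128);
    single precision (complex64) would use 8.
    """
    return (2 ** num_qubits) * bytes_per_amplitude


def per_gpu_bytes(num_qubits: int, num_gpus: int, bytes_per_amplitude: int = 16) -> int:
    """Each GPU's share if the state vector is split evenly (an assumption)."""
    if num_gpus <= 0 or num_gpus & (num_gpus - 1):
        raise ValueError("this sketch assumes a power-of-two GPU count")
    return statevector_bytes(num_qubits, bytes_per_amplitude) // num_gpus


MIB = 2 ** 20
for n in (10, 25):
    print(f"{n} qubits: {statevector_bytes(n) / MIB:g} MiB")
# 10 qubits: 0.015625 MiB
# 25 qubits: 512 MiB
print(statevector_bytes(25) // statevector_bytes(10))  # 32768
# Hypothetical pooled case: 34 qubits split across 8 GPUs.
print(per_gpu_bytes(34, 8) / 2 ** 30, "GiB per GPU")  # 32.0 GiB per GPU
```

Memory is only part of the picture: a variational algorithm runs many circuits, each touching every amplitude, so runtime scales with the same 2^n factor multiplied by the number of circuit evaluations.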

The researchers report that the findings from these simulations are promising. The quantum algorithms performed particularly well with the GMM (K=2) clustering approach, and the divisive clustering method was found to be comparable to a classical heuristic known as Lloyd’s algorithm. These results suggest that quantum approaches could be competitive with classical methods in specific use cases.
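Lloyd’s algorithm, the classical heuristic mentioned above, alternates two steps: assign each point to its nearest center, then move each center to the mean of its assigned points. When it runs on a coreset, that mean becomes a weighted mean. The sketch below is a minimal plain-Python version, not the team’s benchmark code; it assumes a deterministic farthest-first initialization (chosen here only for reproducibility, since the post does not say how the baseline was initialized), and the names `farthest_first` and `weighted_lloyd` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_first(points: List[Point], k: int) -> List[Point]:
    """Assumed initialization: start at the first point, then repeatedly
    add the point farthest from all centers chosen so far."""
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(sq_dist(p, c) for c in centers)))
    return centers


def weighted_lloyd(points: List[Point], weights: List[float], k: int = 3,
                   max_iters: int = 100) -> Tuple[List[Point], List[int]]:
    """Lloyd's algorithm in which each coreset point counts with its weight."""
    centers = farthest_first(points, k)
    dim = len(points[0])
    labels: List[int] = []
    for _ in range(max_iters):
        # Assignment step: send every point to its nearest center.
        new_labels = [min(range(k), key=lambda c: sq_dist(p, centers[c])) for p in points]
        if new_labels == labels:
            break  # assignments unchanged, so the centers are already final
        labels = new_labels
        # Update step: move each center to the weighted mean of its members.
        for c in range(k):
            total_w = sum(w for w, lab in zip(weights, labels) if lab == c)
            if total_w > 0:  # an empty cluster keeps its previous center
                centers[c] = tuple(
                    sum(w * p[i] for p, w, lab in zip(points, weights, labels) if lab == c) / total_w
                    for i in range(dim)
                )
    return centers, labels


# Usage: three small groups; the first point carries a heavier coreset weight.
pts = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
       (10.0, 10.0), (10.0, 11.0), (11.0, 10.0),
       (20.0, 0.0), (20.0, 1.0), (21.0, 0.0)]
wts = [5.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
centers, labels = weighted_lloyd(pts, wts, k=3)
print(labels)  # [0, 0, 0, 2, 2, 2, 1, 1, 1]
```

The heavier weight on the first point pulls its group’s center to (1/7, 1/7) rather than the unweighted mean (1/3, 1/3), which is exactly how a coreset’s weights stand in for the many original points each sample represents.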

As the blog post puts it, “Performing simulations at the scales that Petros’ team presented in their ‘Big data applications on small quantum computers’ paper is only possible with the GPU-acceleration provided by CUDA-Q.”

This statement underscores the critical role that NVIDIA’s CUDA-Q platform played in enabling these quantum simulations, and by extension, in advancing the field of quantum machine learning.

Looking ahead, Wallden and his team plan to continue their collaboration with NVIDIA to develop and scale new quantum-accelerated supercomputing applications using CUDA-Q. Their ongoing work will likely focus on refining QML algorithms and exploring additional quantum techniques that can be applied to real-world problems.