Insider Brief

- A QuEra Computing-led team of researchers said their quantum reservoir learning method can operate effectively on up to 108 qubits.

- Scientists say the advance could one day be used for practical purposes, from better image classification to improved medical diagnostics.

- The team included scientists from the Department of Physics at Harvard University, and JILA and the Department of Physics at the University of Colorado.

Researchers report they have taken an important step in developing scalable quantum reservoir learning algorithms that could one day be used for practical purposes, from better image classification to improved medical diagnostics — and maybe even tastier tomatoes.

According to the QuEra Computing-led team of researchers, their quantum reservoir computing method can operate effectively on up to 108 qubits, which they are calling the largest quantum machine learning experiment to date, surpassing the former 40-qubit record.

This advance, described in a research paper on arXiv, introduces a scalable, gradient-free algorithm that leverages the quantum dynamics of neutral-atom analog quantum computers for data processing, according to the research team, which also included scientists from the Department of Physics at Harvard University, and JILA and the Department of Physics at the University of Colorado.

The researchers, writing in a company social media post, report that their findings show competitive performance across binary and multi-class classification and time-series prediction tasks. An easier way to put this: the method can sort items into categories, recognize patterns and better predict future data trends, all of which eventually could be valuable for a range of everyday computations.

Traditional quantum machine learning methods often need a lot of resources to fine-tune parameters and face problems such as diminishing returns in training effectiveness. QuEra’s algorithm bypasses these challenges by using a general-purpose, gradient-free approach, making it both scalable and resource-efficient. The new method achieved a significant leap, surpassing the previous record of 40 qubits and demonstrating the potential of using quantum effects that traditional computers can’t handle for better machine learning.

“A universal parameter regime, informed by physical insights, eliminates the need for any parameter optimization in the quantum part, resulting in substantial savings of quantum resources,” the QuEra team noted in the paper.
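For readers who want a feel for what "gradient-free" means here, the general reservoir computing idea can be sketched in a few lines of Python. This is not the QuEra team's algorithm: a fixed random classical map (the hypothetical `reservoir_features` function) stands in for the quantum dynamics, and the toy data, layer sizes and ridge parameter are all assumptions chosen for illustration. The point is only that the reservoir is never tuned, and the one trained piece, a linear readout, is fitted in closed form rather than by gradient descent.

```python
import numpy as np

def reservoir_features(X, W_in, W_res, steps=3):
    """Fixed, untrained nonlinear map (a classical stand-in for the reservoir)."""
    state = np.zeros((X.shape[0], W_res.shape[0]))
    for _ in range(steps):
        # Each step mixes the input with the previous reservoir state.
        state = np.tanh(X @ W_in + state @ W_res)
    return state

def fit_readout(features, targets, ridge=1e-3):
    """Closed-form ridge regression: the only trained part, no gradients."""
    A = features.T @ features + ridge * np.eye(features.shape[1])
    return np.linalg.solve(A, features.T @ targets)

rng = np.random.default_rng(0)

# Toy two-class data (assumption): two Gaussian blobs in four dimensions.
n = 200
X = np.vstack([rng.normal(-1.0, 0.5, (n, 4)), rng.normal(1.0, 0.5, (n, 4))])
y = np.array([0] * n + [1] * n)

# Shuffle and split into training and test sets.
order = rng.permutation(2 * n)
train, test = order[:300], order[300:]

# Reservoir weights are drawn once and never updated.
W_in = rng.normal(0.0, 1.0, (4, 32))
W_res = rng.normal(0.0, 0.1, (32, 32))

Phi_train = reservoir_features(X[train], W_in, W_res)
Phi_test = reservoir_features(X[test], W_in, W_res)

# Fit the linear readout on one-hot labels, then classify by the largest output.
W_out = fit_readout(Phi_train, np.eye(2)[y[train]])
pred = (Phi_test @ W_out).argmax(axis=1)
accuracy = (pred == y[test]).mean()
print(f"test accuracy on toy data: {accuracy:.2f}")
```

In the experiment described in the paper, the role of `reservoir_features` would be played by measurements on the neutral-atom hardware, while the readout training stays on a classical computer.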

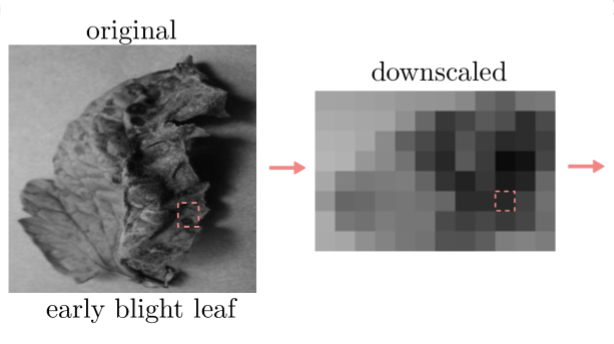

The experimental results on QuEra’s Aquila quantum computer included various tasks, such as image classification on the MNIST handwritten digits dataset and the tomato leaf disease dataset.

The MNIST dataset is a well-known collection of 70,000 images of handwritten digits, commonly used for training and testing image processing systems. Each image is a 28×28 pixel grayscale image of a single digit from 0 to 9. It may sound easy — because it is for humans — but this dataset is widely used as a benchmark in the field of machine learning to evaluate the performance of various algorithms in recognizing handwritten digits.

The tomato leaf disease dataset consists of images of tomato leaves affected by different diseases. It is used to train and test algorithms in identifying and classifying the types of diseases affecting the leaves. It’s also relevant for agricultural applications, where accurate identification of plant diseases can lead to better crop management and improved yields.

The algorithm successfully handled both binary and 10-class classifications, achieving a test accuracy of 93.5% for the MNIST dataset, even in the presence of significant experimental noise.

To evaluate the performance of their approach, the researchers compared it to several classical methods, including a linear support vector machine (SVM) baseline, a four-layer feedforward neural network, and the classical spin reservoir (CRC). The CRC serves as a classical analog to the quantum reservoir, providing a clue to how important quantum entanglement is. The quantum reservoir computing (QRC) method showed a clear advantage, achieving higher accuracy and demonstrating the practical benefits of quantum effects in machine learning tasks.

One significant finding is the observed quantum kernel advantage. By comparing QRC-generated kernels with classical kernels, the researchers demonstrated the existence of datasets where non-classical correlations can be effectively utilized for machine learning. This advantage was evident even with a relatively small number of measurement shots, providing an order of magnitude reduction in runtime compared to typical classical methods.
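To make the idea of comparing kernels more concrete, here is a minimal Python sketch. It builds one kernel from a set of feature vectors and one standard classical kernel, then scores each against the labels. The article does not say which comparison metric the researchers used, so kernel-target alignment is swapped in here purely as an assumption, and the random `tanh` features are a classical placeholder for reservoir measurements, not QRC output.

```python
import numpy as np

def feature_kernel(Phi):
    """Kernel induced by an embedding: K[i, j] = <Phi[i], Phi[j]>."""
    return Phi @ Phi.T

def rbf_kernel(X, gamma=0.5):
    """Classical Gaussian (RBF) kernel on the raw inputs."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (X @ X.T)
    return np.exp(-gamma * np.maximum(d2, 0.0))

def kernel_target_alignment(K, y):
    """Normalized Frobenius inner product between K and the ideal kernel y y^T.

    Labels y must be in {-1, +1}. The result lies in [-1, 1].
    """
    Y = np.outer(y, y)
    return np.sum(K * Y) / (np.linalg.norm(K) * np.linalg.norm(Y))

rng = np.random.default_rng(1)

# Toy two-class data (assumption): two Gaussian blobs in four dimensions.
X = np.vstack([rng.normal(-1.0, 0.5, (20, 4)), rng.normal(1.0, 0.5, (20, 4))])
y = np.array([-1] * 20 + [1] * 20)

# Placeholder embedding: fixed random nonlinear features.
Phi = np.tanh(X @ rng.normal(size=(4, 16)))

K_feat = feature_kernel(Phi)
K_rbf = rbf_kernel(X)

a_feat = kernel_target_alignment(K_feat, y)
a_rbf = kernel_target_alignment(K_rbf, y)
print(f"alignment, feature kernel: {a_feat:.3f}")
print(f"alignment, RBF kernel:     {a_rbf:.3f}")
```

A higher alignment means the kernel groups same-label points together more strongly. On toy data like this the two scores say nothing about quantum advantage; the sketch only shows the mechanics of putting two kernels on a common scale.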

The study also highlights the noise resilience of the QRC framework. The algorithm was tested across different tasks and data, consistently showing robust performance even on noisy quantum hardware. This robustness comes from using a set of parameters that work well for many situations, which means no fine-tuning is needed for the quantum part.

“This shows the existence of datasets for which non-classical correlations of QRC can be utilized for effective machine learning even on current, noisy quantum hardware,” the researchers write.

As with any quantum approach to machine learning, the potential limitations are worth keeping in mind. For example, scalability beyond the tested 108 qubits may present additional challenges. Improvements in both quantum algorithms and hardware also may be necessary to fully realize practical quantum advantages.

Looking ahead, however, the researchers see several avenues for further exploration and improvement. Scaling up the experimental sampling rate and system size could lead to substantial performance gains. Additionally, tailoring the algorithm to different quantum platforms, including digital quantum computers and early fault-tolerant quantum systems, could extend its applicability.

Future research will also focus on identifying datasets that exhibit comparative quantum kernel advantage and exploring the utility of QRC for other machine learning paradigms, such as generative and unsupervised learning tasks. The general-purpose nature of the QRC algorithm allows for strong hybridization with classical machine learning methods, potentially offering a versatile tool for various applications.

The