Insider Brief

- The promise of vastly improved capabilities is driving researchers to explore the convergence of quantum computing and artificial intelligence.

- Quantinuum announced the introduction of Quixer, a state-of-the-art quantum transformer that is quantum ‘native’.

- The team reports Quixer has demonstrated efficient and effective quantum transformations tailored for language modeling tasks.

One of the hot topics in quantum computing is what will happen when, or if, artificial intelligence (AI) and quantum computing converge. Both fields appear to be moving rapidly toward that technological merger.

Quantinuum may have just quickened that pace with the introduction of Quixer, a state-of-the-art quantum transformer, according to the company’s LinkedIn post and a paper posted to arXiv, a preprint server. The team adds that Quixer also marks a departure from traditional approaches to advancing quantum AI.

The promise is huge, as the team points out in their post: “The marriage of AI and quantum computing holds big promise: the computational power of quantum computers could lead to huge breakthroughs in this next-gen tech.”

Bridging AI and Quantum Computing

First, a little background in artificial intelligence may help. Transformers, a key component of modern AI, are machine learning models that analyze and predict sequences, such as text or speech. They are integral to AI technologies like ChatGPT. However, implementing these models on quantum computers presents unique challenges because quantum and classical architectures differ fundamentally. According to the researchers, traditional approaches have relied on “copy-paste” methods, which transpose classical algorithms directly onto quantum systems without leveraging the distinctive properties of quantum computing. This has often led to inefficiencies and failed to exploit the true potential of quantum mechanics.

The researchers explain in the post: “Transformers are incredibly well-suited to classical computers, taking advantage of the massive parallelism afforded by GPUs. These advantages are not necessarily present on quantum computers in the same way, so successfully implementing a transformer on quantum hardware is no easy task.”
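The GPU-friendliness the researchers describe comes from attention, the core transformer operation, which is built almost entirely from matrix multiplications. The sketch below is a minimal NumPy version of standard scaled dot-product attention; the sequence length, embedding size and random projection matrices are illustrative assumptions standing in for learned weights, and nothing here is taken from the Quixer paper.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row-wise max for numerical stability before exponentiating.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """Standard attention: softmax(Q K^T / sqrt(d)) V.

    Every token is compared with every other token in a single matrix
    product, which is what maps so well onto massively parallel GPUs.
    """
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)       # (seq_len, seq_len) similarity matrix
    weights = softmax(scores, axis=-1)  # each row is a probability distribution
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model = 4, 8                  # illustrative sizes, chosen arbitrarily
x = rng.normal(size=(seq_len, d_model))  # stand-in token embeddings
# Hypothetical random projections standing in for learned query/key/value weights.
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
out, attn = scaled_dot_product_attention(x @ w_q, x @ w_k, x @ w_v)
print(out.shape, attn.shape)
```

Because each step is a dense matrix product, the whole computation parallelizes naturally on classical hardware, which is exactly the advantage the researchers say does not carry over to quantum computers in the same way.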

The Mechanics of Quixer

Quantinuum’s AI team, headed by Stephen Clark, tackled this issue when developing Quixer, which is designed explicitly for quantum circuits. Because the model is built from quantum algorithmic primitives, the team reports, it is uniquely suited to quantum circuits, makes efficient use of qubits and could potentially outperform classical models. Quantum algorithmic primitives are the foundational building blocks of quantum algorithms: well-understood subroutines that exploit quantum properties such as superposition and entanglement and can be implemented efficiently on quantum hardware.

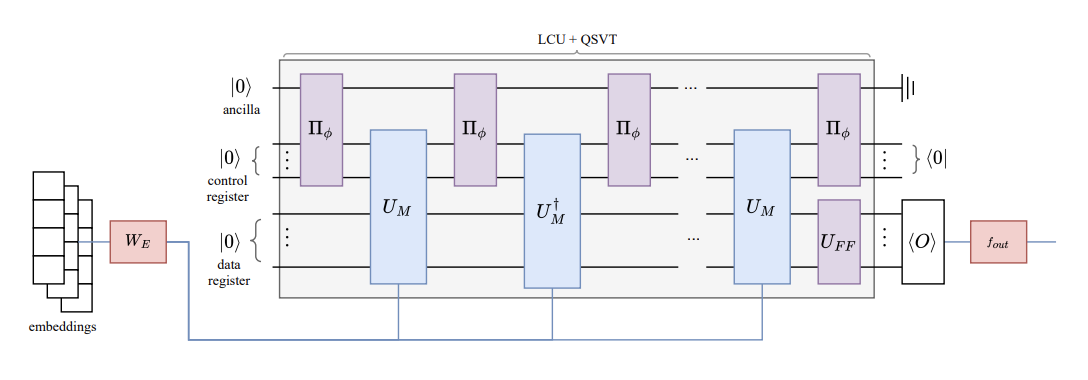

In this case, Quixer uses quantum algorithmic primitives including the Linear Combination of Unitaries (LCU) and the Quantum Singular Value Transformation (QSVT). According to the arXiv paper: “Quixer operates by preparing a superposition of tokens and applying a trainable non-linear transformation to this mix.”

This mechanism allows Quixer to achieve efficient and effective quantum transformations tailored for language modeling tasks.
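To make the “superposition of tokens” idea concrete, here is a small classical linear-algebra sketch, not the paper’s circuit. It assumes each token is represented by its own unitary (here simply a random one), forms a weighted LCU-style sum of the context tokens’ unitaries, applies a polynomial in that matrix as a stand-in for the trainable non-linear step the paper builds with QSVT, and reads out a probability distribution. The vocabulary size, token ids, coefficients `b` and polynomial coefficients `c` are all hypothetical, and evaluating the polynomial directly with NumPy is an assumption about its form rather than a reproduction of how the paper implements it on hardware.

```python
import numpy as np

rng = np.random.default_rng(42)
n_qubits = 2
dim = 2 ** n_qubits

def random_unitary(dim, rng):
    # Haar-random unitary via QR of a complex Gaussian matrix; a stand-in for
    # the trainable parameterized circuit that would encode each token.
    z = (rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))  # fix column phases so the result is Haar-distributed

# Hypothetical vocabulary of 5 tokens, each mapped to its own unitary.
token_unitaries = [random_unitary(dim, rng) for _ in range(5)]
context = [0, 3, 1, 4]  # illustrative token ids in the context window

# LCU-style step: a weighted sum ("mix") of the context tokens' unitaries.
b = rng.normal(size=len(context)) + 1j * rng.normal(size=len(context))  # trainable in a real model
M = sum(bj * token_unitaries[t] for bj, t in zip(b, context))
M = M / np.sum(np.abs(b))  # an LCU realizes M scaled by 1/||b||_1, so ||M|| <= 1

# Non-linear step, evaluated classically as P(M) = sum_k c_k M^k.
c = np.array([0.2, 0.5, 0.3])  # hypothetical polynomial coefficients, sum |c_k| = 1
P = sum(ck * np.linalg.matrix_power(M, k) for k, ck in enumerate(c))

# Apply to |0...0> and renormalize; on hardware this corresponds to
# post-selecting ancilla qubits, which succeeds only with some probability.
psi0 = np.zeros(dim, dtype=complex)
psi0[0] = 1.0
out = P @ psi0
norm = np.linalg.norm(out)
state = out / norm
probs = np.abs(state) ** 2  # measurement distribution over basis states
print(np.round(probs, 3), "sum =", round(float(probs.sum()), 6))
```

Dividing by the 1-norm of the coefficients keeps every matrix in the sketch a contraction, mirroring the normalization constraints that block-encoding techniques such as LCU and QSVT impose in practice.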

One of the key achievements of the Quixer model is its application to a practical language modeling task using the Penn Treebank dataset, which is a dataset of English text annotated with syntactic and grammatical structure, commonly used to train and evaluate natural language processing models. According to the team, this marks the first instance of a quantum transformer being applied to a real-world dataset, producing results that are competitive with classical baselines.
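Language-modeling results on Penn Treebank are conventionally compared using perplexity: the exponential of the average negative log-likelihood a model assigns to the tokens that actually occur (lower is better). The sketch below shows the calculation on made-up probabilities; it illustrates the metric only and does not reproduce any numbers from the paper.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp(mean negative log-likelihood) of the probabilities a
    model assigned to each correct next token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

# Hypothetical probabilities a model assigned to each correct next token.
probs = [0.25, 0.5, 0.125, 0.5]
print(round(perplexity(probs), 3))  # 3.364
```

A useful sanity check: a model that spreads probability uniformly over a vocabulary of V words has perplexity exactly V, so perplexity can be read as an “effective number of choices” per token.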

The paper notes: “Our primary contributions are the first result for a quantum transformer model applied to a practical language modelling task, along with a novel quantum attention mechanism built using the LCU and QSVT.”

Overcoming Limitations and Looking Forward

While the results from Quixer are promising, the researchers acknowledge certain limitations. Quantum models differ inherently from classical ones, which makes direct comparisons challenging.

The paper explains, “Comparisons between classical models and quantum counterparts can be difficult due to their inherently different data representations. Quantum models are limited to low-dimensional parameterization of unitary matrices on exponential-sized complex-valued systems.”
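The mismatch the paper describes is easy to quantify. An n-qubit system lives in a 2^n-dimensional complex space, and a general unitary on it has 4^n real degrees of freedom, while a practical parameterized circuit has only a handful of trainable angles. The sketch below compares the two; the layered circuit with three rotation angles per qubit per layer is a hypothetical example, not Quixer’s actual architecture.

```python
def full_unitary_params(n_qubits: int) -> int:
    # The unitary group U(2^n) has (2^n)^2 = 4^n real degrees of freedom.
    return 4 ** n_qubits

def ansatz_params(n_qubits: int, layers: int, angles_per_qubit: int = 3) -> int:
    # Hypothetical layered circuit: each layer applies a general single-qubit
    # rotation (3 angles) to every qubit, followed by fixed entangling gates.
    return n_qubits * layers * angles_per_qubit

for n in (4, 8, 12):
    print(f"{n} qubits: state dim {2 ** n}, "
          f"general unitary {full_unitary_params(n):,} params, "
          f"4-layer circuit {ansatz_params(n, 4)} params")
```

The parameter count of the circuit grows linearly with qubits while the space it acts on grows exponentially, which is the “low-dimensional parameterization” of “exponential-sized” systems the quote refers to.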

Additionally, today’s quantum models remain relatively small, because classically simulating large quantum systems becomes exponentially more expensive as qubits are added. Despite these challenges, Quixer’s success on a real-world task is a milestone in itself. The researchers suggest that future work will focus on scaling up these models and exploring new instances of the framework that balance gradient magnitudes and classical simulability. In other words, the team is looking for the best trade-off between trainability (gradients large enough for the model to learn effectively) and classical simulability (how readily the model can be reproduced on classical computers).
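The cost of classical simulation can be estimated with simple arithmetic: a full state-vector simulator stores 2^n complex amplitudes, so memory doubles with every added qubit. The sketch below assumes double-precision complex numbers (16 bytes per amplitude) and ignores any simulator overhead, so real requirements would be somewhat higher.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    # A full state vector holds 2^n complex amplitudes; complex128 uses 16 bytes each.
    return (2 ** n_qubits) * bytes_per_amplitude

for n in (20, 30, 40):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits -> {gib:,.3f} GiB")
```

Twenty qubits fit in a few megabytes, thirty need 16 GiB, and forty already need about 16 TiB, which is why simulated quantum models are still kept small.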

Ilyas Khan, founder and Chief Product Officer, stated: “Our head of AI, Dr Steve Clark, has been working on the core foundations that need to be built in order for quantum computing to propel AI systems to a whole different level from the already impressive position they occupy today. This is a long term effort – we have been working on this for over 5 years already – and it has to be noted that effectiveness will have to be based on the quality of our quantum processor, the fine calibration of our middleware, and of course the scaling of our system which everyone now knows is possible. We are very excited to share all of these updates with the quantum computing and AI community as a whole, for the benefit of all.”

The Broader Impact of Quixer

The introduction of Quixer not only advances the field of quantum AI but also points to the practical potential of integrating quantum computing with AI technologies. As the LinkedIn post succinctly puts it, “This paper also marks the first quantum machine learning model applied to language on a realistic rather than toy dataset. This is a truly exciting advance for anyone interested in the union of quantum computing and artificial intelligence.”

The potential applications of Quixer extend beyond language modeling to other areas requiring complex data analysis and pattern recognition. As quantum computing continues to evolve, the principles demonstrated by Quixer could lead to significant breakthroughs in various domains, from natural language processing to advanced scientific research.

Quantinuum’s announcement also hints at future developments, promising a “summer of important advances in quantum computing.”

The Quantinuum team writes that its optimism is grounded in a recent achievement in which the company’s System Model H2 surpassed Google’s quantum supremacy benchmarks, a sign that Quantinuum is progressing rapidly toward more advanced quantum computing capabilities.

In addition to Clark, the Quantinuum team includes lead author Nikhil Khatri, Gabriel Matos and Luuk Coopmans.

Read the paper on arXiv for a deeper technical understanding of Quixer.