Insider Brief

- Scientists have taken a step towards better understanding the complex behavior of quantum systems by directly observing how quantum entanglement scales across different energy levels.

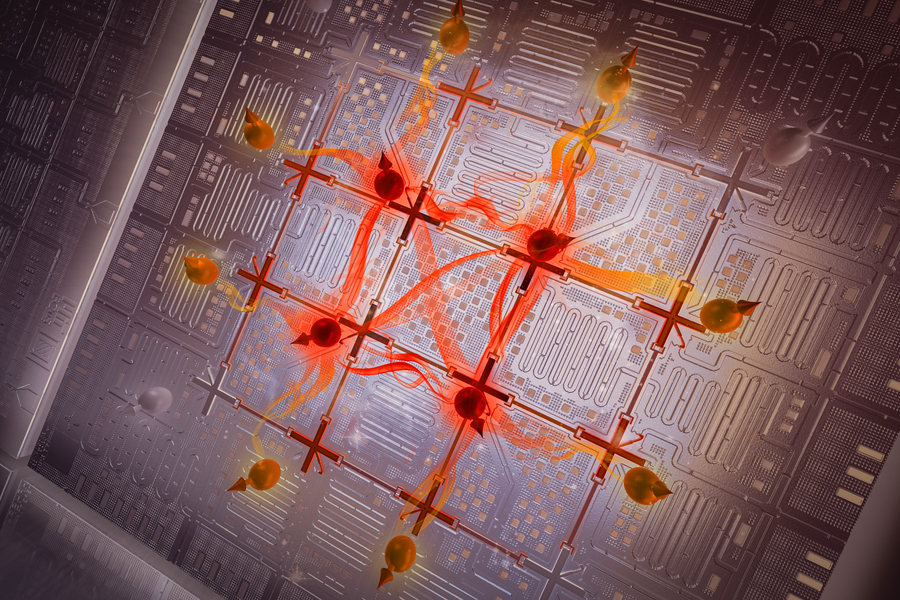

- The MIT-led team performed the experiments in a programmable superconducting quantum processor.

- The findings were published in Nature and could help aid in benchmarking and validating future generations of quantum computers.

- Image: MIT News (Credit — Eli Krantz, Krantz NanoArt)

An MIT-led team of scientists has taken a step towards better understanding the complex behavior of quantum systems by directly observing how quantum entanglement scales across different energy levels in a programmable superconducting quantum processor.

The findings, published in Nature, provide new insights into the emergence of thermodynamic properties from the quantum world and could help establish benchmarks for quantum computers, among other practical outcomes.

Entanglement, in which particles exhibit counterintuitive correlations regardless of the distance between them, is a critical feature of quantum mechanics and key to developing practical quantum computers. However, pinning down how entanglement propagates and contributes to the collective behavior of large quantum systems has proven exceptionally challenging. Conventional computers are of limited help, because the memory needed to track a quantum system grows exponentially with the number of particles it contains.

To confront this roadblock, researchers from MIT, MIT Lincoln Laboratory, Wellesley College and the University of Maryland turned to quantum hardware itself. They built a 4×4 grid of superconducting qubits, cooled to cryogenic temperatures, to emulate an idealized two-dimensional quantum system known as the hard-core Bose-Hubbard lattice model. ("Hard-core" refers to the strong particle-particle interactions that restrict each lattice site to at most one particle.)
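For readers who want the model in symbols, a textbook form of the Bose-Hubbard Hamiltonian is sketched below; the hopping rates $J_{ij}$ between neighboring sites, the on-site energies $\varepsilon_i$ and the interaction strength $U$ are standard notation, not parameter values quoted from the paper, and sign conventions vary between references. Taking $U \to \infty$ forbids double occupancy, which is the "hard-core" limit. In the usual mapping onto a qubit array, each qubit is one lattice site, with $|0\rangle$ meaning empty and $|1\rangle$ meaning occupied.

$$
H = \sum_{\langle i,j \rangle} J_{ij}\left(a_i^\dagger a_j + a_j^\dagger a_i\right) + \frac{U}{2}\sum_i n_i\left(n_i - 1\right) + \sum_i \varepsilon_i\, n_i,
\qquad n_i = a_i^\dagger a_i,
\qquad U \to \infty \;\Rightarrow\; n_i \in \{0, 1\}
$$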

“Here, we are demonstrating that we can utilize the emerging quantum processors as a tool to further our understanding of physics,” Amir H. Karamlou, the lead author, told MIT News. “While everything we did in this experiment was on a scale which can still be simulated on a classical computer, we have a good roadmap for scaling this technology and methodology beyond the reach of classical computing.”

By simultaneously driving all 16 qubits, the team prepared entangled quantum states spanning different energy regimes of the simulated lattice model. Analyzing correlations between the qubits then revealed a sharp difference in how entanglement scaled, depending on the energy of the state.

For states near the middle of the energy spectrum, entanglement exhibited "volume-law" scaling: the entanglement of a subsystem grew in proportion to its size, that is, its number of qubits. This implies that quantum information is spread throughout the system in a complex way. In contrast, toward the highest and lowest energies, the data showed "area-law" scaling, in which entanglement grows only with the size of the boundary between a subsystem and the rest of the system.
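To make the two scaling laws concrete, here is a toy Python sketch. It is not the team's analysis. It computes the second Rényi entropy, $S_2 = -\log_2 \mathrm{Tr}\,\rho_A^2$, of the first k qubits of a hypothetical 10-qubit chain for two illustrative states. A random pure state, used as a rough stand-in for a typical mid-spectrum state, has entropy that grows roughly in proportion to k (volume law). A chain of nearest-neighbor Bell pairs never exceeds 1 bit, because only the single pair straddling the cut contributes (area law in one dimension, where the boundary is a single point). The function names, the choice of Rényi entropy and the 1D geometry are assumptions made for illustration; the experiment itself used a 4×4 grid.

```python
import math
import random


def renyi2_entropy(state, n_qubits, n_sub):
    """Second Renyi entropy, in bits, of the first n_sub qubits of a pure state.

    `state` is a list of 2**n_qubits amplitudes; qubit 0 is the most
    significant bit of the basis-state index.
    """
    dim_a = 2 ** n_sub
    dim_b = 2 ** (n_qubits - n_sub)
    # View the amplitudes as a dim_a x dim_b matrix psi[i][j] (subsystem A x rest B).
    psi = [state[i * dim_b:(i + 1) * dim_b] for i in range(dim_a)]
    # Purity Tr(rho_A^2), with rho_A = psi psi^dagger. rho_A is Hermitian,
    # so Tr(rho_A^2) equals the sum of |rho_A[i][k]|^2 over all entries.
    purity = 0.0
    for i in range(dim_a):
        for k in range(dim_a):
            rho_ik = sum(a * b.conjugate() for a, b in zip(psi[i], psi[k]))
            purity += abs(rho_ik) ** 2
    return -math.log2(purity)


def random_state(n_qubits, rng):
    """Haar-random pure state: i.i.d. complex Gaussian amplitudes, normalized."""
    amps = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(2 ** n_qubits)]
    norm = math.sqrt(sum(abs(a) ** 2 for a in amps))
    return [a / norm for a in amps]


def dimer_state(n_qubits):
    """Bell pairs on qubits (0,1), (2,3), ...; n_qubits is assumed to be even."""
    bell = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]
    state = [1.0]
    for _ in range(n_qubits // 2):
        # Tensor product: earlier qubits become the more significant bits.
        state = [a * b for a in state for b in bell]
    return state


if __name__ == "__main__":
    rng = random.Random(0)
    n = 10
    rand, dimer = random_state(n, rng), dimer_state(n)
    print("k  random  dimer")
    for k in range(1, n // 2 + 1):
        print(k, round(renyi2_entropy(rand, n, k), 2), round(renyi2_entropy(dimer, n, k), 2))
```

The Rényi-2 entropy is used here only because it needs nothing more than the purity of $\rho_A$, which keeps the sketch dependency-free; the von Neumann entropy would require diagonalizing $\rho_A$. For the Bell-pair chain, cuts between pairs give 0 bits and cuts through a pair give 1 bit, whatever the value of k.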

“While [we] have not yet fully abstracted the role that entanglement plays in quantum algorithms, we do know that generating volume-law entanglement is a key ingredient to realizing a quantum advantage,” William D. Oliver, a senior author on the study, explained to the university news service.

Karamlou elaborated: “As you increase the complexity of your quantum system, it becomes increasingly difficult to simulate it with conventional computers. If I am trying to fully keep track of a system with 80 qubits, for instance, then I would need to store more information than what we have stored throughout the history of humanity.”
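Karamlou's point follows from simple arithmetic. Fully describing an n-qubit pure state takes $2^n$ complex amplitudes. The short Python sketch below assumes a dense state vector stored as double-precision complex numbers (16 bytes per amplitude); the function name and that storage format are illustrative assumptions, not the researchers' simulation method.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to store every amplitude of an n-qubit pure state.

    Assumes a dense vector of 2**n complex amplitudes, each stored as a
    double-precision complex number (two 8-byte floats = 16 bytes).
    """
    return (2 ** n_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    for n in (16, 40, 80):
        print(f"{n:>2} qubits: {state_vector_bytes(n):.3e} bytes")
```

Under these assumptions, the 16-qubit device's state fits in $2^{20}$ bytes (1 MiB), consistent with the team's remark that its experiment can still be simulated classically. An 80-qubit state, by contrast, needs $2^{84} \approx 1.9 \times 10^{25}$ bytes, and every added qubit doubles the requirement.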

The ability to experimentally map out this crossover between area and volume law scaling directly probes how thermodynamic quantities like temperature and entropy emerge from the quantum realm. It also provides a testbed for developing intuition about large-scale quantum dynamics that classical computers cannot replicate.

“Our results establish a powerful new methodology for using current and near-term quantum processors to explore foundational questions in many-body quantum physics,” said Oliver. “It’s an exciting step towards understanding the physics underlying quantum computing advantages.”

While still modest in size, the team’s 16-qubit lattice represents a substantial advance over previous explorations of entanglement scaling, which were limited to studying smaller numbers of qubits. Crucially, their approach can readily extend to larger arrays as quantum hardware continues its rapid development.

The findings not only illuminate fundamental aspects of quantum mechanics, but could also aid in benchmarking and validating future generations of quantum computers tasked with solving currently intractable problems, the researchers said.