Exposing the emptiness of the h-word: how claims of hyperbole ring hollow; and how nobody, not even today’s experts, can possibly know the limits of Quantum.

By Shai Phillips

President, PSIRCH

In a monthly segment in The Quantum Insider, PSIRCH’s President, Shai Phillips, will conduct a first-of-its-kind audit of broad industry-internal accusations of exaggeration in quantum computing and associated fields. How much truth there is to them will be the focus of this series.

“Quantum computation will be the first technology that allows useful tasks to be performed in collaboration between parallel universes.”

David Deutsch – Professor of Physics, University of Oxford, and foremost authority in pioneering some of the most significant advances in quantum computation, including the Deutsch-Jozsa algorithm: one of the first exponentially faster quantum algorithms over classical computing.

- Expert views in Quantum support the outlook that quantum computing is set to change the world, meaning far-reaching generalized projections regarding Quantum are valid and not hyperbolic.

- There is strong evidence quantum computing will aid in artificial intelligence/ machine learning, including with exponential speedups when quantum data is involved, in training AI, and in generalizing, thus some skeptical statements by experts do not tell the full story, and can be misleading to various audiences.

- Even expert claims of hyperbole, largely due to the impracticality of qualifying all remarks, can be inaccurate, ironically hyperbolic, and have the potential to retard progress in Quantum. Thus, a cessation of such claims will be beneficial.

Part II – The Ironic Hyperbole of the H-word

For various reasons, many quantum experts who participated in conversations that led to the findings and discussions in this series were not able to be quoted by name. I am truly grateful to these unsung heroes for their valuable help in getting to the bottom of some highly complex subject matter. This series is dedicated to them.

COMPUTATIONAL COMPLEXITY THEORY

In last month’s segment, a deeper dive into complexity theory was promised, in order to better evaluate the veracity of claims of exaggeration in quantum computing. This is exactly what we will do here in Part II of this article series, but first a few quick caveats:

Later in this series, we will get into the limitations of complexity theory, and question if they may be too great to consider this framework in any way dispositive. We will also challenge whether complexity theory is the right rubric as we enter a new paradigm of computing, and consider others in play, which may even serve as a superior lens. Lastly, we will consider evidence that current computer scientists and adjacent experts in the field do not seem to put much stock in this theoretical area today, and ask whether this means it is considered less useful than it might appear. All that is to say, computational complexity theory is far from perfect, and while we will explore it now, that fact should be kept in mind. Also important to note is complexity theory is many oceans deep and wide. The overview below and subsequent debate are a purposefully highly simplified surface-level discussion, so as to be intelligible even to non-technical readers. Needless to say, many of these points can be picked apart the deeper one goes, but there is no room for that here. Hopefully this introduction is nonetheless a helpful beginning. If it’s too “in-the-weeds” for anyone, feel free to skip ahead and simply use it as a reference…

The field of complexity theory, central to the usefulness debate in quantum computing (whether it should be or not!), is concerned with: distinguishing the different types of computational problems and their degrees of relative difficulty; algorithms (which are essentially sets of instructions) for solving problems; and Turing machines (theoretical “simplified” abstractions of computers) as instruments used to implement algorithms.

Algorithms are either: deterministic (a predictable standard we know well); randomized, or probabilistic (powerful but less reliable: e.g. quantum walks, Monte Carlo method, with heavy application in Finance). The idea of a nondeterministic model of computation exists too (with nondeterministic Turing machines), but it is purely theoretical, and can’t be used in practice. If it could, it would be revolutionary, as it allows multiple computational paths simultaneously – a trait often mistakenly attributed to quantum computers themselves.

Problems to be solved can be framed as either decision problems (yes/no answers), or function problems (solutions beyond yes/no outputs). If a problem is ‘intractable’, there is no efficient algorithm for solving it, though “brute-force” may inefficiently tackle some instances of it. An “exponentially hard” problem rapidly becomes increasingly harder as it scales, until unlikely to ever be solved. Efficient algorithms ideally solve all instances of a given problem, and when they do, they can speed up the process to varying degrees: super-linearly; polynomially; quadratically… But the holy grail is an exponential speed-up, as this is the only way to render an exponentially hard problem fully solvable.

Another important construct, crucial to complexity theory, is the idea of an oracle – a subroutine that takes some input and returns an output when queried in any instance of a given problem. The opacity with which it does this, when theorizing, has led to its being known as a “black box” operation. Taking care to note that an “oracle” can mean slightly different things in different contexts: In practice, the desired function must be encoded into the oracle, whereas in query complexity and theoretical computer science, it may be uniquely notional. Still, it’s a helpful tool and often the only way to distinguish between different classes of problems (based on varying performances of algorithms given that resource). When it does so, this is known as “oracle separation”. Problem classes are often characterized via a technique known as ‘reduction’, but we’ll avoid the topic of reducing to problem classes here, as that could get complicated. Instead, here are some simplified definitions of various classes of problems that arise in this series:

- P (polynomial time): decision problems that a classical computer can solve in polynomial time (you can think of this simply as taking a “reasonable amount of time”, even when the problem scales to worst case scenarios).

- NP (non-deterministic polynomial time): decision problems that a classical computer can verify [but not necessarily solve] in polynomial time.

- NP-Complete: the hardest type of NP decision problem.

- NP-Hard: problems that are at least as hard as NP-complete, sometimes overlap with NP-Complete, and may be either decision problems or function problems.

- PH (polynomial hierarchy) – a union of all classes in the polynomial hierarchy.

- BPP (bounded error probabilistic polynomial time) – decision problems classical computers can often solve (2/3) with randomized algorithms in polynomial time.

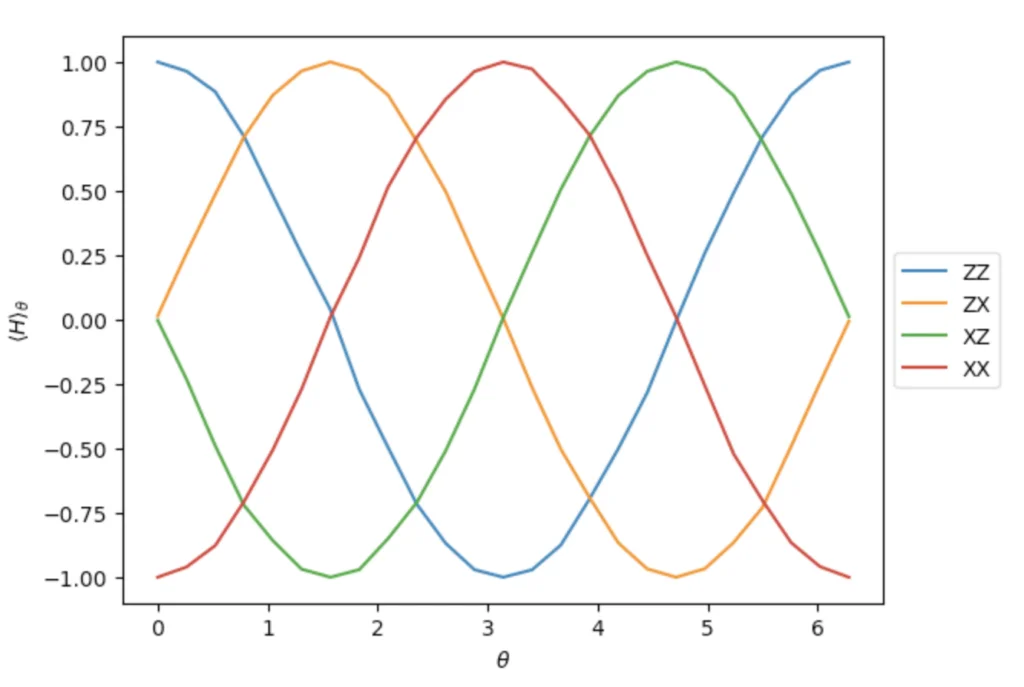

- BQP (bounded error quantum polynomial time) – decision problems a quantum computer can often solve (2/3) in polynomial time.

- EQP (exact quantum polynomial time) – decision problems a quantum computer can always solve in polynomial time.

- QMA (Quantum Merlin Arthur) –sort of like the quantum analogue of NP [above].

- PSPACE (polynomial space): decision problems a classical computer can solve in “a reasonable amount” of space.

- #P: a “counting problem” that asks how many solutions there are to a question.

- GapP: a counting problem asking how many more true results exist vs. false ones.

BQP covers P, some of NP, and more. However, as of now, there is no real compelling evidence that a quantum computer may solve NP-complete nor NP-hard problems in polynomial time – at least, not in the most stringent sense… we’ll get to that.

EXAGGERATED CLAIMS OF EXAGGERATION

In 2021, not much had changed in Aaronson’s view since his 2008 popular article. At QCWare’s Q2B conference, the professor’s frustration persisted at inaccuracies about what current theory says regarding quantum computation. In particular, he voiced his consternation that many, especially those outside of academia but rather in industry or financing, continued to claim that the “Travelling Salesman Problem” (or “TSP”) will see exponential speedups on account of quantum computers. “Wrong”, says Aaronson, and that’s “not an opinion, it’s a fact.” The esteemed professor was seemingly objecting on grounds that TSP is an NP-hard or NP-complete problem, depending how it’s framed, so such a claim had no basis in reality. Before we examine whether Aaronson’s claim of the h-word was justified, we’d do well first to ask if it’s truly “a fact” as he claimed, because if it’s not, Aaronson himself was ironically being hyperbolic, but in this case the “hype”, or exaggeration, was to the negative, not to the positive. That’s right, it cuts both ways.

Not to stand on ceremony, the most straightforward answer to this question is: No, it’s not a fact. Technically speaking that’s not the case. We know this for two reasons: First, as there has been no formal proof of it; and second, because Aaronson said so himself! In his 2008 mainstream article, he remarked we can “…not rule out the possibility that efficient quantum algorithms for NP-complete or even harder problems are waiting to be discovered.” In other words, Aaronson knew it was not a fact, but nonetheless chose to present the information in a more negative light than necessary. That’s the real “hype” right there – exaggeration in service of the most negative, or pessimistic, viewpoint. That is, unless anything has changed on this issue since 2008, and that’s very doubtful.

With the technical answer out of the way, we must nonetheless balance it with the real expectations of present day: As it stands, there is near total consensus in the quantum community that quantum computers will not be able to solve NP-complete or NP-hard problems in polynomial time. Aaronson was clearly right on the point of agreement. To go even further in supporting his position, we must also consider the burden of proof – if anyone is to claim something as far-reaching as solving NP-complete, it is on them to provide either proof or at least extremely compelling evidence for it, and that has not happened. As Richard Dawkins would say, we can’t prove there’s no invisible spaghetti monster in the room, but if we try to disprove every wild notion that has no basis, we will be chasing wild geese forever. In that respect, just as we might consider it “a fact” that there’s no invisible spaghetti monster around, Aaronson could be deemed right that it’s a fact quantum computers won’t be able to solve NP-hard problems like TSP in a reasonable timeframe. So, as we can see, there is more than one way to look at this, depending on your philosophical bent. It’s also worth noting there is likewise no proof a classical algorithm may not someday solve NP-complete, so while quantum computing provides another approach to tackle it, we really must admit there is no basis today for the claim, or otherwise confess our ignorance on the entire matter, or even question the sufficiency of the complexity theory rubric itself. That’s to say, by asking: “can quantum (or classical) computing solve NP-complete?” – is that even the most sensible question, or is there another way to look at it, a better way to approach the issue? That’s a matter for more qualified minds, but it does seem to be a sentiment echoed in the industry.

Still, that’s not the end of the story. We must also consider what exactly Aaronson means when he says this. In computer scientist talk, to ‘solve’ a class of problem means to solve any and all instances of that problem, no matter how big or complicated they get as they scale up. Every and any such problem of any and all sizes must be solved for this to hold true. Not only that, but no matter how big the problem gets, it must be solved with total precision, even in worst case scenarios. It’s possible that many audiences don’t realize the stringency and rigor of this language, and might therefore misinterpret Aaronson’s condemnation to mean the complete inefficacy, in this regard, of quantum computing…

Little might they know that experts believe certain problem instances of these types of problems may indeed be solvable, or that approximate solutions are often so apt, as to make precise solutions for worst case scenarios all but unnecessary. Nor might such audiences consider the role that heuristics could play. We will cover these topics later, and also look at how the only way in which they might be possible is by exploiting the structure of a problem – another assertion Aaronson has made on various occasions. For now, back to the claim that some people allegedly believed that quantum computing could offer exponential speedups for TSP (basically “solving” an NP-complete problem):

MISUNDERSTANDING IS NOT HYPERBOLE

I had not heard this claim before, but I believe it’s out there. Why? Because it’s such an easy mistake to make! Quantum computers are notoriously effective at optimization problems in particular, so just to start, there is already a possibility of confusion, given that TSP can be framed as an optimization problem.

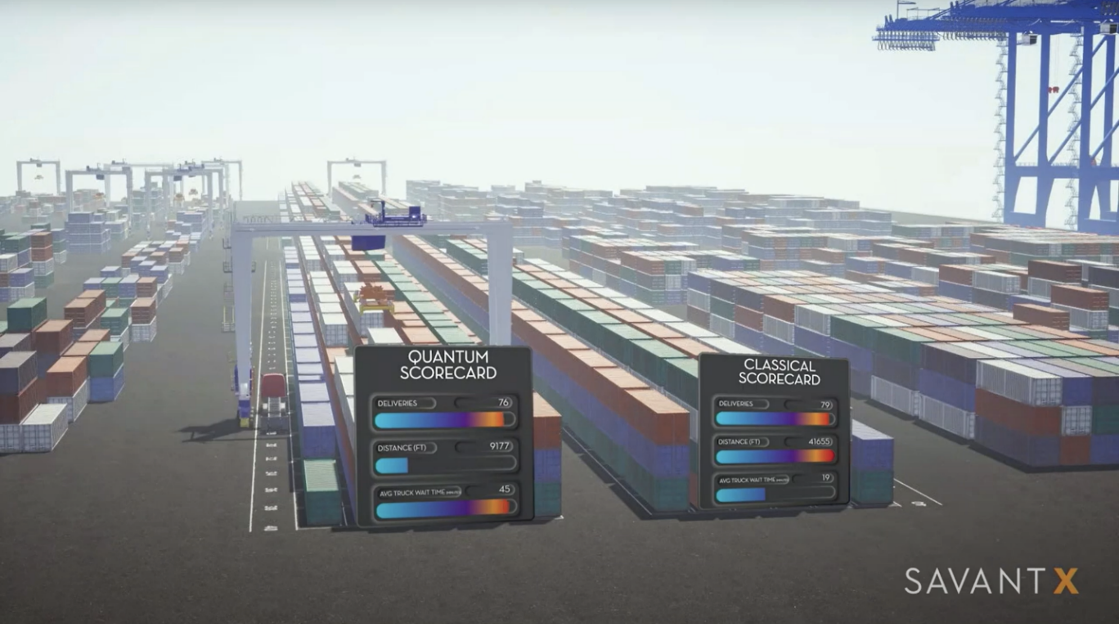

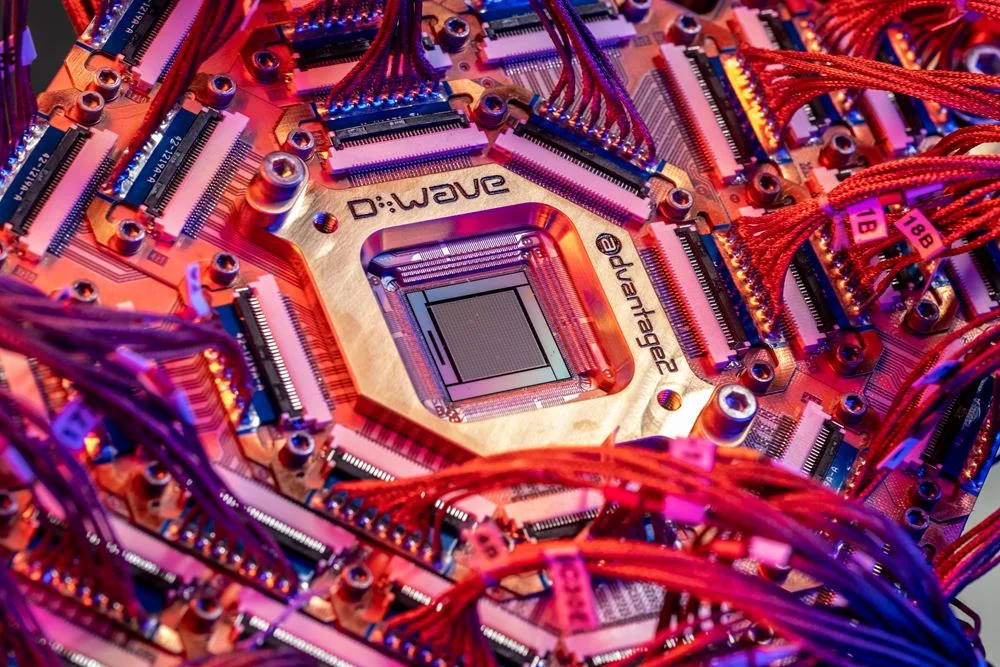

Going further, quantum computers can even be used in broaching TSP itself! To better demonstrate this, I’ll borrow from a well-credentialed quantum vlogger by the name of Anastasia Marchenkova, who interviewed Aaronson at this same conference the next year, and who has alluded to this multiple times in her videos (which, by the way, are highly recommend for up-and-coming quantum enthusiasts). Anastasia has explained how the QUBO (or “Quadratic Unconstrained Binary Optimization”) model is “used for optimization problems like Travelling Salesman” and she goes on to discuss adeptness of quantum annealers in “translating these… problems effectively onto quantum hardware… by translating the graph onto the topology of the quantum chip… then construct[ing] the Hamiltonian [total energy of the system] needed to minimize”.

It bears mentioning that Marchenkova is referring to adiabatic quantum computers, and not the universal gate-based systems most companies are developing. D-Wave is really the only company for annealers. Nonetheless, the point remains that quantum computers and TSP are interlinked quite solidly in capability discussions. Universal quantum computers are often touted as strong at optimization problems too, though likely not yet to the same level as annealers. It’s also important to note that Anastasia is neither incorrect, nor contradicting Aaronson. It’s possible for a quantum computer to be helpful in solving or approximating various instances of TSP without giving rise to an algorithm that could solve any instance of it, even as it scales exponentially. If that happened, it truly would be a breakthrough. Yet, even in these minor instances, Aaronson would not let us get away without asking: Does it exceed the performance of classical computation? And, as such, is the use of a quantum computer even justified?

In actuality, classical computers are already pretty good at approximating solutions for TSP, as I was informed by a former exec at Google Quantum AI, because of the facet of locality. We must also consider another type of popular quantum algorithm: QAOA (or “Quantum Approximate Optimization Algorithm”) – these will not give you any precise solutions, but will come so close as to make it a moot point in many cases, with other cases oft being so far-fetched as to be immaterial to useful application. Approximation is itself often a sufficiently enhanced solution, without the need for absolute precision.

If you thought quantum computers exponentially speed up TSP, unless you’re in the field, you can’t be faulted and should be forgiven. While unlikely, they may someday achieve a gargantuan feat like this, for reasons elucidated later. Until then, dispelling people of their error, and inducting them to the finer nuances, is certainly meritorious, but accusing them of the h-word merely for such an understandable mix-up is unfair, unwarranted, and the first sign of how our industry’s “hype police” has gone too far in weaponizing the h-word.

(Tune in next time, as we behold the power of early breakthroughs in quantum.)

For more market insights, check out our latest quantum computing news here.