Insider Brief

- Quantinuum researchers have published a paper that signals a shift toward artificial intelligence (AI) frameworks that users can understand and trust.

- The team is addressing concerns about the opacity of AI decision-making, often referred to as the "black box" problem.

- The team uses category theory as a “Rosetta stone” for understanding how AI systems reach their decisions.

Quantinuum researchers are working toward a new generation of artificial intelligence (AI) systems designed to be both interpretable and accountable, challenging the opaque “black box” nature of current AI technologies, according to a company blog post.

The team, led by Dr. Stephen Clark, Head of AI at Quantinuum, has published a paper on the preprint server arXiv that describes a shift toward AI frameworks that users can understand and trust, addressing long-standing concerns about how AI systems make decisions and what those decisions imply.

At the heart of the problem is the question of how machines learn and make decisions. That process has remained largely opaque because of the complexity of artificial neural networks, computer models inspired by the human brain. This opacity has given rise to what is known as the “interpretability” problem in AI, and it raises concerns about the risks AI poses when its workings and reasoning cannot be understood.

The team writes: “At Quantinuum we have been working on this issue for some time – and we began way before AI systems such as generative LLM’s became fashionable. In our AI team based out of Oxford, we have been focused on the development of frameworks for ‘compositional models’ of artificial intelligence. Our intentions and aims are to build artificial intelligence that is interpretable and accountable. We do this in part by using a type of math called ‘category theory’ that has been used in everything from classical computer programming to neuroscience.”

Quantinuum’s approach to interpretability rests on category theory, a branch of mathematics known for its ability to bridge fields as different as classical computer programming and neuroscience. Mathematician John Baez has described category theory as a “Rosetta stone,” and it offers a promising framework for breaking down and understanding the systems and processes that underlie AI reasoning.

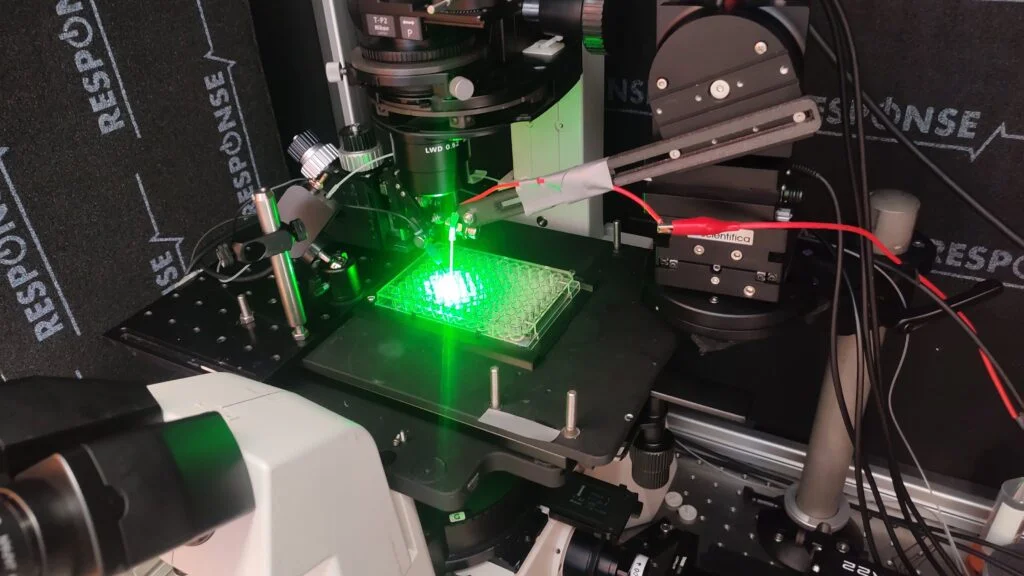

The team’s recent paper applies compositional models and category theory to make AI more transparent, focusing on image recognition tasks. Using these models, the researchers show how machines, including quantum computers, can learn concepts such as shape, color, size, and position in a way that is both interpretable and accountable.

The researchers stress that this is not merely a theoretical exercise: the work is rooted in a vision for AI that goes beyond the current fascination with generative large language models (LLMs). By focusing on foundational principles that promote clarity and responsibility, the work is a significant step toward addressing the safety concerns associated with AI systems. The ability of users to understand why an AI system makes a given decision will be critical to ensuring that AI is a force for good and to reducing the risk of unintended harm.

Ilyas Khan, a founder of Quantinuum and its Chief Product Officer and Vice Chairman, emphasized the timeliness and importance of the research, suggesting that its impact on future AI systems will be both significant and imminent.

“In the current environment with accountability and transparency being talked about in artificial intelligence, we have a body of research that really matters, and which will fundamentally affect the next generation of AI systems. This will happen sooner than many anticipate,” writes Khan.

The implications of Quantinuum’s work extend beyond academic interest. As quantum computing matures and offers greater computational power, transparent and interpretable AI models could find a wide range of applications. From making AI-driven decisions more precise and reliable to fostering trust and collaboration between humans and machines, the shift toward transparent AI could reshape the technology landscape.

The paper is part of a larger body of work in quantum computing and artificial intelligence. As the research community continues to explore the potential of this approach, the team writes, the prospect of AI systems that are not only powerful but also comprehensible and accountable becomes increasingly tangible. Their vision is a new generation of AI that can be integrated into society with confidence and trust.
