Stuart Woods is the COO of Quantum Exponential, the UK’s first enterprise venture capital fund focused on quantum technology. He has 30 years’ experience leading technology companies from startups to publicly traded businesses. Stuart’s deep tech portfolio comprises eleven disruptive technologies and five acquisitions.

Mandy Birch has decades of executive experience in the quantum industry and in national security, where she has built leaders and multinational teams who unlock the potential of disruptive ideas. She recently founded TreQ, a company expediting next-generation computing clusters that include quantum processors. Mandy holds degrees from the US Air Force Academy, MIT, and the Fletcher School of Law & Diplomacy.

With 2023, the year of the national quantum strategy, behind us and 2025 dubbed the International Year of Quantum Science and Technology, the stage is set for 2024 to build upon the momentum catalyzed over the past 12 months. With major quantum initiatives launched and funding committed by nations across the globe in 2023, this year promises exciting developments from industry and governments.

Success was not all at the national level either. Just within Quantum Exponential’s portfolio, we saw extraordinary achievements. Oxford Quantum Circuits launched the world’s first enterprise-ready quantum computing platform and announced that SBI Investment, Japan’s premier VC fund, is leading its $100m round. QLM Tec introduced the world’s first quantum gas lidar product for continuous methane emissions monitoring, with significant implications for the Biden Administration’s promise to cut its methane emissions by 80% in the next 15 years. Aegiq partnered with Honeywell to develop its small satellite technology for safer, faster communications. Siloton is looking to raise capital for its at-home diagnostic imaging device for age-related macular degeneration.

In the next few years, we need a combination of: continued government backing and ecosystem validation; savvy specialist VCs who invest in the right companies at the right time and spur their success; and intelligent mergers and acquisitions to encourage radical innovation and help the ecosystem grow in a mutually beneficial way – facilitating returns for investors and founders while expediting technological advances. Well-managed consolidation is especially crucial because many companies are – and should be – specializing in only particular parts of the stack. Many “quantum computing” companies specialize in component technologies that will comprise the supply chain of future high-performance quantum computers.

Bringing all these specialized innovations together to create a fault-tolerant quantum computer is a systems engineering challenge, not merely an assembly exercise. The sooner we recognize that not every quantum processor or related component company should be a full-stack quantum computing company, the better. Anyone remember JDSU? They were the component company that fueled the dot-com and telecom booms. Companies need to start working together to merge assets across the ecosystem and piece together these elemental sub-architectures.

To discuss the short-term future of quantum computing, specifically regarding how the landscape may shift and consolidate, I had the pleasure of speaking with Mandy Birch. Her achievements include nomination as a general officer in the US Air Force, serving as an officer at Rigetti, and advising the UK’s NQCC. Most recently she founded TreQ, a startup focused on scaling the quantum ecosystem by solving systems engineering and manufacturing challenges. I have known Mandy for years. She is well-grounded, experienced and positioned for success.

Stuart Woods: How do you see the topography of the industry shifting over the next few years?

Mandy Birch: We’ll see consolidation for sure. I suspect there will be significant activity in a 2-3 year timeframe, corresponding with larger venture-backed and SPAC companies who will either be running low on cash and challenged to raise the next round, or well capitalized and looking to fortify their positions. With the proliferation of small, highly specialized companies, especially around universities, I anticipate many small companies will look for buyers.

Stuart: Do you see this consolidation unfolding in a particular sequence across the quantum stack in the next 2-3 years? For instance, will we initially see more M&A among hardware startups before moves by platform/software players?

Mandy: I think so. Some of the full-stack companies will become more specialized as processor companies, as they will not be able to keep pace with the innovation happening in smaller specialized teams.

We’ll likely see 5 different flavors of consolidation activity:

1) vertically, players will bring in elements of the full stack;

2) horizontally, across different types of processors that might share some top-level infrastructure for multiprocessor heterogeneous workloads;

3) the “component” companies consolidating within existing scientific instrumentation vendors;

4) IP buy-outs, including the rise of more malicious tactics like challenging IP in court to bleed away small company resources; and

5) anti-competitive buy-outs, perhaps for IP or teams, but also to mitigate competitive threats.

Thoughtful orchestration of a consolidation process will best keep today’s growing momentum and lessen malicious and anti-competitive activity.

Stuart: From your perspective, having grown with the industry, what do you think is stopping these more productive and constructive forms of consolidation activity at the moment? Is the problem driven by the companies or by the early form of the technology itself?

Mandy: It seems there is an assumption – perhaps a willingness in the industry – to believe that consolidation is hindered by the prematurity of the tech. But I think it has more to do with something missing in our quantum industry: customer focus.

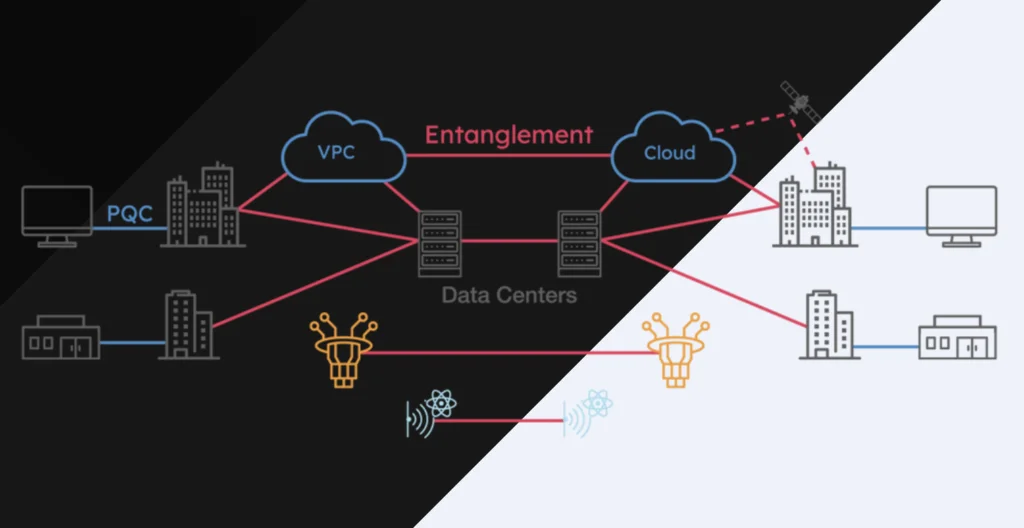

I am most interested in horizontal consolidation across different processors. From a tech perspective, we are seeing that different qubit types, especially near-term, show promise for different types of workflows. However, this needs to be better communicated with customers.

While on-premises demand is growing, customers want a variety of technologies and not just at the software level. That’s because they know important innovations remain at the hardware level and they want to help drive and benefit first from this progress.

While AWS and Azure are great for beginners developing quantum awareness and they have opened broad, affordable access to a diverse user base, they have limits when it comes to performance optimization. We need on-prem offerings that incorporate multiple technologies at an affordable price. Right now, system prices are inflated, often in the upper 8-figure price range. This is because few vendors are making this offering, and the ones who do struggle with delivery beyond an in-lab prototype. So, they mark up for risk, and because they can. Customers should be getting a handful of qubit modalities for the prices they’re paying, not a single technology, single-vendor system. When we start offering multiple modalities at the same site, we’re going to start seeing efficiencies and more opportunity at the upper levels of the stack.

Stuart: There are certain pockets of the industry that champion only one kind of qubit modality or another – you must find this very frustrating!

Mandy: I do. I wish people would stop talking about which technologies will win. There will be plenty of space for many different qubit types for a long time. This also makes it difficult to measure performance today.

The aircraft industry is a good analogy. Fighter jets are designed to be fast and highly maneuverable, at the cost of stability. That sounds a lot like superconducting qubits. But a fighter jet is the wrong product if you need to safely transport lots of people regularly. You need a larger capacity and more stability. Maybe this is like a chemistry simulation, where a cold atom platform might be a better solution. But until we start running detailed workloads across different processors within the same workflow, and tuning that orchestration, we won’t know what will perform best. And it would be unhelpful to measure all aircraft against a single standard, as we do with supercomputers today.

Stuart: A lot of the companies and technologies that are in play at the moment are being called quantum computing – by the companies themselves or by ministers and media who, in this relatively early phase of development, understand every quantum technology to be quantum computing. Many of these are actually subsets of verticals within what we define to be a quantum computer. There are companies building full-stack quantum computers to show off their low latency, high-speed backbone or to show off the strength of their control architecture. Those technological innovations aren’t necessarily based on quantum principles, but have extensive applications and implications for quantum technologies.

For me, one of the biggest questions over the next few years is how we bring all of these elegant evolutions together. Do you think the engineering challenge has been adequately assessed?

Mandy: The journey to fault-tolerant quantum computing is filled with many science and engineering challenges. I think we’re overlooking systems engineering challenges and underestimating their difficulty. It will take large overheads to create single logical qubits. There is a major producibility, scalability and manufacturing hurdle. Many companies will struggle through that mindset shift, even if they make a great prototype logical qubit. I’d like to see more manufacturability design in parallel with qubit development.

As the industry becomes increasingly fragmented with specialized innovations, open architecture platforms will be essential to bring the best capabilities together. While “open source” is unlikely and maybe unnecessary on the hardware side, open design is an important capability to keep integrating the flourishing innovation. These solutions don’t have to be universal in the early days. We’ll start with systems integrators calling for specific pieces of stack with interface specifications.

One solution we need is shared infrastructure for deep-stack innovation: something that makes high-cost specialized equipment available to a broad community of users. If you’re manufacturing flex lines, you shouldn’t have to build a quantum computer to fully test and characterize your products. And you might like to advertise that your product was used in a real workflow with strong results. If you want to innovate on a new qubit modality, you shouldn’t need enough capital to build out a full-stack system.

The difficulty here is that even experts seem to underestimate the engineering challenges of building a performant system. While the shared infrastructure need not be the largest, most capable systems required for algorithm and applications work, you do need quality, cutting-edge components. And there’s nothing plug-and-play here. To date, full-stack innovation has been important to our industry. It’s not uncommon to discover something at the algorithm level that requires a change in the silicon to implement. And you need to run many design iteration cycles. That’s hard to do if not on-prem. So this shared infrastructure could really help the industry blossom in a capital-efficient way, with the side benefit of drawing in a more diverse group of innovators.

Every national lab and every university serious about quantum computing work should plan shared infrastructure facilities.

Stuart: I completely agree. To facilitate this commercialization, we need the proper production infrastructure. The work companies like Infleqtion are doing to build an ecosystem of commercial quantum products is paramount to achieving this.

And talking of Infleqtion, at Quantum Exponential we invest across multiple quantum technologies from computing to sensing to communications. I increasingly feel that both computing and other quantum technologies such as sensing are afflicted by a marketing problem. And it’s hurting both, because there is a mistaken preconception of what quantum computing is while quantum sensing is still trying to explain what it does.

From your perspective, is there a communications challenge currently being faced by the quantum industry?

Mandy: Market misunderstandings either way are bad for our industry. If people believe the only relevant technology is fault-tolerant quantum computers that are capable of cracking encryption, that’s a problem. If people believe in the marketing hype from aggressive quantum computing companies, that harms the whole industry with false expectations. And if people don’t understand what quantum sensors do, we have to work harder at simplifying narratives that resonate with customers.

One puzzle piece we’re missing is how sensors and computers will work together so that processing can remain in the quantum domain.

Overall, we need good technical communicators with character to help non-experts make good decisions around the technology – and that’s customers, government policy/funding, people making career choices, and the list goes on.
