Insider Brief

- The performance of quantum computers is often difficult to quantify.

- A team of researchers says it has succeeded in deriving concrete numbers from random data sequences to help benchmark the performance of a quantum computer system.

- The team included experts from Helmholtz-Zentrum Berlin, Freie Universität Berlin, Qusoft Research Centre Amsterdam, the University of Copenhagen and the Technology Innovation Institute Abu Dhabi.

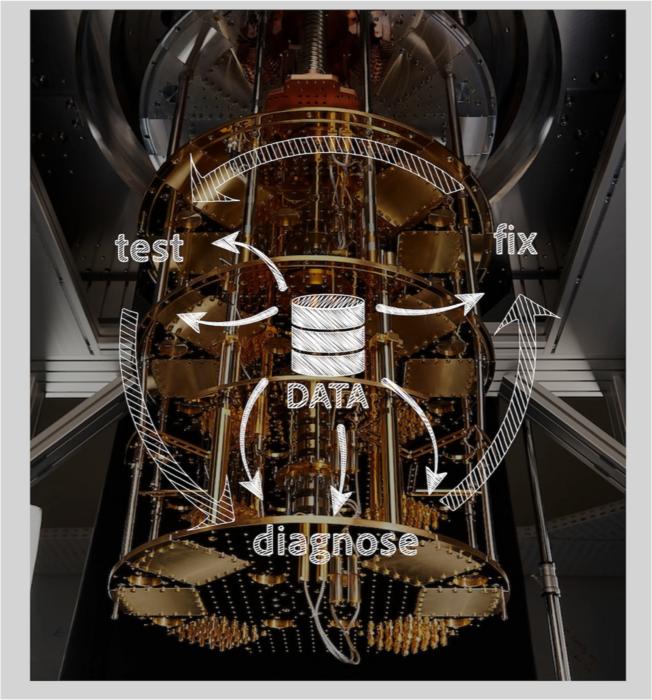

- Image: Quantum computers (here an experiment at the Technology Innovation Institute in Abu Dhabi) work at very low temperatures to minimize noise and unwanted disturbances. With a newly developed mathematical tool, it is now possible to evaluate the performance of a quantum computer using random test data and to diagnose possible bugs. Credit: I. Roth/Quantum research center, TII

PRESS RELEASE — With increasing size and complexity, quantum computers become a sort of black box.

Using methods from mathematical physics, a team has now succeeded in deriving concrete numbers from random data sequences that can serve as a benchmark for the performance of a quantum computer system. Experts from Helmholtz-Zentrum Berlin, Freie Universität Berlin, Qusoft Research Centre Amsterdam, the University of Copenhagen and the Technology Innovation Institute Abu Dhabi were involved in the work, which has now been published in Nature Communications.

Quantum computers can be used to simulate quantum systems much more efficiently and to solve problems in materials research, for example. However, the larger and more complex quantum computers become, the less transparent the processes that lead to the result become. Suitable tools are therefore needed to characterise such quantum operations and to fairly compare the capabilities of quantum computers with classical computing power for the same tasks. Such a tool, with surprising capabilities, has now been developed by a team led by Prof. Jens Eisert and Ingo Roth.

Roth, who is currently setting up a group at the Technology Innovation Institute in Abu Dhabi, explains: “From the results of random test sequences, we can now extract different numbers that show how close the operations are on statistical average to the desired operations. This allows us to learn much more from the same data than before. And what is crucial: the amount of data needed does not grow linearly but only logarithmically.” In other words, learning a hundred times as much requires only about twice as much data, an enormous improvement. The team was able to prove this by using methods from mathematical physics.
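
The "hundred times as much, twice the data" figure can be checked with a short calculation. The sketch below is only an illustration, not the team's method: it assumes the data requirement is exactly proportional to log(n), where n is the number of quantities extracted, and it picks a hypothetical starting point of n = 100. The function name `relative_data_cost` is made up for this example, and the press release gives no constants or starting values.

```python
import math


def relative_data_cost(n_before: int, n_after: int) -> float:
    """Factor by which the data requirement grows when the number of
    extracted quantities rises from n_before to n_after.

    Assumption (not from the article): cost is exactly proportional to log(n).
    """
    if n_before <= 1 or n_after <= 1:
        raise ValueError("both counts must exceed 1 so that log(n) is positive")
    return math.log(n_after) / math.log(n_before)


# Hypothetical starting point: 100 quantities, then a hundred times as many.
n_before, n_after = 100, 100 * 100

log_factor = relative_data_cost(n_before, n_after)  # logarithmic scaling
linear_factor = n_after / n_before                  # linear scaling, for comparison

print(f"logarithmic scaling: about {log_factor:.2f}x the data")
print(f"linear scaling:      {linear_factor:.0f}x the data")
```

Under these assumptions the logarithmic factor comes out at about two, against a factor of one hundred for linear scaling. The exact ratio depends on the starting point: with logarithmic scaling, a hundred-fold increase always adds the same fixed amount of data rather than multiplying it.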

“This is about benchmarking quantum computers,” says Eisert, who heads a joint research group on theoretical physics at Helmholtz-Zentrum Berlin and Freie Universität Berlin. “We have shown how randomised data can be used to calibrate such systems. This work is important for the development of quantum computers.”
