Fault Tolerance

According to a QuTech blog post published this year, quantum fault tolerance

“means that errors on a single or a few operations — for example any one of the physical qubits inadvertently flipping its state — should not corrupt the information encoded in the logical qubits. Such fault tolerance enables reliable computation with noisy components and is the key to realizing quantum computers.”

‘Fault tolerance’ is the key phrase here, the holy grail of achievements. Whether we get there is not for us to say, but that doesn’t mean we shouldn’t let the experts continue the debate.

“Where do we stand in achieving full tolerance?” is a video segment from a longer interview episode called “Fault Tolerance and Error Correction”, hosted by Joe Fitzsimons and Si-Hui Tan, CEO and CSO of Horizon Quantum Computing, respectively, with quantum computing luminaries John Preskill and Dave Bacon.

Recorded in February and available on podcast platforms since July, the interview was made available this week on Horizon Quantum Computing’s YouTube channel. The discussion is informative and sheds light on the topic of fault tolerance in quantum computing.

Preskill is an American theoretical physicist and the Richard P. Feynman Professor of Theoretical Physics at the California Institute of Technology, where he is also the Director of the Institute for Quantum Information and Matter. A leading scientist in the field of quantum information science and quantum computation, he is known for coining the terms “quantum supremacy” and “noisy intermediate-scale quantum (NISQ)” devices.

Dave Bacon, meanwhile, is a Senior Staff Software Engineer at Google and a specialist in software engineering, quantum computing, quantum software, innovation research, and technical management. He has a Ph.D. in Physics from the University of California, Berkeley.

Brute Force

“There’s what I call the ‘brute force effect’,” said Bacon in response to host Joe Fitzsimons’ question on where we are heading in regard to error mitigation of quantum systems, “which is like our hardware is right on the cusp of being able to do these computations, let’s see how much we can get out of that. For most systems that are laid out on a 2D spatial grid leads you to the surface code… What could happen to change that? Well, one thing that could happen to change that is thinking about connectivity and the architecture.”

Bacon gave some examples of this, focussing on trapped ions. He mentioned that the qubits within the trap have connectivity and raised the question of whether that can be leveraged to perform error correction using different codes, noting that demonstrations of this have already been done.

“And what are the main barriers ahead of us?” Fitzsimons then asked.

“It’s still extremely hard to get these experiments to work,” said Bacon. “We are just learning how to do this. There are some things that we’re starting to see that are interesting. There’s a word in quantum that I don’t like to say because I don’t know what it means, which is ‘scalable’.”

Admitting it’s hard to describe because it has a lot of components, Bacon said that for many years a lot of quantum computing was focused inwardly on improving parts of a system: getting better decoherence rates, controlling single qubits and getting measurements to work.

That focus on individual components, however, has given way to bringing all of these things together, according to Bacon, and when everything is mashed together and has to work at the same time, nothing survives.

“I think that’s one of the key challenges we’re seeing, especially for these brute force-type approaches, is to get everything to work at the same time,” he added.

Hallmark of Quantum Error Correction

It was Preskill’s turn to speak:

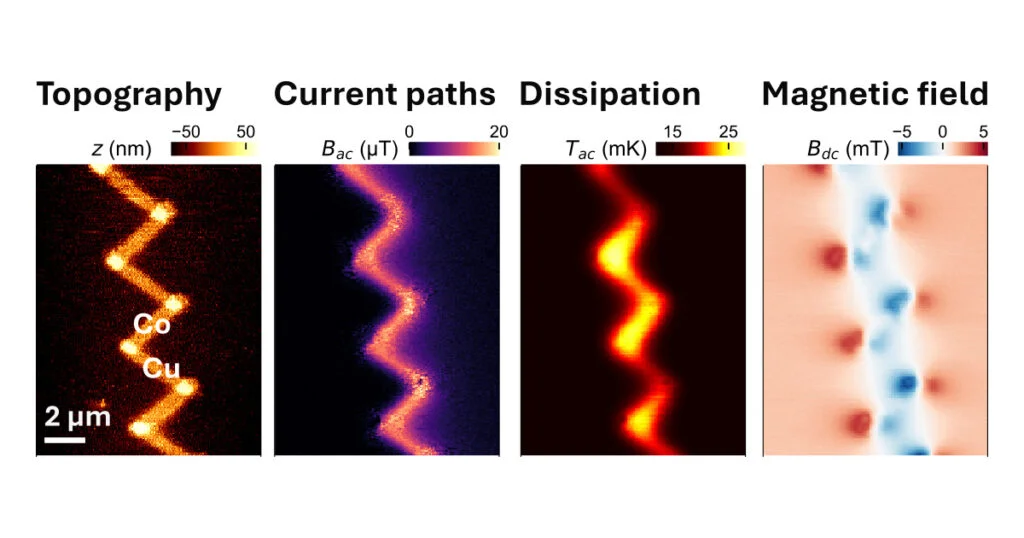

“We would like to see, as you increase the size of the quantum error correcting code block, say in the surface code, the error rates decline exponentially with the size of that code block — that’s the hallmark of quantum error correction.”

Preskill also stated he would like to see that the gates can be protected with the same exponential improvement in fidelity as things are scaled.

“We haven’t seen that yet,” said Preskill. “Why haven’t we? Well, the short answer is, the gates aren’t good enough, and the error rates are too high.”

Preskill pointed to a very interesting experiment by the group at Google, in which they saw an exponential improvement in error rate as they increased the size of the code block.
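To give a feel for what "error rates decline exponentially with the size of the code block" means, and why high physical error rates get in the way, here is a minimal sketch. It is a deliberately simplified classical toy model, not the surface code and not any experiment discussed in the interview: it assumes `n` independent copies of a bit, each flipped with probability `p`, decoded by majority vote. The function name `logical_error_rate` and the sample values of `p` are illustrative assumptions.

```python
from math import comb


def logical_error_rate(p: float, n: int) -> float:
    """Probability that a majority vote over n independent copies is wrong,
    when each copy is flipped with probability p. n must be odd."""
    if n % 2 == 0:
        raise ValueError("n must be odd so the majority vote has no ties")
    # The vote fails when more than half of the copies are flipped.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range((n + 1) // 2, n + 1)
    )


if __name__ == "__main__":
    # Compare how quickly the failure probability falls as the block grows,
    # for a low, a moderate and a high per-copy error rate.
    for p in (0.01, 0.1, 0.4):
        rates = [logical_error_rate(p, n) for n in (3, 5, 7, 9)]
        print(f"p={p}: " + ", ".join(f"{r:.2e}" for r in rates))
```

In this toy model the failure probability shrinks rapidly with block size when `p` is small, shrinks only slowly when `p` is large, and stops improving at all at `p = 0.5`. Real quantum codes have to cope with more error types and with faulty gates and measurements, so the toy model only illustrates the qualitative point: the lower the physical error rate, the more each increase in code size buys.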

“But the catch was,” said Preskill, “it wasn’t a full-blown quantum error correcting code, they can only correct one type of error, that the dephasing error is not the bit flips in that configuration.”

He continued by saying it was a very interesting experiment because they were able to do up to fifty repeated rounds of quantum error correction, declaring he’d like to see a time when there are many successive rounds.
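The catch Preskill describes, a code that corrects only one type of error, can be illustrated with the textbook three-qubit repetition code. The sketch below is an assumption-laden illustration rather than a model of the Google experiment: it arbitrarily picks the bit-flip variant of the code (parity checks Z1Z2 and Z2Z3) and tracks errors as lists of 0s and 1s per qubit. The names `Z_CHECKS`, `syndrome` and `undetected_logical_phase_flip` are hypothetical.

```python
# Parity checks of the 3-qubit bit-flip repetition code: Z1Z2 and Z2Z3,
# stored as the pair of qubit indices each check acts on.
Z_CHECKS = [(0, 1), (1, 2)]


def syndrome(x_errors, z_errors):
    """Return the outcome of each parity check (1 means the check fired).

    A Z-type check fires when exactly one of its two qubits has a bit flip.
    z_errors is accepted but never used: phase flips commute with Z-type
    checks, so this code cannot see them.
    """
    return tuple((x_errors[a] + x_errors[b]) % 2 for a, b in Z_CHECKS)


def undetected_logical_phase_flip(x_errors, z_errors):
    """True if no check fires and yet the encoded state's phase is flipped.

    In this code an odd number of phase flips acts as a logical phase flip.
    """
    return syndrome(x_errors, z_errors) == (0, 0) and sum(z_errors) % 2 == 1


if __name__ == "__main__":
    no_error = [0, 0, 0]
    middle = [0, 1, 0]
    # A bit flip on the middle qubit fires both checks, so it can be located.
    print("bit flip syndrome:  ", syndrome(middle, no_error))
    # A phase flip on the same qubit fires nothing and corrupts the data.
    print("phase flip syndrome:", syndrome(no_error, middle))
    print("silent corruption:  ", undetected_logical_phase_flip(no_error, middle))
```

Swapping the roles of bit flips and phase flips gives the mirror-image code with the mirror-image blind spot. A full quantum error correcting code such as the surface code uses both kinds of check so that neither error type goes unnoticed.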

The Horizon Quantum Computing interview, which also talked about what makes quantum computers so vulnerable to error, how thresholds are calculated and where the industry stands in achieving full tolerance, is an excellent source for those wanting to know more.

The Quantum Insider (TQI) highly recommends it.
