Most of us have heard of AI and quantum computing. But have we heard of quantum machine learning?

Quantum machine learning is the intersection of quantum computing and AI, and it could change what the future looks like.

Individually, they’re amazing. But together, they’re unstoppable.

Quantum machine learning is a field that aims to write quantum algorithms to perform machine learning tasks.

In this article, I’m going to break down those intimidating words. Specifically, I’m going to be talking about quantum support vector machines (QSVMs), but there are many more QML algorithms to learn about.

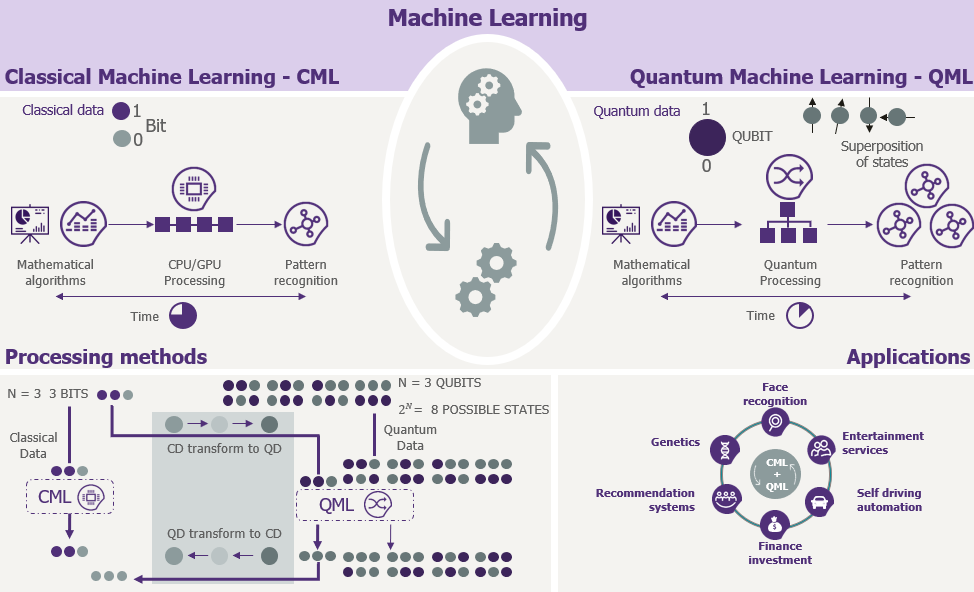

Classical Machine Learning

Machine learning can be broken down into three core groups: supervised learning (learning from labeled training data to predict outputs for new inputs), unsupervised learning (finding structure in unlabeled data), and reinforcement learning (learning from interaction with an environment, through rewards and mistakes).

Support vector machines (SVMs) fall into the category of supervised learning and we’re going to be focusing on that.

Supervised learning algorithms learn from examples. In supervised learning, you have input variables (X) and an output variable (Y). The purpose of the algorithm is to learn how the function maps from the input to the output.

Y = f(X)

The algorithm’s goal is to approximate the mapping function well enough that when you have new input data (X), you can predict the output variables (Y) for that data.

For example, a supervised learning algorithm can use a labeled training dataset to learn the difference between two types of objects (cats and dogs). The algorithm analyzes the different features (hair, color, eyes, ears, etc.) to learn what each object looks like. Then, an unknown data point is introduced (a white dog). Using its past training, the algorithm predicts which category the data point falls into.

It is called supervised learning because it’s similar to a teacher giving information to a student. The teacher gives out the answer at first for the student (or in this case the algorithm) to learn. But, eventually, the student understands the concepts enough to solve problems on their own.
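
To make Y = f(X) concrete, here is a minimal sketch in plain Python. It is not an SVM; it is a much simpler nearest-centroid classifier, and the weights, heights, and function names are made up for illustration. It learns from labeled cats and dogs, then predicts the label of an animal it has never seen.

```python
from math import dist

# Hypothetical labeled training data: (weight in kg, height in cm) -> label.
training_data = [
    ((4.0, 25.0), "cat"),
    ((5.0, 23.0), "cat"),
    ((3.5, 24.0), "cat"),
    ((20.0, 55.0), "dog"),
    ((30.0, 60.0), "dog"),
    ((25.0, 58.0), "dog"),
]

def fit(examples):
    """Learn one centroid (mean feature vector) per label."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc)
            for label, acc in sums.items()}

def predict(centroids, features):
    """Approximate Y = f(X): return the label of the closest centroid."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = fit(training_data)   # the "teacher gives the answers" phase
new_animal = (22.0, 50.0)        # an unseen data point
prediction = predict(centroids, new_animal)
print(prediction)
```

The `fit` step is the teacher handing over the answers, and the `predict` step is the student solving a new problem alone.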

Support Vector Machines

SVMs are among the most powerful supervised learning algorithms. In this case, we use them for classification but they can also be used for regression.

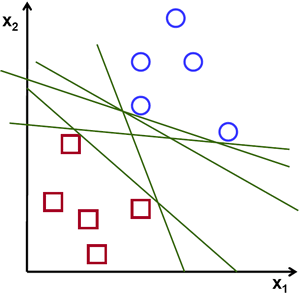

Their strength comes from their ability to classify objects in N-dimensional space, where N is the number of features. Their objective is to find a hyperplane in that N-dimensional space that distinctly separates the data points of each class.

To understand, it helps to think of an analogy. Picture drawing a cat and putting a dog sticker right on top of the cat drawing. It’s 2D (N = 2); regardless of how many lines you draw, there’s absolutely no way to separate the cat and dog. The two dimensions (e.g. weight and height) aren’t enough to classify the object.

This is the problem that many classification algorithms deal with.

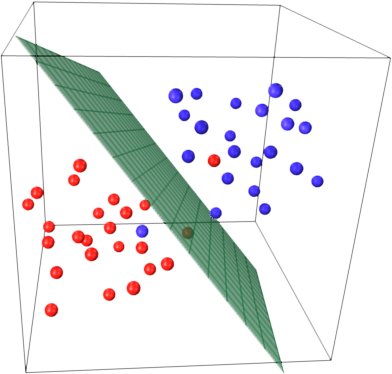

Now, let’s make it 3D (N = 3) by adding another dimension: depth. We realize that the two objects only overlap from our point of view; they actually sit at different depths. The third dimension (e.g. bark) allows the computer to understand the difference. If we project the cat and dog into a higher-dimensional space, we can slide a thin sheet (a “plane”) between the two to separate them.

Although this is a very simplified picture, it is what SVMs do: they analyze data in N-dimensional space.
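
Here is a small sketch of that idea, using made-up numbers. The data starts in one dimension, where no single cut separates the two classes. Adding a second feature (x squared, chosen purely for illustration) lifts the points into 2D, where a straight line does the job.

```python
# Hypothetical 1D data: the "inner" class sits between the "outer" points,
# so no single cut on the x-axis separates the two classes.
inner = [-1.0, 0.5, 1.0]
outer = [-3.0, 3.0, 4.0]

def separable_by_threshold(a, b):
    """True if one threshold puts all of a on one side and all of b on the other."""
    return max(a) < min(b) or max(b) < min(a)

def lift(x):
    """Map a 1D point into 2D by adding x**2 as a second feature."""
    return (x, x * x)

separable_1d = separable_by_threshold(inner, outer)

# In the lifted space, look at the new coordinate (x**2) only:
# a horizontal line in 2D is a threshold on that coordinate.
inner_lifted = [lift(x)[1] for x in inner]
outer_lifted = [lift(x)[1] for x in outer]
separable_2d = separable_by_threshold(inner_lifted, outer_lifted)

print(separable_1d, separable_2d)
```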

The computer takes the images of a cat or dog and describes each one by features such as height, weight, bark, and other characteristics. Then, it uses the kernel trick to work with the non-linear dataset as if it had been projected into a higher-dimensional space, without computing every coordinate of that projection. The projection itself is called the “feature map”, and it is what lets us separate the classes and understand how input (X) becomes output (Y).

But what’s the holdup with classical machine learning and SVMs? Why do we even need to add quantum computers?
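
A quick way to see the kernel trick at work is the degree-2 polynomial kernel, a standard textbook example that I’m using here only for illustration. The sketch below computes a similarity score two ways: once by explicitly projecting two 2D points into 3D and taking the inner product there, and once with a kernel function that never leaves 2D. The input points are arbitrary.

```python
from math import sqrt, isclose

def feature_map(v):
    """Explicit map from 2D to 3D: (x1, x2) -> (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = v
    return (x1 * x1, sqrt(2) * x1 * x2, x2 * x2)

def dot(a, b):
    """Ordinary inner product of two equal-length vectors."""
    return sum(p * q for p, q in zip(a, b))

def poly_kernel(a, b):
    """Degree-2 polynomial kernel, computed in the original 2D space."""
    return dot(a, b) ** 2

x, z = (1.0, 2.0), (3.0, -1.0)
explicit = dot(feature_map(x), feature_map(z))  # project first, then compare
implicit = poly_kernel(x, z)                    # compare without projecting

print(isclose(explicit, implicit))
```

Both routes give the same number, but the kernel route skips building the higher-dimensional vectors. That shortcut matters more and more as the number of dimensions grows.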

Quantum Machine Learning

When data points are projected into higher and higher dimensions, the computations become hard for classical computers to handle. Even when a classical computer can handle them, it may take too much time.

Simply put, sometimes, classical machine learning algorithms are too taxing for classical computers.

Luckily, quantum computers may be able to handle some of these taxing algorithms. They use quantum phenomena like superposition and entanglement, which can let them solve certain problems faster than their classical counterparts.

In fact, a study by IBM and MIT found that SVMs are, mathematically, very similar to what goes on inside a quantum computer.

Quantum machine learning allows scientists to take the classical ML algorithm and translate it into a quantum circuit so it can be run efficiently on a quantum computer.
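
To give a feel for what a “quantum kernel” is, here is a toy sketch simulated in plain Python. It assumes a very simple feature map of my choosing: each feature becomes the rotation angle of one qubit (angle encoding), with no entanglement. That is not the feature map from the IBM study, and a map this simple is easy to compute classically, so treat it as an illustration only. The kernel value is the overlap (fidelity) between the two encoded quantum states.

```python
from math import cos, sin, pi, isclose

def qubit_state(angle):
    """Amplitudes of RY(angle)|0> = cos(angle/2)|0> + sin(angle/2)|1>."""
    return [cos(angle / 2), sin(angle / 2)]

def encode(features):
    """Tensor product of one qubit per feature (2**n real amplitudes)."""
    state = [1.0]
    for angle in features:
        q = qubit_state(angle)
        state = [a * b for a in state for b in q]
    return state

def quantum_kernel(x, z):
    """Fidelity |<phi(x)|phi(z)>|^2 between the two encoded states.

    The amplitudes here are real, so no complex conjugate is needed.
    """
    overlap = sum(a * b for a, b in zip(encode(x), encode(z)))
    return overlap ** 2

x = (0.3, 1.2)   # hypothetical two-feature data points
z = (1.0, 0.4)
print(quantum_kernel(x, x))   # identical points overlap completely
print(quantum_kernel(x, z))   # different points overlap less
```

On real hardware the overlap would be estimated by running a circuit many times rather than read off a state vector, but the number plays the same role as a classical kernel value inside the SVM.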

Applications

Quantum machine learning is an extremely new field with plenty of room to grow. But we can already start to predict how it’s going to impact our future!

Here are some of the areas QML will disrupt:

- Understanding nanoparticles

- The creation of new materials through molecular and atomic maps

- Molecular modeling to discover new drugs and medical research

- Understanding the deeper makeup of the human body

- Enhanced pattern recognition and classification

- Furthering space exploration

- Creating complete connected security through merging with IoT and blockchain

With more amazing developments happening every day, QML will solve more problems than we could’ve ever imagined.

Personal Project: Utilizing SVMs for Classification of Parkinson’s Disease

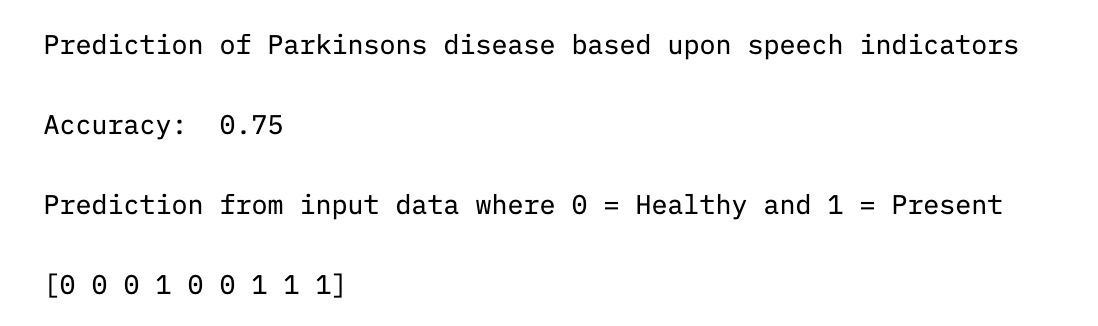

I focused on building a QML algorithm to identify whether a patient has Parkinson’s disease based on their speech features. I conducted a 9-qubit simulation on IBM’s quantum simulators utilizing a quantum support vector machine.

How to build the algorithm:

The first step is to set up the circuit:

- Import the necessary packages

- Load your IBM account credentials and connect to the optimal quantum simulator

- Set up the number of shots (or attempts) your algorithm will take
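
If “shots” are new to you, this plain-Python sketch may help. It does not touch IBM’s simulators; it just imitates measuring a single qubit that reads 1 with some probability (0.3 here, an arbitrary value). Each shot is one measurement, and the estimate is the fraction of shots that came back 1. The function name and seed are my own choices.

```python
import random

def estimate_probability(p_one, shots, seed):
    """Simulate `shots` measurements of a qubit that reads 1 with probability p_one."""
    rng = random.Random(seed)  # seeded generator so the run is reproducible
    ones = sum(1 for _ in range(shots) if rng.random() < p_one)
    return ones / shots

true_p = 0.3
few = estimate_probability(true_p, shots=10, seed=42)
many = estimate_probability(true_p, shots=10_000, seed=42)

print(few, many)
```

More shots generally means less statistical noise in the estimate, at the cost of a longer run. Fixing the seed makes a simulated run repeatable.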

The next step is to prepare the dataset:

- Import the dataset. The Parkinson’s dataset I used can be found here.

- Split the data into the two classes (present and not-present)

- Divide the data into training and testing datasets (a 7:3 training to testing ratio is a common choice)
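
The dataset steps above can be sketched roughly as follows. The rows are hypothetical stand-ins, not the Parkinson’s dataset, and the helper names are mine. The sketch groups rows by class, then shuffles each class and cuts it 7:3 so both classes show up in training and testing.

```python
import random

def split_by_class(rows):
    """Group (features, label) rows into a dict keyed by label."""
    by_class = {}
    for features, label in rows:
        by_class.setdefault(label, []).append(features)
    return by_class

def train_test_split(samples, train_fraction=0.7, seed=0):
    """Shuffle a copy of the samples and cut it at train_fraction."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

# Hypothetical rows: two made-up speech features and a label
# (1 = present, 0 = not present).
rows = [((0.1 * i, 1.0 - 0.05 * i), i % 2) for i in range(20)]

by_class = split_by_class(rows)
training, testing = {}, {}
for label, samples in by_class.items():
    training[label], testing[label] = train_test_split(samples)

for label in sorted(by_class):
    print(label, len(training[label]), len(testing[label]))
```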

Then, we need to build the QML algorithm:

- Set up the number of qubits the circuit will have (the number of qubits should be equivalent to the number of features in your dataset)

- Initialize the feature map to build the SVM

- Set the necessary parameters, including the device it’ll run on, the number of shots, and the seed for the pseudo-random number generator

- Import unlabelled data for classification

The last step is to run the algorithm. The run method trains the model and reports its accuracy on the testing data. Meanwhile, the predict method classifies the unlabeled data.

The results predict whether or not each of 9 patients has Parkinson’s disease, with an accuracy of 0.75. With a larger dataset and more stable hardware, the accuracy should improve.
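
To show the train, test, predict flow end to end without a quantum SDK, here is a toy stand-in in plain Python. It is not the code from my project and not a real QSVM. It swaps in a kernel perceptron (a simpler cousin of the SVM) and a classically simulated angle-encoding kernel with one qubit per feature. All data points and names are hypothetical.

```python
from math import cos

def quantum_kernel(x, z):
    """Fidelity of two angle-encoded product states: prod of cos^2((x_i - z_i) / 2)."""
    value = 1.0
    for a, b in zip(x, z):
        value *= cos((a - b) / 2) ** 2
    return value

def score(alphas, train, point):
    """Weighted sum of kernel similarities between `point` and the training points."""
    return sum(a * y * quantum_kernel(x, point) for a, (x, y) in zip(alphas, train))

def fit(train, epochs=10):
    """Kernel perceptron: bump a point's weight whenever it is misclassified."""
    alphas = [0] * len(train)
    for _ in range(epochs):
        for i, (x_i, y_i) in enumerate(train):
            if y_i * score(alphas, train, x_i) <= 0:
                alphas[i] += 1
    return alphas

def predict(alphas, train, point):
    """Return +1 or -1 depending on the sign of the score."""
    return 1 if score(alphas, train, point) > 0 else -1

# Hypothetical data: two features per sample, labels +1 (present) / -1 (not present).
train = [((0.2, 0.3), 1), ((0.4, 0.1), 1), ((2.8, 2.6), -1), ((2.5, 2.9), -1)]
test = [((0.3, 0.2), 1), ((2.6, 2.7), -1)]
unlabeled = [(0.1, 0.4), (2.9, 2.4)]

alphas = fit(train)                                   # training
correct = sum(predict(alphas, train, x) == y for x, y in test)
accuracy = correct / len(test)                        # testing
predictions = [predict(alphas, train, x) for x in unlabeled]  # prediction

print(accuracy, predictions)
```

The structure mirrors the steps above: build a kernel from the feature map, train on labeled data, measure accuracy on held-out data, then classify the unlabeled points.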

Implications

With diseases like Parkinson’s, the earlier the detection, the better the treatment. QML algorithms like this one will be able to make huge strides in treatment and disease prevention.

In the coming years, QML is likely to become a massive field with ever more computational power behind it. It could help solve some of the world’s most complex problems. The AI revolution of today could be even bigger when combined with quantum computing.

I’m super excited to see the field grow and become integrated into our lives. Lucky for us, many QML experiments are already possible with our current quantum technology. The future is here and we’re ready for it!

GitHub repository for the QML algorithm: https://github.com/anisham25/parkinsons-QSVM

Personal Website: www.anishamusti.com