Introduction

There’s a lot of disagreement in the investment world around Quantum Computing (QC), a mix of gushing hype and irritable scepticism. Whichever side of the fence you sit on, it is increasingly clear that QC is being taken seriously, with governments investing billions of dollars in furthering the technology. As outlined in a previous TQD article, Venture Capital (VC) investment in QC is increasing, but it pales in comparison to other “deep tech” areas. Roughly $9bn was invested in AI in 2018, versus less than $200m in QC. Given the recent news around Quantum Supremacy, you could be forgiven for being surprised by these figures.

Whilst there are firms out there investing in QC (with Rigetti’s $71m down round being a notable recent headline), it’s fairly uncommon to see the big hitters getting involved in the space. So at TQD we wanted to explore why there isn’t more interest from VC investors…

What is Venture Capital?

There are plenty of good explanations out there (e.g. this, this) but the following provides a summary. Venture Capital is the provision of funding to support the growth of new companies. The practice dates back to the merchant banks of the 19th century (and some would argue to the expeditions of the Limited Companies of the 17th century). The modern form of VC involves a firm raising a pool of money, known as a fund, from Limited Partners (LPs). Individual managers, or General Partners (GPs), make investment decisions on behalf of LPs (with the broad parameters being agreed in advance), for which they get a management fee (typically ~2%) and a share of any profits on their investments (typically ~20%). VCs are now a key part of the start-up ecosystem because they provide founders with early-stage funding to support new developments and scale-ups. Their place in the modern lexicon is intrinsically linked with their involvement in Silicon Valley from the 1970s onwards (for example Apple) and, more recently, with some of the big household names such as Uber, Facebook and Instagram.

A typical VC will invest in 10-20 companies in a fund. For the top performing VCs, a typical fund will include 2 or more companies that each return more than the whole fund on their own, meaning that the inevitable losses on most of the other companies are more than made up for. There are plenty of discussions around how (and indeed if) this model works, but let it suffice to say that it is a game of picking big winners.
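To make the fee and return arithmetic above concrete, here is a minimal sketch in Python. Only the ~2% management fee and ~20% profit share come from the text. The fund size, the ten-year fee period, the equal split across companies and the return multiples are all hypothetical, and the model ignores real-world details such as hurdle rates and follow-on rounds.

```python
def fund_outcome(fund_size, multiples, fee_rate=0.02, carry_rate=0.20, fee_years=10):
    """Split a fund's proceeds between LPs and GPs under a simplified fee model.

    Assumptions (not from the article): the fee is charged every year on the
    full fund size, the remainder is split equally across the companies, and
    the GPs' profit share is taken on anything returned above the fund size.
    """
    fees = fund_size * fee_rate * fee_years
    investable = fund_size - fees
    per_company = investable / len(multiples)

    # Each company returns its cheque multiplied by its (hypothetical) multiple.
    company_proceeds = [per_company * m for m in multiples]
    proceeds = sum(company_proceeds)

    profit = max(proceeds - fund_size, 0.0)
    carry = profit * carry_rate

    return {
        "fees": fees,
        "proceeds": proceeds,
        "carry": carry,
        "lp_net": proceeds - carry,
        # Companies that return at least the whole fund on their own.
        "fund_returners": sum(1 for p in company_proceeds if p >= fund_size),
    }


# Hypothetical fund of 100 (say, $100m): five write-offs, a few modest
# outcomes and one 20x winner.
example = fund_outcome(100.0, [0, 0, 0, 0, 0, 0.5, 1, 1, 2, 20])
for key, value in example.items():
    print(f"{key}: {value:.1f}")
```

With these made-up numbers, nine of the ten companies together return less than the fund, and the single 20x company is what makes the fund profitable. That is the "picking big winners" dynamic in miniature.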

All of the above should tell you that VCs are willing to take big risks for big returns, by betting that, on average, one of their portfolio companies will be a huge winner.

The NISQ era and the overhead

The relative lack of VC involvement in QC is primarily driven by the nascent stage of the QC industry. As we cover here, few, if any, QC companies are making any money (leaving aside Xanadu’s T-shirts). There are a number of reasons why, but we home in on a couple of key issues below, whilst trying to avoid the jargon.

There are currently no commercial applications for the QC technology we have today.

This is primarily because we have not yet made a Quantum Computer that can solve a practical problem faster or better than our existing supercomputers. As outlined in this accessible article by John Preskill, Google’s Quantum Supremacy achievement was based on a very narrow application, chosen specifically so that a QC could outperform a classical computer.

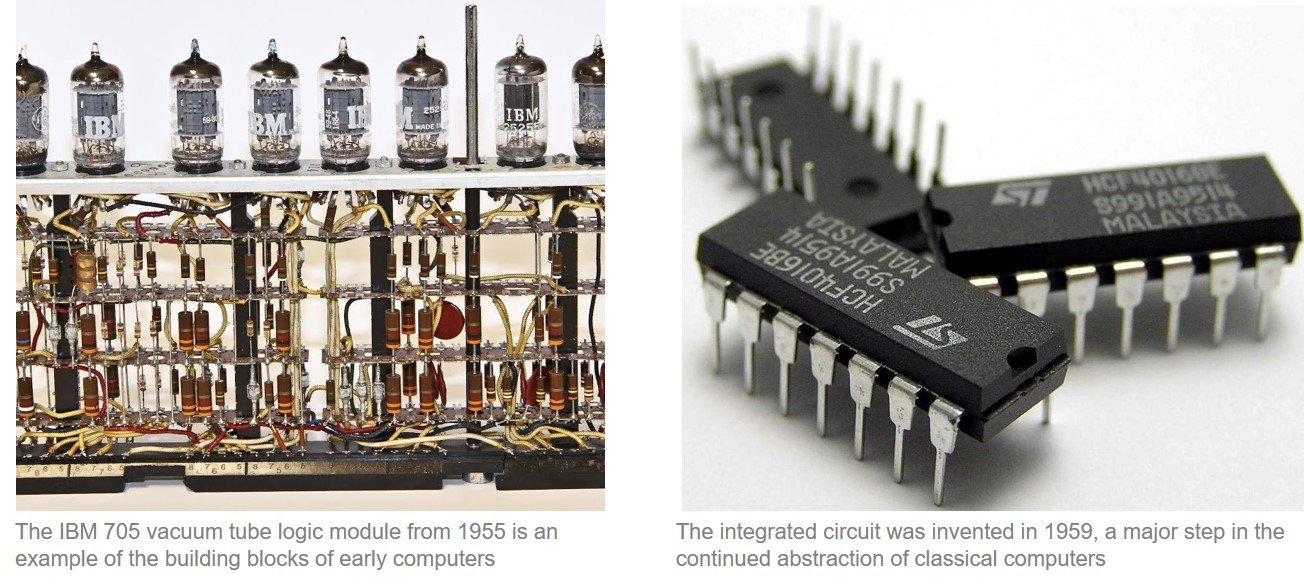

As Preskill outlines in his now relatively well-known paper, we are currently in what has been termed the Noisy Intermediate-Scale Quantum (NISQ) era of QC. Noisy is the key word here. Classical computers use bits (zeros and ones) to represent information. These bits were first represented with physical switches and relay logic in the first electro-mechanical computers. Vacuum tubes were used in the late 1940s as a way of controlling electric current to represent bits. These were unwieldy, unreliable devices that overheated and required replacing.

Abstraction has continued since the 1950s, from the development of transistors through to the integrated circuits that are used today. Check out this series for a much better explanation. All these electrical devices are susceptible to external noise, but if the signal deviates from zero or one (let’s say to “0.2”, without going into the detail), it can be normalised back to the nearest value, in this case zero, easily and formulaically. Even vacuum tubes, despite their unreliable nature, were able to do reliable computation.
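The normalisation described above can be sketched in a few lines of Python. This is a toy model of a classical signal, not a description of any real circuit or of a qubit: the 0.5 threshold, the noise level and the function names are assumptions made for illustration.

```python
import random


def restore_bit(signal, threshold=0.5):
    """Snap a noisy signal level back to a clean 0 or 1."""
    return 1 if signal >= threshold else 0


def pass_through_stages(bit, stages, noise, rng, restore=True):
    """Send a bit through a chain of noisy stages.

    Each stage nudges the level by up to +/- `noise`. With `restore=True`
    the level is snapped back to 0 or 1 after every stage; with
    `restore=False` the nudges are simply left to add up.
    """
    value = float(bit)
    for _ in range(stages):
        value += rng.uniform(-noise, noise)
        if restore:
            value = float(restore_bit(value))
    return value


rng = random.Random(0)  # fixed seed so repeated runs behave the same way
print("0.2 is read as:", restore_bit(0.2))
print("restored after 1000 stages:", pass_through_stages(0, 1000, 0.2, rng))
print("unrestored after 1000 stages:", pass_through_stages(0, 1000, 0.2, rng, restore=False))
```

As long as each stage's noise stays below the threshold, the restored bit comes out exactly as it went in, however long the chain is. Without the restoring step, the small deviations are free to accumulate.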

One of the fundamental challenges with using qubits (the basic unit of quantum information) is that they are required to represent states other than zero and one, and therefore a simple normalisation is not possible. Unless qubits can be subject to some more nuanced error correction, errors build on errors, which makes it hard to produce meaningful outputs. The likes of Google, Rigetti and IBM are taking different approaches, but part of what they are working on is solving this problem.

In the last 20 years (great overview here) we have worked out that, at least theoretically, QCs should be able to deal with noise through more advanced error correction using more “correcting” qubits. Whilst this technically means that we should be able to build fault-tolerant QCs, it imposes an “overhead” in that manufacturers will need potentially thousands of qubits to support one qubit that is not subject to noise, or one “fault tolerant” qubit.

Side note: this is why the threat of quantum code-breaking is sometimes overblown. To implement Shor’s algorithm you need about a thousand logical qubits, which means about a million physical qubits.
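The arithmetic behind that figure is a single multiplication, sketched below in Python. The thousand logical qubits and the overhead of roughly a thousand physical qubits per logical qubit are the rough figures used in this article. The other overhead values in the loop are hypothetical, included only to show how sensitive the total is to that ratio.

```python
def physical_qubits_needed(logical_qubits, physical_per_logical):
    """Total physical qubits for a given error-correction overhead."""
    return logical_qubits * physical_per_logical


SHOR_LOGICAL_QUBITS = 1_000  # "about a thousand", as quoted in the text

# 1_000 matches the article's rough overhead; 100 and 10_000 are hypothetical.
for overhead in (100, 1_000, 10_000):
    total = physical_qubits_needed(SHOR_LOGICAL_QUBITS, overhead)
    print(f"{overhead:>6} physical per logical -> {total:,} physical qubits")
```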

This is a long way of saying that the fundamental technology that underpins commercially useful Quantum Computers is still in its infancy. As Scott Aaronson wrote in the New York Times, we are “now, finally, in the early vacuum tube era of quantum computing”. Some have argued that even this is an optimistic assessment.

In addition, despite the headlines, there is not yet a consensus on precisely what QCs will be able to solve. We will cover this in a separate article but, in short, there are problems that classical computers cannot solve in a reasonable period of time and that a quantum computer may be able to solve, but the full list is still in flux.

All this said, QC offers the potential to fundamentally change and accelerate our technological progress by solving problems that were previously impossible, and potentially by creating new markets, all of which should offer opportunities for big returns for investors.

Why is QC so hard for VC?

We should preface this piece by acknowledging that none of us at TQD are Venture Capitalists. We have not raised a fund, nor have we invested money in any Quantum Computing company. We wrote this as an intellectual exercise, or curiosity, but hope the themes we discuss provoke an interesting debate in the community.

As outlined above, VCs look to make outsized returns by picking companies that can disrupt existing markets or build new ones. On average, even the best funds only pick 1 or 2 winners in a fund (often a <10% hit rate). This would seem to point to VCs being excited about the opportunity to invest in Quantum Computing.

Nonetheless, this doesn’t mean that VCs revel in taking unmitigated risk (please be quiet, sceptics). Investment decisions are partly predictions of the future, but they are made with a degree of market understanding and due diligence. Backing Facebook was based on the view that people would look to connect more online, and that these users could be advertised to, but investors won’t have had a crystal ball.

Investing in QC is made particularly hard by the following factors:

QC is nascent and there are few people who really understand it: Given where QCs are in terms of development stage, advancing the technology requires a deep grounding in maths, physics and computer science. For an investor to understand if a company is a good investment, they need to either understand the underlying technology themselves, or work with people who do (the latter also needing to be able to communicate in business terms). Contrast this with understanding an investment in a provider of SaaS solutions for the legal industry. To understand the market opportunity, an investor need not concern themselves with the various components of the technology stack that go into making a typical SaaS business (as this is now well understood). Rather, they need to focus primarily on the opportunity to sell the abstracted product to the legal market.

There is no consensus on QC technology: Whilst you can argue this about most markets, in QC it is particularly unclear which technology will be successful in the coming decades. An investor doesn’t want to risk putting millions into a company writing algorithms for a topological quantum computer if we are unable to invent one for 50 years. Timelines are unclear, and most VCs typically don’t look to make bets that pertain to changes more than 10 years in the future.

Understanding QC is fundamentally hard: Understanding any technology takes a degree of effort and knowledge. However, QC has a particularly high barrier to understanding because it is fundamentally built on quantum physics. Feynman, the renowned theoretical physicist, famously said, “I think I can safely say that nobody understands quantum mechanics.” There are plenty of incredibly well-educated investors out there, but not everyone is willing to get deep into understanding STEM subjects they haven’t looked at since their college days.