A new method could pave the way to establishing universal standards for measuring the performance of quantum computers, according to researchers at the University of Waterloo.

The new method, called cycle benchmarking, allows researchers to assess the potential for scalability and to compare one quantum platform against another.

“This finding could go a long way toward establishing standards for performance and strengthen the effort to build a large-scale, practical quantum computer,” said Joel Wallman, an assistant professor at Waterloo’s Faculty of Mathematics and Institute for Quantum Computing. “A consistent method for characterizing and correcting the errors in quantum systems provides standardization for the way a quantum processor is assessed, allowing progress in different architectures to be fairly compared.”

Cycle benchmarking provides a solution that helps quantum computing users determine the comparative value of competing hardware platforms and increase the capability of each platform to deliver robust solutions for their applications of interest.

The breakthrough comes as the quantum computing race is rapidly heating up, and the number of cloud quantum computing platforms and offerings is quickly expanding. In the past month alone, there have been significant announcements from Microsoft, IBM and Google.

This method determines the total probability of error under any given quantum computing application when the application is implemented through randomized compiling. This means that cycle benchmarking provides the first cross-platform means of measuring and comparing the capabilities of quantum processors that is customized to users’ applications of interest.

“Thanks to Google’s recent achievement of quantum supremacy, we are now at the dawn of what I call the ‘quantum discovery era’,” said Joseph Emerson, a faculty member at IQC. “This means that error-prone quantum computers will deliver solutions to interesting computational problems, but the quality of their solutions can no longer be verified by high-performance computers.

“We are excited because cycle benchmarking provides a much-needed solution for improving and validating quantum computing solutions in this new era of quantum discovery.”

Emerson and Wallman founded the IQC spin-off Quantum Benchmark Inc., which has already licensed this technology to several world-leading quantum computing providers, including Google’s Quantum AI effort.

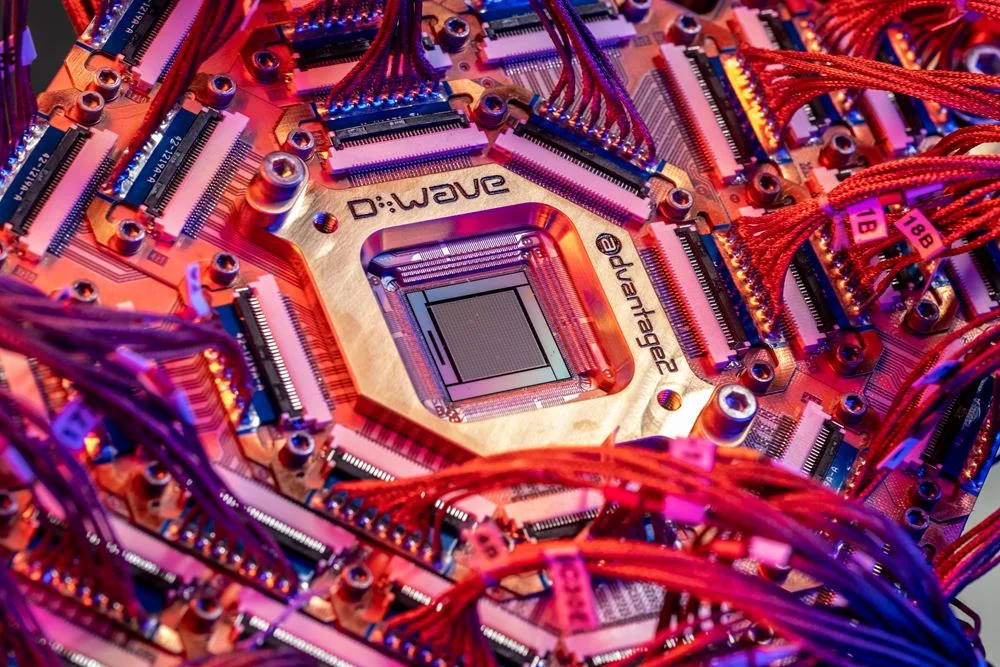

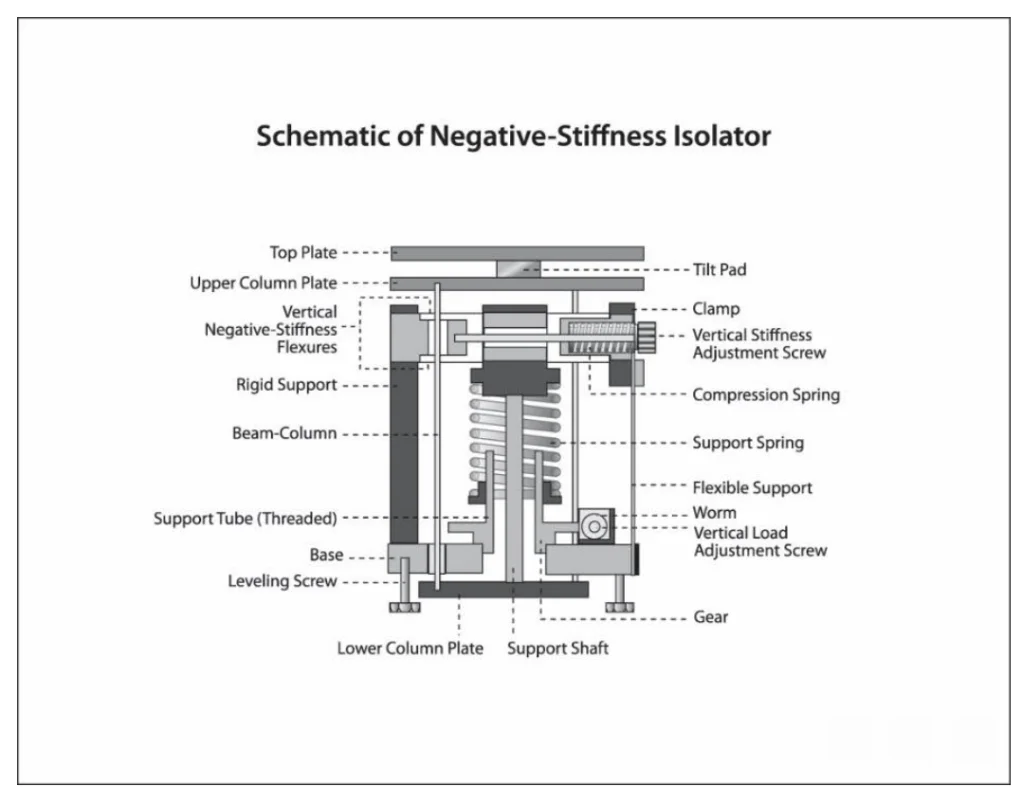

Quantum computers offer a fundamentally more powerful way of computing, thanks to quantum mechanics. Compared to a traditional or digital computer, quantum computers can solve certain types of problems more efficiently. However, qubits—the basic processing unit in a quantum computer—are fragile; any imperfection or source of noise in the system can cause errors that lead to incorrect solutions under a quantum computation.

Gaining control over a small-scale quantum computer with just one or two qubits is the first step in a larger, more ambitious endeavor. A larger quantum computer may be able to perform increasingly complex tasks, like machine learning or simulating complex systems to discover new pharmaceutical drugs. Engineering a larger quantum computer is challenging; the spectrum of error pathways becomes more complicated as qubits are added and the quantum system scales.

Characterizing a quantum system produces a profile of the noise and errors, indicating whether the processor is performing the tasks or calculations it is being asked to do. To understand the performance of any existing quantum computer for a complex problem or to scale up a quantum computer by reducing errors, it’s first necessary to characterize all significant errors affecting the system.

Wallman, Emerson and a group of researchers at the University of Innsbruck identified a method to assess all error rates affecting a quantum computer. They implemented this new technique for the ion trap quantum computer at the University of Innsbruck and found that error rates don’t increase as the size of that quantum computer scales up, a very promising result.

“Cycle benchmarking is the first method for reliably checking if you are on the right track for scaling up the overall design of your quantum computer,” said Wallman. “These results are significant because they provide a comprehensive way of characterizing errors across all quantum computing platforms.”

The paper, “Characterizing large-scale quantum computers via cycle benchmarking,” appears in Nature Communications.