Introduction to Qubits: Part 1

If you read anything about quantum computers, you are bound to come across the term ‘qubit’. Every big development in building quantum computers seems to revolve around adding more qubits, or making them more stable and less ‘noisy’. But what does this mean?

First we must remind ourselves of ‘bits’. A bit is the smallest unit of classical information and can be in one of two states (we call these states 0 and 1). We can make a bit from anything that has two states. Early computer scientists stored bits by punching holes in cards: a hole represented a 1 and the absence of a hole represented a 0. Newer technology such as compact discs (CDs) stored bits using tiny pits in the metal surface of the disc, where a change in the surface represented a 1 and a constant surface represented a 0.
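To make the CD description concrete, here is a minimal Python sketch of reading bits back from a track. It assumes a simplified model in which the surface is sampled once per bit cell as either a pit or a land (the flat area between pits). A change between neighbouring cells decodes as 1 and no change decodes as 0. Real CDs add further layers of encoding on top of this (eight-to-fourteen modulation and error-correcting codes), which the sketch ignores. The function name `decode_surface` is purely illustrative.

```python
def decode_surface(samples):
    """Simplified CD-style decoding (illustrative only).

    Each pair of neighbouring cells yields one bit:
    a change in the surface (pit <-> land) is a 1, a constant surface is a 0.
    """
    bits = []
    for previous, current in zip(samples, samples[1:]):
        bits.append(1 if current != previous else 0)
    return bits


track = ["land", "land", "pit", "pit", "pit", "land", "land"]
print(decode_surface(track))  # [0, 1, 0, 0, 1, 0]
```

Note that only the changes carry information here, so seven surface samples produce six bits.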

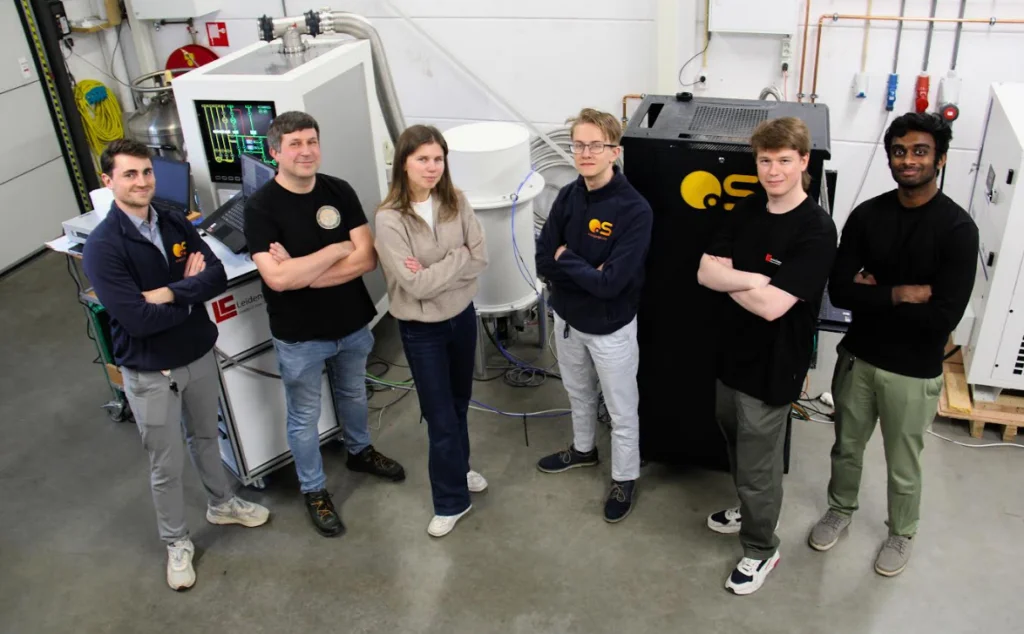

Quantum mechanics is a more accurate model of the world that emerged in the early 20th century. One of the many results of this new model was that the most basic unit of information was not the bit but instead the quantum bit, or qubit. More interestingly, it turned out that this new unit of information could be useful for computations and communications, and since then there has been an effort to create a physical qubit.

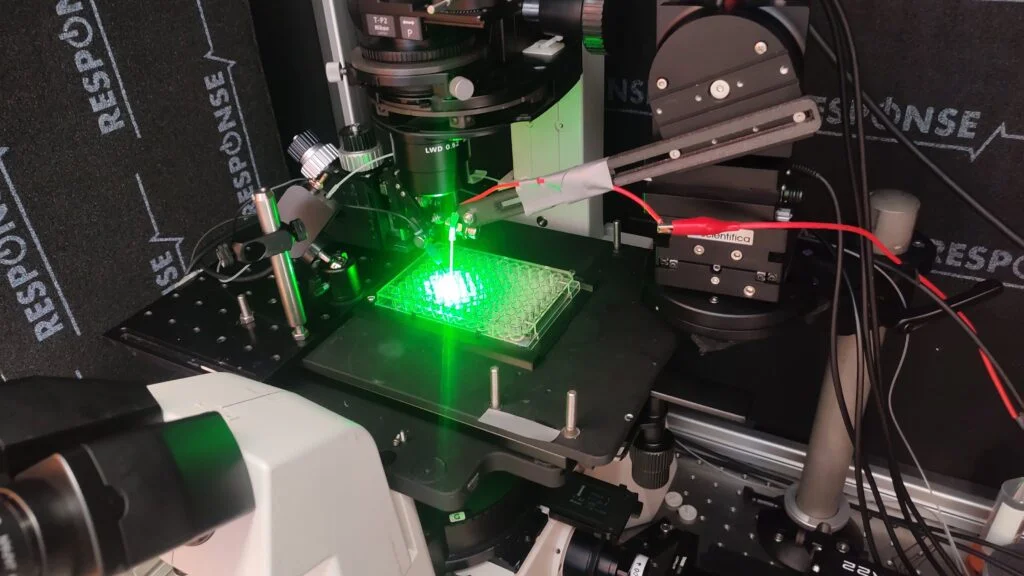

As with bits, the qubit is an abstract idea that isn’t tied to a specific object, so we must find a suitable physical system to store it. Unfortunately, qubits are much harder to implement than classical bits: while we can carry around billions of bits on a hard drive in a rucksack, it’s difficult to sustain even a handful of qubits under laboratory conditions. Because qubits are so fragile and prone to errors, many of the qubits in a quantum computer must be used for error correction.

Physical vs Logical Qubits

When discussing quantum computers with error correction, we talk about physical and logical qubits. Physical qubits are, well, the physical qubits in our computer (the number of qubits on the box), whereas logical qubits are groups of physical qubits that we use as a single qubit in our computation. To illustrate this, let’s consider a quantum computer with 100 qubits. Say this computer is prone to noise; to remedy this, we can use multiple qubits to form a single, more stable qubit. We might decide that we need 10 physical qubits to form one acceptable logical qubit. In this case we would say our quantum computer has 100 physical qubits, which we use as 10 logical qubits.
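Why does grouping noisy units give a more stable one? A rough intuition comes from the classical *repetition code*, sketched below in Python. This is an analogy, not how quantum error correction actually works: a qubit cannot simply be copied (the no-cloning theorem), and quantum codes such as the surface code must also handle errors that have no classical counterpart. Here each “physical” bit is flipped with a hypothetical probability `p`, a group of `n` copies is decoded by majority vote, and we estimate how often the group as a whole decodes wrongly. Odd group sizes are used so a vote can never tie; all function names and parameters are illustrative.

```python
import random


def noisy_copy(bit, p, rng):
    """Model one noisy physical unit: flip the stored bit with probability p."""
    return bit ^ 1 if rng.random() < p else bit


def majority_vote(bits):
    """Decode a group of copies by taking the most common value."""
    return 1 if sum(bits) > len(bits) // 2 else 0


def logical_error_rate(n, p, trials=50_000, seed=0):
    """Estimate how often a group of n noisy copies of 0 decodes to the wrong value."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(trials):
        copies = [noisy_copy(0, p, rng) for _ in range(n)]
        errors += majority_vote(copies) != 0
    return errors / trials


# With p = 0.1, larger groups should fail noticeably less often than a single unit.
for n in (1, 3, 5, 9):
    print(n, logical_error_rate(n, p=0.1))
```

The price of the extra stability is overhead: every “logical” bit here costs `n` physical ones, which mirrors the 10-to-1 trade-off in the example above.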

When designing quantum algorithms, we normally imagine perfect, stable qubits that do exactly as we expect; in practice, these are our logical qubits. Say we want to simulate a system of two-level quantum particles, using one qubit per particle. On our example computer, the largest number of particles we could simulate would be 10, even though we have 100 physical qubits at our disposal.
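The arithmetic behind that limit fits in a few lines of Python. This is a minimal sketch assuming a fixed overhead of physical qubits per logical qubit (10 in our example) and one logical qubit per two-level particle; both function names are hypothetical.

```python
def logical_qubits(physical, physical_per_logical):
    """Whole logical qubits we can build from the available physical qubits."""
    return physical // physical_per_logical


def max_two_level_particles(physical, physical_per_logical, qubits_per_particle=1):
    """Largest simulation size, assuming each particle needs qubits_per_particle logical qubits."""
    return logical_qubits(physical, physical_per_logical) // qubits_per_particle


print(logical_qubits(100, 10))           # 10
print(max_two_level_particles(100, 10))  # 10
```

Integer division is used because a leftover handful of physical qubits too small to form another logical qubit is of no use to the algorithm.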

Distinguishing between physical and logical qubits is important. There are many estimates of how many qubits we will need to perform certain calculations, but some of these estimates count logical qubits and others count physical qubits. For example, to break RSA cryptography we would need thousands of logical qubits, but millions of physical qubits. This can lead to confusion: we currently have 100-qubit machines, but these are physical qubits, and the machines are not yet able to do any useful computations.

If you’re not already sick of the word ‘qubit’, in the next section we will cover different implementations of qubits, their advantages and their disadvantages.