As 2024 wraps up, it’s impossible not to marvel at the sheer scope of quantum discoveries we’ve witnessed this year. From developments in quantum chemistry to incremental movement toward ever-elusive fault-tolerant computing, the field has pushed boundaries in ways that were science fiction not long ago.

Pulling together a list of the top research stories is no trivial task—the quantum universe does not go gently into a single post. Many deserving moments are not listed here, but that’s the nature of gift-giving: what fits under the tree is unfortunately bound by classical constraints.

It’s important to note that this list is not exhaustive and leans toward application-based research rather than hardware developments (or else Microsoft’s logical qubits and Google’s Willow would certainly have made the roundup). It reflects the stories that resonated most: a curated collection of quantum highlights, with a little nod to the community for helping shape the narrative through your clicks, shares, and conversations.

And now for the always-anticipated unboxing:

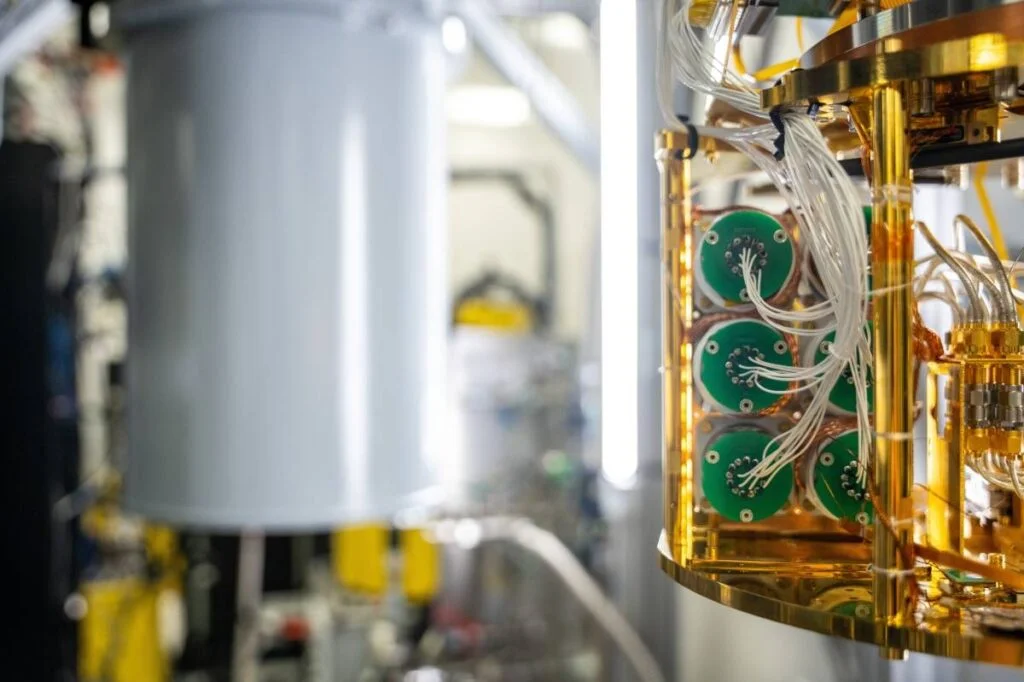

Microsoft Integrates HPC, Quantum Computing, and AI for Chemical Reactions Study

Microsoft integrated HPC, quantum computing, and AI on the Azure Quantum Elements platform to study catalytic reactions, exploring applications of quantum simulations in quantum chemistry.

- What Happened: Researchers conducted over one million density functional theory (DFT) calculations to map chemical reaction networks, identifying more than 3,000 unique molecular configurations. Quantum simulations using logical qubits and error-correction techniques refined results where classical methods encountered limitations.

- Key Findings: Encoded quantum computations achieved chemical accuracy (0.15 milli-Hartree error), surpassing the performance of unencoded methods.

- Significance: The study illustrates the potential of logical qubits in improving the reliability of quantum calculations, a necessary step toward scaling quantum chemistry applications. Future efforts will focus on enhancing error correction methods and improving algorithmic scalability.
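
To put the 0.15 milli-Hartree figure in context, the short sketch below converts it to kcal/mol and compares it with the conventional chemical-accuracy threshold of 1 kcal/mol. The conversion factor is the standard one; the function names are illustrative and are not taken from the Microsoft study.

```python
# 1 Hartree is approximately 627.5095 kcal/mol (standard conversion factor).
HARTREE_TO_KCAL_PER_MOL = 627.5095

# "Chemical accuracy" is conventionally taken to mean an error of 1 kcal/mol or less.
CHEMICAL_ACCURACY_KCAL_PER_MOL = 1.0


def millihartree_to_kcal_per_mol(error_mha: float) -> float:
    """Convert an energy error from milli-Hartree to kcal/mol."""
    return error_mha * 1e-3 * HARTREE_TO_KCAL_PER_MOL


def within_chemical_accuracy(error_mha: float) -> bool:
    """Return True if the error is at or below the 1 kcal/mol threshold."""
    return millihartree_to_kcal_per_mol(error_mha) <= CHEMICAL_ACCURACY_KCAL_PER_MOL


reported_error_mha = 0.15  # figure quoted in the article
reported_error_kcal = millihartree_to_kcal_per_mol(reported_error_mha)

print(f"{reported_error_mha} mHa = {reported_error_kcal:.3f} kcal/mol")
print("within chemical accuracy:", within_chemical_accuracy(reported_error_mha))
```

In other words, the reported error sits roughly an order of magnitude below the usual threshold.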

Quantinuum Unveils First Contribution Toward Responsible AI — Uniting Power of Its Quantum Processors With Experimental Work on Integrating Classical, Quantum Computing

Quantinuum implemented a scalable Quantum Natural Language Processing (QNLP) model, QDisCoCirc, utilizing quantum computing to address text-based tasks such as question answering. This work explores the integration of quantum computing and AI, with an emphasis on interpretability and scalability.

- What Happened: Researchers developed QDisCoCirc using compositional generalization, a technique inspired by category theory, to process text into smaller, interpretable components. The approach addressed challenges like the “barren plateau” problem, which complicates the scaling of quantum models, and demonstrated the model’s ability to generalize across different tasks.

- Key Findings: The study showed that quantum circuits provided advantages over classical models, particularly in their ability to generalize beyond simple tasks. QDisCoCirc allowed researchers to examine quantum decision-making processes, which has applications in sensitive fields such as healthcare and finance.

- Significance: This research demonstrates the potential for quantum AI systems to enhance interpretability and efficiency in NLP tasks. It provides a foundation for advancing quantum and AI technologies, with future directions including scaling to more complex linguistic tasks and further development of quantum hardware.

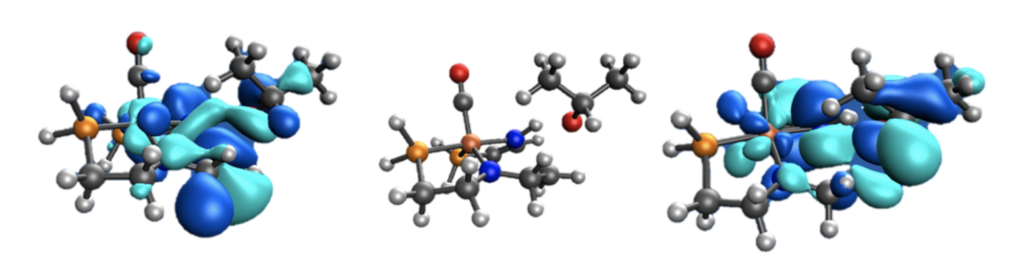

Pasqal and Qubit Pharmaceuticals Use Neutral Atom QPUs to Predict Water Molecule Behavior in Drug Discovery

Researchers from Pasqal, Qubit Pharmaceuticals, and Sorbonne Université developed a quantum-enhanced method using neutral atom quantum processing units to predict solvent configurations, an important step in drug discovery. The approach integrates analog quantum computing with a hybrid quantum-classical algorithm.

- What Happened: The team implemented a quantum algorithm based on quantum adiabatic evolution and the Ising model to predict water molecule placements in protein cavities. Using neutral atom QPUs, they tested the method on real-world protein models, including MUP-I, achieving high-accuracy solvent predictions.

- Key Findings: The results closely aligned with experimental data, outperforming classical approaches in accuracy. A hybrid algorithm using Bayesian optimization mitigated noise and errors, improving the reliability of quantum simulations.

- Significance: The research highlights the potential of quantum computing to address complex challenges in solvent structure prediction. By combining quantum and classical techniques, this method advances molecular modeling and drug design, overcoming current hardware limitations.
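
For readers wondering what an Ising-style formulation of water placement might look like, here is a toy sketch. Everything in it is an assumption made for illustration: the four candidate sites, their energies, the clash penalty, and the choice of an occupancy (0/1) encoding, which maps onto Ising spins via n = (1 + s) / 2. It also finds the lowest-energy placement by classical brute force, standing in for the adiabatic evolution the team ran on neutral atom hardware.

```python
from itertools import product
from typing import Dict, Tuple

# Hypothetical energy gained by placing a water molecule at each candidate site
# (more negative = more favorable). These numbers are made up for illustration.
site_energy = [-1.0, -0.8, -1.2, -0.9]

# Hypothetical penalty for occupying two sites that are too close together.
clash_penalty: Dict[Tuple[int, int], float] = {
    (0, 1): 2.0,
    (1, 2): 2.0,
    (2, 3): 2.0,
}


def energy(occupancy: Tuple[int, ...]) -> float:
    """Ising-type cost: single-site terms plus pairwise clash terms."""
    total = sum(e * n for e, n in zip(site_energy, occupancy))
    total += sum(
        penalty * occupancy[i] * occupancy[j]
        for (i, j), penalty in clash_penalty.items()
    )
    return total


# Classical brute force over all 2^4 placements. An adiabatic protocol would
# instead steer the hardware toward this same lowest-energy configuration.
best = min(product((0, 1), repeat=len(site_energy)), key=energy)
best_energy = energy(best)

print("best placement:", best)
print("energy:", round(best_energy, 3))
```

Brute force only works for a handful of sites, since the number of configurations doubles with each one added, which is the scaling problem that motivates looking at quantum hardware in the first place.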

Quantum Algorithm Outperforms Current Method of Identifying Healthy Livers For Transplant

Terra Quantum’s hybrid quantum neural network (HQNN) achieved 97% accuracy in identifying healthy livers for transplantation, leveraging federated learning to maintain patient privacy and comply with data protection regulations.

- What Happened: Researchers developed a hybrid model integrating quantum and classical computing layers to analyze liver images. The HQNN, using just 5 qubits, classified organs as suitable or unsuitable for transplant, outperforming traditional algorithms and medical experts while reducing false positives.

- Key Findings: Federated learning enabled collaborative model training across multiple hospitals without sharing sensitive data. The model sustained high performance even with limited data contributions from individual hospitals, supporting compliance with privacy regulations such as the EU AI Act.

- Significance: The research highlights the potential of quantum computing in healthcare, improving transplant outcomes by enhancing diagnostic accuracy and reducing complications. It also establishes a framework for secure, collaborative medical AI systems.
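
The federated learning idea can be sketched in a few lines. The example below is a generic federated-averaging loop on a tiny, purely classical linear model, not Terra Quantum's HQNN; the hospital names, data points, learning rate, and round count are all hypothetical. The point it illustrates is that only model weights leave each site, never the underlying records.

```python
from typing import Dict, List, Tuple

Sample = Tuple[float, float]  # (feature, label)
Weights = List[float]         # [slope, intercept]


def local_update(weights: Weights, data: List[Sample],
                 lr: float = 0.1, epochs: int = 20) -> Weights:
    """Run gradient descent on one site's private data; return new weights."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * ((w * x + b) - y) * x for x, y in data) / n
        grad_b = sum(2 * ((w * x + b) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(client_weights: List[Weights],
                      client_sizes: List[int]) -> Weights:
    """Average client weights, weighted by how much data each client holds."""
    total = sum(client_sizes)
    return [
        sum(wts[i] * n for wts, n in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    ]


# Hypothetical private datasets, all drawn from the same rule y = 2x + 1.
hospitals: Dict[str, List[Sample]] = {
    "hospital_a": [(0.0, 1.0), (0.2, 1.4)],
    "hospital_b": [(0.5, 2.0), (0.7, 2.4), (1.0, 3.0)],
    "hospital_c": [(0.3, 1.6)],  # a site with very little data
}

global_weights: Weights = [0.0, 0.0]
for _ in range(200):  # communication rounds
    updates = [local_update(global_weights, d) for d in hospitals.values()]
    sizes = [len(d) for d in hospitals.values()]
    global_weights = federated_average(updates, sizes)

print("learned slope and intercept:", [round(v, 3) for v in global_weights])
```

Note that the third site holds a single sample and could not fit the model on its own, yet it still contributes to, and benefits from, the shared result.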

Riverlane, MIT Researchers Work on Quantum Algorithms For Fusion Energy

Riverlane and MIT’s Plasma Science and Fusion Center (PSFC) are collaborating to develop quantum algorithms for simulating plasma dynamics, contributing to efforts to achieve fusion energy, one of the National Academy of Engineering’s Grand Challenges for the 21st century.

- What Happened: Supported by the U.S. Department of Energy’s Fusion Energy Sciences program, the project focuses on solving differential equations like the Vlasov equation, which describes plasma behavior. The research also explores advancements in quantum error correction to ensure stable qubit operation.

- Key Findings: Quantum methods demonstrated potential for simulating high-temperature, high-density matter, with applications extending beyond fusion energy to fields such as fluid dynamics in aerospace and oceanography.

- Significance: Efficient quantum simulations of plasma dynamics could address key challenges in fusion energy development, offering a pathway to a clean energy source while expanding quantum computing’s role across multiple industries.

BQP Demonstrates Possibility of Large-Scale Fluid Dynamic Simulations with Quantum Computing

BQP advanced computational fluid dynamics by using its BQPhy® platform to simulate jet engines with a hybrid quantum-classical solver, requiring only 30 logical qubits compared to the 19.2 million compute cores needed by classical methods.

- What Happened: The research utilized BQPhy’s Hybrid Quantum Classical Finite Method (HQCFM) to solve non-linear, time-dependent equations. Experiments scaled from 4 to 11 qubits, achieving high accuracy and preventing error propagation in time-loop simulations, ensuring consistent results for transient problems.

- Key Findings: The quantum approach outperformed classical methods in scalability and efficiency, offering potential for full-aircraft simulations, a capability classical systems are not expected to achieve until 2080.

- Significance: This development could make large-scale simulations more accessible, transforming aerospace design and maintenance with cost-effective, precise tools. Beyond aerospace, BQP’s technology shows promise in applications like gas dynamics, traffic flow, and flood modeling.

Hosting the Universe in a Quantum Computer: Scientists Simulate Cosmological Particle Creation

Researchers from the Autonomous University of Madrid used IBM quantum hardware to simulate particle creation in an expanding universe, offering insights into Quantum Field Theory in Curved Spacetime (QFTCS).

- What Happened: The team developed a quantum circuit to model a scalar quantum field in an expanding universe, illustrating how spacetime stretching can generate particles. Despite noise challenges inherent to NISQ-era devices, error mitigation techniques like zero-noise extrapolation allowed for reliable estimations.

- Key Findings: The simulations aligned with theoretical predictions, demonstrating the potential of quantum computers to study complex phenomena such as the early universe and black hole radiation. The study used IBM’s 127-qubit Eagle processor for large-scale quantum circuits.

- Significance: This research showcases the potential of quantum computing to explore the interface between quantum mechanics and general relativity, enabling the study of cosmological processes that are otherwise difficult or impossible to replicate in laboratory settings.
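
Zero-noise extrapolation, the error mitigation technique mentioned above, is simple enough to sketch. The idea is to run the same circuit at deliberately amplified noise levels and extrapolate the measured values back to the zero-noise limit. The version below uses Richardson extrapolation on simulated data; the exponential noise model, the decay rate, and the scale factors are assumptions chosen for illustration, not details of the Madrid team's experiment.

```python
import math
from typing import List, Sequence


def richardson_extrapolate(scale_factors: Sequence[float],
                           values: Sequence[float]) -> float:
    """Estimate the zero-noise value from measurements at amplified noise.

    Fits the unique polynomial through the points (scale, value) and
    evaluates it at scale = 0, using Lagrange interpolation weights.
    """
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scale_factors, values)):
        weight = 1.0
        for j, s_j in enumerate(scale_factors):
            if j != i:
                weight *= (0.0 - s_j) / (s_i - s_j)
        estimate += weight * v_i
    return estimate


def simulated_noisy_expectation(scale: float, ideal: float = 1.0,
                                decay: float = 0.1) -> float:
    """Hypothetical noise model: the signal decays exponentially with noise."""
    return ideal * math.exp(-decay * scale)


scale_factors: List[float] = [1.0, 2.0, 3.0]  # 1.0 = the hardware's native noise
measured = [simulated_noisy_expectation(s) for s in scale_factors]

raw_value = measured[0]
mitigated_value = richardson_extrapolate(scale_factors, measured)

print("unmitigated:", round(raw_value, 4))
print("mitigated:  ", round(mitigated_value, 4))
```

On real hardware the measured values also carry statistical shot noise, which extrapolation tends to amplify, so the choice of scale factors and fit order is a trade-off rather than a free lunch.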

Taming Chaos: IBM Quantum And Algorithmiq-led Scientists Report Today’s Quantum Computers Can Simulate Many-Body Quantum Chaos

Researchers from Algorithmiq and IBM Quantum used a quantum computer with up to 91 qubits to simulate many-body quantum chaos, a phenomenon characterized by unpredictable behaviors in systems with many interacting particles.

- What Happened: The team used IBM’s “ibm_strasbourg” processor with superconducting transmon qubits and dual-unitary circuits to model chaotic quantum behavior. They applied tensor-network error mitigation, a proprietary technique by Algorithmiq, to reduce noise and enhance result reliability. Classical simulations were used to validate the quantum findings for smaller system sizes.

- Key Findings: The study demonstrated that current quantum computers can address complex phenomena like quantum chaos, offering insights relevant to fields such as weather prediction, fluid dynamics, and material science.

- Significance: This research illustrates how today’s quantum systems, despite limitations, can provide valuable insights into complex physical systems. It underscores the potential of quantum computing in advancing areas such as material science, cryptography, and hardware design.

Matrix Re-Reloaded: Quantum Subroutine Improves Efficiency of Matrix Multiplication for AI and Machine Learning Applications

Researchers from the University of Pisa developed a quantum subroutine that directly encodes matrix multiplication results into a quantum state, providing a more efficient method for processing large datasets in machine learning and scientific computing.

- What Happened: The subroutine performs matrix multiplication within a quantum circuit, avoiding intermediate measurements and eliminating data retrieval bottlenecks. By leveraging quantum parallelism, the method has the potential to significantly outperform classical techniques in terms of efficiency.

- Key Findings: The subroutine supports applications such as variance calculations for detecting outliers in machine learning and eigenvalue computations for dimensionality reduction and stability analysis in scientific computing. These tasks are essential for training neural networks, solving complex equations, and modeling physical systems.

- Significance: This development presents a scalable approach to addressing challenges in high-dimensional data spaces, advancing capabilities in AI, data science, and scientific simulations through quantum-enhanced computational methods.

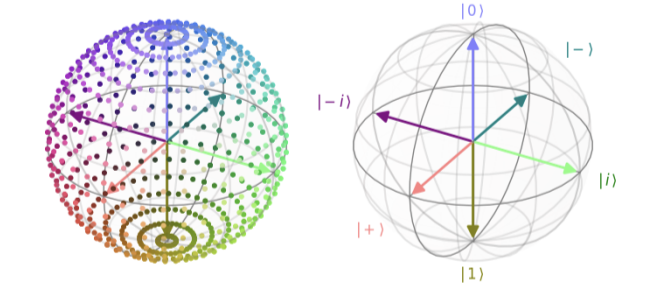

Research Team Achieves First-Ever Topological Qubit, A Step Along The Path Toward Fault-Tolerant Quantum Computing

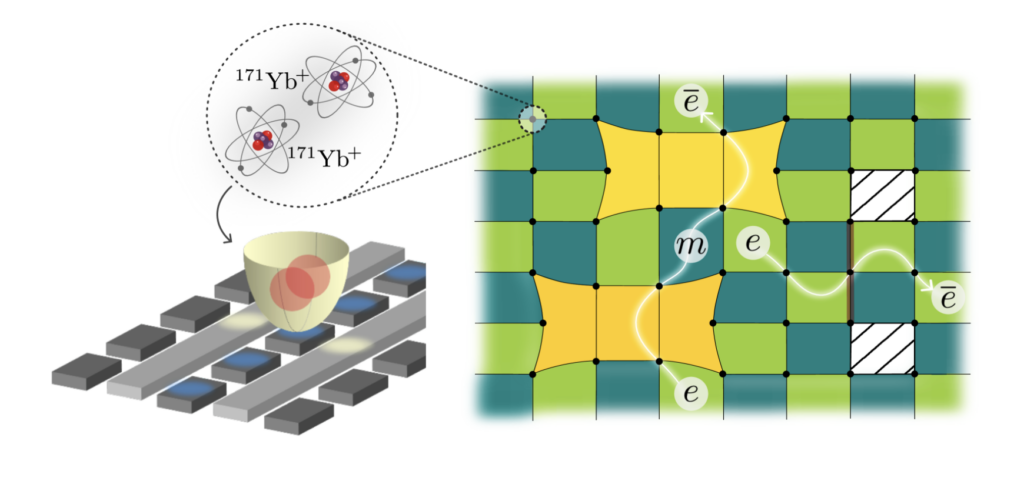

Researchers from Quantinuum, Harvard, and Caltech successfully demonstrated the first experimental topological qubit using a Z₃ toric code, leveraging non-Abelian anyons to encode quantum information with intrinsic error resistance.

- What Happened: The team utilized Quantinuum’s H2 ion-trap quantum processor, featuring 56 fully connected qubits and high gate fidelity (99.8%), to construct a lattice of qutrits representing the Z₃ toric code. By manipulating non-Abelian anyons, they showcased error correction capabilities unique to topological systems.

- Key Findings: The experiments validated theoretical predictions from 2015, confirming the viability of non-Abelian systems for encoding quantum information. The work also provided evidence of computational utility by demonstrating defect fusion and interactions.

- Significance: This research addresses key challenges in quantum error correction, reducing resource demands and advancing scalable quantum computing. It lays the foundation for universal topological quantum systems, with potential applications in cryptography, materials science, and AI. Future objectives include system scaling, achieving universal gate sets, and refining error correction techniques.