Insider Brief

- Researchers at Princeton University, led by Jeff Thompson, have developed a method that identifies errors in quantum computers, making them easier to correct and potentially accelerating progress towards large-scale quantum computing capabilities.

- The team’s approach makes qubits that have suffered errors emit light, allowing these errors to be detected in real time without destroying the qubits. This is a significant shift from traditional methods, where checking for errors often introduced additional errors.

- The technique, known as “erasure conversion,” can be applied across various quantum computer architectures and is already being adopted by other groups, including Amazon Web Services and researchers at Yale.

UNIVERSITY RESEARCH NEWS — Princeton, New Jersey/October 11, 2023 — Researchers have developed a method that can reveal the location of errors in quantum computers, making them up to ten times easier to correct. This will significantly accelerate progress towards large-scale quantum computers capable of tackling the world’s most challenging computational problems, the researchers said.

Led by Princeton University’s Jeff Thompson, the team demonstrated a way to identify when errors occur in quantum computers more easily than ever before. This is a new direction for research into quantum computing hardware, which more often seeks to simply lower the probability of an error occurring in the first place.

A paper detailing the new approach was published in Nature on Oct. 11. Thompson’s collaborators include Shruti Puri at Yale University and Guido Pupillo at the University of Strasbourg.

Physicists have been inventing new qubits — the core component of quantum computers — for nearly three decades, and steadily improving those qubits to be less fragile and less prone to error. But some errors are inevitable no matter how good qubits get. The central obstacle to the future development of quantum computers is being able to correct for these errors. However, to correct an error, you first have to figure out if an error occurred, and where it is in the data. And typically, the process of checking for errors introduces more errors, which have to be found again, and so on.

Quantum computers’ ability to manage those inevitable errors has remained more or less stagnant over that long period, according to Thompson, associate professor of electrical and computer engineering. He realized there was an opportunity in biasing certain kinds of errors.

“Not all errors are created equal,” he said.

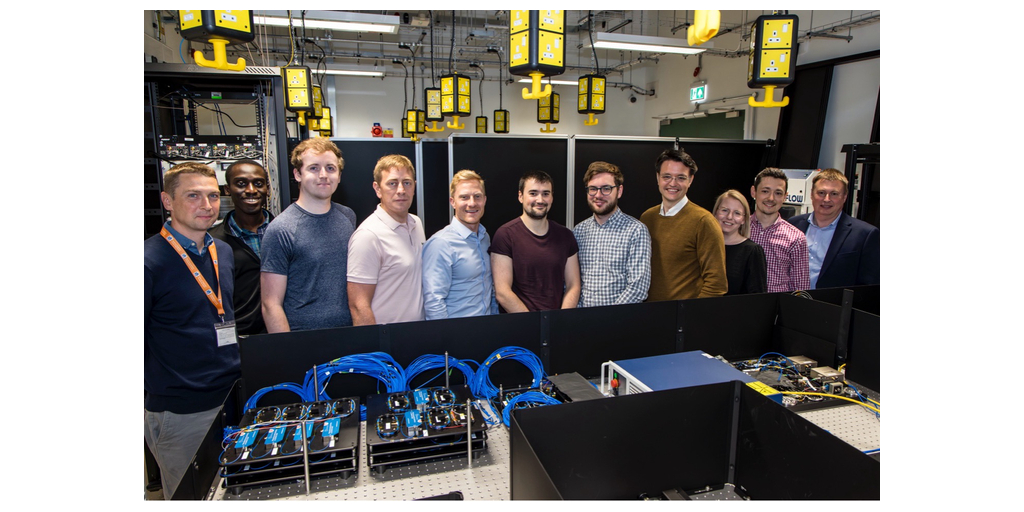

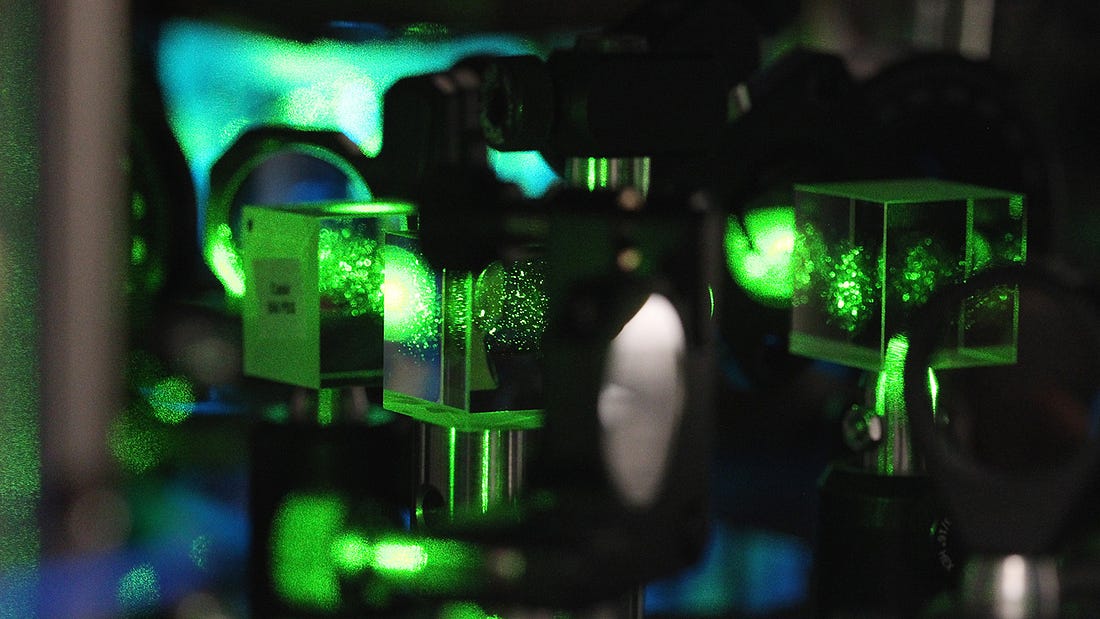

At the heart of this quantum computer, lasers control the states of individual atoms, manipulating information based on the extremely subtle physics of those atoms’ energy levels. Photo by Frank Wojciechowski

Thompson’s lab works on a type of quantum computer based on neutral atoms. Inside the ultra-high vacuum chamber that defines the computer, qubits are stored in the spin of individual ytterbium atoms held in place by focused laser beams called optical tweezers. In this work, a team led by graduate student Shuo Ma used an array of 10 qubits to characterize the probability of errors occurring while first manipulating each qubit in isolation, then manipulating pairs of qubits together.

They found error rates near the state of the art for a system of this kind: 0.1 percent per operation for single qubits and 2 percent per operation for pairs of qubits.
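To put those per-operation figures in perspective, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that every operation fails independently with the quoted probability; real error processes are more complicated, and the function name below is hypothetical.

```python
def success_probability(error_rate: float, num_ops: int) -> float:
    """Chance that num_ops operations all succeed, assuming (for illustration)
    independent errors with the same per-operation probability."""
    return (1.0 - error_rate) ** num_ops

# Per-operation error rates quoted in the article.
ONE_QUBIT_ERROR = 0.001  # 0.1 percent
TWO_QUBIT_ERROR = 0.02   # 2 percent

for n in (10, 100):
    print(f"{n} two-qubit ops: {success_probability(TWO_QUBIT_ERROR, n):.3f}")
print(f"100 one-qubit ops: {success_probability(ONE_QUBIT_ERROR, 100):.3f}")
```

Under these simplifying assumptions, about 82 percent of 10-operation two-qubit sequences and only about 13 percent of 100-operation sequences finish without a single error, which illustrates why long computations cannot rely on low error rates alone.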

A team led by Jeff Thompson, associate professor of electrical and computer engineering, pioneered an approach to more efficient error correction in quantum computers. (Illustration by Gabriele Meilikhov/Muza Productions)

However, the main result of the study is not just the low error rates but a new way to characterize errors without destroying the qubits. By storing the qubit in a different set of energy levels within the atom than previous work had used, the researchers were able to monitor the qubits during the computation and detect errors in real time. This measurement causes qubits with errors to emit a flash of light, while qubits without errors remain dark and are unaffected.

This process converts the errors into a type of error known as an erasure error. Erasure errors have been studied in the context of qubits made from photons, and have long been known to be simpler to correct than errors in unknown locations, Thompson said. However, this work is the first time the erasure-error model has been applied to matter-based qubits. It follows a theoretical proposal last year from Thompson, Puri and Shimon Kolkowitz of the University of California-Berkeley.
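Why are erasures easier to handle? A classical analogy helps. The sketch below uses a simple repetition code (one bit copied onto five), not the quantum error-correcting codes a real machine would use, and all function names are hypothetical. When the decoder does not know where errors are, majority voting tolerates at most two flipped bits out of five; when the faulty positions are flagged, as the flash of light does in the experiment, the decoder can discard them and recover the bit from any single unflagged copy.

```python
from typing import List, Optional, Set

def encode(bit: int, n: int = 5) -> List[int]:
    """Repetition code: copy one logical bit onto n physical bits."""
    return [bit] * n

def flip(codeword: List[int], positions: Set[int]) -> List[int]:
    """Unknown-location errors: bits change, but the decoder is not told which."""
    return [1 - b if i in positions else b for i, b in enumerate(codeword)]

def erase(codeword: List[int], positions: Set[int]) -> List[Optional[int]]:
    """Erasure errors: faulty positions are flagged as None, like a qubit that lights up."""
    return [None if i in positions else b for i, b in enumerate(codeword)]

def decode_majority(received: List[int]) -> int:
    """Majority vote; correct only while fewer than half the bits are flipped."""
    return 1 if 2 * sum(received) > len(received) else 0

def decode_erasures(received: List[Optional[int]]) -> Optional[int]:
    """Ignore flagged bits; any unflagged bit still holds the original value."""
    survivors = [b for b in received if b is not None]
    return survivors[0] if survivors else None

logical = 0
codeword = encode(logical)
for k in range(len(codeword) + 1):
    hit = set(range(k))  # for a repetition code, which positions are hit does not matter
    ok_flip = decode_majority(flip(codeword, hit)) == logical
    ok_erase = decode_erasures(erase(codeword, hit)) == logical
    print(f"{k} faults: majority vote ok={ok_flip}, erasure decoding ok={ok_erase}")
```

With five copies, the decoder survives two unflagged flips but four flagged erasures. More generally, a code of distance d can correct up to d − 1 erasures but only ⌊(d − 1)/2⌋ errors at unknown locations.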

In the demonstration, approximately 56 percent of one-qubit errors and 33 percent of two-qubit errors were detectable before the end of the experiment. Crucially, the act of checking for errors doesn’t cause significantly more errors: The researchers showed that checking increased the rate of errors by less than 0.001 percent. According to Thompson, the fraction of errors detected can be improved with additional engineering.
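Combining these detection fractions with the error rates reported earlier gives a rough sense of how many errors still go unflagged. This is a back-of-the-envelope sketch only: it assumes the detected fractions apply directly to the quoted per-operation error rates, and the names below are hypothetical.

```python
from typing import Tuple

# Figures quoted in the article: per-operation error rate and the fraction
# of those errors that were detectable (converted to erasures) in the demonstration.
GATES = {
    "one-qubit": {"error_rate": 0.001, "detected": 0.56},
    "two-qubit": {"error_rate": 0.02, "detected": 0.33},
}

def split_errors(error_rate: float, detected: float) -> Tuple[float, float]:
    """Split a per-operation error rate into (flagged, unflagged) parts."""
    flagged = error_rate * detected
    return flagged, error_rate - flagged

for name, g in GATES.items():
    flagged, hidden = split_errors(g["error_rate"], g["detected"])
    print(f"{name}: {flagged:.3%} flagged as erasures, {hidden:.3%} undetected")
```

Under those assumptions, roughly 0.044 percent per one-qubit operation and about 1.34 percent per two-qubit operation would remain as errors at unknown locations.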

The researchers believe that, with the new approach, close to 98 percent of all errors should be detectable with optimized protocols. This could reduce the computational costs of implementing error correction by an order of magnitude or more.

Other groups have already started to adapt this new error detection architecture. Researchers at Amazon Web Services and a separate group at Yale have independently shown how this new paradigm can also improve systems using superconducting qubits.

“We need advances in many different areas to enable useful, large-scale quantum computing. One of the challenges of systems engineering is that these advances that you come up with don’t always add up constructively. They can pull you in different directions,” Thompson said. “What’s nice about erasure conversion is that it can be used in many different qubits and computer architectures, so it can be deployed flexibly in combination with other developments.”

Additional authors on the paper “High-fidelity gates with mid-circuit erasure conversion in a metastable neutral atom qubit” include Shuo Ma, Genyue Liu, Pai Peng, Bichen Zhang, and Alex P. Burgers, at Princeton; Sven Jandura at Strasbourg; and Jahan Claes at Yale. This work was supported in part by the Army Research Office, the Office of Naval Research, DARPA, the National Science Foundation and the Sloan Foundation.

Written by the Office of Engineering Communications, Princeton University

Featured image: Researchers led by Jeff Thompson at Princeton University have developed a technique to make it 10 times easier to correct errors in a quantum computer. Photos by Frank Wojciechowski
