Quantum computing has been hailed as the next big thing in computing, with the potential to revolutionize many fields, including cryptography, drug discovery, and optimization.

And over the last decade, we have seen great progress in increasing the number of qubits, the fundamental building blocks of quantum computers.

But quantum computers, despite their many promises, are not yet a mature technology. The error rates of single-qubit and two-qubit operations are too high for current devices to be useful. In essence, what we have today are engineering prototypes of quantum computers: useful only to the physicists and engineers who are learning how to build better ones.

Yet while qubit counts have grown, error rates have remained stuck at around 0.25% per operation over that same decade. Even in Google’s 2019 “quantum supremacy” result, 99.8% of the results were noise, and the experiment had to be run 30,000,000 times to gather enough statistics to support the claimed result. Because errors compound with every operation, at such error rates even 100 qubits are unusable: a circuit of any useful depth is very likely to be corrupted by at least one error.
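
A back-of-the-envelope way to see why errors compound: if each operation fails independently with probability p, a circuit of N operations runs error-free with probability (1 - p)^N. The sketch below is a simplified model, not a description of any real device. It assumes independent, uniform errors and a hypothetical 100-qubit circuit with 10 layers of operations; real hardware has correlated, qubit-specific noise, so the numbers are illustrative only.

```python
import math


def circuit_success_probability(error_rate: float, num_operations: int) -> float:
    """Probability that a circuit runs with zero errors, assuming each
    operation fails independently with probability error_rate."""
    return (1.0 - error_rate) ** num_operations


def max_operations(error_rate: float, min_success: float) -> int:
    """Largest number of operations that keeps the zero-error probability
    at or above min_success (same independence assumption)."""
    return math.floor(math.log(min_success) / math.log(1.0 - error_rate))


if __name__ == "__main__":
    p = 0.0025        # 0.25% error per operation, the figure quoted in the text
    ops = 100 * 10    # hypothetical circuit: 100 qubits x 10 layers of operations
    print(f"P(no error), {ops} ops at {p:.2%}: "
          f"{circuit_success_probability(p, ops):.3f}")
    print(f"Max ops for >= 50% success at {p:.2%}: {max_operations(p, 0.5)}")

    p_better = p / 10  # one order of magnitude lower error rate
    print(f"P(no error), {ops} ops at {p_better:.3%}: "
          f"{circuit_success_probability(p_better, ops):.3f}")
```

Under these assumptions, a 1,000-operation circuit at 0.25% error per operation succeeds only about 8% of the time, versus roughly 78% at 0.025%.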

To build fault-tolerant quantum computers that can perform useful calculations, error rates must be reduced by at least an order of magnitude. This is a significant challenge, because errors arise from many sources, including qubit noise, control electronics, and the environment.

One approach to reducing error rates is to use error-correcting codes, which detect and correct errors as they arise. However, these codes only work when the physical error rate is already below a threshold, and they need many physical qubits per protected (logical) qubit, an overhead that shrinks as physical error rates fall. Reducing error rates therefore remains a critical challenge.

Ultimately, making quantum computers scale means building error-corrected, fault-tolerant machines, which will require hundreds of thousands of qubits. But before we can get there, we must first reduce error rates by at least an order of magnitude.
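
To illustrate why a lower physical error rate makes error correction so much cheaper, here is a rough estimate using a commonly quoted heuristic for the surface code. The article does not name a specific code, so the choice of code, the scaling law p_L ≈ A·(p/p_th)^((d+1)/2), the prefactor A ≈ 0.1, the threshold p_th ≈ 1%, and the target logical error rate of 10^-12 per round are all assumptions made for this sketch. A distance-d rotated surface code uses 2d² - 1 physical qubits per logical qubit.

```python
# Rough surface-code overhead estimate. The scaling law and the constants
# below are textbook-style assumptions, not a model of any particular device.
PREFACTOR = 0.1   # assumed prefactor A
THRESHOLD = 0.01  # assumed threshold error rate p_th (about 1%)


def logical_error_rate(p: float, d: int) -> float:
    """Heuristic logical error rate per round: A * (p / p_th) ** ((d + 1) / 2)."""
    return PREFACTOR * (p / THRESHOLD) ** ((d + 1) / 2)


def required_distance(p: float, target: float) -> int:
    """Smallest odd code distance d (from 3 up) with logical_error_rate(p, d) <= target."""
    if p >= THRESHOLD:
        raise ValueError("error correction only helps below threshold")
    d = 3
    while logical_error_rate(p, d) > target:
        d += 2  # surface-code distances are conventionally odd
    return d


def physical_qubits_per_logical(d: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


if __name__ == "__main__":
    target = 1e-12  # hypothetical target logical error rate per round
    for p in (0.0025, 0.00025):  # today's ~0.25% and a tenfold improvement
        d = required_distance(p, target)
        print(f"p = {p:.3%}: distance {d}, "
              f"{physical_qubits_per_logical(d)} physical qubits per logical qubit")
```

Under these assumptions, a tenfold reduction in physical error rate shrinks the required code distance from 37 to 13, and the cost per logical qubit from about 2,700 physical qubits to about 340.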

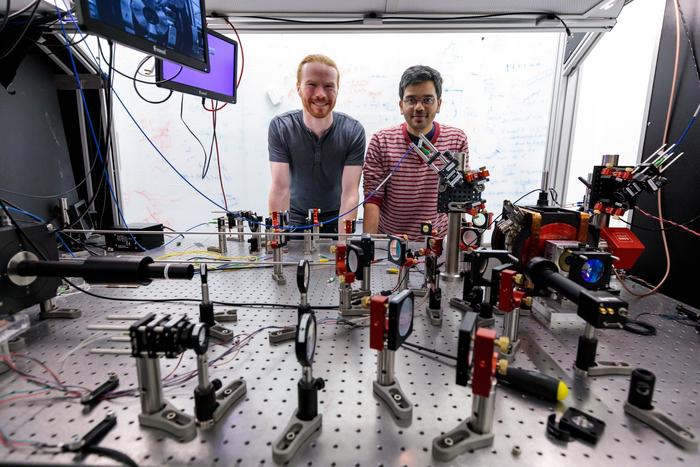

The reason we have not been able to reduce error rates is that qubits are like snowflakes: each one is slightly different, or sits in a slightly different environment, or is driven through slightly different control pathways. To get low error rates, we must measure dozens of parameters per qubit per operation and characterize in detail the many kinds of noise that affect qubit operations. Only then can we understand the root causes of the remaining errors.

Unfortunately, doing this by hand requires a very large number of highly qualified people. That is too much work even for very large companies such as IBM and Google, let alone smaller startups or academic labs.

“The light bulb did not come from the continuous improvement of candles…” (Oren Harari)

The solution to this problem is a machine learning (ML) physicist, or ML Physicist. ML assistants will become pervasive in most fields of human endeavour over the next decade, and experimental physics is no exception. An ML Physicist can help us make rapid progress in reducing the error rates of quantum computers.

An ML Physicist is a narrow AI designed specifically to work alongside human physicists in development labs. By taking on the tedious work of measuring parameters and analyzing data, it frees human physicists to focus on higher-level tasks, such as addressing key issues in the next iteration of hardware. This speeds up each hardware iteration and, ultimately, the development of useful quantum computers.

An ML Physicist uses machine learning algorithms to identify patterns in the data that may not be immediately obvious to human physicists. By providing new insights and perspectives, it can help to guide the efforts of the human physicists towards the most important areas of development. This collaboration between human and AI can lead to breakthroughs that may not have been possible with either one alone.

Overall, the ML Physicist represents a powerful tool for accelerating the development of quantum computers. By automating certain tasks and providing new insights, it can help to optimize the efforts of human physicists and ultimately lead to the creation of more useful and practical quantum computing systems.

In conclusion, machine learning is a necessary tool to make quantum computers scale. We have made great progress increasing the number of qubits over the last decade, but error rates have not improved commensurately, because of the complexity of the devices in question. An ML Physicist could accelerate progress on this problem. And as ML technology continues to advance, it is likely to play an increasingly important role in the development of useful quantum computers.

Shai Machnes is CEO and co-founder of Qruise
