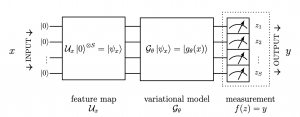

A team of researchers from IBM Quantum, the University of KwaZulu-Natal and ETH Zurich reported that well-designed quantum neural networks offer an advantage over classical neural networks through a higher effective dimension and faster training. The researchers verified these results on real quantum hardware using IBM’s 27-qubit Montreal device.

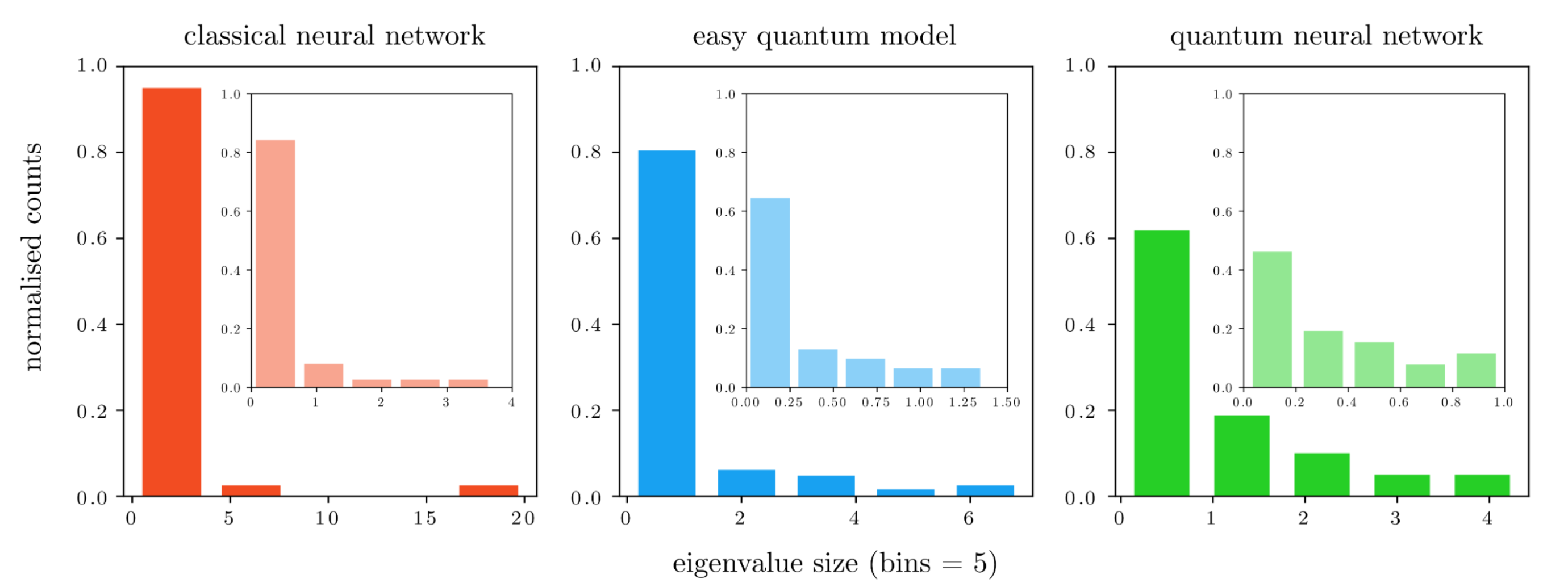

In the study, the researchers found that a class of quantum models has a significantly higher ability to express different functions than classical feedforward neural networks. Neural networks are a type of machine learning model that has been likened to the way neurons in the human brain work.

According to Amira Abbas, Quantum Research Advocate with IBM Quantum and first author of the study, the work addresses both the potential and the current limitations of quantum computers. Specifically, fault-tolerant quantum computers, which avoid the errors that noisy near-term quantum devices are susceptible to, offer the promise of dramatically improving machine learning through speed-ups in computation or improved model scalability. The benefits of quantum machine learning on current devices, however, were not so clear until these recent results.

“The effective dimension intuitively measures the power or size of a statistical model. Our work shows a few interesting things. One, quantum neural networks can be more expressive or powerful than classical neural networks, since they have a bigger effective dimension. And two, these quantum neural networks also have desirable optimization qualities. Whilst these results are promising, they open many avenues for further research. In particular, figuring out why these specific quantum models offer these favorable properties is what we plan to do next,” said Abbas.

The researchers also found that the quantum models they designed are capable of training to lower loss values, which they validated using actual quantum hardware. In addition, they offered evidence of a generalization bound using the effective dimension as a capacity measure for neural networks.

Abbas and her colleagues reported their findings on arXiv, a preprint server that allows scientists to gain feedback on their work before formal peer review.

David Sutter, Christa Zoufal, Aurelien Lucchi, Alessio Figalli and Stefan Woerner also contributed to the study.
